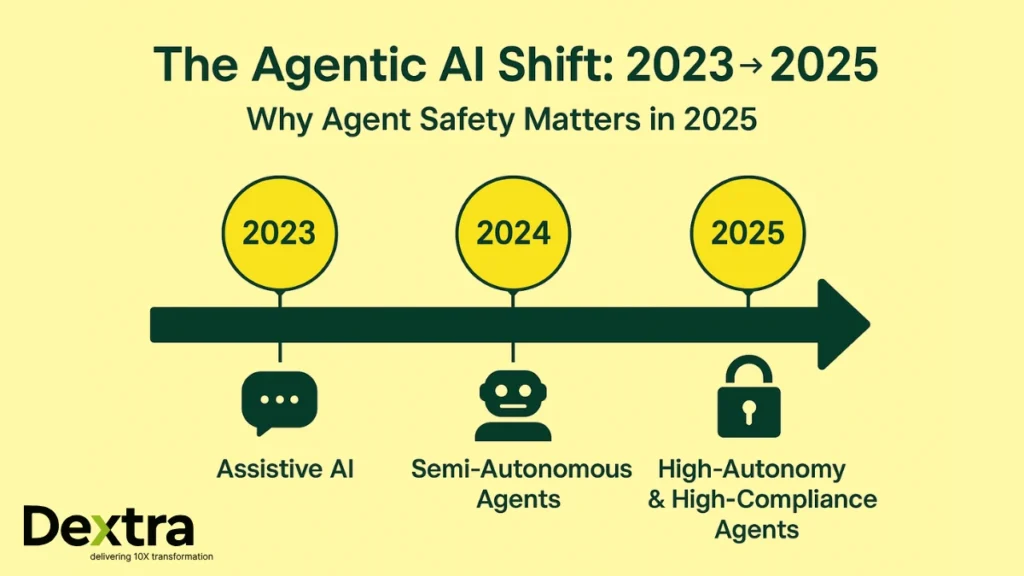

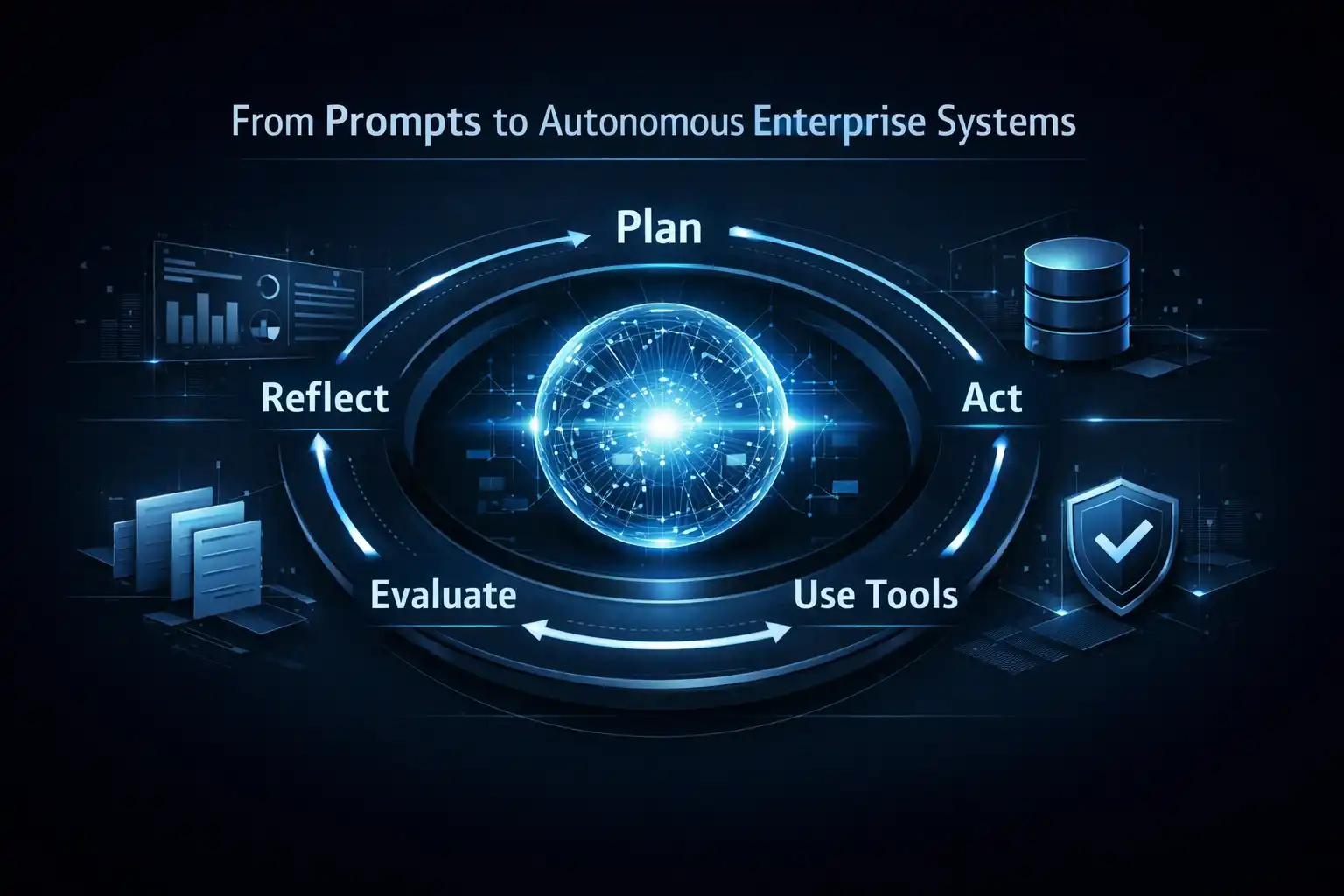

Compared with just 18 months ago, the artificial intelligence landscape in 2025 feels quite different. We’ve moved beyond “AI as a tool” to AI as an actor. Everything changes when your systems are no longer simple autocomplete engines but decision-makers, coders, financial analysts, workflow triggers, or interfaces to sensitive systems.

This is the year enterprises finally realized they aren’t just deploying models anymore — they’re deploying autonomous decision-making entities. And within heavily Regulated AI Systems, that shift raises stakes dramatically. It’s not simply about model accuracy or general compliance; it’s about real-time operational risk, organizational liability, multi-layered safety governance, and the orchestration of complex agent ecosystems that behave with more dynamism than any traditional software ever has.

That’s exactly why the AI Safety Playbook — or more specifically, the Agentic AI Safety Playbook — has become the dominant internal topic for CTOs, CIOs, CISOs, and AI governance leaders in 2025. If 2023 was the year of experimentation and 2024 was the year of scaling foundation models, then 2025 is unmistakably the year enterprises realized they need hardened AI Guardrails, rigorous AI Permissions and Governance, and ironclad AI Auditability baked into every agent they deploy.

And regulators agree:

- The EU AI Act formally classifies high-risk use cases.

- The US NIST AI Risk Management Framework is being adopted widely.

- Singapore’s AI Verify is gaining traction globally.

- Sector-specific laws in finance, healthcare, and energy are tightening oversight.

Taken together, these developments mean Responsible AI deployment is no longer “nice-to-have enterprise hygiene.” It’s now required infrastructure: foundational, structural, and non-negotiable.

So the thesis for this playbook is simple:

To safely scale agentic systems in 2025 and beyond, enterprises need a layered, operationalized framework built on three pillars:

- Guardrails prevent harmful or out-of-scope behavior

- Permissions define the exact boundaries of agent authority

- Auditability ensures traceability, accountability, and transparency

These pillars together form the backbone of the AI Safety Playbook, specifically tailored for the agentic era, where models don’t just respond, but think, decide, act, and interact.

What Exactly Is the Agent Safety Playbook?

If you’ve been following enterprise AI evolution, you’ve probably noticed that every major organization is scrambling to publish something that looks like an internal safety standard. McKinsey has its take. The UK published a national AI Safety Playbook. Several cybersecurity vendors have published guidelines for agent-centric architectures. Even the cloud hyperscalers now offer “safety layers” and “governance kits.”

But all of these frameworks lack something critical: a unified, enterprise-ready model specifically for Agentic AI Safety.

That gap is exactly where the AI Safety Playbook — the full agent-specific version — positions itself. Think of it less as a policy document and more like an operational, hands-on engineering manual for safely building and running Regulated AI Systems that behave dynamically across networks, tools, APIs, and workflows.

At its philosophical core, the playbook is built around a principle we call Safety by Design.

Most organizations treat safety like seatbelts: something you attach after the vehicle is built. But with agents, that mindset is lethal. You don’t bolt on safety after production; you architect it into the entire system from day zero — into the datasets, the model tuning, the runtime environment, the agent orchestration layer, and the continuous monitoring fabric.

To do that, the playbook rests on three essential pillars:

1. Guardrails

These define what the agent must not do. They stop harmful, unethical, or non-compliant actions — from toxic outputs to unauthorized API execution to data misuse.

2. Permissions

These define what the agent is allowed to do. Think of them as a dynamic, machine-enforceable roles-and-responsibilities contract.

3. Auditability

This captures exactly what the agent did, why it did it, and how it arrived at its decisions. Auditability is the source of truth that supports investigations, compliance, and AI accountability and trust.

Together, these create a holistic, end-to-end strategy for Enterprise AI governance — not static policy PDFs, but living systems embedded into agent architecture.

Layered Guardrails: The Foundation of AI Safety (and Your First Line of Defense)

Let’s talk about guardrails, because here’s where most enterprises underestimate complexity. When leaders hear “guardrails,” they think of toxicity filters or content classifiers — basically the same stuff they used for chatbots. But once you step into agentic territory, where models are reasoning about instructions, calling external tools, making autonomous choices, and interacting with sensitive backend systems, guardrails stop being optional safety enhancements. They become the load-bearing beams of your architecture.

And here’s the nuance: you don’t deploy AI Guardrails as one monolithic system. You layer them. Like defense-in-depth for cybersecurity, guardrails need to operate across multiple abstraction levels.

Technical Guardrails

These live closest to the metal. They include:

- Redaction pipelines removing PII before LLM ingestion

- Sandboxed execution environments preventing “runaway” agent behavior

- Real-time content safety filters for toxicity, bias, and disallowed content

- Tool access mediation ensuring only safe, validated function calls

This layer ensures the agent’s raw I/O is sanitized, safe, and compliant — a fundamental requirement for Regulated AI Systems.
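As a rough illustration of the first and last items above, here is a minimal sketch of a redaction step and a tool allowlist. The regex patterns, the placeholder labels, and the tool names are assumptions made up for this example; production PII detection needs far broader coverage than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only; real PII detection covers many more types.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical allowlist of tools this agent may call.
ALLOWED_TOOLS = {"search_kb", "summarize"}


def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder before the text reaches the model."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def mediate_tool_call(tool_name: str) -> bool:
    """Permit a tool call only when the tool is on the allowlist."""
    return tool_name in ALLOWED_TOOLS


print(redact("Contact jane.doe@example.com, SSN 123-45-6789"))
print(mediate_tool_call("wire_transfer"))
```

The point of the sketch is placement: both checks sit between the agent and the outside world, so they apply regardless of what the model itself decides.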

Policy Guardrails

This is where compliance and AI ethics meet engineering. Policy-driven guardrails include:

- Data usage boundaries

- Model-specific limitations tied to risk categories

- External regulatory constraints

- Organization-specific ethical frameworks

In other words: the rules the business cares about, encoded in a machine-readable way.

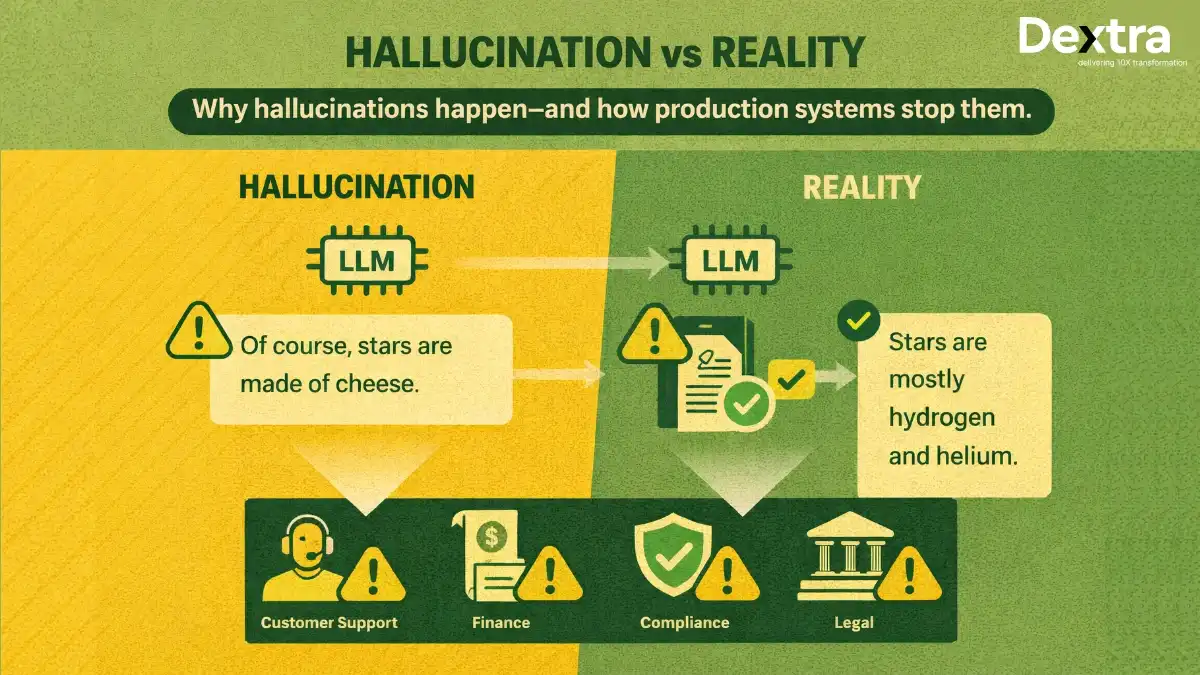

Behavioral Guardrails

These are the most sophisticated. They influence how the agent reasons. Behavioral guardrails include:

- Reinforcement learning–based reward models

- Grounding techniques that constrain hallucinations

- Instruction-level guardrails baked into the system prompt

- Conversational safety scaffolds

Together, these shape the agent’s reasoning patterns and prevent “creative” deviations that could cause harm.

Why Layering Matters

Because you can’t rely on a single layer to do everything. Toxic output filters won’t stop unauthorized financial transactions. Data redaction won’t enforce medical ethics. RL-based safety shaping won’t prevent an agent from calling a forbidden API endpoint.

Only multi-tiered guardrails create a robust architecture.
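One way to picture the layering is a chain of independent checks, each able to block an action for its own reasons. The sketch below is an assumption-heavy toy: the action fields (`tool`, `data_class`, `grounded`) and the three check functions are hypothetical names, not part of any specific product.

```python
from typing import Callable, Dict, List, Optional

Action = Dict[str, object]
# A check returns a block reason, or None when the action passes that layer.
Check = Callable[[Action], Optional[str]]


def technical_check(action: Action) -> Optional[str]:
    # Hypothetical allowlist of callable tools.
    if action.get("tool") not in {"search_kb", "send_report"}:
        return "technical: tool not allowlisted"
    return None


def policy_check(action: Action) -> Optional[str]:
    if action.get("data_class") == "restricted":
        return "policy: restricted data class"
    return None


def behavioral_check(action: Action) -> Optional[str]:
    if not action.get("grounded", False):
        return "behavioral: output not grounded in sources"
    return None


LAYERS: List[Check] = [technical_check, policy_check, behavioral_check]


def evaluate(action: Action) -> List[str]:
    """Run every layer and collect all block reasons; an empty list means allowed."""
    reasons = []
    for check in LAYERS:
        reason = check(action)
        if reason is not None:
            reasons.append(reason)
    return reasons


print(evaluate({"tool": "wire_transfer", "data_class": "public", "grounded": True}))
```

Running every layer rather than stopping at the first failure is a design choice here: it gives the audit trail the full list of reasons an action was blocked.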

Companies like Dextralabs design guardrail systems that operate across these layers — an approach that merges custom model alignment, runtime enforcement, and context-aware instruction monitoring. This is what modern Responsible AI deployment actually looks like.

Permissions and Access Control: The Real Safety Engine Behind Autonomous Behavior

Here’s where things get interesting. Once your agents can call tools (manipulating spreadsheets, pulling medical records, sending emails, querying financial systems, or running code), guardrails alone won’t protect you, because the most dangerous threats don’t come from toxic language. They come from actions.

In 2025, AI Permissions and Governance have become the true safety control center. And if guardrails are the fences, permissions are the keys.

Imagine you hire a new employee. You don’t simply give them the whole company intranet and hope for the best — you restrict their access based on their role, responsibilities, and trust level.

Agents work the same way.

Permission Models in AI Agents

You typically see three flavors in enterprise settings:

- RBAC (Role-Based Access Control) — simplest, assigns permissions based on agent “roles.”

- ABAC (Attribute-Based Access Control) — more dynamic, adapts based on agent state, user identity, and data sensitivity.

- IBAC (Intent-Based Access Control) — the cutting edge, where the system evaluates the intent of the agent’s action, not just the action itself.
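To make the difference between the first two models concrete, here is a minimal sketch. The role names, permission strings, and context attributes are hypothetical. IBAC is left out on purpose, since judging intent would typically need a classifier or a second model rather than a lookup.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (RBAC).
ROLE_PERMISSIONS = {
    "claims_analyst": {"read_claims"},
    "support_agent": {"read_faq"},
}


def rbac_allows(role: str, permission: str) -> bool:
    """RBAC: the decision depends only on the agent's role."""
    return permission in ROLE_PERMISSIONS.get(role, set())


@dataclass(frozen=True)
class Context:
    role: str
    data_sensitivity: str  # "low" or "high" (assumed labels)
    user_verified: bool


def abac_allows(ctx: Context, permission: str) -> bool:
    """ABAC: start from the role, then apply attribute-based conditions."""
    if not rbac_allows(ctx.role, permission):
        return False
    # Assumed rule: high-sensitivity data requires a verified requesting user.
    if ctx.data_sensitivity == "high" and not ctx.user_verified:
        return False
    return True


print(rbac_allows("claims_analyst", "read_claims"))
print(abac_allows(Context("claims_analyst", "high", False), "read_claims"))
```

The same role gets a different answer in the second call because ABAC looks at the request's attributes, not just who is asking.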

A modern agent might attempt to call an API like: “Retrieve last year’s PII-rich claims dataset.”

A permissions layer should evaluate:

- Is this action allowed for this agent?

- Does the agent’s role authorize access to this dataset?

- Is the data sensitive?

- Is the intent aligned with its assigned task?

- Is there a safer way to achieve the same goal?

Every “yes” or “no” becomes part of AI Auditability, which we’ll get to shortly.
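The questions above can be sketched as an ordered series of checks that returns a decision plus the reason for it. Everything named here (the role, dataset names, task name, and the lookup tables) is a hypothetical stand-in; a real system would pull these from its identity and data-catalog services.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    agent_role: str
    action: str
    dataset: str
    task: str


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str
    suggestion: Optional[str] = None


# Hypothetical lookup tables for illustration.
ROLE_ACTIONS = {"claims_agent": {"read_dataset"}}
ROLE_DATASETS = {"claims_agent": {"claims_raw", "claims_masked"}}
TASK_DATASETS = {"fraud_review": {"claims_raw", "claims_masked"}}
SENSITIVE = {"claims_raw"}
SAFER_ALTERNATIVE = {"claims_raw": "claims_masked"}


def evaluate(req: Request) -> Decision:
    """Walk the checks in order and stop at the first failure."""
    if req.action not in ROLE_ACTIONS.get(req.agent_role, set()):
        return Decision(False, "action not allowed for this agent")
    if req.dataset not in ROLE_DATASETS.get(req.agent_role, set()):
        return Decision(False, "role not authorized for dataset")
    if req.dataset not in TASK_DATASETS.get(req.task, set()):
        return Decision(False, "request not aligned with assigned task")
    if req.dataset in SENSITIVE and req.dataset in SAFER_ALTERNATIVE:
        return Decision(False, "sensitive data; safer alternative available",
                        SAFER_ALTERNATIVE[req.dataset])
    return Decision(True, "all checks passed")


print(evaluate(Request("claims_agent", "read_dataset", "claims_raw", "fraud_review")))
```

Returning the reason alongside the verdict is what lets each decision flow straight into the audit log.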

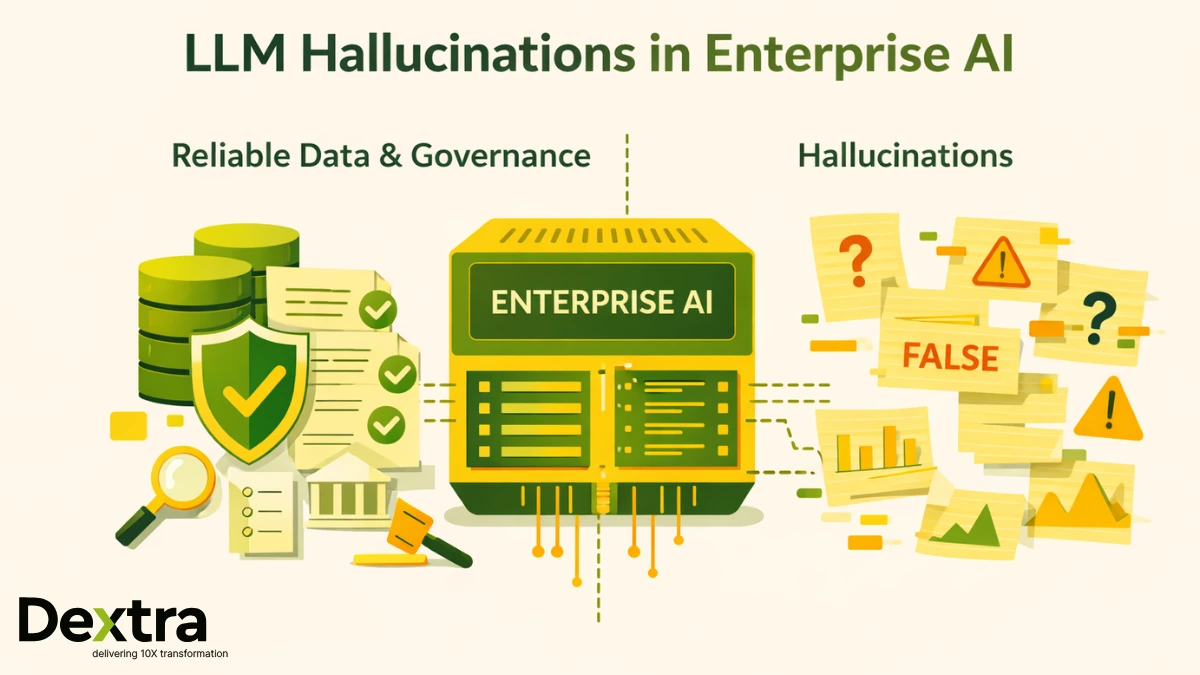

Why Permissions Are Crucial in Regulated AI Systems

Because enterprise agents operate inside extremely sensitive domains:

- Finance (credit decisions, AML workflows, trading models)

- Healthcare (diagnostics, clinical decision support, patient records)

- Energy (grid operations, safety systems)

- Government (citizen services, public safety analytics)

These are high-risk environments. A single unauthorized action can trigger regulatory violations, financial losses, or operational failures.

This is exactly where Secure AI orchestration matters. Without mediated function calls, intent verification, scoped tool access, and real-time oversight, autonomous agents essentially become unmonitored employees with superhuman efficiency — and that’s a recipe for disaster.

Auditability: The Backbone of Compliance, Trust, and Post-Incident Forensics

Now let’s talk about the least glamorous but most critical pillar: AI Auditability. If the first two pillars define what the agent can and cannot do, auditability answers the most important question:

“What exactly did the agent do, and why?”

In Regulated AI Systems, this question isn’t optional — it’s existential. And without detailed audit logs, enterprises lose the ability to perform:

- Compliance audits

- Bias investigations

- Model drift assessments

- Post-incident forensics

- Legal defense in the event of scrutiny

Auditability is what transforms your AI system from a black box into a transparent process.

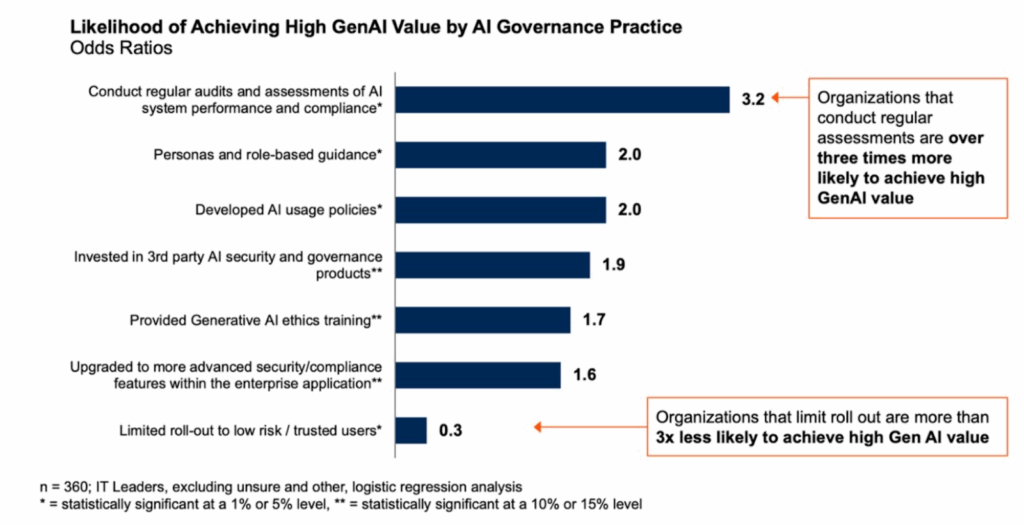

And auditability isn’t just a compliance requirement — it’s a value driver. A 2025 Gartner study found that organizations performing regular AI system assessments are over 3× more likely to achieve high GenAI business value. In other words, audit logs, monitoring, and explainability don’t slow innovation; they multiply its return. This makes the auditability pillar not only essential for regulators, but essential for ROI.

What Should Be Logged?

A mature audit system captures:

- All prompts and responses

- Reasoning chains (when allowed)

- Tool calls and external API interactions

- Decision trees

- Data access events

- Risk-level classifications

- Permission decisions (allow/deny)

- Error states

- Safety events (guardrail triggers)

In other words: everything. This is the only way to maintain true AI compliance and observability — a non-negotiable requirement for every major industry.
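A common way to capture these events is one structured record per event, written as a JSON line. The sketch below assumes a hypothetical `AuditEvent` schema; the field names and event types are illustrative, not a standard.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditEvent:
    agent_id: str
    # Assumed event types: "prompt", "tool_call", "data_access",
    # "permission_decision", "guardrail_trigger", "error".
    event_type: str
    detail: dict
    risk_level: str = "low"
    timestamp: str = field(default_factory=_utc_now)


def to_json_line(event: AuditEvent) -> str:
    """Serialize one event as a single JSON line suitable for an append-only log."""
    return json.dumps(asdict(event), sort_keys=True)


event = AuditEvent(
    agent_id="claims-agent-01",
    event_type="permission_decision",
    detail={"action": "read_dataset", "decision": "deny"},
    risk_level="high",
)
print(to_json_line(event))
```

Keeping every event type in one schema makes it easier to query prompts, tool calls, and permission decisions together when reconstructing what happened.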

Why It Matters

Without a full audit trail, you simply cannot demonstrate:

- AI accountability and trust

- Compliance with the EU AI Act

- Proper use of sensitive data

- Explainability for credit decisions, healthcare recommendations, etc.

- That decision-making was fair, consistent, and lawful.

In fact, regulators increasingly expect enterprises to implement Explainable AI (XAI) at a systemic level, not as an afterthought.

Tools for Enterprise AI governance now include observability dashboards, log analytics, drift detection, and safety monitoring — all essential components of the modern AI infrastructure stack.

Human Oversight and Risk Management in Regulated AI: Restoring Control Without Killing Velocity

Now that we’ve talked about AI Guardrails, AI Permissions and Governance, and AI Auditability, it’s time to address the human dimension — the part enterprises often neglect until regulators, auditors, or an internal AI incident forces the conversation.

Here’s the uncomfortable truth:

No matter how advanced your agents are, no matter how clever your orchestration frameworks or how beautifully aligned your models, you will always need Human-in-the-loop oversight for critical decisions within Regulated AI Systems.

Not because humans are better decision-makers. Often they’re not.

But because humans are accountable in ways models cannot be, and regulators mandate human control precisely to protect organizations from delegating too much authority to autonomous systems.

In reality, many organizations fail to implement this oversight simply because they are moving too fast. A 2025 Pacific AI governance survey found that 45% of enterprises cite speed-to-market pressure as the single biggest barrier to proper AI governance. When velocity outweighs safety, critical guardrails and permission checks get skipped, creating the exact conditions where agentic systems become operational risks instead of operational accelerators.

Why Oversight Still Matters (Even in 2025)

You’ve probably heard bold declarations that “agents will replace entire teams.” Maybe someday. But not today — not in enterprises dealing with money flows, clinical pathways, energy grids, public infrastructure, patient safety, or citizen rights. Here, the risk envelope is simply too high.

Oversight isn’t about slowing the system down. It’s about calibration — knowing which agentic pathways require elevated scrutiny, and which can safely run autonomously.

Without a proper oversight framework, enterprises risk violating compliance requirements tied directly to:

- The EU AI Act’s high-risk system obligations

- The NIST AI Risk Management Framework

- HIPAA and healthcare safety rules

- Financial services audit and fairness regulations

- Energy and industrial safety protocols

And if internal AI governance teams can’t demonstrate proper controls, your system essentially fails every category of Responsible AI deployment.

A Modern Oversight Framework Has Three Layers

To keep this conversational, let’s walk through the human oversight stack as if you were designing it with your engineering and compliance teams.

Layer 1: Preventive Oversight (before an agent acts)

This is where humans set the rules. They define:

- Data sources agents can use

- Approved toolchains

- Safety thresholds

- Maximum risk categories

- Prohibition lists

- Output constraints

- Policy guardrails

Think of it as governance-as-configuration — the pre-flight settings for safe agent behavior.
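A minimal sketch of what governance-as-configuration might look like: the human-set rules live in an immutable config object, and a pre-flight function checks a proposed step against it. The `AgentPolicy` fields, the numeric risk scale, and the example values are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class AgentPolicy:
    allowed_data_sources: FrozenSet[str]
    approved_tools: FrozenSet[str]
    max_risk_level: int  # assumed scale: 1 (low) to 5 (critical)
    prohibited_actions: FrozenSet[str]


# Hypothetical policy a governance team might define before deployment.
EXAMPLE_POLICY = AgentPolicy(
    allowed_data_sources=frozenset({"kb", "crm"}),
    approved_tools=frozenset({"search_kb", "draft_email"}),
    max_risk_level=2,
    prohibited_actions=frozenset({"delete_record"}),
)


def preflight_violations(policy: AgentPolicy, tool: str,
                         data_source: str, risk_level: int) -> List[str]:
    """Return every rule the proposed step would break; empty means cleared."""
    violations = []
    if tool in policy.prohibited_actions:
        violations.append(f"prohibited action: {tool}")
    elif tool not in policy.approved_tools:
        violations.append(f"tool not approved: {tool}")
    if data_source not in policy.allowed_data_sources:
        violations.append(f"data source not allowed: {data_source}")
    if risk_level > policy.max_risk_level:
        violations.append(f"risk level {risk_level} exceeds max {policy.max_risk_level}")
    return violations


print(preflight_violations(EXAMPLE_POLICY, "delete_record", "hr_db", 4))
```

Because the dataclass is frozen, an agent cannot modify its own limits at runtime; changing them requires a new config from whoever owns the policy.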

Layer 2: Active Oversight (during the agent’s operation)

This is where humans supervise, intervene, or approve decisions in real time. Examples:

- Loan officers reviewing a credit agent’s classification before approval

- Nurses validating a clinical decision support agent’s recommendation

- Engineers verifying high-impact code changes proposed by an autonomous dev agent

- Analysts approving or rejecting sensitive API calls

Active oversight is not micromanagement — it’s risk triage. Low-risk flows run automatically. High-risk flows require human eyes.
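The triage idea can be sketched as a simple router: score the action, let low-risk flows run, and hold high-risk flows until a human approves. The threshold value, the risk score input, and the `approver` callback are hypothetical; in practice the approver would be a review queue or ticketing step, not an in-process function.

```python
from enum import Enum
from typing import Callable, Optional


class Route(Enum):
    AUTO = "auto"
    HUMAN_REVIEW = "human_review"


# Hypothetical cutoff; real thresholds would be set per use case by governance teams.
RISK_THRESHOLD = 0.7


def triage(risk_score: float) -> Route:
    """Route an action based on its risk score."""
    return Route.HUMAN_REVIEW if risk_score >= RISK_THRESHOLD else Route.AUTO


def execute(action: str, risk_score: float,
            approver: Optional[Callable[[str], bool]] = None) -> str:
    """Run low-risk actions directly; high-risk actions need explicit approval."""
    if triage(risk_score) is Route.HUMAN_REVIEW:
        # With no approver available, the safe default is to block.
        if approver is None or not approver(action):
            return "blocked"
    return "executed"


print(execute("update_faq", 0.2))
print(execute("approve_loan", 0.9))
print(execute("approve_loan", 0.9, approver=lambda a: True))
```

Note the default: when no human is available to review a high-risk action, the sketch blocks it rather than letting it through.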

This is the heart of Human-in-the-loop oversight, and regulators love it for a reason.

Layer 3: Retrospective Oversight (after an agent acts)

This is where audit logs, dashboards, and monitoring systems come into play. Retrospective oversight involves:

- Reviewing decision logs

- Identifying anomalies

- Investigating bias patterns

- Assessing model drift

- Running postmortems for safety incidents

- Using audit trails for compliance reports

This is also where AI compliance and observability becomes indispensable — because you cannot oversee what you cannot inspect.
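As one small example of retrospective review, the sketch below scans logged permission decisions and flags agents whose deny rate looks unusually high. The event shape (`agent_id`, `event_type`, `decision`) and the 50% threshold are assumptions; a real review would look at many more signals than this one.

```python
from collections import Counter
from typing import Dict, Iterable, List


def deny_rates(events: Iterable[dict]) -> Dict[str, float]:
    """Compute, per agent, the share of permission decisions that were denials."""
    totals: Counter = Counter()
    denies: Counter = Counter()
    for event in events:
        if event.get("event_type") != "permission_decision":
            continue
        totals[event["agent_id"]] += 1
        if event.get("decision") == "deny":
            denies[event["agent_id"]] += 1
    return {agent: denies[agent] / totals[agent] for agent in totals}


def flag_agents(events: Iterable[dict], threshold: float = 0.5) -> List[str]:
    """Return agents whose deny rate exceeds the (assumed) threshold, sorted by name."""
    return sorted(a for a, rate in deny_rates(events).items() if rate > threshold)


log = [
    {"agent_id": "a", "event_type": "permission_decision", "decision": "allow"},
    {"agent_id": "a", "event_type": "permission_decision", "decision": "deny"},
    {"agent_id": "a", "event_type": "permission_decision", "decision": "deny"},
    {"agent_id": "b", "event_type": "permission_decision", "decision": "allow"},
    {"agent_id": "b", "event_type": "tool_call"},
]
print(flag_agents(log))
```

An agent that keeps hitting permission denials is worth a look: it may be misconfigured, or it may be repeatedly attempting actions outside its scope.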

Together, these layers form the backbone of modern Enterprise AI governance.

Building Safe and Scalable Agentic Systems: The Dextralabs Approach

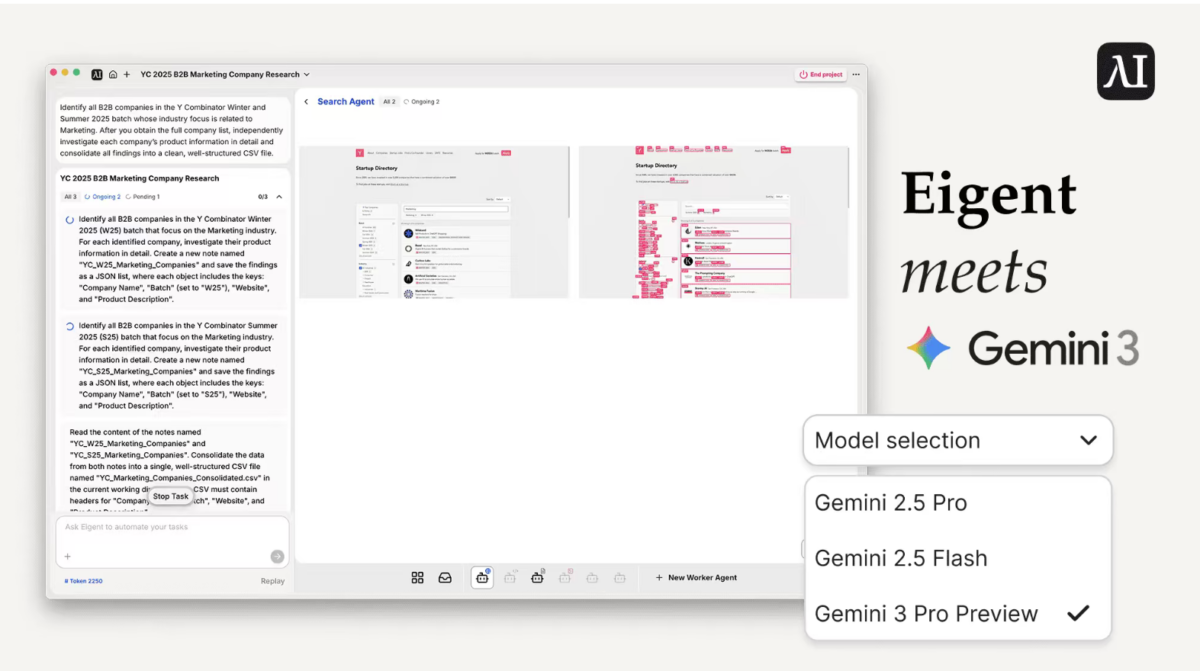

Let’s shift gears and talk about how Dextralabs approaches this challenge. Because building a safe agent isn’t complicated — building thousands of safe, scalable, interoperable agents is.

And that’s where enterprises run into trouble.

Most organizations build pilots. A sales agent here. A research agent there. Maybe a customer support assistant. But when they try to scale these agents across departments, data systems, security layers, compliance frameworks, logging infrastructures, and operational workflows, everything starts breaking.

Dextralabs built its platform to solve this exact scalability gap.

The Dextralabs Architecture

Here’s the conversational explanation we give enterprise teams when we design their safety stack:

“You don’t want a bunch of isolated agents scattered everywhere like SaaS tools from 2010.

You want a unified orchestration layer — with safety baked right into the substrate.”

That architecture includes:

1. Agentic Safety Orchestration Layer (ASOL)

Think of ASOL as the central nervous system. It manages:

- Multi-layer AI Guardrails

- Permission models

- Safety events

- Tool mediation

- Context routing

- Policy enforcement

- Safety reasoning

This is where we encode safety logic at the operational level — the part most enterprises forget to build.

2. AI Observability Dashboards

This is your real-time “cockpit” for agent behavior. Dashboards include:

- Drift detection

- Bias monitoring

- Risk scoring

- Action logs

- API call tracing

- Safety trigger analytics

- Compliance status indicators

Together, these enable complete AI compliance and observability.

3. Permission & Compliance APIs

These APIs are the bridge between your enterprise security infrastructure and your agentic environment. They allow the system to enforce:

- Context-aware permissions

- Conditional approvals

- Intent classification

- Attribute-based restrictions

- Compliance constraints

- Sensitive data masking pipelines

This is where AI Permissions and Governance becomes dynamic and machine-enforced.

The Outcome

Enterprises adopting this stack achieve:

- Safe autonomous execution

- Documented audit trails

- Automated compliance proofs

- Granular permissions

- Reduced incident risk

- Increased velocity

- Future-proof regulatory readiness

In other words, this is Secure AI orchestration done right: not bolted on, but engineered into the system.

Conclusion

The shift from predictive AI to agentic AI is the biggest leap since the creation of the modern cloud ecosystem. But it doesn’t come free. It asks enterprises to rethink safety, not as a defensive mechanism, but as an enabler of scale.

If you build safety well, agents become transformative.

If you build safety poorly, agents become a liability.

The Agent Safety Playbook provides the blueprint:

- Guardrails prevent harm

- Permissions control power

- Auditability ensures accountability

Together, these pillars form the foundation for Responsible AI governance, AI risk management, and safe enterprise deployment. The future of agentic AI won’t be measured by who builds the most powerful agents, but by who builds the most trustworthy ones.

Because ultimately, the future of enterprise AI isn’t just intelligent — it’s accountable.