The rapid growth of Generative AI (GenAI) technology is significantly impacting business operations and service delivery. In its early years, Generative AI was primarily showcased through impressive demonstrations and proof-of-concept systems. In 2025, however, organizations no longer need to decide whether AI will assist them; instead, they need to ensure their AI systems are reliable, secure, scalable, and compliant for deployment and day-to-day operations.

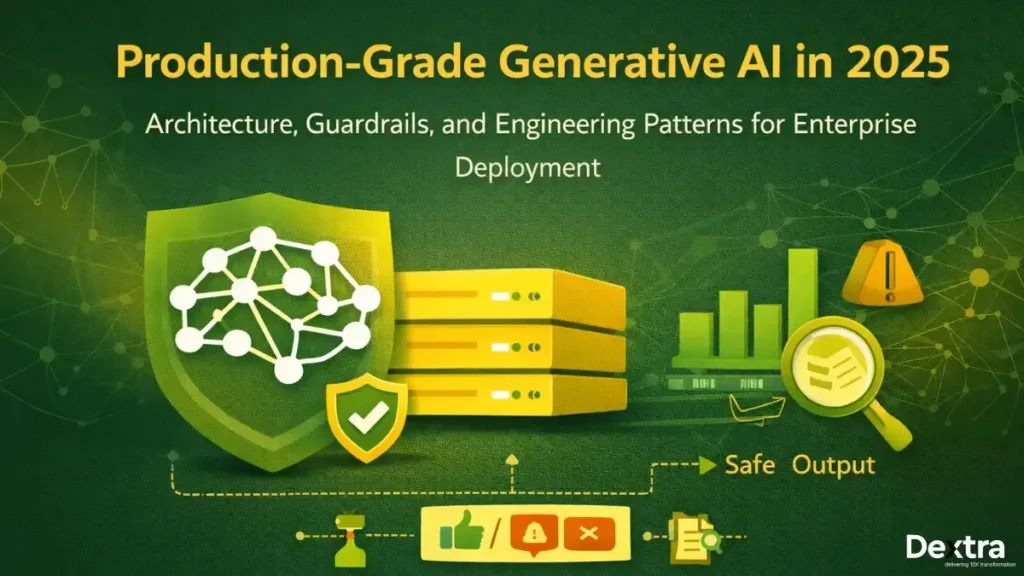

The move toward production-grade generative AI has turned an emerging practice into a serious engineering discipline. Building a chatbot or a one-off demo is no longer sufficient; companies must also consider architecture, governance, safety, testing, and observability from the very start of a project.

This guide from Dextralabs explains how to build generative AI applications that work in the real world. It covers architecture patterns, guardrails, evaluation methods, and engineering practices used in modern enterprise LLM development. The goal is simple: help organizations deploy AI systems that teams can trust, scale, and govern confidently.

Why Production-Grade GenAI Needs a New Engineering Mindset

By 2025, GenAI had been integrated into customer service, finance and accounting, and health and human services workflows across nearly every industry, and it now assists software development teams and supports day-to-day operations.

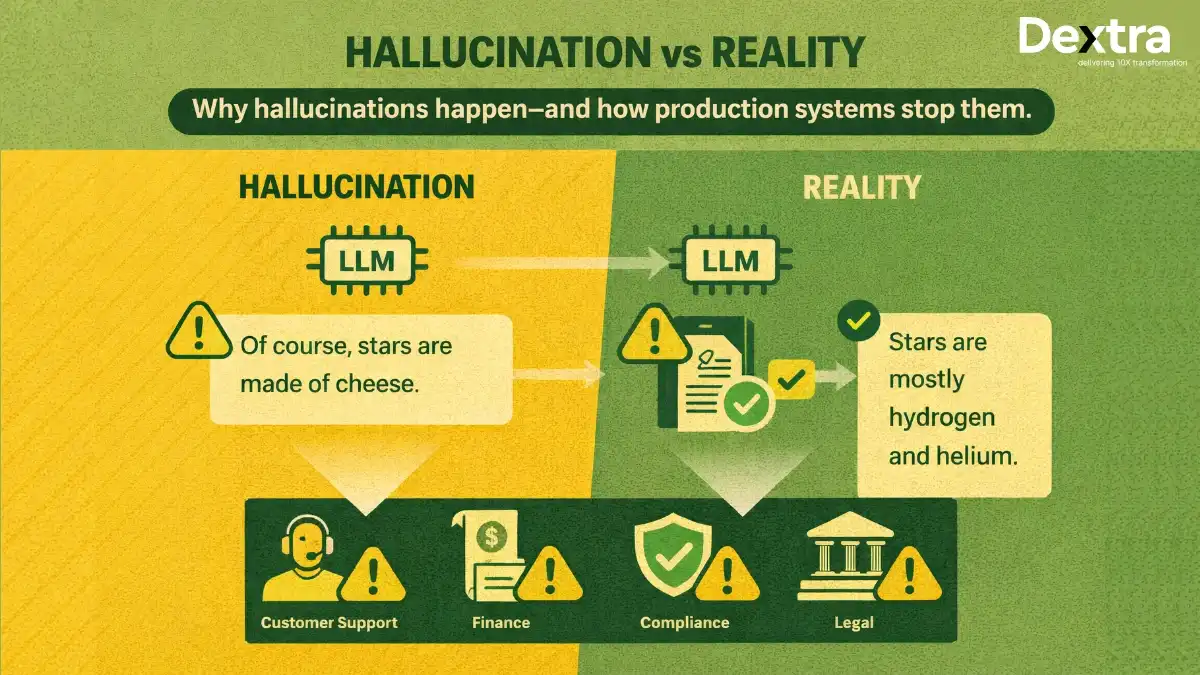

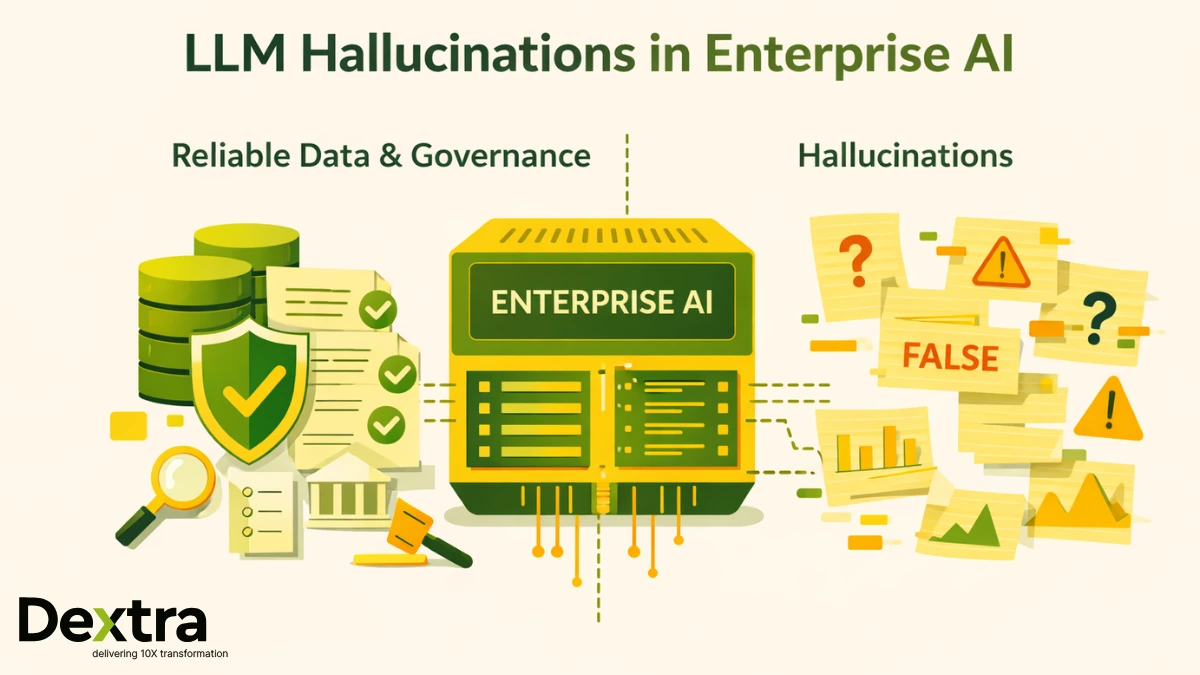

However, GenAI also introduces significant risks, including inaccurate outputs, non-deterministic behavior, and compliance gaps. Their consequences range from regulatory violations to financial losses to reputational damage.

According to surveys by McKinsey & Company, 78% of organizations already use AI in at least one business function, and the share of organizations adopting generative AI has surpassed 70%, a clear shift from the experimental phase toward production-level capabilities.

Production GenAI systems must therefore be held to a higher standard than experimental tools.

They are expected to deliver:

- Reliable outputs

- Predictable behavior

- Strong safety controls

- Clear audit trails

- Stable performance under load

Dextralabs works with enterprises that treat AI as critical infrastructure, not an experiment. This mindset aligns closely with GenAI engineering best practices, where AI systems are built like production software—not like demos.

Step Zero: Should You Even Use an LLM?

Before writing a single prompt or selecting a model, teams must ask a critical question: Is an LLM actually the right tool?

In many cases, businesses rush into AI without evaluating alternatives. A strong decision framework compares:

- LLMs

- Rules-based systems

- Classical machine learning

- Hybrid approaches

Factors such as cost, latency, regulatory needs, and accuracy requirements matter deeply in enterprise LLM development. LLMs shine in unstructured language tasks, reasoning, and dynamic workflows. But they are not always the best answer.
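The comparison above can be made explicit as a short checklist that runs before anyone writes a prompt. The sketch below is a hypothetical illustration, not a Dextralabs tool: the `Requirements` fields, the four labels, and the 300 ms latency cutoff are all assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    # Hypothetical fields; a real framework would also weigh cost and accuracy targets.
    unstructured_language: bool   # free-form text going in or coming out?
    needs_reasoning: bool         # open-ended or multi-step reasoning required?
    max_latency_ms: int           # end-to-end latency budget
    must_be_deterministic: bool   # e.g. strict regulatory rules that must never vary
    has_labeled_data: bool        # enough labeled examples to train classical ML?


def choose_approach(req: Requirements) -> str:
    """Suggest 'rules', 'classical_ml', 'llm', or 'hybrid' for a task."""
    language_task = req.unstructured_language or req.needs_reasoning
    if not language_task:
        # Structured problems rarely justify an LLM.
        return "classical_ml" if req.has_labeled_data else "rules"
    if req.must_be_deterministic or req.max_latency_ms < 300:  # 300 ms is an assumed cutoff
        # Deterministic logic stays in control; the LLM handles only the language parts.
        return "hybrid"
    return "llm"
```

For example, `choose_approach(Requirements(True, True, 2000, False, False))` returns `"llm"`, while a structured task with labeled data and no language component returns `"classical_ml"`. The value of encoding the decision is that it becomes recorded and reviewable rather than implicit.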

Dextralabs helps organizations avoid “LLM misuse” by ensuring AI is applied only where it delivers real business value. This step alone prevents unnecessary complexity and long-term technical debt in LLM production deployment.

Model Selection for Enterprise AI Systems

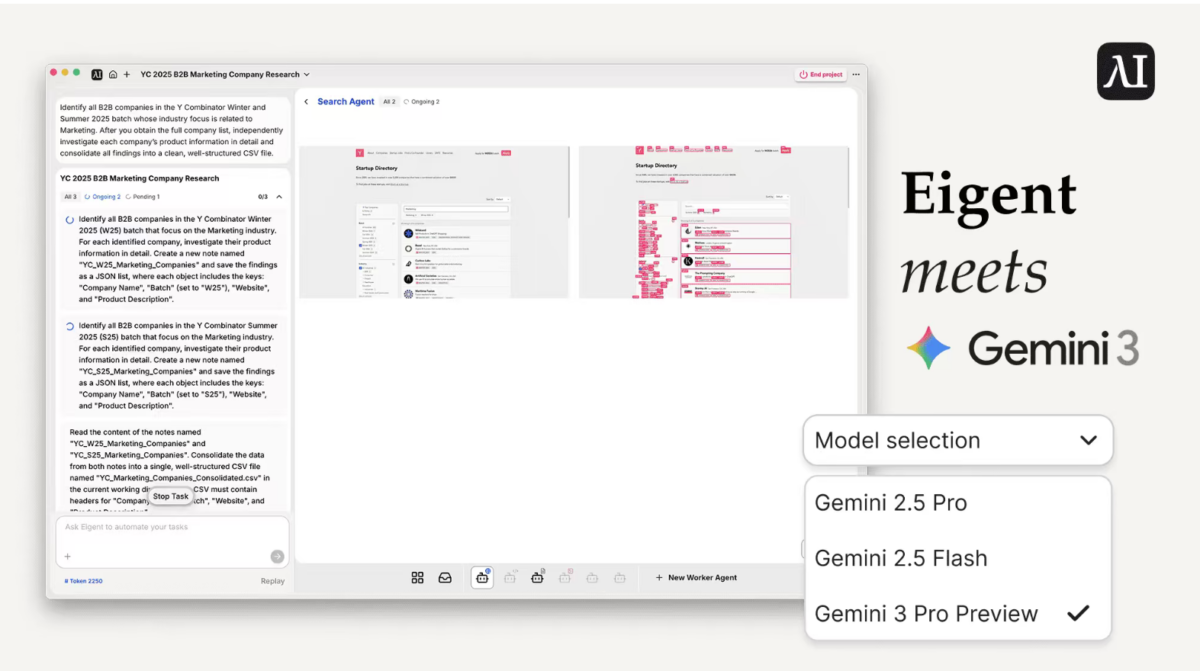

Choosing the right model is one of the most important decisions in production-grade generative AI. Enterprises in 2025 can choose from:

- Frontier models hosted via APIs

- Mid-size or distilled models

- On-prem or private LLMs

- Multi-model architectures

Model choice depends on task complexity, privacy requirements, latency targets, and cost constraints. Because no single model satisfies all of these constraints, many enterprises now run multiple models to make their workflows more flexible and resilient. Interoperability standards such as the Model Context Protocol (MCP), which connects models to tools and data sources, and the Agent2Agent (A2A) protocol, which lets agents communicate with one another, are becoming essential.

Because integrations are built against these open standards rather than a single vendor's API, teams can switch models without rewriting their entire stack, reducing vendor lock-in and future risk. This approach reflects modern GenAI engineering best practices, where adaptability is built into the architecture.
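One way to keep models swappable is to route every call through a thin, provider-agnostic interface. The sketch below assumes a hypothetical `ModelClient` interface, a `Router` class, and a length-based tier classifier. It does not use the MCP or A2A SDKs, and `FakeClient` stands in for an adapter around a real vendor SDK.

```python
from typing import Callable, Protocol


class ModelUnavailable(Exception):
    """Raised by a client when its provider cannot serve the request."""


class ModelClient(Protocol):
    """Hypothetical provider-agnostic interface; real vendor SDKs differ."""

    name: str

    def complete(self, prompt: str) -> str: ...


class Router:
    """Pick a model tier per request and fall back through that tier on failure."""

    def __init__(self, tiers: dict[str, list[ModelClient]],
                 classify: Callable[[str], str]) -> None:
        self.tiers = tiers        # tier name -> clients in order of preference
        self.classify = classify  # prompt -> tier name

    def complete(self, prompt: str) -> tuple[str, str]:
        tier = self.classify(prompt)
        errors: list[str] = []
        for client in self.tiers[tier]:
            try:
                return client.name, client.complete(prompt)
            except ModelUnavailable as exc:
                errors.append(f"{client.name}: {exc}")
        raise RuntimeError(f"all models in tier {tier!r} failed: {errors}")


class FakeClient:
    """Stand-in for a real provider adapter, useful in tests."""

    def __init__(self, name: str, fail: bool = False) -> None:
        self.name = name
        self.fail = fail

    def complete(self, prompt: str) -> str:
        if self.fail:
            raise ModelUnavailable("timeout")
        return f"[{self.name}] {prompt}"
```

Swapping vendors then means writing one new adapter and editing the `tiers` mapping, while calling code stays unchanged. In practice, `classify` might be a cheap classifier rather than a length check.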

Prompt Systems Engineering for Stable AI Behavior

Prompts are no longer simple text inputs. In enterprise LLM development, prompts act as control systems that define how AI behaves across thousands or millions of interactions.

Modern prompt systems include:

- Role-based system prompts

- Structured output formats

- Function and tool calling

- Guardrail-embedded instructions

Consistency matters more than creativity in production. Prompt systems must be testable, versioned, and monitored. Techniques like prompt consistency testing help teams detect regressions early and maintain stable AI behavior over time.

This structured approach is essential for LLM production deployment, where unpredictability is unacceptable.
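Versioning and structured outputs can be enforced in a few lines. The following is a minimal sketch under stated assumptions: the `PromptVersion` record, the in-memory `REGISTRY`, and the `ticket_triage` prompt are hypothetical. A production registry would live in version control or a prompt-management service.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    """One immutable, versioned system prompt plus the output keys it promises."""
    name: str
    version: str
    template: str
    required_keys: tuple[str, ...]

    def render(self, **fields: str) -> str:
        return self.template.format(**fields)


# Hypothetical registry keyed by (name, version); doubled braces are literal braces.
REGISTRY = {
    ("ticket_triage", "1.2.0"): PromptVersion(
        name="ticket_triage",
        version="1.2.0",
        template=(
            "You are a support triage assistant. Classify the ticket below.\n"
            'Reply with JSON only: {{"category": "<string>", "priority": "low|medium|high"}}\n'
            "Ticket: {ticket}"
        ),
        required_keys=("category", "priority"),
    )
}


def validate_output(prompt: PromptVersion, raw: str) -> dict:
    """Parse model output and reject anything that breaks the structured contract.

    json.JSONDecodeError is a ValueError subclass, so callers can catch ValueError
    for every contract violation.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("output must be a JSON object")
    missing = [k for k in prompt.required_keys if k not in data]
    if missing:
        raise ValueError(f"{prompt.name}@{prompt.version} missing keys: {missing}")
    return data
```

When callers pin an explicit `(name, version)` pair, a prompt edit ships as a new version that can be regression-tested before traffic moves to it.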

Input Quality Engineering and RAG Pipelines

One of the biggest lessons in production-grade generative AI is that input quality controls output quality. In many enterprise systems, more than 70% of accuracy depends on how the context is prepared.

This is where enterprise RAG architecture becomes critical. A strong Retrieval-Augmented Generation pipeline includes:

- Clean chunking strategies

- Smart embedding selection

- Freshness and recency controls

- Noise and duplication filtering

Well-designed model context pipelines ensure the LLM receives only relevant, accurate information. Poor pipelines lead to hallucinations, outdated answers, and inconsistent results.
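The chunking, freshness, and deduplication steps above can be sketched with the standard library alone. This minimal illustration rests on several assumptions: word-based windows stand in for a real tokenizer, the `Chunk` type and the 200-word/40-word defaults are hypothetical, and deduplication catches only exact matches after normalization, not near-duplicates. Embedding selection is out of scope here.

```python
import hashlib
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Chunk:
    doc_id: str
    text: str
    updated_at: datetime  # timezone-aware last-modified time of the source document


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once a new window would only repeat the previous window's overlap.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so trivially different copies hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def prepare_context(chunks: list[Chunk], max_age_days: int = 365,
                    now: Optional[datetime] = None) -> list[Chunk]:
    """Drop chunks older than `max_age_days` and exact duplicates after normalization."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    seen: set[str] = set()
    kept: list[Chunk] = []
    # Newest first, so the freshest copy of a duplicated passage is the one kept.
    for chunk in sorted(chunks, key=lambda c: c.updated_at, reverse=True):
        if chunk.updated_at < cutoff:
            continue
        digest = hashlib.sha256(normalize(chunk.text).encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(chunk)
    return kept
```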

Dextralabs designs enterprise-grade RAG systems supported by vector database optimization, ensuring fast retrieval and reliable grounding.

Token Efficiency and Cost Control at Scale

Token usage directly affects cost and latency. At enterprise scale, inefficient prompts or oversized context windows can turn AI systems into expensive liabilities.

Smart teams apply strategies such as:

- Request routing

- Context summarization

- Modular prompt design

- Selective LLM invocation

Tracking cost per query, per user, and per workflow helps leaders understand the true economics of LLM production deployment. These optimizations support long-term sustainability without reducing output quality.
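Per-query, per-user, and per-workflow cost tracking comes down to multiplying token counts by prices and aggregating the results. In this minimal sketch the model names and the `PRICES` table are placeholders, not any provider's real rates. Token counts are assumed to come from the usage metadata that providers return with each response.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder prices in USD per 1,000 tokens; NOT real rates, substitute your provider's.
PRICES = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.005, "output": 0.015},
}


@dataclass
class Usage:
    user: str
    workflow: str
    model: str
    input_tokens: int
    output_tokens: int


def query_cost(u: Usage) -> float:
    """Cost of one request from its token counts and the model's per-1k prices."""
    price = PRICES[u.model]
    return (u.input_tokens * price["input"] + u.output_tokens * price["output"]) / 1000


def cost_report(usages: list[Usage]) -> dict[str, dict[str, float]]:
    """Aggregate spend per user and per workflow, plus total and average cost per query."""
    by_user: dict[str, float] = defaultdict(float)
    by_workflow: dict[str, float] = defaultdict(float)
    for u in usages:
        cost = query_cost(u)
        by_user[u.user] += cost
        by_workflow[u.workflow] += cost
    total = sum(by_user.values())
    return {
        "by_user": dict(by_user),
        "by_workflow": dict(by_workflow),
        "summary": {"total": total, "per_query": total / len(usages) if usages else 0.0},
    }
```

Reviewing the `by_workflow` totals next to request volumes is one way to see where routing or context summarization would save the most.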

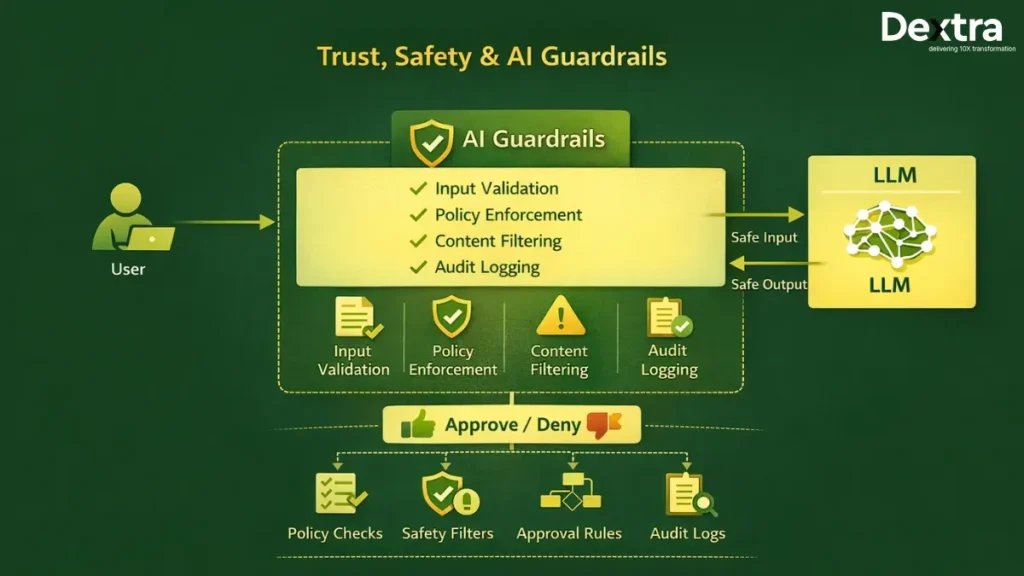

Trust, Safety, and AI Guardrails in Production

Trust is the foundation of enterprise AI. Without proper safety controls, even the most advanced system becomes a liability.

AI guardrails in production must be designed as a governance layer, not an afterthought. This layer includes:

- Input validation

- Policy enforcement

- Content filtering

- Red-teaming and stress testing
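Input validation, policy enforcement, and content filtering can start as a small check that runs before any model call. The sketch below is a minimal, hypothetical example: the `check_input` function, the 4,000-character limit, and the two regex rules are assumptions for illustration. Production systems typically layer dedicated moderation and prompt-injection classifiers on top of checks like these.

```python
import re
from dataclasses import dataclass, field

# Illustrative policy only: a few regexes are no substitute for maintained classifiers.
MAX_INPUT_CHARS = 4000
BLOCKED_PATTERNS = {
    "prompt_injection": re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class GuardrailResult:
    allowed: bool
    reasons: list[str] = field(default_factory=list)


def check_input(text: str) -> GuardrailResult:
    """Validate a user message before it reaches the model; collect every violation."""
    reasons: list[str] = []
    if not text.strip():
        reasons.append("empty_input")
    if len(text) > MAX_INPUT_CHARS:
        reasons.append("input_too_long")
    for name, pattern in BLOCKED_PATTERNS.items():
        if pattern.search(text):
            reasons.append(name)
    return GuardrailResult(allowed=not reasons, reasons=reasons)
```

Because the function returns the reasons rather than only a yes/no answer, blocked requests can be logged and reviewed during red-teaming.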

In agentic AI systems, tool and action authorization is especially important. Autonomous decisions must be limited by role-based permissions and audit rules.
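Role-based tool authorization with an audit trail can be sketched as a single gate that every agent action passes through. The roles, tool names, and the rule that `issue_refund` always needs human approval are hypothetical. The in-memory `AUDIT_LOG` stands in for durable, append-only storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role -> permitted tools; real systems load this from a policy store.
ROLE_PERMISSIONS = {
    "support_agent": {"search_kb", "create_ticket"},
    "finance_agent": {"search_kb", "read_invoice", "issue_refund"},
}
# Tools that additionally need explicit human sign-off on every call.
REQUIRES_APPROVAL = {"issue_refund"}


@dataclass
class AuditEntry:
    timestamp: str
    agent_role: str
    tool: str
    decision: str


AUDIT_LOG: list[AuditEntry] = []


def authorize_tool_call(agent_role: str, tool: str, human_approved: bool = False) -> bool:
    """Allow a tool call only if the role permits it (and a human approved, if required)."""
    if tool not in ROLE_PERMISSIONS.get(agent_role, set()):
        decision = "denied"
    elif tool in REQUIRES_APPROVAL and not human_approved:
        decision = "pending_approval"
    else:
        decision = "allowed"
    # Every decision is recorded, including denials, so audits see the full picture.
    AUDIT_LOG.append(
        AuditEntry(datetime.now(timezone.utc).isoformat(), agent_role, tool, decision)
    )
    return decision == "allowed"
```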

Dextralabs helps enterprises build configurable safety profiles for regulated industries, ensuring compliance with internal policies and external regulations. This approach supports strong enterprise AI governance from day one.

Enterprise-Grade QA and Testing for LLM Applications

Testing AI systems is different from testing traditional software. Outputs can vary, but standards still apply.

Modern QA strategies include:

- Functional correctness checks

- Regression tests for prompts

- Retrieval accuracy benchmarks

- Human-in-the-loop reviews

Automated LLM testing pipelines reduce risk and speed up releases. In regulated environments, human review remains critical, but automation handles most of the workload.
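Prompt regression tests can run like ordinary unit tests against a fixed "golden" set of inputs. This is a minimal sketch. Substring checks are a deliberately simple stand-in for richer graders such as LLM-as-judge or semantic similarity, and `fake_model` is a hypothetical placeholder for the real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenCase:
    name: str
    user_input: str
    must_contain: list[str]       # facts the answer has to mention
    must_not_contain: list[str]   # phrases that signal a regression


def run_regression(model: Callable[[str], str], cases: list[GoldenCase]) -> list[str]:
    """Run every golden case and return human-readable failures (empty list = pass)."""
    failures: list[str] = []
    for case in cases:
        answer = model(case.user_input).lower()
        for phrase in case.must_contain:
            if phrase.lower() not in answer:
                failures.append(f"{case.name}: missing '{phrase}'")
        for phrase in case.must_not_contain:
            if phrase.lower() in answer:
                failures.append(f"{case.name}: contains forbidden '{phrase}'")
    return failures


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call so the suite can run offline in CI.
    return "Refunds are accepted within 30 days of purchase."


CASES = [
    GoldenCase("refund_window", "How long do I have to return an item?",
               must_contain=["30 days"], must_not_contain=["60 days", "I am not sure"]),
]
```

In CI, a non-empty result from `run_regression(fake_model, CASES)` would fail the build before a prompt change reaches users.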

Strong testing frameworks are a core part of GenAI engineering best practices and are essential for reliable LLM production deployment.

Performance Testing for Real-World Load

Enterprise AI systems must perform well under pressure. This means planning for peak traffic, API limits, and downstream service delays.

Effective LLM performance testing examines:

- End-to-end latency

- Concurrency handling

- Rate limit recovery

- Agent orchestration overhead

As AI agent orchestration becomes more common, teams must test entire workflows, not just individual calls. Performance issues often appear only when multiple agents interact under load.
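A basic load test can be scripted with `asyncio`. It bounds concurrency with a semaphore, retries rate-limited calls with exponential backoff, and reports latency percentiles. The sketch below assumes a hypothetical `RateLimitError` and a simulated `fake_llm_call`. It measures single calls, so for agent workflows the `call` argument should wrap the whole orchestrated workflow rather than one model request.

```python
import asyncio
import random
import statistics
import time
from typing import Awaitable, Callable


class RateLimitError(Exception):
    """Hypothetical error type; map your provider's HTTP 429 responses onto it."""


async def call_with_backoff(call: Callable[[], Awaitable[str]],
                            max_retries: int = 4, base_delay: float = 0.05) -> str:
    """Retry a rate-limited async call with exponential backoff plus random jitter."""
    for attempt in range(max_retries + 1):
        try:
            return await call()
        except RateLimitError:
            if attempt == max_retries:
                raise
            await asyncio.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))


async def load_test(call: Callable[[], Awaitable[str]],
                    requests: int = 50, concurrency: int = 10) -> dict[str, float]:
    """Send `requests` calls, at most `concurrency` at a time; report latency in seconds."""
    if requests < 2:
        raise ValueError("need at least two requests to compute percentiles")
    sem = asyncio.Semaphore(concurrency)
    latencies: list[float] = []

    async def one_request() -> None:
        async with sem:
            start = time.perf_counter()
            await call_with_backoff(call)  # latency includes any retry waits
            latencies.append(time.perf_counter() - start)

    await asyncio.gather(*(one_request() for _ in range(requests)))
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")  # 99 cut points
    return {"p50": cuts[49], "p95": cuts[94], "max": max(latencies)}


async def fake_llm_call() -> str:
    """Simulated endpoint: 20-40 ms latency, roughly 10% of requests rate-limited."""
    await asyncio.sleep(random.uniform(0.02, 0.04))
    if random.random() < 0.1:
        raise RateLimitError("429 Too Many Requests")
    return "ok"
```

Running `asyncio.run(load_test(fake_llm_call))` returns p50, p95, and maximum latency in seconds. The values vary from run to run because the simulated endpoint is random.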

Continuous Evaluation Pipelines for Reliability

Manual evaluation does not scale. In 2025, continuous evaluation is mandatory for production-grade generative AI.

Automated pipelines combine:

- Synthetic test data

- Real user feedback

- Weighted scoring systems

- Pass/fail thresholds

These pipelines often rely on LLM evaluation frameworks to track accuracy, safety, and behavior changes over time. Continuous evaluation ensures that improvements do not introduce new risks.
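A weighted scoring gate with pass/fail thresholds might look like the following. The metric names, weights, and thresholds are assumptions for illustration. The per-metric scores (each in [0, 1]) are assumed to be computed upstream from synthetic test sets and real user feedback.

```python
from dataclasses import dataclass

# Hypothetical weights (summing to 1) and release gates.
WEIGHTS = {"accuracy": 0.5, "groundedness": 0.3, "safety": 0.2}
MIN_OVERALL = 0.80
HARD_FLOORS = {"safety": 0.95}  # fail the release on these regardless of the weighted score


@dataclass
class EvalResult:
    overall: float
    passed: bool
    reasons: list[str]


def evaluate_release(scores: dict[str, float]) -> EvalResult:
    """Combine per-metric scores into a weighted total and apply pass/fail gates."""
    overall = sum(WEIGHTS[m] * scores[m] for m in WEIGHTS)
    reasons: list[str] = []
    if overall < MIN_OVERALL:
        reasons.append(f"overall {overall:.2f} < {MIN_OVERALL}")
    for metric, floor in HARD_FLOORS.items():
        if scores[metric] < floor:
            reasons.append(f"{metric} {scores[metric]:.2f} < {floor}")
    return EvalResult(overall=overall, passed=not reasons, reasons=reasons)
```

The hard floor matters. In a purely weighted scheme, a strong accuracy gain could mask a safety regression, whereas a per-metric floor blocks the release outright.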

Monitoring, Logging, and GenAI Observability

You cannot manage what you cannot see. GenAI observability acts as the nervous system of production AI.

Key metrics include:

- Hallucination rates

- Tool-call failures

- Retrieval mismatches

- Reasoning depth variance

Advanced systems capture agent traces and structured logs while protecting sensitive data. Integration with ModelOps and AIOps platforms helps teams detect issues early and respond quickly.
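Structured, redacted logs make metrics such as tool-call failure rates straightforward to compute. This is a minimal sketch assuming JSON-lines logs and two illustrative redaction rules (email addresses and long digit runs). Real PII detection needs far broader coverage, and hallucination rates additionally require an evaluator, which is not shown.

```python
import json
import re
import uuid
from collections import Counter

# Illustrative redaction rules only; real deployments need much broader PII coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_NUMBER = re.compile(r"\b\d{9,}\b")  # e.g. account or card numbers


def redact(text: str) -> str:
    """Mask obvious personal data before anything is written to logs."""
    return LONG_NUMBER.sub("[NUMBER]", EMAIL.sub("[EMAIL]", text))


def new_trace_id() -> str:
    """One ID per user request, shared by every agent step it triggers."""
    return str(uuid.uuid4())


def log_event(trace_id: str, step: str, status: str, detail: str = "") -> str:
    """Emit one structured, redacted JSON log line for an agent step."""
    record = {"trace_id": trace_id, "step": step, "status": status, "detail": redact(detail)}
    line = json.dumps(record)
    print(line)  # in production, ship this to the observability backend instead
    return line


def failure_rates(lines: list[str]) -> dict[str, float]:
    """Failure rate per step type (e.g. tool_call, retrieval) from JSON log lines."""
    totals: Counter = Counter()
    failures: Counter = Counter()
    for line in lines:
        rec = json.loads(line)
        totals[rec["step"]] += 1
        if rec["status"] != "ok":
            failures[rec["step"]] += 1
    return {step: failures[step] / totals[step] for step in totals}
```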

Strong observability separates reliable AI platforms from fragile ones.

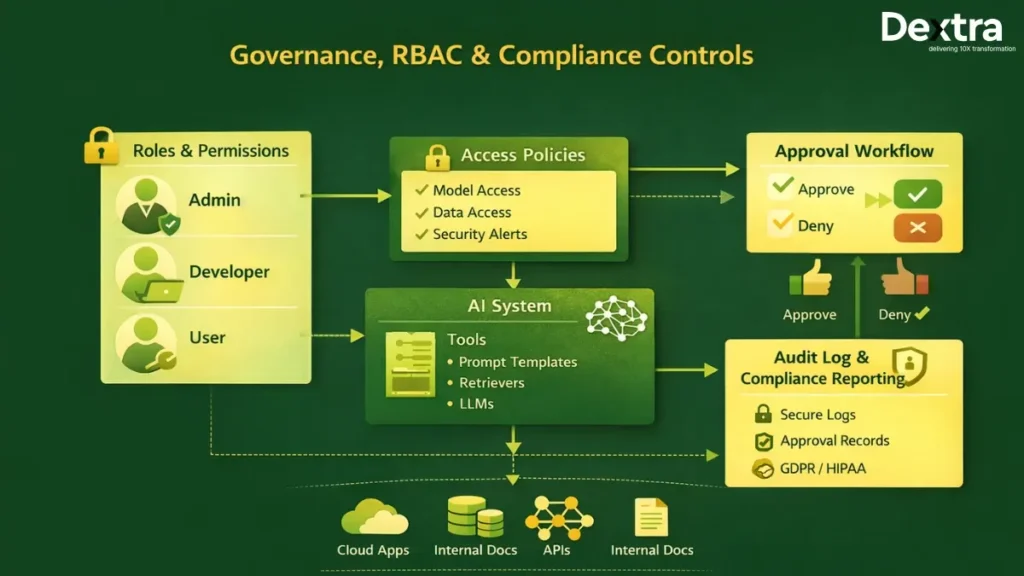

Governance, RBAC, and Compliance Controls

Governance is no longer optional. Enterprises must manage who can access models, data, and tools.

Effective governance includes:

- Role-based approvals

- Secure prompt and log storage

- Compliance with GDPR, HIPAA, and PCI DSS, plus readiness for internal audits

Board-level reporting dashboards help leaders understand AI risk and performance. This governance layer supports long-term trust in enterprise LLM development and protects organizations as AI usage grows.
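Role-based approvals can be enforced in code, so that no prompt, model, or data-source change reaches production without the right sign-offs. The policy table, role names, and `ChangeRequest` class below are hypothetical. This is a minimal sketch rather than a compliance control in itself.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which roles must sign off on each kind of change.
APPROVAL_POLICY = {
    "prompt_change": {"ai_lead"},
    "model_swap": {"ai_lead", "security"},
    "new_data_source": {"ai_lead", "security", "compliance"},
}


@dataclass
class ChangeRequest:
    change_type: str
    author: str
    approvals: dict[str, str] = field(default_factory=dict)  # role -> approver

    def approve(self, approver: str, role: str) -> None:
        """Record a sign-off, enforcing separation of duties and role eligibility."""
        if approver == self.author:
            raise PermissionError("authors cannot approve their own changes")
        if role not in APPROVAL_POLICY[self.change_type]:
            raise PermissionError(f"role {role!r} cannot approve {self.change_type}")
        self.approvals[role] = approver

    def missing_roles(self) -> set[str]:
        return APPROVAL_POLICY[self.change_type] - set(self.approvals)

    def can_deploy(self) -> bool:
        return not self.missing_roles()
```

A deployment pipeline would refuse to promote a change while `can_deploy()` is false. The recorded `approvals` feed the audit trail and board-level reporting.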

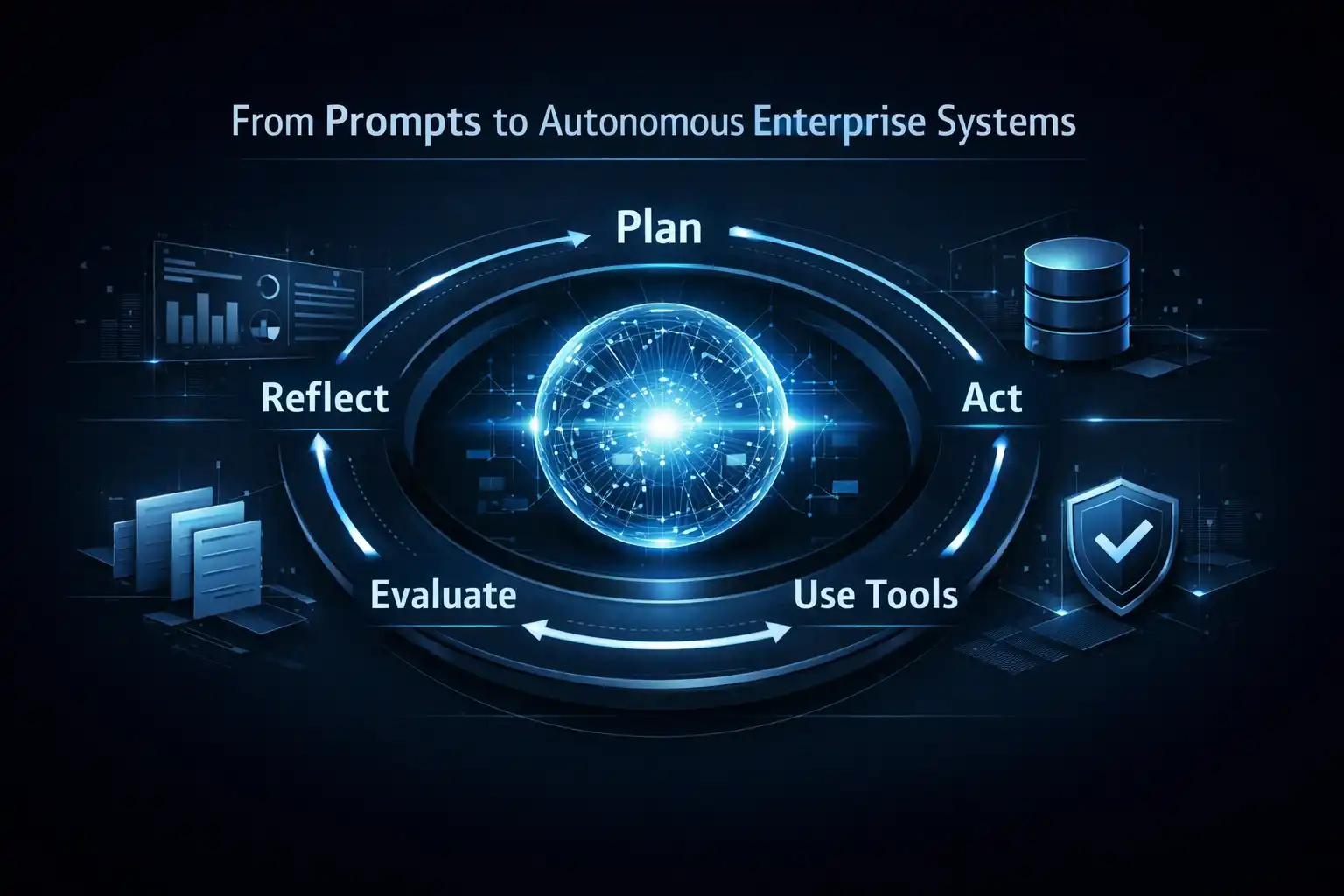

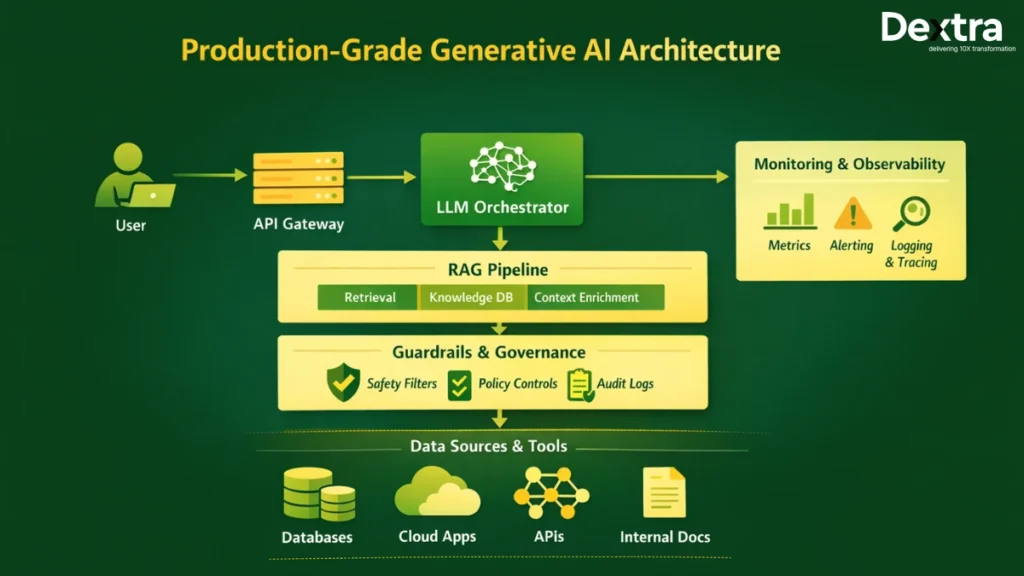

Dextralabs Reference Architectures for 2025

Dextralabs designs reference architectures based on how generative AI systems operate in real enterprise environments—not how they appear in isolated demos. These architectures are built to support production-grade LLM applications with strong foundations in reliability, security, and governance.

Key elements of Dextralabs’ reference architectures include:

- Production-grade LLM applications with RAG and guardrails: Architects use Retrieval-Augmented Generation (RAG) pipelines with stringent context controls. Built-in guardrails protect enterprise data and mitigate the risk of hallucinations or unsafe outputs.

- Multi-agent workflows using open standards: Dextralabs builds multi-agent systems on open interoperability standards, so AI agents can collaborate across tasks while remaining auditable and governed by permissions. Open standards also let companies scale these systems over time and avoid vendor lock-in.

- Full GenAI stacks with observability and governance: Every architecture integrates a monitoring, evaluation, and governance layer, so every decision made by a model, or by an agent using a tool, can be tracked, tested, and governed.

All architectures support the deployment of autonomous AI agents that deliver value at the enterprise level while adhering to principles of safety, transparency, and operational integrity.

Enterprise Case Studies

Dextralabs has helped organizations across a variety of industries deploy production-ready generative AI solutions that create measurable and dependable value for their businesses. These systems were built to succeed under real-world constraints such as compliance, data security, and scalability, rather than as research or test-and-learn exercises.

Key enterprise deployments include:

- BFSI audit automation: Protected Retrieval-Augmented Generation (RAG) pipelines were implemented to automate audit workflows while ensuring sensitive financial data remained secure, traceable, and compliant with regulatory standards.

- SaaS code intelligence platforms: AI-powered systems were developed to support developers with code understanding, documentation generation, and faster debugging, resulting in improved productivity and shorter development cycles.

- Healthcare decision support: On-prem LLM deployments enabled clinical teams to access decision support tools while maintaining strict data privacy and regulatory compliance.

- Logistics optimization: Agent-driven workflows were used to automate operational decisions such as route planning and exception handling, improving efficiency across complex logistics networks.

Each case highlights how production-grade generative AI delivers tangible business impact when engineered correctly.

The Future of Production GenAI

Looking ahead, multi-model and multi-agent systems will become the default. Open standards will replace vendor lock-in, and governance will define market leaders.

Enterprises that succeed will treat AI as engineering infrastructure—not a novelty.

Dextralabs continues to help organizations design, deploy, and scale AI systems that work in reality, not just in prototypes. By following proven GenAI engineering best practices, businesses can confidently move from experimentation to long-term success in LLM production deployment.

Conclusion

Building production-grade generative AI in 2025 requires much more than picking the best model. It also requires a comprehensive architecture, strong governance, extensive testing, full visibility into system behavior, and reliability at scale.

Once these foundations are in place, AI becomes a reliable business asset rather than an experimental technology that risks becoming an operational liability.

Dextralabs bridges the gap between corporate AI strategy and execution, helping enterprises build generative AI applications that are secure, compliant, and scalable. With the right engineering approach, enterprise LLM development becomes a long-term competitive advantage that drives measurable value across the organization.

FAQs on how to build generative AI applications:

Q. What makes a generative AI application “production-grade”?

A production-grade generative AI application is designed to operate reliably at scale, not just work in demos. It includes stable model behavior, strong input pipelines, guardrails for safety, automated evaluation, performance monitoring, and governance controls. In practice, this means the system can handle real users, real data, compliance requirements, and ongoing change without breaking or producing unpredictable outcomes.

Q. How do enterprises decide whether they actually need an LLM?

Not every problem needs an LLM. Enterprises should first evaluate whether the task requires reasoning, language understanding, or flexible decision-making. Many successful systems use a hybrid approach—combining deterministic rules, traditional ML, and LLMs only where they add clear business value. Starting with this decision prevents unnecessary cost, latency, and risk.

Q. Why do most GenAI pilots fail when moving to production?

Most GenAI pilots fail because they focus on model capability instead of system design. Common gaps include poor input quality, missing guardrails, no evaluation pipeline, limited observability, and unclear ownership. Production environments expose these weaknesses quickly, especially under real user load and regulatory scrutiny.

Q. How do companies control hallucinations and unsafe outputs in production systems?

Hallucinations are controlled through a combination of strategies: high-quality retrieval (RAG), strict prompt constraints, role-based permissions, output validation, and continuous evaluation. In enterprise environments, models must also be forced to ground responses in approved data sources and clearly abstain when confidence is low. Guardrails are not optional—they are foundational.
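Grounding and abstention can be enforced before the model is even called. The sketch below is hypothetical: the `Passage` type, the approved-source set, the 0.75 similarity threshold, and the `llm` callable are assumptions. A retrieval score is only a rough proxy for confidence.

```python
from dataclasses import dataclass
from typing import Callable, Optional

ABSTAIN = "I can't answer that from the approved sources."


@dataclass
class Passage:
    source: str
    text: str
    score: float  # retrieval similarity, assumed to be in [0, 1]


def build_grounded_prompt(question: str, passages: list[Passage],
                          approved_sources: set[str],
                          min_score: float = 0.75) -> Optional[str]:
    """Return a prompt restricted to approved, relevant passages, or None to abstain."""
    usable = [p for p in passages if p.source in approved_sources and p.score >= min_score]
    if not usable:
        return None
    context = "\n".join(f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(usable, 1))
    return (
        "Answer using only the numbered passages below and cite them like [1]. "
        f'If they do not contain the answer, reply exactly: "{ABSTAIN}"\n\n'
        f"{context}\n\nQuestion: {question}"
    )


def answer(question: str, passages: list[Passage], approved_sources: set[str],
           llm: Callable[[str], str]) -> str:
    """Abstain without calling the model when no approved, relevant context exists."""
    prompt = build_grounded_prompt(question, passages, approved_sources)
    return ABSTAIN if prompt is None else llm(prompt)
```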

Q. What role does governance play in enterprise GenAI adoption?

Governance ensures that generative AI systems remain trustworthy, auditable, and compliant as they scale. This includes access controls, decision logs, monitoring for drift, and clear accountability. Boards and leadership teams increasingly expect visibility into how AI systems behave, how risks are managed, and how business value is measured over time.