Have you ever asked an AI a question and received… something that felt like a generic guess? You’re not alone. It’s frustrating. You know the technology is powerful. You’ve seen case studies and viral examples of people getting incredible results. Yet when you try, the output feels vague, generic, or just not right.

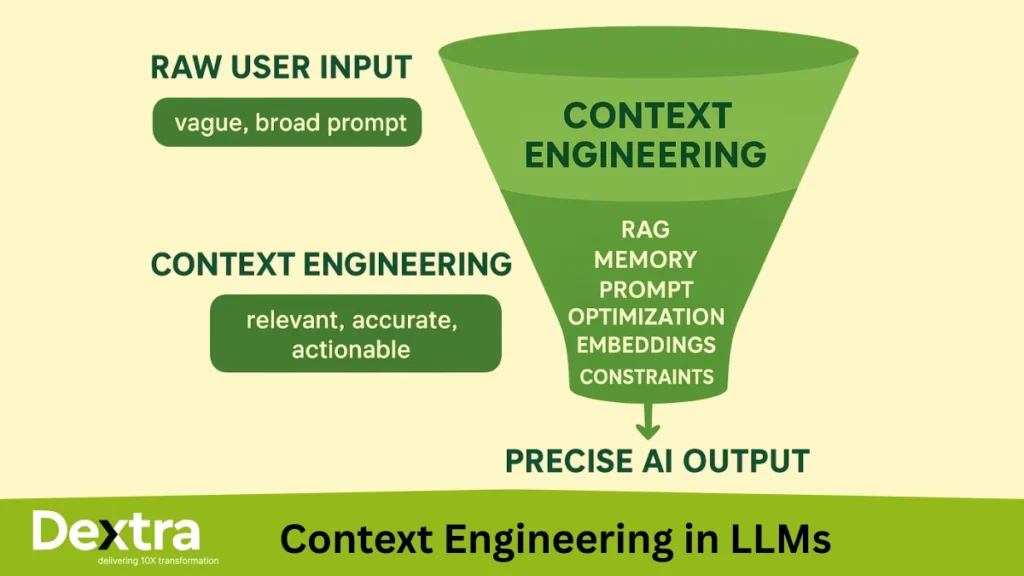

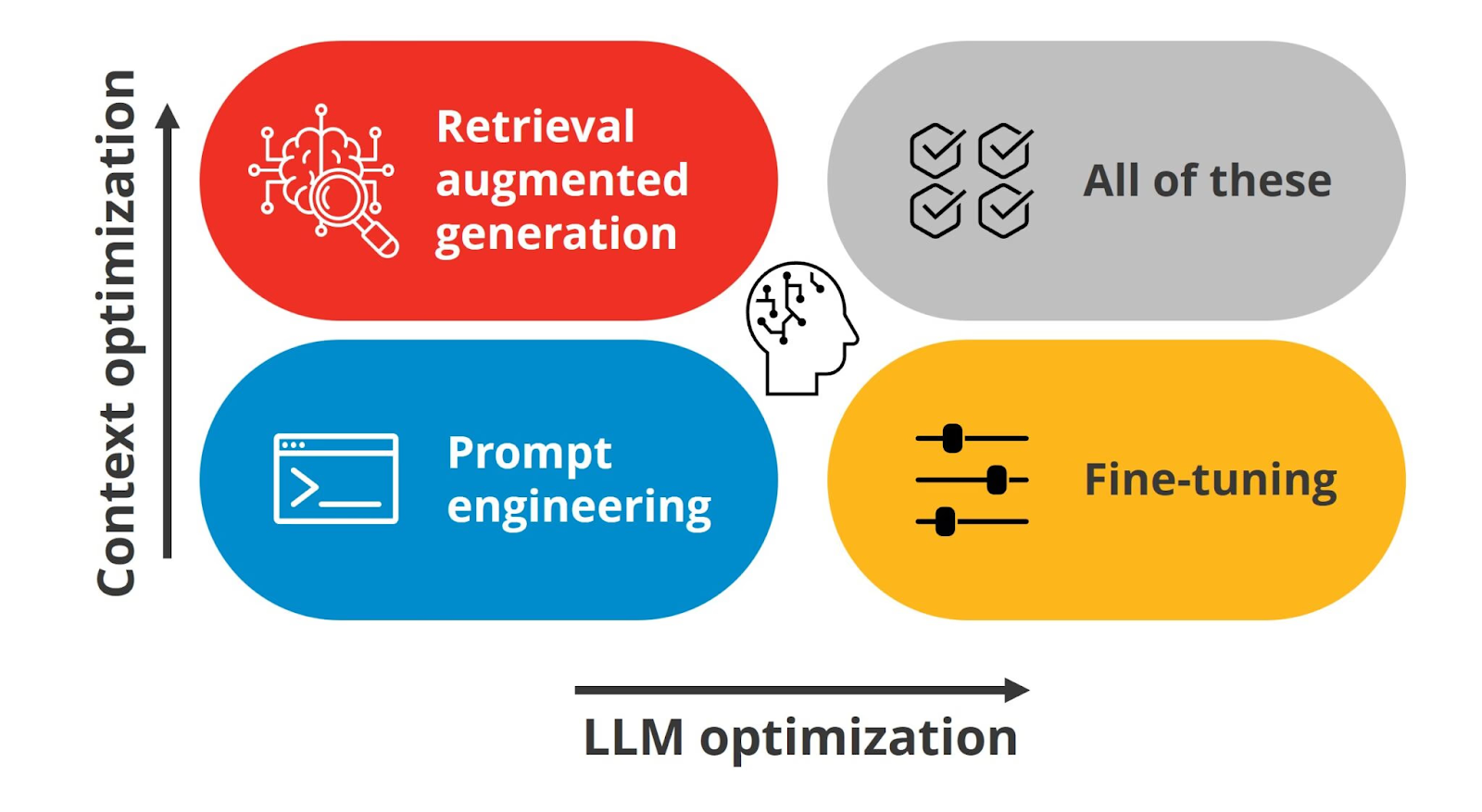

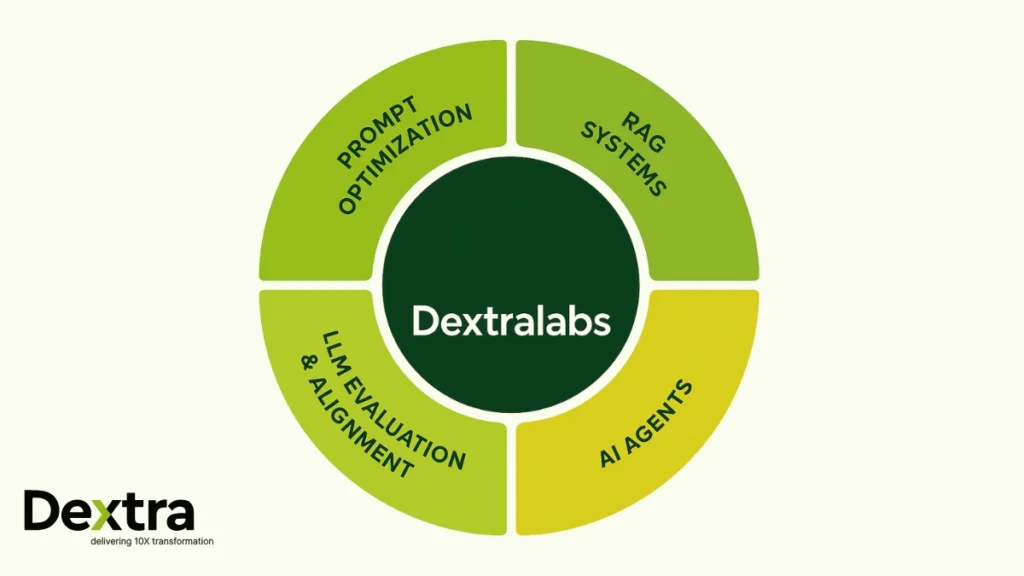

At Dextralabs, we’ve watched this frustration up close while helping enterprises deploy and scale Large Language Models. Whether it’s refining prompts, designing Retrieval-Augmented Generation (RAG) systems, or aligning AI with real business needs, one lesson is clear: powerful results don’t come from the model alone; they come from the context you give it.

Here’s the real secret: getting great answers from AI has less to do with the AI’s intelligence and more to do with your instructions. And that’s where context engineering comes in.

What Is Context Engineering, Really?

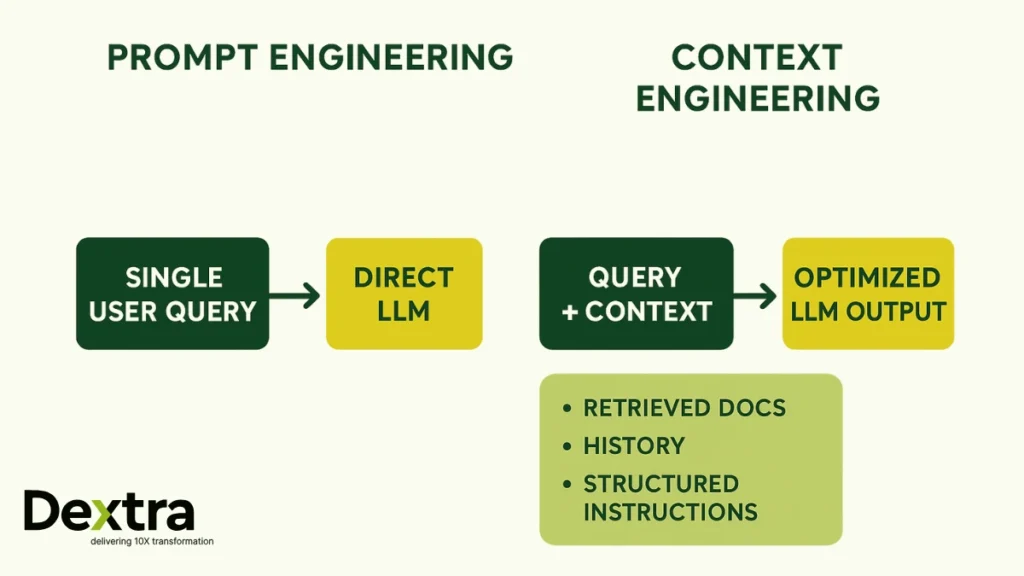

Context engineering is the deliberate practice of shaping everything an AI model sees so that it can give the best possible response. You’re not just asking a question; you’re setting up the situation, supplying the relevant data, and directing what the AI should do. It’s the difference between asking a colleague for hazy advice and giving them all the information they need to make an accurate recommendation.

It’s the practice of crafting your inputs – your prompts – to guide the AI toward the exact output you want. This isn’t about making prompts longer; it’s about making them smarter.

Research suggests that well-engineered context can substantially improve the accuracy of LLMs such as GPT-4. That’s the difference between an AI that “sort of” answers your question and one that answers it reliably.

Addressing Skeptics: Cutting Through the Hype

Let’s address the elephant in the room: some developers dislike the term “context engineering.” To them, it sounds like prompt engineering dressed up in flashy new clothes, or worse, yet another buzzword passing itself off as genuine innovation. And honestly? Their skepticism isn’t entirely misplaced.

Traditional prompt engineering focuses on writing the right instructions for an LLM. Context engineering is broader: it involves managing the entire environment in which the AI operates. That includes retrieving relevant material on the fly, retaining memory across conversations, coordinating external tools, and tracking state over long interactions. It’s not just what you ask the AI; it’s how you structure the entire conversation.
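To make the distinction concrete, here is a minimal Python sketch of a context layer that combines fixed instructions, retrieved material, and a rolling conversation memory into one prompt. It is illustrative only: `ContextBuilder`, `tokenize`, and their parameters are hypothetical names, and word-overlap scoring is a deliberately simple stand-in for the embedding-based retrieval a real RAG system would use. No model is called; the output is just the assembled prompt text.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class ContextBuilder:
    instructions: str                 # standing rules for the model
    documents: list[str]              # hypothetical knowledge base
    top_k: int = 2                    # documents retrieved per question
    max_turns: int = 4                # conversation turns kept in memory
    history: list[tuple[str, str]] = field(default_factory=list)

    def retrieve(self, query: str) -> list[str]:
        """Return up to top_k documents sharing the most words with the query."""
        q = tokenize(query)
        scored = [(len(q & tokenize(doc)), doc) for doc in self.documents]
        hits = [(score, doc) for score, doc in scored if score > 0]
        hits.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep original order
        return [doc for _, doc in hits[: self.top_k]]

    def remember(self, role: str, text: str) -> None:
        """Append one conversation turn to memory."""
        self.history.append((role, text))

    def build(self, query: str) -> str:
        """Assemble instructions, retrieved material, recent memory, and the question."""
        sections = [f"## Instructions\n{self.instructions}"]
        docs = self.retrieve(query)
        if docs:
            sections.append("## Relevant material\n" + "\n".join(f"- {d}" for d in docs))
        # Guard against max_turns == 0, since history[-0:] would return everything.
        recent = self.history[-self.max_turns:] if self.max_turns > 0 else []
        if recent:
            sections.append("## Conversation so far\n" + "\n".join(f"{r}: {t}" for r, t in recent))
        sections.append(f"## Question\n{query}")
        return "\n\n".join(sections)


builder = ContextBuilder(
    instructions="Answer using only the material provided.",
    documents=[
        "Refunds are issued within 14 days of a return.",
        "Our office is closed on public holidays.",
    ],
)
builder.remember("user", "I returned a kettle last week.")
print(builder.build("How long do refunds take?"))
```

Only the refund document shares words with the question, so the office-hours note stays out of the prompt; capping memory at a few turns keeps long conversations from crowding out the material that matters.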

However, here’s an uncomfortable truth: most of what we call “engineering” in AI today is still more art than science. There’s too much guesswork, too little rigorous testing, and far too little standardization. Even with carefully engineered context, LLMs still hallucinate, stumble over logic, and struggle with complex multi-step reasoning. Context engineering isn’t about fixing these problems; it’s about navigating them as wisely as possible.

So, no, context engineering isn’t magical. It will not convert defective models into ideal thinking machines. However, it does help us get more reliability and precision out of the tools we have, and for the time being, that’s all we can do.

Why Context Is the Multiplier

Large language models (LLMs) don’t think the way we do. They don’t “know” things – they recognize patterns and predict what comes next based on enormous amounts of training data.

If you provide vague input, the AI fills in the blanks with its best guess – and that guess might not match your expectations. But when you give it a clear structure, it can lock in on your intent.

This is why high-performing AI teams, like those at Dextralabs, obsess over clarity. When building AI-powered products or automating enterprise processes, they know that precision in inputs drives precision in results.

The Real-World Impact of Good Context

Context engineering isn’t just an “AI nerd” thing. It has real-world business impact.

1. Smarter Customer Support

Customer service chatbots often frustrate users because they seem robotic and irrelevant. But when AI bots are given rich context – like past conversation history, customer preferences, and order data – they can respond naturally.

Some companies have seen a 50% drop in the number of support tickets escalated to human agents after making their chatbots more context-aware. That’s happier customers, lower costs, and faster resolution times.
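As a rough illustration of what “rich context” means for a support bot, the sketch below folds a customer profile, recent orders, and the last few messages into a single prompt. The `Customer` and `Order` fields and the `build_support_prompt` function are hypothetical; a production system would pull this data from its CRM and order systems and pass the resulting string to whichever model it uses.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    tone: str               # hypothetical preference, e.g. "brief" or "detailed"
    preferred_channel: str  # hypothetical preference, e.g. "email" or "sms"


@dataclass
class Order:
    order_id: str
    item: str
    status: str


def build_support_prompt(customer: Customer, orders: list[Order],
                         history: list[str], message: str,
                         max_history: int = 3) -> str:
    """Combine profile, orders, and recent conversation into one prompt string."""
    order_lines = "\n".join(f"- {o.order_id}: {o.item} ({o.status})" for o in orders) or "- none on file"
    recent = history[-max_history:] if max_history > 0 else []
    history_lines = "\n".join(recent) or "(new conversation)"
    return (
        "You are a customer support agent. Answer from the data below; "
        "if the answer is not there, say so and offer to escalate.\n\n"
        f"Customer: {customer.name}\n"
        f"Prefers {customer.tone} answers, follow-up via {customer.preferred_channel}\n\n"
        f"Recent orders:\n{order_lines}\n\n"
        f"Recent conversation:\n{history_lines}\n\n"
        f"New message: {message}"
    )


prompt = build_support_prompt(
    Customer("Priya", "brief", "email"),
    [Order("A-1001", "Blender", "shipped")],
    ["user: where is my order?", "agent: let me check"],
    "Has A-1001 shipped yet?",
)
print(prompt)
```

The explicit instruction to say so and offer escalation when the data doesn’t cover a question is meant to keep the bot from guessing at order details it was never given.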

2. Faster, Higher-Quality Content

Faster, more accurate output is not a theory; it’s what happens when AI is given the proper context. Marketers who write specific prompts generate content up to three times faster and drive more engagement. The gap between a broad request like “Write a post about tech” and a specific prompt like “Write a LinkedIn post for startup founders about the top three mistakes to avoid when hiring engineers” is the difference between noise and real impact.

At Dextralabs, we’ve seen this extend beyond marketing. When we worked with a SaaS business to build a context-rich AI support agent, the results spoke for themselves: by combining prompt optimization with LLM evaluation, customer escalations fell by 47% in just three months.

3. Accuracy in Critical Fields

In industries like law and medicine, precision is everything. Feeding AI systems with detailed patient histories or specific legal documents can dramatically cut error rates – turning AI from a risky gamble into a reliable partner.

Your Guide to Becoming a Context Master

If you want to start getting better results from AI immediately, here’s the playbook:

Be Specific

- Bad: “Write about cars.”

- Good: “Write a 500-word blog post comparing the fuel efficiency of the 2024 Honda Civic and 2024 Toyota Corolla, targeting first-time car buyers.”
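One way to make specificity a habit is to build prompts from a template whose blanks must all be filled in. The `writing_prompt` helper below is a hypothetical sketch, not a standard API: it rejects empty fields instead of letting the model guess, and with the values from the “Good” example it reproduces that prompt word for word.

```python
def writing_prompt(*, content_type: str, topic: str, audience: str, length_words: int) -> str:
    """Build a writing request that always states format, length, subject, and audience."""
    fields = {"content_type": content_type, "topic": topic, "audience": audience}
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        # Fail loudly rather than send a vague prompt the model has to guess about.
        raise ValueError(f"missing details: {', '.join(missing)}")
    if length_words <= 0:
        raise ValueError("length_words must be positive")
    return f"Write a {length_words}-word {content_type} {topic}, targeting {audience}."


print(writing_prompt(
    content_type="blog post",
    topic="comparing the fuel efficiency of the 2024 Honda Civic and 2024 Toyota Corolla",
    audience="first-time car buyers",
    length_words=500,
))
```

Keyword-only arguments force each detail to be named at the call site, which makes a forgotten audience or length obvious in review.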

Use Examples

AI picks up a style faster when you show it examples, or at least describe the style concretely.

- “Write in the style of Ernest Hemingway – short, direct sentences, and a journalistic tone.”
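Showing works even better than describing when you can supply a couple of sample input/output pairs, a technique usually called few-shot prompting. The sketch below assembles such pairs into a list of role/content chat messages; that message shape is a common convention across chat APIs, but the exact format your provider expects is an assumption to check, and `few_shot_messages` and the Hemingway-style sample replies are invented for illustration.

```python
def few_shot_messages(style_note: str, examples: list[tuple[str, str]],
                      request: str) -> list[dict[str, str]]:
    """Turn a style note plus (prompt, ideal reply) pairs into a chat-style message list."""
    messages = [{"role": "system", "content": style_note}]
    for prompt, reply in examples:
        # Each pair shows the model one concrete instance of the desired style.
        messages.append({"role": "user", "content": prompt})
        messages.append({"role": "assistant", "content": reply})
    messages.append({"role": "user", "content": request})  # the real task goes last
    return messages


msgs = few_shot_messages(
    "Write in the style of Ernest Hemingway: short, direct sentences and a journalistic tone.",
    [
        ("Describe a rainy morning.",
         "Rain fell on the street. The cafe was empty. He drank his coffee and waited."),
        ("Describe a busy market.",
         "The market was loud. Men sold fish. The sun was hot on the stones."),
    ],
    "Describe a train station at night.",
)
for m in msgs:
    print(f"{m['role']}: {m['content']}")
```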

Break It Down

Don’t ask for everything in one go.

- “First, summarize this article. Second, pull out the three main arguments. Third, write a conclusion based on those points.”
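The three-step request can also be run as a chain of separate calls, where each step receives only the previous step’s output. In this sketch `call_model` is a placeholder for whatever client function sends a prompt to your LLM and returns its text; it is an assumption, not a real library call, so the usage below plugs in a hypothetical `fake_model` that just reports which instruction it received.

```python
from typing import Callable


def run_chain(article: str, call_model: Callable[[str], str]) -> dict[str, str]:
    """Summarize, extract arguments, then conclude, feeding each output into the next step."""
    summary = call_model(f"Summarize this article:\n\n{article}")
    arguments = call_model(f"Pull out the three main arguments from this summary:\n\n{summary}")
    conclusion = call_model(f"Write a conclusion based on these arguments:\n\n{arguments}")
    return {"summary": summary, "arguments": arguments, "conclusion": conclusion}


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call: echoes the first line of the prompt it received."""
    return f"[response to: {prompt.splitlines()[0]}]"


result = run_chain("(article text goes here)", fake_model)
for step, text in result.items():
    print(f"{step}: {text}")
```

Splitting the work this way lets you inspect or correct each intermediate result before it feeds the next step, at the cost of extra model calls.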

Avoid Overly Broad Questions

- Instead of “What will the future be like?” ask, “What are the top three AI trends impacting fintech in 2024, based on Gartner reports?”

Common Pitfalls to Avoid

- Information Overload: Yes, be specific, but don’t drown the AI in irrelevant details.

- Ignoring Knowledge Cutoffs: Most LLMs have fixed training data. Always fact-check time-sensitive info.

- Assuming Implicit Understanding: AI isn’t human. Spell out your expectations clearly.
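For the information-overload pitfall, one simple guard is a fixed budget for supporting material. The sketch below assumes the chunks are already sorted most-relevant first and uses word count as a rough proxy for tokens (models actually count tokens, so treat the budget as approximate); `fit_to_budget` is a hypothetical helper, not a library function, and the sample chunks are placeholders.

```python
def fit_to_budget(chunks: list[str], budget_words: int) -> list[str]:
    """Keep chunks in priority order while the total word count stays within budget."""
    kept: list[str] = []
    used = 0
    for chunk in chunks:
        size = len(chunk.split())  # word count as a rough stand-in for tokens
        if used + size > budget_words:
            continue  # too big to fit; a later, shorter chunk might still fit
        kept.append(chunk)
        used += size
    return kept


chunks = [
    "Q3 revenue grew 12 percent, driven by enterprise renewals.",
    "The full board meeting transcript runs to forty pages of discussion.",
    "Churn fell in Q3.",
]
print(fit_to_budget(chunks, budget_words=15))
```

Skipping an oversized chunk rather than stopping at it lets a short, still-relevant snippet further down the list make it in; stopping at the first overflow would be the stricter choice.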

The Future of Context Engineering

The next wave of AI won’t just be smarter – it will be more adaptable.

Emerging trends include:

- Dynamic Context Adjustment: AI that refines its understanding as the conversation progresses.

- Personalized Context Profiles: Systems that remember your tone, style, and preferences over time.

A 2024 Accenture report predicts that 75% of enterprises will adopt advanced context engineering strategies within two years. This aligns with the direction Dextralabs has taken: building scalable, context-driven AI tools for enterprises that demand precision and efficiency.

Putting It All Together

AI is no longer just a shiny tool for CTOs, IT leaders, and product managers; it’s quickly becoming a core strategic partner. But here’s the catch: it’s only as effective as the context you provide. When you feed it precise, structured, and relevant inputs, you move beyond generic outputs and unlock targeted, high-quality results that actually move the needle.

Whether you’re:

- A CTO tasked with scaling AI adoption across your enterprise,

- An IT leader looking to improve operational efficiency while reducing errors, or

- A product manager shaping the roadmap for your next AI-driven feature,

the principles of context engineering apply directly to your work. This isn’t about tinkering with prompts on the side; it’s about building a repeatable practice that turns AI into a consistent, dependable force multiplier across your business. Done well, it leads to fewer customer escalations, faster decision-making, and more innovation reaching your customers. AI success is a method, not magic. Context engineering is already changing how businesses design, build, and scale their digital initiatives, and the leaders who master it now will set the pace for their industries tomorrow.

Dextralabs can help you achieve greater precision, reliability, and economic value from AI. From large-scale LLM implementation to specialized AI agent development, our consultants are experts in building context-driven solutions that produce measurable outcomes.
