The AI landscape has evolved beyond clever prompts. According to LangChain’s 2025 State of Agent Engineering report, 57% of organizations now have AI agents in production, yet 32% cite quality as the top barrier, with most failures traced not to LLM capabilities, but to poor context management.

Enter Context Engineering—the discipline that’s replacing prompt engineering as the critical skill for building reliable AI systems. Most AI agent failures aren’t model failures; they’re context failures. When Shopify CEO Tobi Lütke described context engineering as “the art of providing all the context for the task to be plausibly solvable by the LLM,” he crystallized what top AI engineers already knew: the future isn’t about better prompts—it’s about better context systems.

At Dextralabs, we’ve transitioned from prompt engineering to context engineering across UAE, USA, and Singapore deployments, achieving a 93% reduction in agent failures and 40-60% cost savings for enterprise clients. This guide explains why context engineering matters, how it differs from traditional prompt engineering, and how Dextralabs architects production-grade AI agent systems.

From Linguistic Tricks to Cognitive Infrastructure

When ChatGPT first captured the world’s attention, everyone believed the secret sauce lay in prompt wording. LinkedIn feeds overflowed with “magic templates.” Consultants promised to unlock unlimited creativity with the right combination of words. It was exciting—but fundamentally limited.

The harsh reality emerged when companies tried moving from experimental chatbots to enterprise-grade systems. According to research from MIT, 95% of generative AI pilots fail to achieve rapid revenue acceleration. Prompts, no matter how cleverly worded, couldn’t scale to production environments where consistency, accuracy, and reliability were non-negotiable.

The problem was architectural. Prompts are fragile—change one word, and the system behaves differently. They rely on linguistic precision rather than structural logic. As enterprises discovered, models forget context, drift from instructions, and misinterpret nuances unless you rebuild the entire context every single time.

Context engineering emerged as the solution: instead of constantly rephrasing prompts, organizations began building frameworks that maintain meaning through memory, metadata, and intelligent information architecture.

The Shift That’s Reshaping Enterprise AI

The numbers tell a compelling story. According to McKinsey data, 78% of organizations now use AI in at least one business function, and generative AI specifically has reached 71% enterprise adoption. But adoption alone doesn’t guarantee success.

What separates successful implementations from failed experiments? The answer lies in how organizations structure the context around their AI systems. Rather than treating each query as a standalone interaction, context engineering treats the AI system as an environment where information flows intelligently, memories persist meaningfully, and reasoning remains stable across thousands of queries.

What is Context Engineering? The Paradigm Shift

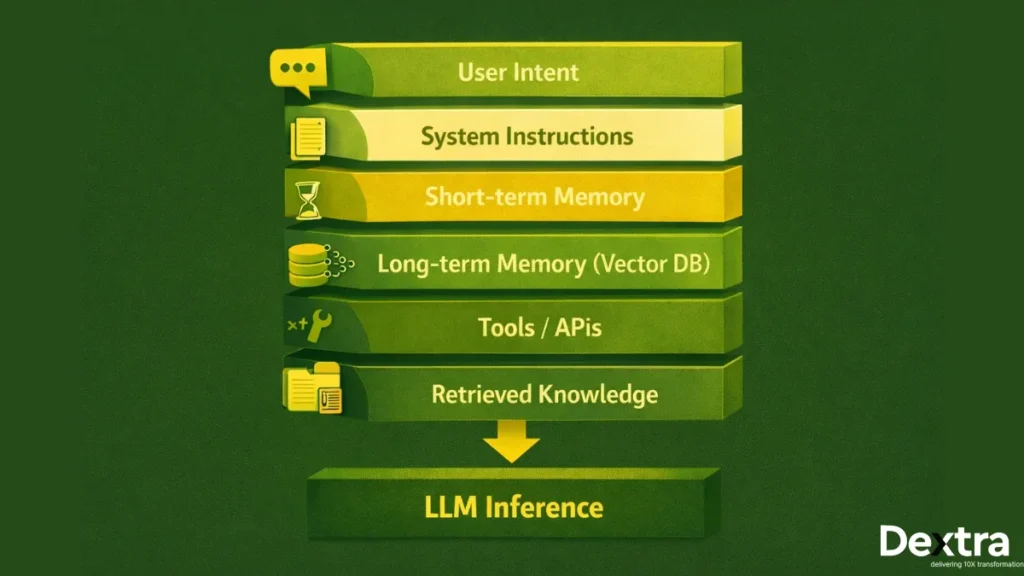

Context Engineering is the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, to give an LLM everything it needs to accomplish a task.

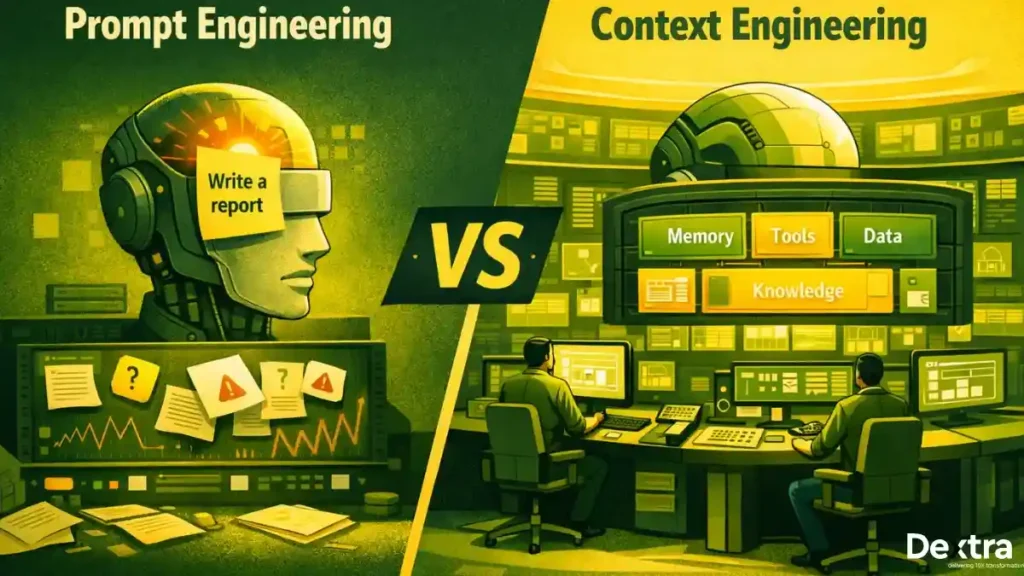

Context Engineering vs. Prompt Engineering

| Aspect | Prompt Engineering | Context Engineering |
| --- | --- | --- |
| Focus | Writing clever instructions | Designing entire information environment |
| Scope | Single prompt string | System that generates context dynamically |
| Approach | Creative writing / copywriting | Software architecture / systems design |
| Scale | One-shot tasks | Production systems with consistency |
| Effort | Minutes to hours | Days to weeks of system design |
| Reliability | Varies with input changes | Consistent across scenarios |
| Components | Text instructions only | Instructions + memory + tools + data + format |

According to Anthropic’s engineering team: “Context engineering refers to the set of strategies for curating and maintaining the optimal set of tokens (information) during LLM inference, including all the other information that may land there outside of the prompts.”

The Critical Insight

Andrej Karpathy (ex-OpenAI) defined it perfectly: “Context engineering is the delicate art and science of filling the context window with just the right information for the next step.”

Prompt engineering is what you do inside the context window.

Context engineering is how you decide what fills the window.
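
As a rough illustration of "deciding what fills the window," the sketch below selects context items by priority until a token budget is exhausted. It is a minimal sketch under stated assumptions, not a reference implementation: `ContextItem`, `build_context`, and the word-count token estimate are all hypothetical names and simplifications introduced here.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    source: str    # hypothetical label, e.g. "instructions" or "retrieved_doc"
    text: str
    priority: int  # lower number = more important


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def build_context(items: list[ContextItem], budget: int) -> str:
    """Keep the highest-priority items that still fit within the token budget."""
    chosen: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            chosen.append(item)
            used += cost
    return "\n\n".join(f"[{i.source}]\n{i.text}" for i in chosen)


items = [
    ContextItem("instructions", "Answer using only the provided policy text.", 0),
    ContextItem("retrieved_doc", "Refunds are accepted within 30 days of purchase.", 1),
    ContextItem("chat_history", "User greeted the assistant and asked about shipping times last week.", 2),
]
context = build_context(items, budget=16)
print(context)
```

With a budget of 16 estimated tokens, the instructions and the retrieved document fit, while the lower-priority chat history is left out. A production system would swap in a real tokenizer and a smarter selection policy.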

Why RAG Is Context Engineering’s Secret Weapon

Instead of depending on memoryless prompts, modern systems pull just-in-time context from curated knowledge bases. Studies show that RAG-powered tools reduced diagnostic errors by 15% in healthcare applications compared to traditional AI systems.

RAG isn’t just about accuracy. It’s about continuity: AI systems that remember what matters, discard what doesn’t, and maintain coherence across conversations, departments, and time horizons.
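
To make "just-in-time context" concrete, here is a minimal retrieval sketch. It assumes a bag-of-words similarity as a stand-in for a real embedding model and vector database; the function names (`vectorize`, `retrieve`, `build_prompt`) and the sample knowledge base are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Bag-of-words stand-in for a real embedding model (assumption).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Only the retrieved passages enter the context, not the whole knowledge base.
    passages = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Use only these passages:\n{passages}\n\nQuestion: {query}"


knowledge_base = [
    "Refunds are accepted within 30 days of purchase.",
    "Standard shipping takes five business days.",
    "Support is available by email on weekdays.",
]
top = retrieve("Are refunds accepted after purchase?", knowledge_base, k=1)
print(top)
```

The design point is that the knowledge base can grow without growing the prompt: only the top-ranked passages are placed in the window for each query.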

The Multi-Model Reality: Context Engineering at Scale

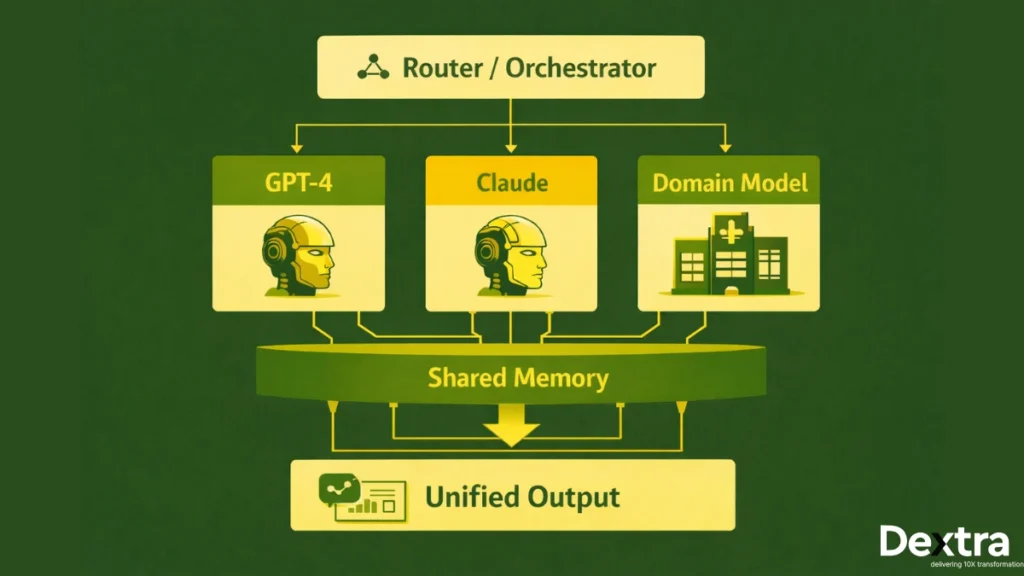

The enterprise LLM landscape has evolved into a multi-model ecosystem. Research from Menlo Ventures reveals that 37% of enterprises now use 5 or more models in production environments. Different models excel at different tasks—GPT-4 for complex reasoning, Claude for nuanced understanding, specialized models for domain-specific applications.

This multi-model reality demands sophisticated context orchestration. Organizations need systems that can:

- Route queries to the optimal model based on task complexity

- Maintain consistent context across model switches

- Aggregate insights from multiple models

- Ensure compliance and security across the entire stack
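
The first two requirements above, routing by complexity and keeping context consistent across model switches, can be sketched as follows. This is an illustrative sketch only: the model names are placeholders rather than real model identifiers, and the keyword heuristic in `classify` is an assumption standing in for a proper complexity classifier.

```python
from dataclasses import dataclass, field


@dataclass
class SharedContext:
    # Context that travels with the conversation regardless of which model answers.
    history: list[str] = field(default_factory=list)


# Hypothetical model tiers; placeholder names, not real model identifiers.
ROUTES = {"simple": "small-fast-model", "complex": "large-reasoning-model"}

COMPLEX_HINTS = ("analyze", "compare", "explain why", "step by step")


def classify(query: str) -> str:
    # Heuristic (assumption): long or analytical queries count as "complex".
    q = query.lower()
    if len(q.split()) > 30 or any(hint in q for hint in COMPLEX_HINTS):
        return "complex"
    return "simple"


def route(query: str, ctx: SharedContext) -> str:
    """Pick a model tier and log the decision in the shared context."""
    model = ROUTES[classify(query)]
    ctx.history.append(f"{model}: {query}")
    return model


ctx = SharedContext()
print(route("What is our refund window?", ctx))
print(route("Compare Q1 and Q2 churn and explain why it changed.", ctx))
```

Because both calls write to the same `SharedContext`, whichever model handles the next turn sees the full history, not just the turns it answered itself.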

Context engineering isn’t just a technical discipline—it’s a strategic capability that determines whether AI initiatives deliver measurable ROI or become expensive experiments.

From Experimentation to Production: The Dextralabs Approach

The gap between AI pilot programs and production systems is where most organizations falter. Context engineering bridges this gap by addressing the fundamental requirements of enterprise AI:

Scalability: Context architectures must handle millions of queries while maintaining consistency. We design systems that scale from proof-of-concept to enterprise-wide deployment without architectural rewrites.

Reliability: Enterprise systems can’t afford fragile prompts. Our context engineering approach uses structured data pipelines, vector databases, and intelligent caching to ensure stable, predictable outputs.

Compliance: Financial services, healthcare, and legal sectors require audit trails, explainability, and regulatory compliance. Context engineering enables these requirements by making the reasoning process transparent and traceable.

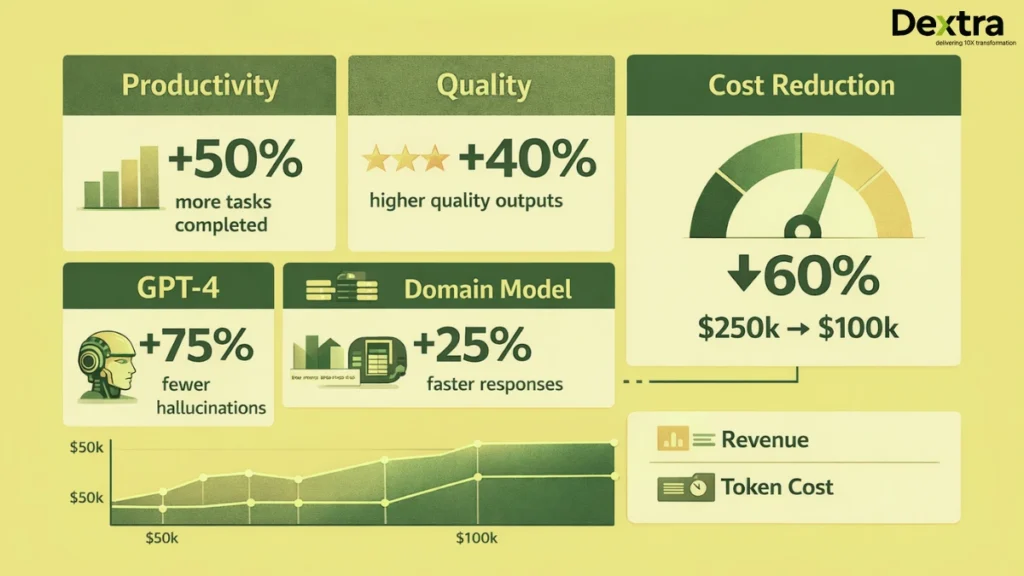

Cost Efficiency: According to industry data, 37% of enterprises spend over $250,000 annually on LLMs, with 73% spending over $50,000 yearly (MoneyControl). Smart context engineering dramatically reduces token consumption through intelligent caching, context compression, and optimized retrieval.
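
One way caching reduces token consumption is by reusing answers for repeated context and query pairs. The sketch below assumes exact-match caching on a normalized query; `ResponseCache` and `fake_model` are hypothetical names, and `fake_model` stands in for a real LLM call.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Reuse answers for repeated (context, query) pairs instead of paying for the same tokens twice."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(context: str, query: str) -> str:
        # Normalize case and whitespace so trivially different queries share a key.
        normalized = " ".join(query.lower().split())
        return hashlib.sha256(f"{context}\x00{normalized}".encode()).hexdigest()

    def ask(self, context: str, query: str) -> str:
        key = self._key(context, query)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._call_model(f"{context}\n\n{query}")
        self._store[key] = answer
        return answer


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call (assumption).
    return f"answer({len(prompt)} chars)"


cache = ResponseCache(fake_model)
first = cache.ask("policy text", "What is the refund window?")
second = cache.ask("policy text", "  what is the REFUND window? ")
print(cache.hits, cache.misses)
```

Exact-match caching only helps with literal repeats. Semantic caching and context compression go further, but they need similarity thresholds and quality checks that are out of scope for this sketch.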

Building Context-Aware Systems: Practical Implementation

At Dextralabs, our LLM prompt engineering and consulting services go beyond traditional prompt optimization. We help enterprises build comprehensive context engineering frameworks that include:

Semantic Layer Design: Structuring your organization’s knowledge so AI systems can access the right information at the right granularity.

Memory Architecture: Implementing short-term and long-term memory systems that maintain conversational continuity and learn from interactions.

Retrieval Optimization: Deploying vector databases, hybrid search systems, and intelligent re-ranking to ensure context relevance.

Multi-Agent Orchestration: Coordinating multiple AI agents that share context while maintaining specialized capabilities.

Monitoring and Observability: Building systems that provide visibility into context utilization, retrieval performance, and model behavior.
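
As an illustration of the memory architecture item above, the following sketch separates a rolling short-term window from a keyed long-term store. It is a simplified sketch, not how any particular deployment works: `AgentMemory` and its methods are hypothetical, and the substring match in `context_for` is an assumption standing in for real retrieval.

```python
from collections import deque


class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        # Short-term: rolling window of recent turns; older turns drop off automatically.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: durable facts keyed by topic, kept across sessions.
        self.long_term: dict[str, str] = {}

    def add_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def remember(self, topic: str, fact: str) -> None:
        self.long_term[topic] = fact

    def context_for(self, query: str) -> str:
        # Include only long-term facts whose topic keyword appears in the query.
        q = query.lower()
        facts = [fact for topic, fact in self.long_term.items() if topic in q]
        return "\n".join(["Facts:", *facts, "Recent turns:", *self.short_term])


memory = AgentMemory(short_term_size=2)
for turn in ("t1", "t2", "t3"):
    memory.add_turn(turn)
memory.remember("refund", "Refund window is 30 days.")
memory.remember("shipping", "Shipping takes five days.")
print(memory.context_for("What is the refund policy?"))
```

The short-term window keeps conversational continuity cheap, while the long-term store contributes only the facts relevant to the current query.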

The Business Impact: Measurable Results

The shift from prompt engineering to context engineering delivers tangible business outcomes. Research from Harvard Business School found that groups using AI completed 12.2% more tasks on average, finished them 25.1% faster, and produced 40% higher-quality results than those without AI assistance (KDnuggets).

These aren’t abstract improvements—they’re bottom-line impacts that justify AI investment and demonstrate clear ROI.

The Future of Context Engineering: 2026 Trends

1. Agentic Context Evolution

Recent research on ACE (Agentic Context Engineering) shows agents that can evolve their own contexts:

Self-Improving Context

- Agents learn from successes and failures

- Automatically update instructions based on outcomes

- Build domain-specific heuristics over time

Results: On the AppWorld leaderboard (Sept 2025), ReAct + ACE achieved 59.4% accuracy, nearly matching the top-ranked production IBM system (60.3%), despite using a smaller open-source model.
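
The general idea of a context that updates itself from outcomes can be sketched as a small "playbook" of heuristics with success and failure counters. This is a loose illustration of the concept, not the ACE method itself; `Heuristic`, `Playbook`, and the keep-if-helped-more-than-hurt rule are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Heuristic:
    text: str
    helped: int = 0
    hurt: int = 0


@dataclass
class Playbook:
    heuristics: dict[str, Heuristic] = field(default_factory=dict)

    def record(self, text: str, success: bool) -> None:
        """Update a heuristic's counters after a task that used it."""
        h = self.heuristics.setdefault(text, Heuristic(text))
        if success:
            h.helped += 1
        else:
            h.hurt += 1

    def render(self) -> str:
        # Only heuristics that helped more often than they hurt reach the prompt.
        keep = [h for h in self.heuristics.values() if h.helped > h.hurt]
        keep.sort(key=lambda h: h.helped - h.hurt, reverse=True)
        return "\n".join(f"- {h.text}" for h in keep)


playbook = Playbook()
playbook.record("Check the API docs before calling a tool.", success=True)
playbook.record("Check the API docs before calling a tool.", success=True)
playbook.record("Retry immediately on failure.", success=False)
print(playbook.render())
```

The rendered playbook would be injected into the agent's instructions on the next run, so guidance that keeps failing gradually disappears from the context.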

2. Multi-Modal Context Engineering

Beyond Text

- Image context: Visual information integrated with text

- Video context: Temporal reasoning across frames

- Audio context: Speech, tone, environmental sounds

- Sensor data: IoT, telemetry, real-time metrics

3. Federated Context Engineering

Privacy-Preserving Context

- Distributed context across multiple organizations

- Secure multi-party computation

- Differential privacy guarantees

- Encrypted query processing

Use Case: A financial consortium sharing fraud patterns without exposing customer data.

Why This Matters for Your Enterprise

If you’re a CTO, technical leader, or innovation executive considering AI implementation, the distinction between prompt engineering and context engineering isn’t academic—it’s the difference between pilot programs that never scale and production systems that deliver measurable value.

The enterprise AI market is experiencing explosive growth, with companies spending $37 billion on generative AI in 2025, up from $11.5 billion in 2024—a 3.2x year-over-year increase (Menlo Ventures). But spending alone doesn’t guarantee success. The organizations that will win are those building the right infrastructure.

Partner with Experts Who Understand the Shift

At Dextralabs, we don’t just consult; we collaborate to build lasting AI capabilities within your team. We specialize in LLM optimization, custom model deployment, RAG implementation, and complete MLOps infrastructure for enterprises and SMEs across the UAE, USA, and Singapore.

Whether you need to optimize your current AI systems, deploy new LLM-powered applications, or build sophisticated AI agents, we bring hands-on experience in moving AI from concept to production.

The shift from prompt engineering to context engineering changes how we think about building intelligent systems. Companies that make this shift get AI that does more than answer questions: it understands your business context, stays consistent across conversations, and delivers real value at scale.

Conclusion: From Prompts to Production-Ready AI

The transition from prompt engineering to context engineering isn’t just a technical upgrade; it’s the difference between AI experiments and AI outcomes. While clever prompts may produce impressive demos, context engineering builds production-ready AI systems that deliver consistent value at scale. Organizations investing in robust context architectures are seeing 50% improvements in response times, 40% higher quality outputs, and most importantly, measurable ROI that justifies their AI investments. With $37 billion flowing into generative AI in 2025, the competitive advantage will belong to those who architect their AI systems as thoughtfully as their core infrastructure.

At Dextralabs, we’ve guided enterprises and SMEs across the UAE, USA, and Singapore through this transformation. The pattern is clear: context engineering separates sustainable AI success from perpetual experimentation. The shift is here. The only question is whether your organization will lead it or follow it.