Generative AI is no longer a side experiment buried inside innovation teams. From 2024 through 2025 and into 2026, adoption has accelerated to the point where boards, CIOs, and founders view GenAI as an operational capability rather than a technology trend. Across sectors, companies are moving from early pilots to full-scale deployments aligned with clear business targets.

Multiple reports confirm this shift.

McKinsey’s State of AI 2025 highlights that generative AI adoption has nearly doubled in two years. The Stanford AI Index 2025 shows global private investment in generative AI reached $33.9B in 2024, up almost 19 percent from 2023. Deloitte’s 2024 Enterprise report notes that nearly every large organization surveyed has moved beyond proofs of concept, and KPMG’s Boardroom Lens 2025 indicates boards are directly scrutinizing performance, security, and ROI.

GenAI is now mainstream. The question is no longer whether to adopt it, but how to scale it responsibly and profitably.

Dextralabs supports organizations that want production-grade GenAI systems, agentic workflows, measurable outcomes, and board-ready governance. The analysis below reflects criteria we see increasingly shaping 2025 budget decisions.

The Budget Shift: From Pilots to Production Programs

A year defined by scale

Most CIOs have already passed the exploratory phase. A16Z’s CIO Budget Insights show innovation budgets have shrunk to 7 percent of GenAI spending, compared to more than 30 percent a year earlier. Companies are reducing discretionary pilots and instead funding long-term programs.

Three budget realities stand out:

GenAI spending is accelerating

- Gartner forecasts $644B in worldwide GenAI spending in 2025.

- McKinsey reports that 92 percent of enterprises plan to increase their AI budgets over the next three years.

- Most organizations have shifted procurement from “sandbox workflows” to infrastructure, data pipelines, agent platforms, and governance systems.

Internal capability building is becoming a priority

Budgets now include:

- Enterprise-safe model deployment

- Domain model fine-tuning

- Agent orchestration

- Data modernization

- Governance and audit systems

Dextralabs sees enterprises increasingly allocating budgets to durable capabilities rather than outsourced pilots or fragmented tooling.

CFOs demand clearer line-of-sight to returns

AI budgets once treated as discretionary tech exploration are now evaluated like strategic capital expenditure.

Boards want impact measured in:

- Hours saved

- Cycle-time reduction

- Cost avoidance

- Revenue contribution

Boards are asking for numbers, not narratives.

Where GenAI Is Being Deployed in 2025

Based on Deloitte, McKinsey, and AmplifAI data, enterprise adoption is concentrated in five areas.

Software development and IT operations

This is the most advanced use case.

- Teams report 25 to 40 percent faster development cycles.

- Automated test generation and documentation reduce routine effort.

- Code remediation improves security posture.

CIOs consider engineering productivity the most reliable source of realized ROI.

Customer operations and marketing

Gartner expects 80 percent of customer service and support organizations to be applying GenAI in some form by 2025.

Key applications:

- Automated support agents

- Knowledge-assisted human agents

- Personalization and campaign optimization

- Real-time content generation

Companies report shorter resolution times and more consistent customer interactions.

Financial services and risk operations

Adoption is high here because outcomes are directly measurable:

- Fraud detection performance improvements

- Enhanced AML and KYC processing

- Automated risk reporting

- More consistent credit decisioning

Most institutions combine GenAI inference with rule-based engines for predictable outputs.
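
The pairing of rules and model inference described above can be sketched in a few lines. This is a minimal illustration, not a production decisioning system: the `CreditApplication` fields, the rule thresholds, and the `model_score` input (a stand-in for a GenAI or ML risk estimate between 0 and 1) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CreditApplication:
    applicant_age: int
    annual_income: float
    requested_amount: float


def rule_engine(app: CreditApplication) -> Optional[str]:
    """Deterministic policy checks. Returns a decision, or None if no rule fires."""
    if app.applicant_age < 18:  # hypothetical eligibility rule
        return "reject"
    if app.requested_amount > 10 * app.annual_income:  # hypothetical exposure cap
        return "reject"
    return None


def decide(app: CreditApplication, model_score: float) -> str:
    """Hard rules take precedence; the model score only applies when no rule fires.

    model_score is an assumed risk estimate in [0, 1], higher meaning riskier.
    """
    ruled = rule_engine(app)
    if ruled is not None:
        return ruled
    if model_score >= 0.7:  # hypothetical review threshold
        return "manual_review"
    return "approve"
```

Because the rules run first, the same input always produces the same outcome whenever a rule applies, and the model only influences the cases the rules leave open.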

Healthcare, manufacturing, and industrial

Use cases include:

- Diagnostic decision support

- Predictive maintenance

- Quality checks

- Supply chain forecasting

- R&D literature and data synthesis

These use cases require strong governance frameworks, an area where Dextralabs supports regulated enterprises.

AI agents entering production

Deloitte predicts that one in four enterprises using GenAI will deploy AI agents in 2025.

These agents handle:

- Workflow automation

- Data retrieval

- Compliance checks

- Multi-step business processes

Boards are increasingly asking about the reliability and safety profile of agentic systems, especially when connected to operational data.

The Metrics Boards Now Expect for GenAI Adoption

KPMG’s board survey highlights a clear shift: leadership teams want detailed measurement frameworks, not generic dashboards.

Here are the metrics gaining the most traction.

Financial contribution

Boards require:

- Cost per outcome

- Cost per generated unit of work

- Contribution to margins

- Impact on revenue (e.g., upsell, retention, expansion)

In many enterprises, GenAI is now evaluated with the same rigor as ERP or CRM modernization initiatives.
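
As a rough illustration of the first and third metrics in the list above, the sketch below computes cost per outcome and a simple net contribution figure. The function names and the input figures in the usage note are hypothetical; a real model would follow the finance team's own cost allocation rules.

```python
def cost_per_outcome(total_cost: float, successful_outcomes: int) -> float:
    """Total program cost divided by the number of completed outcomes."""
    if successful_outcomes <= 0:
        raise ValueError("successful_outcomes must be positive")
    return total_cost / successful_outcomes


def net_contribution(revenue_uplift: float, cost_avoided: float, total_cost: float) -> float:
    """Revenue uplift plus avoided cost, minus what the program cost to run."""
    return revenue_uplift + cost_avoided - total_cost
```

For example, a program that costs 12,000 in a month and resolves 4,000 tickets has a cost per outcome of 3.0 per ticket.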

Operational performance

CIOs are expected to report on:

- Reduction in cycle times

- Throughput generated by AI systems

- Time saved per function

- Case handling or ticket handling improvements

- Reduction in manual rework

These metrics show whether AI actually changes how work gets done.

Workforce productivity

Boards increasingly ask:

- How much of a team’s workload is supported by GenAI?

- Are employees actually using AI tools consistently?

- Has collaboration between teams improved?

Skill adoption and workflow incorporation are critical indicators.

Customer outcomes

Customer-side impact includes:

- CSAT improvements

- Faster response times

- Reduction in human escalations

- Personalization accuracy

These metrics connect AI to experience quality.

Governance, safety, and risk monitoring

Only 46 percent of enterprises have an AI governance policy; even fewer enforce it.

Boards want visibility into:

- Data access monitoring

- Policy compliance

- Model drift

- Error and hallucination rates

- Bias and fairness assessments

- Traceability and audit logs

Dextralabs helps companies establish governance systems that are practical, enforceable, and aligned with reporting obligations.
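
One way to produce the error and hallucination rates listed above is to have reviewers label a sample of outputs and compare the resulting rates with agreed limits. The sketch below assumes three hypothetical reviewer verdicts and hypothetical limits; it is an illustration of the reporting arithmetic, not a complete evaluation pipeline.

```python
from collections import Counter
from typing import Dict, List

VERDICTS = ("ok", "error", "hallucination")  # hypothetical reviewer labels


def review_rates(verdicts: List[str]) -> Dict[str, float]:
    """Share of sampled outputs per reviewer verdict."""
    if not verdicts:
        raise ValueError("need at least one reviewed output")
    unknown = set(verdicts) - set(VERDICTS)
    if unknown:
        raise ValueError(f"unknown verdicts: {sorted(unknown)}")
    counts = Counter(verdicts)
    return {v: counts[v] / len(verdicts) for v in VERDICTS}


def breaches(rates: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Names of verdicts whose observed rate exceeds the agreed limit."""
    return [name for name, limit in limits.items() if rates.get(name, 0.0) > limit]
```

Tracking the same sample-based rates each quarter also gives a simple view of drift: a rate that climbs between reviews is a signal to investigate.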

Environmental footprint

Stanford’s 2025 AI Index shows rising concern about the energy impact of large-scale GenAI workloads.

Boards are beginning to track:

- Energy cost

- Emissions intensity

- Efficiency of model selection (frontier vs. domain-specific)

These metrics will likely become standard by 2026.

What Are the Organizational Bottlenecks Slowing GenAI Adoption?

The biggest constraints highlighted in McKinsey, Deloitte, and KPMG reports are not technological.

1. Skill shortages

Teams lack:

- AI solution architects

- Data engineers familiar with vector stores and streaming architectures

- Governance and risk specialists

- Prompt and systems designers for enterprise workflows

Skill gaps remain one of the highest-ranked barriers.

2. Cultural resistance

Managers often struggle to adopt AI-ready workflows.

Common issues:

- Inconsistent tool usage

- Fear of displacement

- Siloed decision-making

- Slow cross-functional alignment

3. Confusion over ownership

Companies are still defining whether AI should sit under:

- CIO

- CDO

- COO

- A cross-functional CoE

This creates delays in approvals and design.

4. Fragmented data foundations

Poor data readiness leads to:

- Weak model outputs

- Inconsistent retrieval performance

- Limited automation potential

Dextralabs helps organizations build the data backbone needed for reliable GenAI adoption.

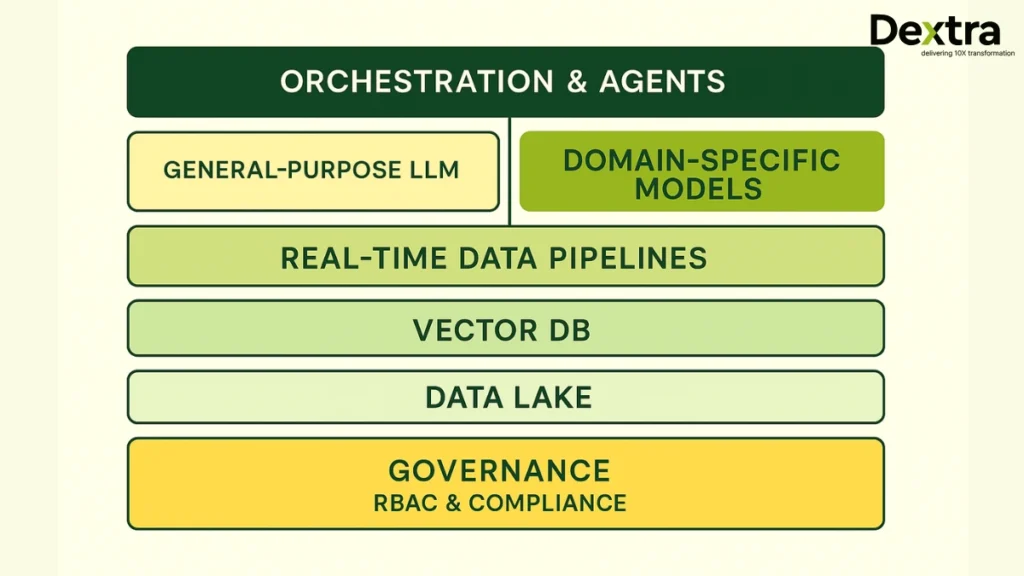

How Enterprises Are Structuring Their GenAI Architecture for 2025

A production-grade deployment typically includes:

A. A shared orchestration layer

To coordinate multiple models, agents, and workflows.

B. General-purpose models for broad tasks

Used for reasoning, writing, summarization, and instruction following.

C. Domain-specific models for specialized work

Lower latency and cost; improved accuracy for legal, risk, healthcare, or finance.

D. Real-time data pipelines

Reliable streaming data and vector retrieval systems.

E. Comprehensive governance

Including:

- RBAC

- Audit logs

- Compliance filters

- Red-team testing

This stack mirrors what Dextralabs implements for clients executing multi-department AI systems.
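
To make the interaction between the orchestration layer (A) and the governance controls (E) concrete, here is a minimal sketch in which every request passes an RBAC check and is written to an audit log before it reaches a model. The roles, routes, and in-memory log are assumptions for illustration; the lambdas stand in for real model clients.

```python
import json
import time
from typing import Callable, Dict, List, Set

# Hypothetical role-to-route permissions (RBAC).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"general"},
    "risk_officer": {"general", "risk"},
}

# Stand-ins for model clients; a real deployment would call model endpoints here.
MODELS: Dict[str, Callable[[str], str]] = {
    "general": lambda prompt: f"[general model] {prompt}",
    "risk": lambda prompt: f"[risk model] {prompt}",
}

AUDIT_LOG: List[str] = []  # in-memory stand-in for an append-only audit store


def handle_request(user: str, role: str, route: str, prompt: str) -> str:
    """Check permissions, record the attempt, then dispatch to the chosen model."""
    allowed = route in ROLE_PERMISSIONS.get(role, set())
    # Log every attempt, including denied ones, so reviews can see them.
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "user": user,
        "role": role,
        "route": route,
        "allowed": allowed,
    }))
    if not allowed:
        raise PermissionError(f"role {role!r} may not use route {route!r}")
    return MODELS[route](prompt)
```

Placing the check and the log in one shared entry point means individual teams cannot bypass either when they add new use cases.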

A Practical 2025 Adoption Roadmap for Boards and CIOs

This roadmap reflects the sequence that high-performing enterprises tend to follow.

Q1: Prioritize and assess

- Identify business units with measurable workflows

- Assess data readiness

- Build ROI and feasibility scoring
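
ROI and feasibility scoring can be as simple as a weighted score per candidate workflow. The sketch below is one possible shape, assuming 1 to 5 ratings and hypothetical weights; the criteria and weights would normally be agreed with finance and business owners.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical weights; they sum to 1 so scores stay on the 1-5 rating scale.
WEIGHTS = {"roi": 0.5, "feasibility": 0.3, "data_readiness": 0.2}


@dataclass
class Candidate:
    name: str
    roi: float             # 1 (low) to 5 (high)
    feasibility: float     # 1 (hard) to 5 (easy)
    data_readiness: float  # 1 (poor) to 5 (ready)


def score(candidate: Candidate) -> float:
    """Weighted sum of the candidate's ratings."""
    return sum(weight * getattr(candidate, key) for key, weight in WEIGHTS.items())


def rank(candidates: List[Candidate]) -> List[Candidate]:
    """Candidates ordered from highest to lowest score."""
    return sorted(candidates, key=score, reverse=True)
```

A workflow with a strong ROI rating but weak data readiness can rank below a more modest one that is ready to ship, which is usually the point of scoring both dimensions together.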

Q2: Establish standards and governance

- Safety protocols

- Auditability

- Access control

- Evaluation frameworks

Q3: Deploy production AI systems

Focus on:

- 3–5 high-impact workflows

- AI agents for operational tasks

- Integration with core systems

Q4: Scale horizontally

- Expand to more departments

- Build an AI center of excellence

- Create enterprise-wide adoption training

How Dextralabs Supports Board-Level, Enterprise-Grade GenAI Adoption

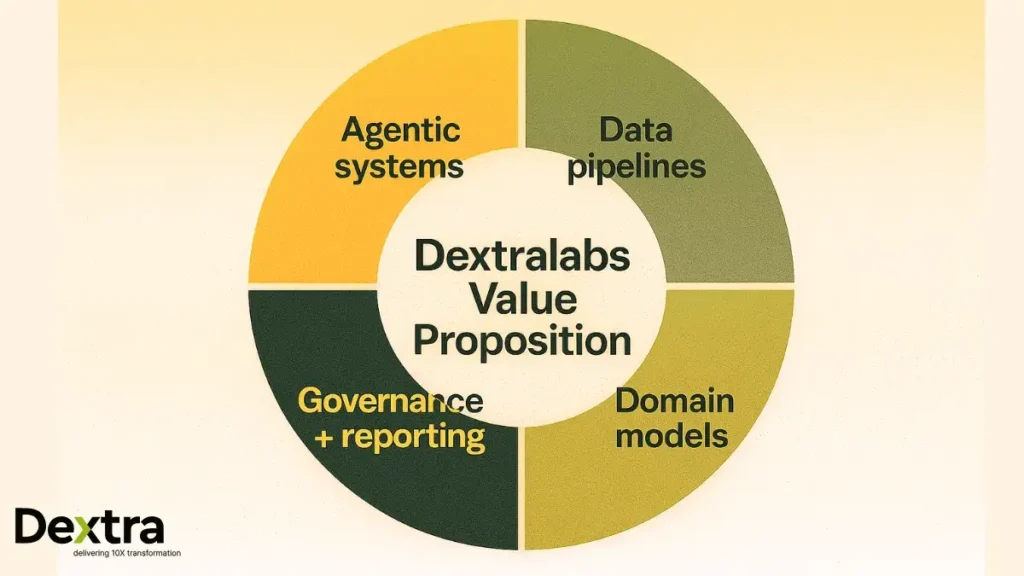

Dextralabs helps organizations move from experimentation to measurable performance through:

- Deployment of production-ready agentic systems

- Buildout of real-time data pipelines and retrieval systems

- Development of domain-specific and fine-tuned models

- Governance frameworks aligned with board expectations

- Auditability, safety controls, and reporting modules

- Operating model design, adoption playbooks, and workforce enablement

Our focus is business impact with traceability and responsible scaling.

Conclusion

GenAI is no longer a discretionary experiment.

With budgets rising, use cases expanding, and boards demanding clearer outcomes, enterprises need mature systems, reliable data pipelines, and a governance foundation that minimizes risk.

2025 rewards companies that treat GenAI like a core operational capability, not an isolated initiative. Founders, CIOs, and boards that align investment, architecture, and governance will see measurable efficiency, faster execution, and more informed decision-making across their organizations.

Dextralabs supports this shift with engineering depth, responsible AI design, and measurable performance frameworks.

FAQs:

Q. What should a board expect in a GenAI quarterly review?

A complete quarterly review typically includes:

- Business impact metrics (cycle-time, cost savings, revenue uplift)

- Model and agent performance summaries

- Governance exceptions and policy adherence

- Infrastructure cost trends and optimization actions

- Roadmap adjustments based on telemetry and new opportunities

Most organizations pair this with a risk review to maintain oversight.

Q. How does Dextralabs help enterprises reduce GenAI deployment risk?

Dextralabs supports leadership teams by:

- Defining the business case, metrics, and governance upfront

- Conducting data, architecture, and readiness assessments

- Building production-grade RAG systems, agent workflows, and secure pipelines

- Implementing executive-level reporting dashboards for ROI, risk, and performance

- Training teams to handle day-to-day AI operations, not just pilot prototypes

The focus is on reliability, traceability, and clear linkage to business KPIs.

Q. What is the expected payback period for GenAI investments?

Payback periods vary by domain, but data from AmplifAI and McKinsey indicates that:

- Customer operations and engineering productivity initiatives often pay back within 6–12 months

- Risk, compliance, and supply chain improvements typically yield returns within 12–18 months

- Large model training projects have longer payback windows, usually tied to strategic differentiation rather than immediate cost savings

Boards typically require a financial model before approving programs beyond 12 months.
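
A basic financial model for this question can start with a simple payback calculation. The sketch below assumes a one-off upfront cost and constant monthly benefit and run cost, which is a simplification; the figures in the usage note are hypothetical.

```python
import math
from typing import Optional


def payback_months(upfront_cost: float, monthly_benefit: float,
                   monthly_run_cost: float) -> Optional[int]:
    """Whole months until cumulative net benefit covers the upfront cost.

    Returns None when the program never pays back (net monthly benefit <= 0).
    """
    net_monthly = monthly_benefit - monthly_run_cost
    if net_monthly <= 0:
        return None
    return math.ceil(upfront_cost / net_monthly)
```

For example, a 300,000 upfront investment with 50,000 in monthly benefit and 20,000 in monthly run cost pays back in 10 months under these assumptions.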

Q. How can organizations prepare their workforce for GenAI adoption in 2026?

Workforce planning should cover:

- Targeted upskilling for engineers and analysts

- Clear SOPs for AI-assisted workflows

- Incentives for early adoption

- Defined escalation rules when AI outputs require human review

Deloitte’s research highlights workforce enablement as one of the strongest predictors of GenAI ROI.

Q. What’s the recommended architecture for scalable GenAI deployments?

A scalable architecture usually contains:

- Hybrid model strategy (frontier + fine-tuned + domain models)

- Retrieval-Augmented Generation (RAG) with verified sources

- Orchestration layer for policy enforcement

- Observability and evaluation pipelines (EvalOps)

- Unified governance layer with audit trails

Dextralabs frequently implements a shared platform model so that multiple business units can build use cases without duplicating foundational work.
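
To illustrate what "RAG with verified sources" can mean in practice, the sketch below restricts retrieval to an allowlist of sources before building the prompt. It is a toy example: the allowlist, the document structure, and the keyword-overlap scoring are assumptions, and a real system would typically use a vector store for retrieval.

```python
from typing import Dict, List

VERIFIED_SOURCES = {"policy_handbook", "product_docs"}  # hypothetical allowlist


def retrieve(query: str, docs: List[Dict[str, str]], k: int = 2) -> List[Dict[str, str]]:
    """Return up to k verified documents, ranked by shared query terms."""
    terms = set(query.lower().split())
    # Drop anything that does not come from an approved source.
    verified = [d for d in docs if d["source"] in VERIFIED_SOURCES]
    scored = [(len(terms & set(d["text"].lower().split())), d) for d in verified]
    scored = [(s, d) for s, d in scored if s > 0]  # ignore documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: List[Dict[str, str]]) -> str:
    """Assemble a prompt that carries the source name next to each passage."""
    cited = "\n".join(f"[{d['source']}] {d['text']}" for d in context)
    return f"Answer using only the sources below.\n{cited}\n\nQuestion: {query}"
```

Keeping the source name attached to each passage also supports the audit trail, since an answer can be traced back to the documents it drew on.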

Q. How can we quantify productivity gains from GenAI?

The recommended approach is a combination of baselines, controlled A/B tests, and telemetry. Enterprises often calculate:

- Time saved per user per workflow

- Reduction in manual steps

- Percent of tasks transitioned to automated or AI-assisted flows

McKinsey’s 2025 study shows companies that tracked productivity systematically saw clearer ROI and more confident board approvals.
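
The baseline and A/B comparison described in this answer can be sketched as follows. The example assumes task durations in minutes for a control group (no AI assistance) and a treated group (AI-assisted), and uses Welch's t statistic as one common way to judge whether the difference is more than noise; the sample values in the usage note are invented for illustration.

```python
import math
from statistics import mean, stdev
from typing import Dict, List


def time_saved_summary(control_minutes: List[float],
                       treated_minutes: List[float]) -> Dict[str, float]:
    """Compare task durations between a control group and an AI-assisted group.

    Each group needs at least two observations so a standard deviation exists.
    """
    mean_control = mean(control_minutes)
    mean_treated = mean(treated_minutes)
    saved = mean_control - mean_treated
    # Welch's t statistic: difference in means divided by its standard error.
    standard_error = math.sqrt(
        stdev(control_minutes) ** 2 / len(control_minutes)
        + stdev(treated_minutes) ** 2 / len(treated_minutes)
    )
    welch_t = saved / standard_error if standard_error > 0 else float("inf")
    return {
        "minutes_saved": saved,
        "percent_saved": 100 * saved / mean_control,
        "welch_t": welch_t,
    }
```

With control durations of 30, 32, 28, and 30 minutes and treated durations of 24, 26, 22, and 24 minutes, the summary reports 6 minutes saved per task, or 20 percent. Real studies need larger samples and comparable task mixes in both groups.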