Have you ever wondered what really happens when you type a question into ChatGPT, Bard, or any other chatbot built on a Large Language Model (LLM)? It seems almost magical; words start flowing on your screen, sentences take shape, and suddenly, you have stories, summaries, or code at your fingertips. But peel back the curtain and you’ll find something less mysterious: a bunch of smart math and adjustable “dials” that decide what comes next.

The coolest part? With a little know-how, you can play with those dials. Whether you want responses to be short and to the point, wonderfully creative, or tightly focused and predictable, it’s up to you. Think of it as being the DJ at the world’s smartest radio station: you’re in control of the mix.

At Dextralabs, we specialize in helping enterprises unlock this very control — fine-tuning LLMs to match their business goals, from conversational precision to creative adaptability.

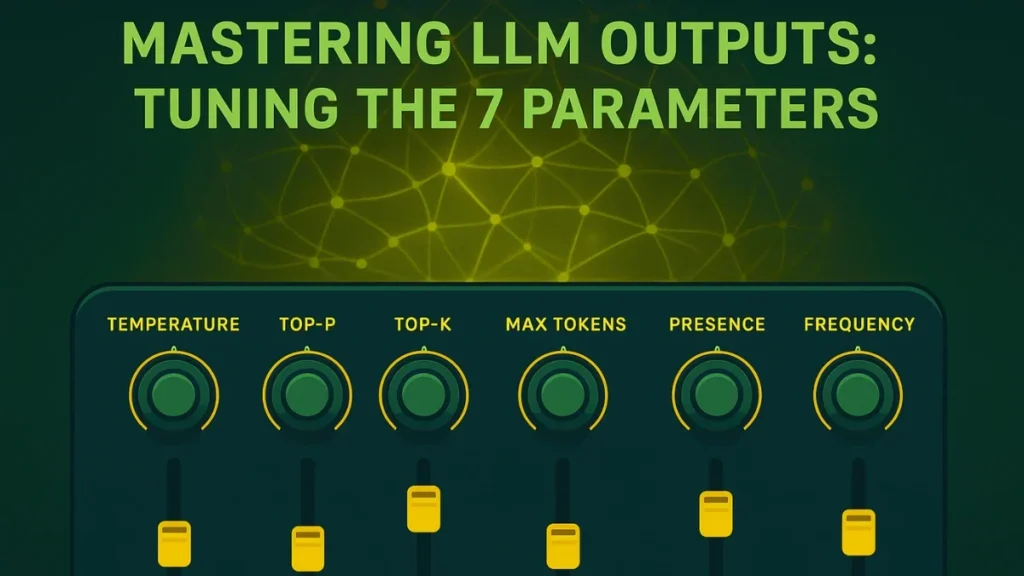

Ready to take the wheel? Let’s talk about the seven MVPs behind the scenes: max_tokens, temperature, nucleus sampling (top-p), top-k sampling, frequency_penalty, presence_penalty, and stop sequences. I’ll explain what each one does, when to change it, and how to make them all work together like a harmonious band.

The 7 Core Parameters, in Everyday Language

Whether you’re experimenting as an enthusiast or deploying enterprise-grade AI systems, understanding these parameters (something we emphasize in our Dextralabs AI workshops) is key to getting smarter, more efficient output from your models. Let’s break down what these settings mean and why you might want to adjust them.

| Parameter | What It Controls | When to Adjust | Example Range |
| --- | --- | --- | --- |
| max_tokens | Response length | To cap length or cost | 100–1000 |
| temperature | Randomness ("creativity") | Creative writing vs. precise answers | 0.2–1.2 |
| top_p | Diversity | Balanced output | 0.9–0.95 |
| top_k | Size of the candidate pool | Keeping output relevant | 5–50 |
| frequency_penalty | Repetition | Preventing loops | 0.1–0.5 |
| presence_penalty | Topic variety | Staying on or shifting focus | 0.2–0.6 |
| stop sequences | Where output ends | Controlling the ending | Custom strings |

1. Max Tokens (max_tokens)

Imagine texting a friend and saying, “Sum up this movie in five words!” That’s basically what max_tokens does for your LLM.

- What this is really about: It sets the limit for how much the model can say in one go, whether it’s just a tweet or a wall of text. (Remember: a “token” might be a whole word or just a piece of it.)

- Why you’ll care: Want quick responses? Keep it low. Need detailed explanations? Let the model have some room. Plus, most providers charge by the token, so setting this wisely can save you money.

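- Best use: For summaries, chatbots, and time-sensitive tasks, don’t let your LLM ramble on; put a cap on it! Just remember that the cap is a hard cutoff, not an instruction the model reads: when it runs out of tokens, the reply simply stops, possibly mid-sentence. If you want short answers that still end cleanly, ask for brevity in your prompt too.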
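To make the cutoff concrete, here’s a minimal sketch that simulates what a token cap does to a reply. It’s a toy model rather than a real API call: the generate_with_budget function and the pre-split token list are hypothetical, and the "stop"/"length" labels are borrowed from the finish_reason values OpenAI’s API reports.

```python
# Toy simulation (not a real API call): the model "plans" a full reply,
# but generation halts as soon as the max_tokens budget is spent.

def generate_with_budget(planned_tokens: list[str], max_tokens: int) -> tuple[str, str]:
    """Emit tokens until the planned reply ends or the budget runs out.

    Returns the text plus a finish reason: "stop" if the reply ended
    naturally, "length" if max_tokens cut it off.
    """
    emitted = planned_tokens[:max_tokens]
    finish_reason = "length" if len(planned_tokens) > max_tokens else "stop"
    return "".join(emitted), finish_reason


# Hypothetical tokenization; real tokenizers split text differently.
reply = ["The", " movie", " follows", " a", " heist", " gone", " wrong", "."]

print(generate_with_budget(reply, max_tokens=20))  # ('The movie follows a heist gone wrong.', 'stop')
print(generate_with_budget(reply, max_tokens=4))   # ('The movie follows a', 'length')
```

The model never “knows” the budget, so a tight cap trims the sentence rather than producing a shorter one.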

2. Temperature

Think of temperature as the “crazy switch.” The higher you go, the wilder the model gets with its word choices.

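- What’s happening: Temperature rescales the model’s scores for each candidate word before they’re turned into probabilities. As it rises, the odds even out and unexpected or unusual words get picked more often. Set it low and the most likely words dominate, giving you reliable, sometimes boring answers, which is great for technical stuff.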

- The range: On OpenAI’s API, 0.0 (as safe and steady as it gets) to 2.0 (let’s see what happens). Other providers differ; Anthropic’s Claude, for instance, tops out at 1.0.

- When should you play with it?

  - Low values (0.1 – 0.5): Use when you want clear, direct answers: think instructions, code, or facts.

  - High values (0.8 – 1.2): Try it for stories, brainstorming, or when you’d like the LLM to surprise you a little.
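Under the hood, temperature divides the model’s raw scores (logits) before the softmax function converts them into probabilities. This minimal sketch uses made-up scores for four candidate words; the function name and numbers are illustrative, and real models do this over vocabularies of tens of thousands of tokens.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw model scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        # Many APIs treat 0 as "always pick the top word"; that's a separate code path.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for four candidate next words.
words = ["cat", "dog", "ferret", "dragon"]
logits = [2.0, 1.0, 0.5, -1.0]

for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(words, probs)})
```

At 0.2, “cat” soaks up nearly all of the probability; at 1.5, long shots like “dragon” get a noticeably better chance.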

3. Nucleus Sampling (top_p)

Let’s say you want a bit of fun in your answers, but not total mayhem. That’s where top_p comes in.

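- In plain English: With nucleus sampling, the model ranks the candidate next words by probability and keeps only the smallest group whose probabilities add up to at least p. With top_p = 0.9, that’s like saying: “Keep the most likely words until they cover 90% of the probability, and skip the long tail of crazy outliers.”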

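- Why mess with this: It chops off the really weird stuff, but keeps some variety in play. The pool also adapts: when the model is confident, only a handful of words make the cut; when it’s unsure, more of them do.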

- A good place to start: Try values like 0.9 or 0.95 for a mix of fun and coherence.

- Tip: OpenAI’s own API documentation recommends adjusting either temperature or top_p, not both. Pick one, test it, and see how it goes.
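Here’s a minimal sketch of the nucleus (top-p) filter on a toy distribution, showing how the pool shrinks when the model is confident and grows when it isn’t. The top_p_filter name and the probabilities are hypothetical; real implementations work on arrays of token IDs rather than dictionaries of words.

```python
def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the smallest set of most-likely words whose probabilities sum to >= p,
    then renormalize so the survivors add up to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept: list[tuple[str, float]] = []
    cumulative = 0.0
    for word, prob in ranked:
        kept.append((word, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {word: prob / total for word, prob in kept}


# Next word after "The capital of France is": the model is confident.
confident = {"Paris": 0.92, "Lyon": 0.05, "Nice": 0.02, "Rome": 0.01}
# Next word after "My favorite color is": the model is unsure.
unsure = {"blue": 0.35, "green": 0.25, "red": 0.20, "gold": 0.12, "plaid": 0.08}

print(top_p_filter(confident, p=0.9))  # {'Paris': 1.0}
print(top_p_filter(unsure, p=0.9))     # keeps blue, green, red, gold; drops plaid
```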

4. Top-k Sampling (top_k)

Picture a school spelling bee, but only the top “k” spellers get a chance to answer each time.

- How it works: The model will only choose from the k most likely next words. If k = 10, that’s it: just those 10 contenders.

- Why use it: This keeps things relevant and generally on-topic, especially if other dials (like temperature) are cranked up.

- Where to start: Try k values from 5 up to 50, depending on how safe or adventurous you’re feeling.

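- Side note: Not every API exposes top_k (OpenAI’s doesn’t, while Anthropic’s and Google’s Gemini API do), and libraries that support both settings, such as Hugging Face transformers, typically apply top_k before top_p. Don’t be afraid to experiment.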
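A minimal sketch of top-k filtering follows. Unlike top-p, the pool is always exactly k words, however confident or unsure the model is. The top_k_filter function and the probabilities are hypothetical.

```python
def top_k_filter(probs: dict[str, float], k: int) -> dict[str, float]:
    """Keep only the k most likely words, then renormalize so they sum to 1."""
    if k < 1:
        raise ValueError("k must be at least 1")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(prob for _, prob in ranked)
    return {word: prob / total for word, prob in ranked}


# Hypothetical odds for the word after "The weather today is".
next_word = {"sunny": 0.40, "cloudy": 0.30, "rainy": 0.15, "purple": 0.10, "spaghetti": 0.05}

print(top_k_filter(next_word, k=3))  # keeps sunny, cloudy, rainy
```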

5. Frequency Penalty

Ever have someone repeat the same joke three times in a row? Annoying, right? Frequency penalty helps your LLM avoid that habit.

- What it does: Lowers a word’s odds in proportion to how many times it has already appeared, so the more a word gets repeated, the less likely it is to show up again.

- How it’s set: From -2.0 to 2.0 on OpenAI’s API (negative values actually encourage repetition), but usually you’ll want a gentle positive number like 0.2.

- Use cases: Super helpful for long pieces of text, to keep things fresh and interesting.
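This minimal sketch follows the formula OpenAI has described in its API documentation: subtract the penalty times the number of times a token has appeared so far from that token’s score. Other providers may implement penalties differently, and the function name and scores here are hypothetical.

```python
from collections import Counter


def apply_frequency_penalty(
    logits: dict[str, float], generated: list[str], penalty: float
) -> dict[str, float]:
    """Subtract penalty * (times the token has appeared so far) from each token's score."""
    counts = Counter(generated)  # missing tokens count as 0
    return {tok: score - penalty * counts[tok] for tok, score in logits.items()}


# Hypothetical scores and history: "cheese" has already been said three times.
logits = {"cheese": 3.0, "bread": 2.5, "wine": 2.0}
so_far = ["cheese", "cheese", "cheese", "bread"]

# A gentle 0.2 penalty: "cheese" still leads, but each further repeat costs it another 0.2.
penalized = apply_frequency_penalty(logits, so_far, penalty=0.2)
print(penalized)
```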

6. Presence Penalty

This one gently nudges the model away from topics it’s already touched or, if you like, towards them.

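- The idea: Unlike frequency penalty, this is a flat, one-time penalty. Once a word has appeared at all, its odds drop by the same amount whether it was used once or ten times, which nudges the model toward new words and, indirectly, new topics.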

- Controls: -2.0 to 2.0, just like frequency penalty.

- Pro move: If your LLM keeps circling back to “cats” throughout a long answer, boost the presence penalty and watch it branch out. Trying to stay focused on a single topic? Lower it a notch.
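For contrast with a count-based penalty, here’s a minimal sketch of a presence penalty, again following the flat-penalty formula OpenAI has described in its documentation. The function name and scores are hypothetical; note that “cats” appeared four times but is penalized no more than “dogs,” which appeared once.

```python
def apply_presence_penalty(
    logits: dict[str, float], generated: list[str], penalty: float
) -> dict[str, float]:
    """Subtract a flat penalty from every token that has appeared at least once."""
    seen = set(generated)
    return {tok: score - (penalty if tok in seen else 0.0) for tok, score in logits.items()}


# Hypothetical scores and history: lots of cats, one dog, no parrots yet.
logits = {"cats": 3.0, "dogs": 2.0, "parrots": 1.5}
so_far = ["cats", "cats", "cats", "cats", "dogs"]

penalized = apply_presence_penalty(logits, so_far, penalty=0.6)
print(penalized)  # the unseen "parrots" now outranks the already-mentioned "dogs"
```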

7. Stop Sequences

Want your LLM to know when enough is enough? Use a stop sequence.

- It’s simple: You say, “When you hit this special phrase, stop!” For instance, when generating lists, you might want everything to end at a specific divider.

- Why it matters: Keeps answers neat, tight, and avoids the model rambling past where you want things to finish.

- Hints: Use something unique, like <|end|> or \n\n###, so the model doesn’t stop early by mistake. Combine this with max_tokens for double safety.
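Here’s a minimal sketch of what a stop sequence does to the text you get back. It makes a simplifying assumption: real APIs check for stop sequences while tokens are being generated, so the model actually stops (and you stop paying) at that point, whereas this hypothetical apply_stop_sequences helper trims a finished string. As in OpenAI’s API, the stop sequence itself is left out of the result.

```python
def apply_stop_sequences(text: str, stops: list[str]) -> tuple[str, bool]:
    """Cut text at the earliest stop sequence, dropping the sequence itself.

    Returns the (possibly shortened) text and whether a stop sequence was hit.
    """
    cut = len(text)
    for stop in stops:
        if not stop:
            continue  # an empty stop string would match everywhere; ignore it
        idx = text.find(stop)
        if idx != -1 and idx < cut:
            cut = idx
    return text[:cut], cut < len(text)


raw = "1. Apples\n2. Pears\n\n###\nSome unrelated rambling..."
print(apply_stop_sequences(raw, ["<|end|>", "\n\n###"]))  # ('1. Apples\n2. Pears', True)
```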

Making the Magic Happen, Together

Here’s something many people miss: these dials interact. Tweaking two at once can multiply effects for better (or sometimes wackier) outputs. At Dextralabs, our engineers and AI consultants leverage these very dynamics to deploy and optimize Large Language Models at scale. We ensure your outputs aren’t just coherent, but context-aware and cost-efficient.

- Randomness: Both temperature and top_p fiddle with how surprising, original, or conservative your responses are. But don’t go wild adjusting them together at first; pick one and see how the model changes.

- Repetition (degeneration): If your LLM gets stuck in a loop (“the cheese is cheesy and so is cheese…”), try a sensible top_p (maybe 0.92) and a soft frequency penalty (like 0.2). That often does the trick.

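- Cost & speed: If you’re on a budget or want fast replies, start with max_tokens. For a snappier feel, look for streaming options that show you the answer as it’s being created. Streaming doesn’t make the full answer arrive any sooner, but seeing text right away makes the wait feel much shorter, which is way better for impatient folks like me!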

- Every model’s unique: Don’t expect a temperature of 0.8 on OpenAI’s GPT models to feel exactly the same as 0.8 on Google’s Bard or Anthropic’s Claude. Each has its own quirks, and sometimes a model will ignore or “clamp” extreme values. Peek at their docs for the details!
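To see how the dials stack up in a single sampling step, here’s a minimal sketch. The order it uses (temperature, then top-k, then top-p, then a weighted random draw) is similar to the order Hugging Face transformers applies these settings in, but hosted APIs don’t publish their exact pipelines, so treat this as an illustration rather than any provider’s implementation. The sample_next_word function and the scores are hypothetical.

```python
import math
import random
from typing import Optional


def sample_next_word(
    logits: dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick one next word: temperature -> top-k -> top-p -> weighted random draw.

    Frequency/presence penalties, if used, would adjust the logits before step 1.
    """
    rng = rng or random.Random()
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always take the top-scoring word.
        return max(logits, key=logits.get)

    # 1. Temperature: rescale the scores, then softmax them into probabilities.
    scaled = {w: s / temperature for w, s in logits.items()}
    top = max(scaled.values())
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((w, e / total) for w, e in exps.items()), key=lambda kv: kv[1], reverse=True
    )

    # 2. Top-k: keep only the k most likely words.
    if top_k is not None:
        ranked = ranked[:top_k]

    # 3. Top-p: keep the smallest group of leading words whose share of the
    #    remaining probability reaches top_p.
    if top_p is not None:
        mass = sum(p for _, p in ranked)
        kept, cumulative = [], 0.0
        for w, p in ranked:
            kept.append((w, p))
            cumulative += p / mass
            if cumulative >= top_p:
                break
        ranked = kept

    # 4. Draw one word at random, weighted by the surviving probabilities.
    words = [w for w, _ in ranked]
    weights = [p for _, p in ranked]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical scores for the word after "Let's have some".
logits = {"cheese": 2.0, "bread": 1.5, "wine": 1.0, "jam": 0.2, "gravel": -2.0}
rng = random.Random(42)
print([sample_next_word(logits, temperature=0.7, top_k=4, top_p=0.92, rng=rng) for _ in range(8)])
```

With these settings, top_k removes “gravel” and top_p then removes “jam,” so only cheese, bread, and wine can appear. Raise temperature or top_p and the long shots get more of a chance; lower them and “cheese” wins more and more often.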

Much of what makes these tweaks work comes from pioneering research: Fan et al.’s “Hierarchical Neural Story Generation” popularized top-k sampling, and Holtzman et al.’s “The Curious Case of Neural Text Degeneration” introduced nucleus (top-p) sampling, laying much of the groundwork for how we sample text from LLMs today.

Whether you’re experimenting with open models or integrating enterprise-grade LLMs, Dextralabs provides end-to-end support — from model selection and fine-tuning to secure deployment and continuous optimization.

The Bottom Line

Mastering LLMs isn’t just about what you ask; it’s about how you guide the answers. Change the parameters around and try out various combinations. As you get more comfortable with these settings, you’ll be able to shape your AI sidekick’s personality, ingenuity, and usefulness.

So don’t be scared to turn those knobs. You may find everything from a funny one-liner to a poignant essay to something insightful and unexpected. Go ahead and explore where your prompting and fine-tuning may lead you.

Want to master LLM tuning for your business?

Partner with Dextralabs, where AI expertise meets practical implementation.

Explore how we can help you deploy, fine-tune, and scale your own intelligent systems — from prompt engineering to agentic AI integration.

FAQs:

Q. Why should I care about tuning LLM parameters?

Tuning LLM parameters isn’t just about getting fancier answers — it’s about controlling how your AI thinks, speaks, and behaves. The right combination of temperature, top-p, and penalties can make your model sound more factual, creative, or brand-consistent. At Dextralabs, we help enterprises fine-tune these settings to align perfectly with their tone, compliance needs, and user experience goals.

Q. Can I use these settings to make my chatbot sound more “human”?

Yes! Adjusting parameters like temperature and presence penalty allows your chatbot to sound more conversational and less repetitive. Dextralabs specializes in creating AI agents with adaptive personalities, so your chatbot can feel natural without losing accuracy or professionalism.

Q. What’s the difference between temperature and top_p — and which one should I tweak?

Both control creativity, but in different ways.

– Temperature reshapes the odds of every candidate word, making the model more or less random overall.

– Top_p (nucleus sampling) trims the candidates down to the most likely next words, cutting off the unlikely tail.

Our experts at Dextralabs usually test both under controlled scenarios to find your ideal “creativity sweet spot,” ensuring your AI stays engaging yet reliable.

Q. How can tuning these parameters save my company money?

Capping max_tokens limits how many output tokens you pay for and how long each response takes. Because the cap simply cuts the answer off, pair it with prompts that ask for concise replies, so your LLM stays brief instead of getting truncated mid-thought. Dextralabs helps organizations balance creativity with cost-efficiency, so every token delivers business value.

Q. What if my model keeps repeating itself or looping in responses?

That’s a common issue, and one form of what researchers call “neural text degeneration.” Adjusting frequency_penalty and presence_penalty, or using nucleus sampling (top_p), can often reduce it. Dextralabs consultants regularly solve this in enterprise-grade deployments, combining parameter tuning with data curation and retrieval optimization.

Q. How does Dextralabs help enterprises with LLM tuning and deployment?

We go beyond prompt tweaks. Dextralabs offers:

– Custom LLM deployment (on cloud or on-premise)

– Parameter optimization & behavioral alignment

– Agentic AI and workflow orchestration

– Continuous monitoring & governance

Our goal is to make your AI perform like a domain expert, not just a chatbot.

Q. Do I need coding experience to experiment with these parameters?

Not necessarily. While API-level tuning requires technical access, Dextralabs provides user-friendly dashboards and guided consulting that let non-technical teams control LLM behavior safely and intuitively.

Q. Can Dextralabs help if we’re already using OpenAI, Anthropic, or Google models?

Absolutely. Dextralabs works across multiple LLM ecosystems — OpenAI (GPT-4, GPT-5), Anthropic’s Claude, Mistral, and custom fine-tuned models. We help you integrate, compare, and optimize whichever model best suits your business needs.

Q. What’s the best starting point for parameter tuning in an enterprise environment?

Start small: define your goals first (accuracy, creativity, tone). Then tweak temperature, top_p, and penalties in controlled scenarios. Dextralabs can run AI Behavior Workshops to guide your team through real-time experiments and create reusable parameter presets for your projects.

Q. How can I get started with Dextralabs for LLM tuning or AI deployment?

You can reach out via our website to schedule a free consultation or tech due diligence session. Whether you’re exploring LLM deployment, custom model training, or AI agent development, Dextralabs helps you deploy smarter systems — tuned precisely to your enterprise DNA.