The AI landscape is evolving at a staggering pace, and at the center of this transformation are open-source LLMs (Large Language Models) and their closed-source counterparts: two paths shaping the future of enterprise AI. For CTOs, CIOs, and enterprise engineering leaders, the LLM decision isn’t just technical; it’s strategic. Enterprises are asking: do we leverage the openness and flexibility of open-source LLMs, or trust the power and polish of closed-source AI solutions? The answer has lasting impacts on innovation, security, and bottom-line results.

According to Gartner AI Trends 2025, over 60% of AI-driven enterprises plan to evaluate open-source LLMs by the end of 2025. This guide from Dextralabs cuts through the noise: we lay out the nuanced trade-offs between open-source and closed-source LLMs, dig into the technical subtleties, and deliver actionable deployment best practices.

You’ll get real-world examples, a clear open-source vs closed-source decision matrix, and targeted guidance for common scenarios, so you can lead your enterprise’s AI strategy.

Understanding the Basics: Open vs Closed Source

Before going further, let’s define open-source and closed-source LLMs:

What Are Open-Source LLMs?

Open-source LLMs are large language models whose code, architecture, and often weights are publicly available to use, modify, and distribute, subject to each model’s license. Examples include Meta’s LLaMA 3, Mistral, Falcon, and BigScience’s BLOOM (hosted on Hugging Face). This means your teams can inspect, audit, fine-tune, and even re-architect core components. You choose your LLM deployment environment: air-gapped, on-premises, or sovereign cloud. With active open-source communities, you’re never building in isolation. But you’re also responsible for operationalizing, scaling, and securing the model on your terms.

Open source LLM ecosystem highlights:

- LLaMA 3: Available in sizes up to 70B parameters, with strong coding and natural-language performance; well suited to custom apps and widely regarded as one of the best open-source LLMs.

- Mistral: Praised for efficiency; production-grade even on resource-limited infrastructure.

- Falcon: Popular among healthcare and EU enterprises for flexible licensing.

What Are Closed-Source LLMs?

Closed-source LLMs are black-box offerings. Vendors like OpenAI (GPT-4), Anthropic (Claude), and Google (Gemini) tightly guard model internals, exposing inference primarily through APIs. You don’t see the code or the weights, but you do get robust SLAs, streamlined integrations, and (often) best-in-class performance for language, reasoning, and multilingual tasks. Enterprises gain predictable upgrade cycles and vendor-validated security compliance.

Closed source LLM powerhouses:

- GPT-4: Market-leading accuracy for reasoning, summarization, and context understanding.

- Claude: Known for robust safety guardrails and enterprise-centric privacy design.

- Gemini: Google’s scalable LLM, excels at search, knowledge retrieval, and cloud integration.

Comparison Table: Open Source vs Closed Source LLMs

If you’re looking for an open-source vs closed-source LLM comparison, the table below summarizes the key differences:

| Criteria | Open-Source LLMs | Closed-Source LLMs |
| --- | --- | --- |
| Access | Full access to architecture & weights | Usage via managed APIs/platforms |
| Cost | No/low licensing, infra costs scale with use | API subscription or per-token pricing |
| Customisation | Fine-tune models, alter tokenizers | Minimal (prompt tuning, limited extensions) |
| Security | Complete data control, but internal risk | Vendor-managed, externally audited |
| Support | Community, optional enterprise add-ons | Dedicated commercial support/SLA |
| Vendor Lock-In | None | High; migration can be resource-intensive |
| Best For | R&D, internal apps, regulated industries | Public apps, multilingual, rapid deployment |

Key Considerations Before Choosing:

Here are the key considerations to weigh when choosing between open-source and closed-source LLMs:

1. Budget & Cost Optimisation

Open-source LLMs can help you avoid recurring per-token or seat license fees. However, running them in commercial production demands serious infrastructure: GPUs or TPUs, security-hardened clusters, and continuous integration with your operational stack.

Example: One Fortune 100 telecom reduced conversational AI TCO by 40% using LLaMA 3 on custom hardware, but only after investing in an in-house MLOps team.

Closed-source LLMs offer out-of-the-box scalability with consumption-based pricing that is predictable per request. For some enterprises, these costs balloon at scale. For others, especially startups, API access means faster prototyping with less up-front investment.
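To make this trade-off concrete, the break-even point between per-token API pricing and fixed self-hosted infrastructure can be estimated with simple arithmetic. The sketch below is illustrative only: every price and volume is a hypothetical placeholder rather than a quote from any vendor, the function names are our own, and staffing costs (such as the in-house MLOps team in the example above) are left out.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Consumption-based cost: scales linearly with token volume."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def monthly_self_hosted_cost(gpu_count: int, usd_per_gpu_hour: float,
                             hours_per_month: float = 730.0) -> float:
    """Fixed infrastructure cost: GPUs are paid for whether or not they are busy."""
    return gpu_count * usd_per_gpu_hour * hours_per_month


def break_even_tokens(gpu_count: int, usd_per_gpu_hour: float,
                      usd_per_million_tokens: float) -> float:
    """Monthly token volume at which both options cost the same."""
    fixed = monthly_self_hosted_cost(gpu_count, usd_per_gpu_hour)
    return fixed / usd_per_million_tokens * 1_000_000


# Hypothetical inputs: 4 GPUs at $2.50/hour versus an API at $10 per million tokens.
threshold = break_even_tokens(gpu_count=4, usd_per_gpu_hour=2.50,
                              usd_per_million_tokens=10.0)
print(f"Break-even at about {threshold / 1e6:.0f} million tokens per month")
```

Below the break-even volume the API is cheaper; above it, the fixed infrastructure starts to pay off, provided the hardware can actually serve that volume.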

2. Internal Technical Capability

Running open LLMs demands DevOps, MLOps, security engineering, and fine-tuning expertise. Routine upgrades, model retraining, and prompt patching become your responsibility.

Closed-source LLMs take away the operational burden, freeing engineers to focus on UX, vertical integrations, and business logic.

3. Security & Compliance

Open source: Deployments live in your environment. You control encryption, audit trails, and access policies, and you can run the model in isolated networks.

Closed source: Vendors pursue attestations and compliance programs (SOC 2, HIPAA, GDPR) and publish security whitepapers. But raw data, prompts, and logs are processed inside the vendor’s ecosystem, where your direct control is limited.

“Healthcare and banking leaders often blend both: open-source for PII workloads, closed source for general-purpose interaction.”

4. Customisation & Control

Want to inject custom knowledge, industry-specific terminology, or build an AI agent that reasons over proprietary datasets? Open-source LLMs win.

Closed-source models let you craft sophisticated prompts and leverage plugins, but the underlying behaviors are “locked,” which suits standardized, broad-domain AI services.

Deployment Best Practices for Open-Source LLMs:

Let’s explore the best practices for deploying open-source LLMs:

- Intelligent Model Selection: Match parameter count to task; don’t over-provision. For example, use Mistral 7B for chatbots and LLaMA 3 70B for advanced analytics.

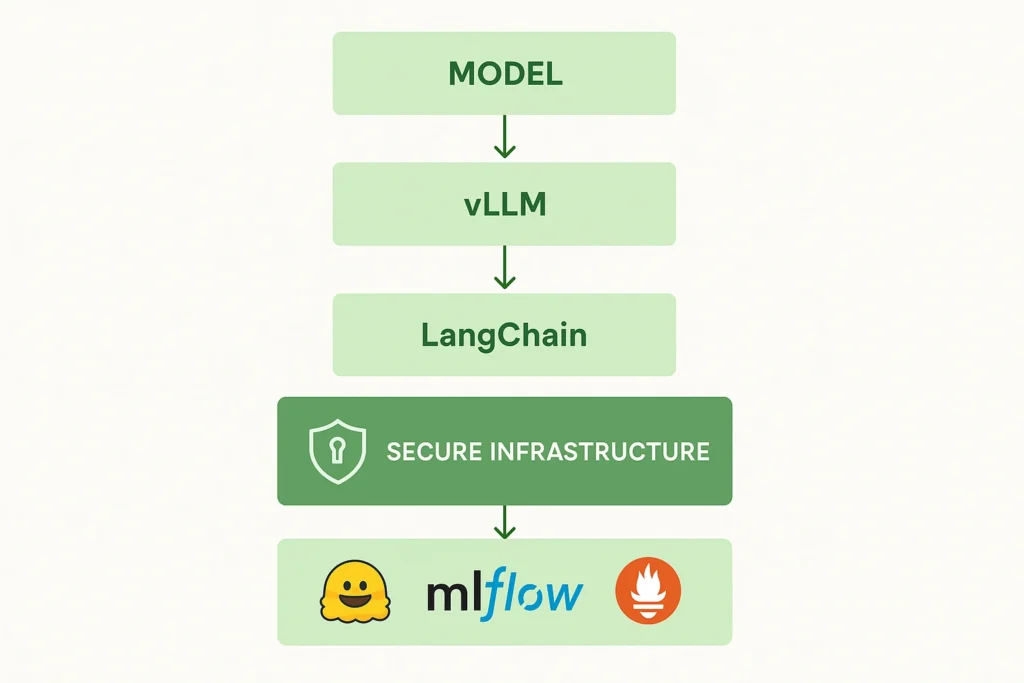

- Optimized Inference: Deploy with tools like vLLM, GGML, or NVIDIA Triton Inference Server. Hugging Face Optimum accelerates inference via hardware-aware optimizations.

- Productionization with MLOps: Integrate MLflow, Weights & Biases, and Arize for model tracking, monitoring, drift detection, and scalable model management.

- Security-First Deployment: Use Kubernetes with RBAC or air-gapped Docker clusters. Test for prompt injection, data leakage, and adversarial exploits before going to production.

- Continuous Fine-Tuning: Regularly update models on recent datasets using supervised fine-tuning (SFT) and LoRA/QLoRA for efficient adaptation.
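As a rough aid for the model-selection point above, weight memory can be approximated as parameter count times bytes per parameter. The sketch below is a back-of-the-envelope estimate under that assumption; it deliberately ignores the KV cache, activations, and framework overhead, so real deployments need extra headroom. The function and dictionary names are illustrative.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * BYTES_PER_PARAM[precision] / 1e9


for name, size_b in [("7B model", 7), ("70B model", 70)]:
    for precision in ("fp16", "int4"):
        print(f"{name} @ {precision}: ~{weight_memory_gb(size_b, precision):.1f} GB")
```

Under these assumptions a 7B model at fp16 needs roughly 14 GB for weights, while a 70B model needs roughly 140 GB, which is why the smaller model is usually the sensible default for a simple chatbot.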

Example: A UK insurance company deployed Falcon in a Kubernetes cluster and monitored with Prometheus, achieving full GDPR compliance.

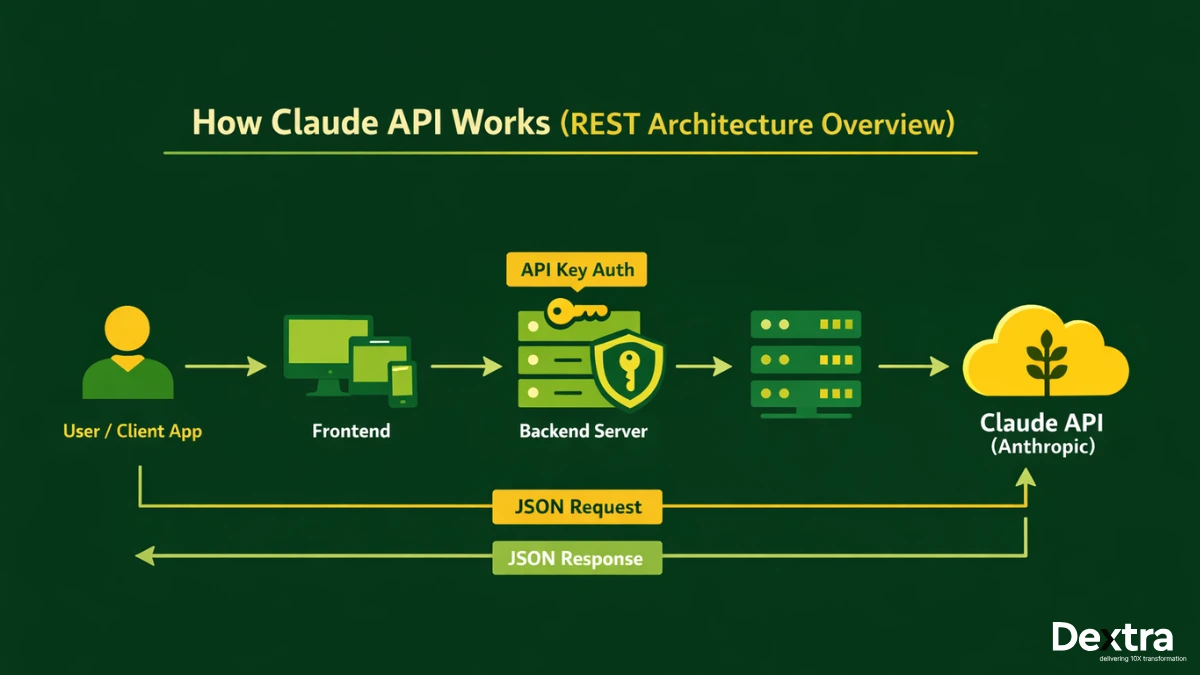

Deployment Best Practices for Closed-Source LLMs:

Let’s explore the best practices for deploying closed-source LLMs:

- Vendor Assessment: Audit for enterprise SLA, API latency, regional compliance, and prompt injection mitigation.

- Orchestration Abstraction: Use LangChain, LlamaIndex, or Semantic Kernel to switch between providers with minimal code changes.

- Prompt Engineering for Efficiency: Optimize prompt size and implement prompt caching to minimize API spend. Use chain-of-thought prompting selectively, since it can improve reasoning but adds output tokens.

- Access & Compliance Management: Integrate SSO, API key vaulting, role-based access, and encrypted audit logs.

- Failover & Rate Limiting: Build secondary provider routes for catastrophic outages or aggressive rate limiting.

Example: A fintech startup used the Claude API for rapid deployment, then ported to Gemini during a service outage thanks to robust abstraction layers.
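The abstraction and failover practices above can be sketched in plain Python. This is a minimal illustration, not the API of LangChain, LlamaIndex, or Semantic Kernel: `Provider`, `ProviderError`, and the stub functions are hypothetical names, and real adapters would wrap each vendor’s SDK and translate its errors into `ProviderError`.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider adapter on outage, timeout, or rate limiting."""


@dataclass
class Provider:
    name: str
    complete: Callable[[str], str]  # adapter wrapping a vendor SDK call


def complete_with_failover(prompt: str,
                           providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in priority order; return (provider_name, completion)."""
    errors = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderError as exc:
            errors.append(f"{provider.name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in adapters used only to demonstrate the control flow.
def primary_stub(prompt: str) -> str:
    raise ProviderError("simulated outage")


def secondary_stub(prompt: str) -> str:
    return f"[secondary] echo: {prompt}"


used, answer = complete_with_failover(
    "Summarize this invoice.",
    [Provider("primary", primary_stub), Provider("secondary", secondary_stub)],
)
print(used, "->", answer)
```

Because application code depends only on `complete_with_failover`, swapping or reordering vendors becomes a configuration change rather than a rewrite.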

Use Case Alignment: Choosing with Precision (Open vs Closed AI)

Have a look at use cases for open vs closed source LLMs:

When Open-Source LLMs Excel

- Data-Sensitive Internal Tools: Healthcare, legal, and finance firms fine-tune open-source LLMs behind firewalls for summarization and compliance audits.

- Domain-Specific Chatbots: Manufacturing and logistics enterprises build specialized co-pilots using Mistral for industry jargon and proprietary workflows.

- On-Prem Deployments: Government agencies leverage LLaMA 3 for secure document processing with no cloud data egress.

When Closed-Source LLMs Lead

- Multilingual Customer Support: Global SaaS providers roll out support bots in 30+ languages via GPT-4 with minimal effort.

- Rapid MVP Development: Startups validate product-market fit with minimal engineering by integrating closed API endpoints—time-to-value wins.

- High-Quality Reasoning at Scale: e-Commerce platforms use Gemini for context-aware recommendations and semantic search across millions of SKUs.

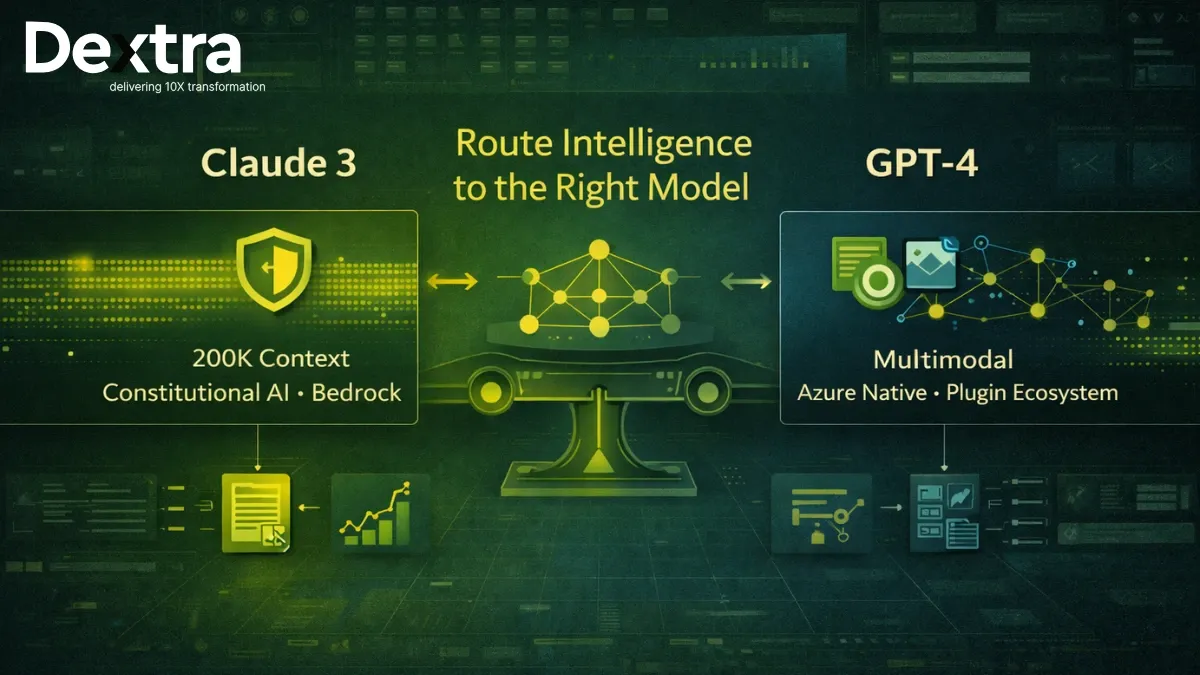

Hybrid Approach: Orchestrating the Best of Both Worlds (Open vs Closed)

Smart enterprises blend open-source LLMs with closed-source power. Use closed-source models (like GPT-4) for tasks demanding the strongest general reasoning, and route retrieval or sensitive analyses to open-source options like Falcon or Qwen.

- Prompt Routing: Tools like LangChain can dynamically select the most suitable LLM, open or closed, for each user query.

- Task Specialization: Build composite pipelines, e.g., generate with Claude, validate or re-rank with Mistral.
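A simple way to picture prompt routing is a rule that sends sensitive queries to a self-hosted open model and everything else to a closed API. The sketch below is a plain-Python stand-in, not LangChain’s routing API; the patterns and route names are assumptions chosen for illustration, and production PII detection would need a dedicated tool.

```python
import re

# Illustrative patterns only; they are far from exhaustive.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),         # US SSN-like number
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),  # email address
    re.compile(r"\b(patient|diagnosis|account number)\b", re.IGNORECASE),
]


def route(query: str) -> str:
    """Return 'self_hosted_open' for sensitive queries, else 'closed_api'."""
    if any(pattern.search(query) for pattern in SENSITIVE_PATTERNS):
        return "self_hosted_open"
    return "closed_api"


for q in ["Draft a friendly product announcement.",
          "Summarize the diagnosis for patient 4411.",
          "Email jane.doe@example.com about her claim."]:
    print(f"{route(q):>16}  <-  {q}")
```

A rule-based router like this is easy to audit; teams that need finer judgments often replace the rules with a small classifier while keeping the same two routes.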

Case Study: A US enterprise SaaS leader orchestrates GPT-4 for customer support and Mistral for real-time financial document parsing, all within the same product using Semantic Kernel.

Real-World Examples:

- Fortune 500s: European banks use open-source LLMs such as LLaMA 3 and Falcon to power risk engines isolated on-prem, reducing regulatory exposure, while using closed LLMs for general insights.

- AI Startups: Over 45% operate hybrid LLM stacks: the OpenAI API for public endpoints, and fine-tuned open models for specialized, low-latency backend functions (a16z LLM Stack Report 2025).

- SaaS Innovations: A logistics platform combined Mistral with OpenAI, tripling knowledge worker productivity and accelerating new feature rollouts without sacrificing control.

Final Recommendations: Enterprise LLM Decision Matrix (Open Source vs Closed Source)

| Scenario | Recommended Approach |
| --- | --- |
| Fast MVP delivery, limited AI ops | Closed Source |
| Deep domain customization, in-house AI | Open Source |
| High privacy, data residency, compliance | Open Source |
| Large-scale public/user-facing apps | Closed Source |
| Want best cost-to-functionality balance | Hybrid Strategy |
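For teams that want to apply the matrix consistently, it can be encoded as a small rule function. The sketch below is one possible reading of the table, not a definitive policy: the field names are hypothetical, and the tie-breaking rule (conflicting or absent signals fall back to a hybrid strategy) is our assumption.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    needs_fast_mvp: bool = False
    has_in_house_ai_team: bool = False
    strict_privacy_or_residency: bool = False
    needs_deep_customization: bool = False
    large_public_facing_app: bool = False


def recommend(s: Scenario) -> str:
    """Map a scenario to 'Open Source', 'Closed Source', or 'Hybrid Strategy'."""
    open_signals = s.strict_privacy_or_residency or (
        s.needs_deep_customization and s.has_in_house_ai_team)
    closed_signals = s.large_public_facing_app or (
        s.needs_fast_mvp and not s.has_in_house_ai_team)
    if open_signals and closed_signals:
        return "Hybrid Strategy"  # assumption: conflicting signals favor a blend
    if open_signals:
        return "Open Source"
    if closed_signals:
        return "Closed Source"
    return "Hybrid Strategy"  # assumption: no strong signal, balance cost and function


print(recommend(Scenario(needs_fast_mvp=True)))
print(recommend(Scenario(strict_privacy_or_residency=True, large_public_facing_app=True)))
```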

There’s no universal LLM blueprint for every enterprise. Your stack should reflect your talent, risk tolerance, regulatory envelope, and innovation goals, whether you lean toward open-source LLMs, closed-source LLMs, or a smart combination of both. Always evaluate the security trade-offs between open source and closed source, and make sure you understand what a closed-source dependency entails, before making a decision.

Bonus Toolkit: Your LLM Deployment Stack

- Open-Source Models & Tooling: LLaMA 3, Mistral, Falcon, Hugging Face Hub, vLLM

- Closed-Source APIs: OpenAI/GPT-4, Claude, Google Gemini, Vertex AI

- Key Orchestration: LangChain, LlamaIndex, Semantic Kernel

- Monitoring & Ops: Arize AI, MLflow, Prometheus

Choose Smart, Deploy Smarter (Open Source vs Closed Source)

Harnessing LLMs, whether open source, closed source, or both, is no longer optional; it is a core competitive capability. Enterprise value comes from aligning choices with business outcomes and operational realities, and from future-proofing your tech stack.

Looking for a technical edge, security, and performance in your enterprise LLM deployment? Dextralabs delivers, from R&D pilots to robust hybrid architectures, empowering you to innovate with confidence.

Consult with Dextralabs today and future-proof your AI stack.

FAQs on Open-Source vs Closed-Source LLMs:

Q. Are open-source LLMs better?

Not inherently — but they can be, depending on your goals.

Open-source LLMs excel when enterprises need transparency, fine-tuning control, offline deployment, or cost savings at scale. They’re especially valuable for R&D, regulatory-compliant environments, and domain-specific customizations. However, closed-source LLMs like GPT-4 or Claude often outperform in general reasoning, multilingual capabilities, and production-grade reliability out of the box. So, “better” depends on whether you’re optimizing for control and flexibility (open) or performance and simplicity (closed).

Q. What is a closed-source LLM?

A closed-source LLM is a proprietary large language model whose architecture, training data, and model weights are not publicly accessible. These models are typically accessed via APIs (e.g., OpenAI’s GPT-4, Anthropic’s Claude, Google’s Gemini) and are maintained by commercial vendors who control updates, security, and infrastructure.

Key traits:

- You can’t see or modify the model weights.

- Usage is often metered by tokens or characters.

- Ideal for rapid prototyping, enterprise-grade NLP, and low-overhead deployment.

Q. What is an open-source LLM for translation?

An open-source LLM for translation is a model trained or fine-tuned to perform multilingual text translation tasks. It can be either a general-purpose LLM with multilingual capabilities (like BLOOM) or a specialized model trained on parallel corpora (like NLLB or MarianMT).

You can:

- Fine-tune a base LLM on language pairs (e.g., English ↔ Hindi)

- Use models like MarianMT, NLLB-200, or Mistral 7B with LoRA adapters

- Deploy them locally for data-sensitive translation workflows without relying on third-party APIs

Q. What is the difference between open-source and closed-source LLM performance?

Performance varies based on benchmarks and use cases:

Closed-source LLMs like GPT-4 still lead in general-purpose reasoning, summarization, and multi-turn conversation accuracy — thanks to proprietary training datasets and architectural innovations.

Open-source LLMs are rapidly catching up. Mistral 7B, Mixtral, and LLaMA 3 rival GPT-3.5 across many tasks. With quantization, fine-tuning, and retrieval-augmented generation (RAG), open models can outperform closed ones in domain-specific tasks.
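To illustrate the retrieval-augmented generation (RAG) idea mentioned above, the sketch below retrieves the most relevant snippets and places them in the prompt. It is a toy under stated assumptions: word overlap stands in for the embedding-based vector search real systems typically use, the sample documents are invented, and the final call to a model is left out.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Policy A-17 covers water damage up to 10,000 EUR.",
    "Claims must be filed within 30 days of the incident.",
    "The cafeteria opens at 8 am.",
]
print(build_prompt("What does policy A-17 cover for water damage?", docs))
```

The resulting prompt would then be sent to whichever model you deploy; because the domain knowledge arrives through the context, a smaller open model can answer questions it was never trained on.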

Bottom line: closed is stronger out of the box; open shines with customization.