Agentic AI has officially moved past the “cool demo” phase.

We’re no longer talking about agents that live in notebooks or answer isolated questions. Today’s agents browse the web, fill out forms, manage CRM workflows, generate reports, and interact with real users over long periods of time. In short, they operate in the real world.

But as adoption grows, so does a familiar problem: many agentic AI systems fail to scale reliably in production. According to Gartner, over 40% of agentic AI projects are expected to be cancelled by the end of 2027 due to rising costs, unclear business value, and inadequate risk controls.

This is where recent real-world agent examples built with Gemini 3 are especially instructive. They don’t just show what modern models can do; they reveal how agentic AI actually works when it’s engineered correctly.

More importantly, they expose a truth the industry is increasingly recognizing: the future of agentic AI is about systems, not just models.

From Task Bots to Cognitive Systems: How Agents Are Maturing

To understand why these Gemini 3 examples matter, it helps to zoom out and look at how agentic AI has evolved.

Lightweight Task Agents: Fast, Useful, but Shallow

Most organizations start here. These agents are built with:

- RAG pipelines

- Tool calling

- Predefined workflows

They’re excellent for:

- Ticket routing

- Content drafts

- Simple data extraction

But they break down quickly when tasks require judgment, prioritization, or adaptation. There’s little planning, almost no self-critique, and minimal understanding of long-term goals.

Research-Grade Agents: Impressive, but Impractical

On the other extreme are deep research agents inspired by human cognition:

- Multi-agent debate

- Draft-refine-critique loops

- Long chains of reasoning

They can produce remarkable outputs, but they’re slow, expensive, and unpredictable. For most enterprises, they’re simply too heavy to run in production.

The Missing Middle Layer

Between these two extremes is where real opportunity lies: production-grade cognitive agents.

These systems reason more deeply than workflow bots but remain efficient, controlled, and scalable. The Gemini 3 agent examples sit squarely in this middle layer, and that’s why they matter.

What Gemini 3 Brings to Real-World Agent Systems

Gemini 3 isn’t positioned as a “thinking AGI.” Instead, it’s optimized to act as a reliable reasoning and orchestration engine inside larger systems.

Across the examples, a few strengths stand out:

- Explicit control over reasoning depth

- Strong state management for long-horizon tasks

- Native multimodal understanding

- Fast inference suitable for iterative workflows

In other words, Gemini 3 works best when it’s part of a system, not when it’s left to operate autonomously.

Real-World Agent Examples with Gemini 3: What They Actually Teach Us

The real value of these Gemini 3 agent examples isn't in their surface functionality (browser automation, research, memory, or enterprise workflows). Many models can perform those tasks in isolation.

What makes these examples important is how they are engineered.

Across frameworks, industries, and use cases, they expose a set of architectural truths about what it actually takes to deploy agents that remain reliable, controllable, and useful over time. Each example highlights a specific failure mode of earlier agentic systems and shows how modern designs are beginning to address it.

Let’s look at what each category of example truly teaches us.

1. ADK: Why Orchestration Is More Important Than Autonomy

The Agent Development Kit (ADK) reframes agentic AI as a software engineering problem, not a prompt engineering one.

Instead of a single, autonomous agent trying to “figure everything out,” ADK systems are composed of:

- Planner agents that reason about goals, constraints, and sequencing

- Executor agents that perform concrete, bounded actions

- Reflection and validation steps that detect errors before results are finalized

The critical insight here is not multi-agent collaboration; it’s role clarity.

By explicitly separating planning, execution, and verification, ADK avoids one of the most common agent failures: unstructured reasoning loops that drift away from the original objective. Each agent knows what kind of thinking it is responsible for, and Gemini 3’s reasoning depth can be tuned accordingly.

What this teaches us:

Reliable agents emerge from structured orchestration, not from maximizing autonomy. The more complex the task, the more important it is to constrain where and how reasoning happens.
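To make the planner/executor/validator split concrete, here is a minimal sketch in plain Python. It is not the ADK API: the `plan`, `EXECUTORS`, and `validate` names are hypothetical stand-ins for model-backed agents, and the plan is hard-coded. What it shows is the structure: planning produces bounded steps, each executor does exactly one kind of action, and nothing is accepted until validation passes.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sketch: plain Python stand-ins for model-backed agents.
# This illustrates role separation only; it is not the ADK API.


@dataclass
class Step:
    action: str
    arg: str


def plan(goal: str) -> list[Step]:
    """Planner role: turn a goal into an ordered list of bounded steps."""
    # A real planner would call a model; this fixed plan is illustrative.
    return [Step("fetch", goal), Step("summarize", goal)]


# Executor role: each action maps to one small, bounded function.
EXECUTORS: dict[str, Callable[[str], str]] = {
    "fetch": lambda arg: f"raw data about {arg}",
    "summarize": lambda arg: f"summary of {arg}",
}


def validate(step: Step, result: str) -> bool:
    """Validation role: accept a result only if it is non-empty and on-topic."""
    return bool(result) and step.arg in result


def run(goal: str, max_retries: int = 2) -> list[str]:
    results = []
    for step in plan(goal):
        executor = EXECUTORS.get(step.action)
        if executor is None:
            raise ValueError(f"no executor for action {step.action!r}")
        for _ in range(max_retries + 1):
            result = executor(step.arg)
            if validate(step, result):
                results.append(result)
                break
        else:  # every attempt failed validation
            raise RuntimeError(f"step {step.action!r} failed validation")
    return results


print(run("Q3 pipeline"))
```

Because the executor can only run actions the planner named, and only validated results are kept, a drifting step fails loudly instead of silently derailing the objective.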

2. Agno: Cognitive Specialization Prevents Reasoning Collapse

Agno demonstrates a different but equally important lesson: agents should specialize cognitively, not just functionally.

Rather than spawning large numbers of general-purpose agents, Agno assigns clear intellectual roles (research, analysis, and creativity), each with:

- A bounded scope of reasoning

- A focused toolset

- Explicit grounding mechanisms

Gemini 3 is used not to expand reasoning indefinitely but to keep each agent operating within its cognitive “lane.” This prevents the kind of reasoning collapse where agents mix exploration, decision-making, and execution into a single, error-prone process.

What this teaches us:

Cognitive specialization reduces hallucinations, improves accuracy, and keeps systems explainable. Agents don’t need to think about everything; they need to think about the right thing at the right time.
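One way to enforce a cognitive "lane" is to give each role an explicit allowlist of tools and refuse anything outside it. The sketch below is a hypothetical illustration in plain Python, not Agno's API; the `Role` class, the tool names, and the lambdas standing in for real tools are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools; in a real system these would wrap search, analytics, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "web_search": lambda q: f"sources for {q}",
    "compute_stats": lambda q: f"stats for {q}",
    "draft_copy": lambda q: f"draft about {q}",
}


@dataclass
class Role:
    """A cognitive lane: a named role with a bounded toolset (illustrative, not Agno's API)."""
    name: str
    allowed_tools: frozenset[str]
    log: list[str] = field(default_factory=list)

    def use(self, tool: str, query: str) -> str:
        # Refuse any tool outside this role's lane instead of silently widening scope.
        if tool not in self.allowed_tools:
            raise PermissionError(f"{self.name} may not call {tool}")
        result = TOOLS[tool](query)
        self.log.append(f"{tool}: {query}")  # grounding trail for explainability
        return result


researcher = Role("researcher", frozenset({"web_search"}))
analyst = Role("analyst", frozenset({"compute_stats"}))
writer = Role("writer", frozenset({"draft_copy"}))

print(researcher.use("web_search", "EV market"))
try:
    writer.use("web_search", "EV market")
except PermissionError as err:
    print(err)
```

The per-role log doubles as an audit trail, which is part of what keeps a specialized system explainable.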

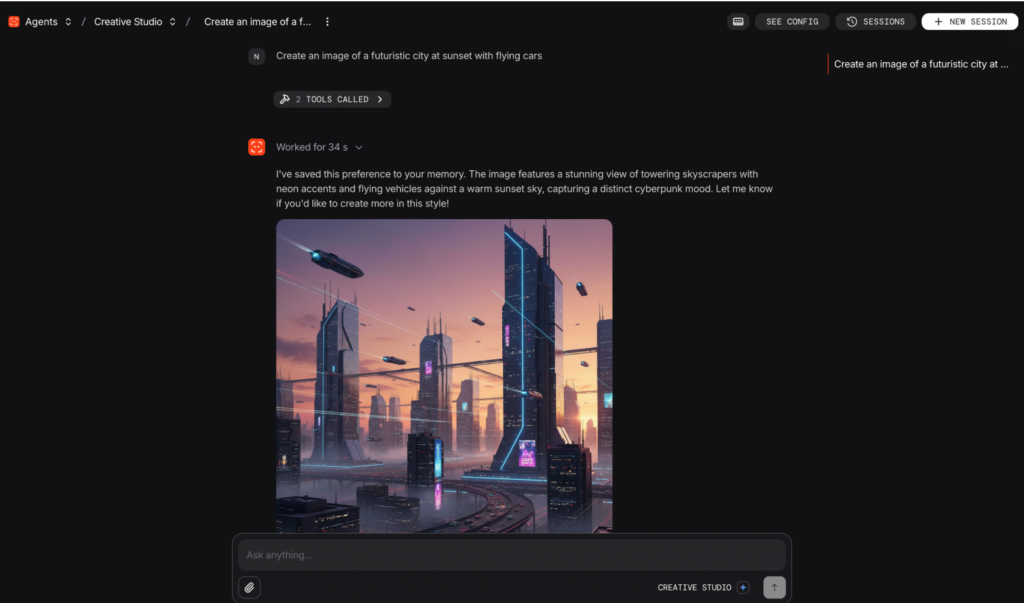

3. Browser Use: Perception Is a Core Agent Capability

The Browser Use example highlights a failure mode that has plagued agentic systems for years: brittle automation.

Traditional browser automation relies on static assumptions: hard-coded selectors, fixed layouts, and predictable flows. Real websites don't behave that way.

By leveraging Gemini 3’s multimodal capabilities, this system allows agents to:

- Visually interpret interfaces

- Understand the semantic role of UI elements

- Adapt actions dynamically as layouts change

This shifts automation from scripted execution to perceptual interaction.

What this teaches us:

Agents operating in real environments must perceive before they act. Reasoning without perception leads to fragile systems; perception without reasoning leads to chaos. Production agents require both.
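The difference between scripted and perceptual interaction can be illustrated by how an action target is chosen. The sketch below assumes a hypothetical perception step (for example, a multimodal model reading a screenshot) that returns each element's semantic role, visible label, and position; the `UIElement` format and `find_element` helper are assumptions, not part of the Browser Use library. The target is resolved by meaning rather than by a fixed selector or coordinate, so it survives a redesign.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UIElement:
    """One element as a hypothetical perception step might describe it."""
    role: str   # semantic role, e.g. "button", "textbox"
    label: str  # visible text or accessible name
    x: int      # screen position, which may change between layouts
    y: int


def find_element(elements: list[UIElement], role: str, keywords: list[str]) -> Optional[UIElement]:
    """Pick the element whose role matches and whose label contains the most keywords."""
    best, best_score = None, 0
    for el in elements:
        if el.role != role:
            continue
        label = el.label.lower()
        score = sum(1 for kw in keywords if kw.lower() in label)
        if score > best_score:
            best, best_score = el, score
    return best


old_layout = [
    UIElement("textbox", "Email", 100, 200),
    UIElement("button", "Submit order", 100, 300),
]
# After a redesign the button moved and its label changed slightly.
new_layout = [
    UIElement("button", "Cancel", 400, 50),
    UIElement("button", "Submit your order", 400, 90),
    UIElement("textbox", "Email address", 100, 50),
]
for layout in (old_layout, new_layout):
    target = find_element(layout, "button", ["submit", "order"])
    print(target.label, (target.x, target.y))
```

A fixed selector or coordinate written against the old layout would miss the moved button; matching on role and label finds it in both.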

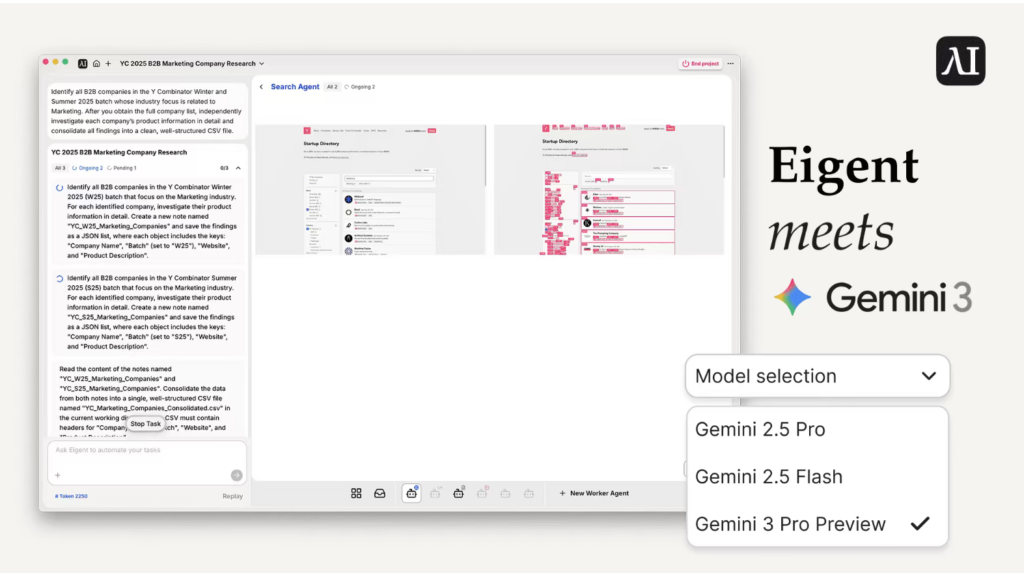

4. Eigent: Long-Horizon Tasks Demand Persistent Reasoning State

Eigent focuses on a problem most agent demos ignore: long-running enterprise workflows.

In scenarios like CRM management or deal lifecycle updates, tasks span:

- Multiple tools

- Extended time horizons

- Numerous intermediate decisions

Eigent uses workforce-style agent coordination combined with Gemini 3’s ability to maintain reasoning state across steps. This prevents context drift, where agents forget why earlier decisions were made or repeat work unnecessarily.

What this teaches us:

State persistence isn’t optional for enterprise agents. Without a maintained reasoning state, agents behave reactively instead of strategically, no matter how intelligent the underlying model is.

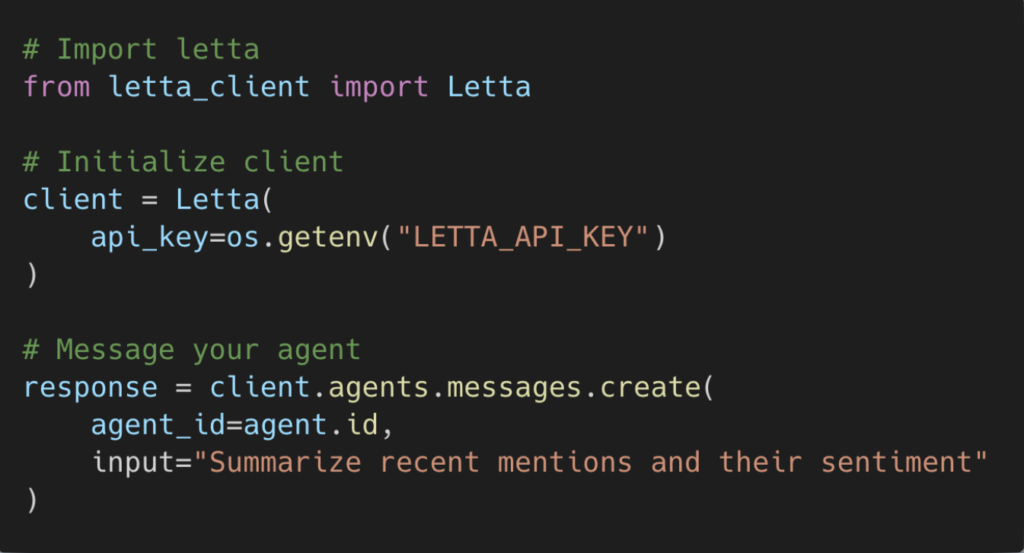

5. Letta: Memory Is Not Storage; It's Cognition

Letta exposes a subtle but crucial insight: memory is an active cognitive process, not a passive database.

Instead of treating memory as a flat log, Letta introduces a hierarchy:

- Working memory for immediate context

- Long-term memory for goals, identity, and rules

- Episodic memory for past interactions and outcomes

Gemini 3 operates within this hierarchy, retrieving and updating memory selectively rather than consuming everything at once.

What this teaches us:

Unlimited context is not the same as useful context. Agents need structured memory systems that determine what to remember, when to recall it, and when to ignore it.
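The three tiers can be sketched as one object that builds a selective context per query instead of replaying the whole history. This is a hypothetical illustration, not Letta's API: the bounded `deque` for working memory and the naive keyword-overlap retrieval over episodes are simplifying assumptions (a real system would more likely use embeddings).

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical three-tier memory; names and retrieval logic are illustrative,
# not Letta's API.


@dataclass
class HierarchicalMemory:
    core: dict[str, str] = field(default_factory=dict)  # long-term: goals, identity, rules
    working: deque = field(default_factory=lambda: deque(maxlen=3))  # immediate context
    episodes: list[str] = field(default_factory=list)  # past interactions and outcomes

    def observe(self, message: str) -> None:
        self.working.append(message)   # oldest entry drops out once maxlen is reached
        self.episodes.append(message)  # episodic memory keeps the full history

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Naive keyword-overlap retrieval over episodes not already in working memory."""
        words = set(query.lower().split())
        scored = [
            (len(words & set(e.lower().split())), e)
            for e in self.episodes
            if e not in self.working
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [e for score, e in scored if score > 0][:k]

    def build_context(self, query: str) -> list[str]:
        """Assemble a selective context: rules, relevant episodes, then recent turns."""
        rules = [f"{key}: {value}" for key, value in self.core.items()]
        return rules + self.recall(query) + list(self.working)


mem = HierarchicalMemory(core={"goal": "help with invoices"})
for msg in [
    "invoice 17 was paid late",
    "user prefers email",
    "weather is nice",
    "asked about refunds",
    "asked about shipping",
]:
    mem.observe(msg)
print(mem.build_context("late invoice"))
```

The late-invoice episode has already left working memory, but it is recalled because it is relevant; the unrelated "user prefers email" episode is left out.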

6. mem0: Forgetting Is a Production Requirement

mem0 tackles a misconception common in agent design: that more memory always leads to better agents.

Instead, mem0 shows that effective agents:

- Learn user preferences over time

- Retain high-value insights

- Actively discard irrelevant or outdated information

This prevents memory pollution, where excessive historical context degrades reasoning quality.

What this teaches us:

Forgetting is not a limitation; it’s a requirement for long-term reliability. Cognitive systems must manage memory growth intentionally to remain accurate and responsive.
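Intentional forgetting can be sketched with two rules: a newer fact on the same topic replaces the outdated one, and low-value items decay over time until they are pruned. The store below is a hypothetical illustration, not mem0's API; the exponential half-life decay, the threshold, and the capacity cap are assumed parameters chosen for clarity.

```python
from dataclasses import dataclass

# Hypothetical decay-and-prune store illustrating intentional forgetting;
# the scoring rule and parameters are assumptions, not mem0's API.


@dataclass
class MemoryItem:
    key: str           # topic the fact is about; a newer fact on the same key replaces it
    text: str
    importance: float  # 0..1, assumed to come from a model or heuristic
    last_used: int     # logical time step when the item was stored or recalled


class ForgettingStore:
    def __init__(self, half_life: float = 10, threshold: float = 0.2, capacity: int = 100):
        self.items: list[MemoryItem] = []
        self.half_life = half_life
        self.threshold = threshold
        self.capacity = capacity

    def score(self, item: MemoryItem, now: int) -> float:
        # Exponential decay: the score halves every `half_life` steps without use.
        age = now - item.last_used
        return item.importance * 0.5 ** (age / self.half_life)

    def add(self, key: str, text: str, importance: float, now: int) -> None:
        # Discard outdated information: a new fact supersedes the old one on the same key.
        self.items = [i for i in self.items if i.key != key]
        self.items.append(MemoryItem(key, text, importance, now))

    def prune(self, now: int) -> list[str]:
        """Drop items below the threshold, enforce capacity, and return what was forgotten."""
        keep = [i for i in self.items if self.score(i, now) >= self.threshold]
        keep.sort(key=lambda i: self.score(i, now), reverse=True)
        keep = keep[: self.capacity]
        kept_ids = {id(i) for i in keep}
        forgotten = [i.text for i in self.items if id(i) not in kept_ids]
        self.items = keep
        return forgotten


store = ForgettingStore(half_life=10, threshold=0.2)
store.add("diet", "user is vegetarian", importance=0.9, now=0)
store.add("greeting", "user said hello", importance=0.3, now=0)
store.add("diet", "user is vegan", importance=0.9, now=5)  # supersedes the outdated fact
print(store.prune(now=20))  # low-value chatter decays away
print([i.text for i in store.items])
```

After 20 steps the greeting has decayed to 0.3 × 0.25 = 0.075 and is dropped, while the high-importance, more recent preference survives.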

The Common Pattern Behind Successful Gemini 3 Agents

Across all these examples, a clear pattern emerges:

- Structured orchestration

- Controlled reasoning loops

- Stateful, selective memory

- Safe, permissioned tool execution

- Predictability over maximal intelligence

Notably, none of these systems rely on unbounded autonomy. They rely on cognitive control layers.

Dextralabs’ Perspective: The Cognitive Layer Above Gemini 3

Gemini 3 is a powerful reasoning engine, but engines need vehicles.

Dextralabs focuses on building the cognitive systems that sit above models like Gemini 3, enabling them to operate reliably in production.

This includes:

- Layered reasoning (task, strategic, reflective)

- Clear separation between planning and execution

- Adaptive reasoning depth based on task complexity

- Memory governance across agents and workflows

- Budget-aware iteration and stop conditions

The goal isn’t to make agents think endlessly; it’s to make them think just enough.
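"Just enough" thinking can be expressed as a refinement loop with three exits: the result is good enough, the token budget is spent, or the iteration limit is reached, with the budget itself scaled to task complexity. The sketch below is a hypothetical illustration of that idea, not Dextralabs' implementation; the complexity tiers, budget numbers, quality threshold, and `draft_fn` contract are all assumptions, and the token budget is soft (the last call may overshoot it).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Budget:
    max_iterations: int
    max_tokens: int


def choose_budget(complexity: str) -> Budget:
    """Adaptive reasoning depth: harder tasks get more room (hypothetical tiers)."""
    tiers = {
        "low": Budget(max_iterations=1, max_tokens=1_000),
        "medium": Budget(max_iterations=3, max_tokens=4_000),
        "high": Budget(max_iterations=5, max_tokens=12_000),
    }
    return tiers[complexity]


def refine(
    draft_fn: Callable[[int], tuple[str, float, int]],
    budget: Budget,
    good_enough: float = 0.8,
) -> tuple[str, str]:
    """Iterate until quality is good enough or the budget runs out.

    draft_fn(iteration) returns (draft, quality score in [0, 1], tokens used).
    Returns the best draft seen and the reason the loop stopped.
    """
    best, best_quality, tokens_used = "", 0.0, 0
    for i in range(budget.max_iterations):
        draft, quality, cost = draft_fn(i)
        tokens_used += cost
        if quality > best_quality:
            best, best_quality = draft, quality
        if best_quality >= good_enough:
            return best, "quality threshold met"
        if tokens_used >= budget.max_tokens:  # soft limit: checked after each call
            return best, "token budget exhausted"
    return best, "iteration limit reached"


def fake_drafter(i: int) -> tuple[str, float, int]:
    # Stand-in for a model call: quality improves with each revision.
    return f"draft v{i + 1}", 0.5 + 0.2 * i, 800


print(refine(fake_drafter, choose_budget("low")))
print(refine(fake_drafter, choose_budget("medium")))
```

Returning the stop reason alongside the draft makes the loop observable: operators can see whether an agent stopped because it succeeded or because it ran out of budget.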

Where This Architecture Wins in the Real World

This middle layer of cognitive agents is especially effective for:

- Market and competitive intelligence

- Enterprise knowledge systems

- Browser-driven business workflows

- Policy and regulatory research (non-diagnostic)

- Technical documentation synthesis

These are domains where speed alone isn’t enough and where over-engineered research agents slow everything down.

Key Takeaways

- Real-world agentic AI is a systems challenge, not just a modeling challenge

- Gemini 3 excels as a reasoning core within well-designed agent architectures

- The industry is converging on a missing middle layer of cognitive agents

- Dextralabs builds that layer: balancing intelligence, efficiency, and control

Conclusion

The real-world agent examples built with Gemini 3 make one thing clear: successful agentic AI is not driven by unchecked autonomy or raw model intelligence, but by how cognition is structured, governed, and deployed in production. Systems that scale rely on controlled reasoning, selective memory, and clear separation between planning and execution.

Gemini 3 provides a strong reasoning core, but it is the surrounding architecture that turns intelligence into reliability. Dextralabs focuses on building this missing cognitive layer, designing production-grade agentic systems that balance depth, efficiency, and control. As organizations move beyond experimentation, this approach enables AI agents that think when necessary, act decisively, and operate predictably in real-world environments.

The next phase of agentic AI is not about thinking more; it’s about thinking better, with control and intent.