Most business teams these days make decisions based on data. Marketing managers constantly need to understand how their campaigns are performing. Product teams want insights into how frequently features are being used. Finance teams must pay close attention to their potential pay and real earnings to help their decision-making. The problem is that this all-important data is tied away in cumbersome relational databases collected in a desperate variety of ways that all require SQL to understand.

SQL may be complicated and alien to those who don’t have coding or technical knowledge. With no means of writing their own queries, that means many people have to ask a developer or data analyst to pull the data they need.

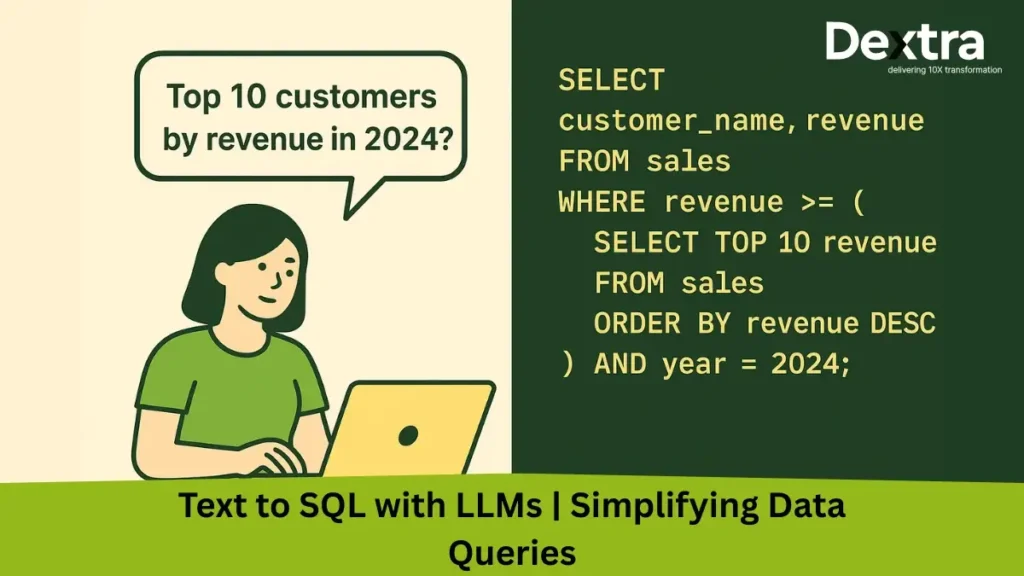

Take this illustration:

You want to know:

“Prepare a list of the best ten customers by the revenue they generated in the year 2024.”

This is how the request looks in SQL:

SELECT customer_name, revenue

FROM customers

WHERE year = 2024

ORDER BY revenue DESC

LIMIT 10;This code piece appears awkward if one does not know SQL. Such a gap between simple English and SQL makes decision-making challenging and causes people to rely upon tech teams.

This is when Large Language Models (LLMs) enter the scene and flip the script.

You don’t need to memorize the syntax of SQL; you just express your question in plain English, and the LLM automatically figures out the resulting SQL query. Dextralabs is the leader in making this technology productive for businesses by empowering them to integrate text-to-SQL solutions into their processes safely.

Why LLMs Are Game-Changer for SQL

The classical learning curve of SQL is high. Even veterans of the field spend hours fine-tuning queries, fine-tuning joins, and debugging syntax issues. Text-to-SQL using LLMs, however, allows you to question the data in a natural and easy-to-read manner.

Here are the main pros:

Accessibility – Anyone can query data without formal SQL training.

Speed – No more bottlenecks waiting on developers or analysts.

Prototyping – Quickly draft queries during brainstorming sessions.

Onboarding – Great for teaching new hires how SQL works by showing natural language alongside generated queries.

User-Friendly – Ask in plain English, get structured results.

For example, instead of:

SELECT AVG(order_value)

FROM orders

WHERE order_date BETWEEN '2024-01-01' AND '2024-03-31';You simply type:

“What was the average order value for Q1 2024?”

And get the right SQL instantly.

But like all powerful tools, there are challenges too:

Query errors – LLMs may occasionally produce invalid or inefficient queries.

AI tool dependency – The team may become dependent on AI without verifying outputs.

Privacy and data security risks – The personal data must be kept from unauthorized access.

The key is having LLM-influenced SQL responsibly and here’s where partners like Dextralabs bring experience in the trade-off of having it and protection.

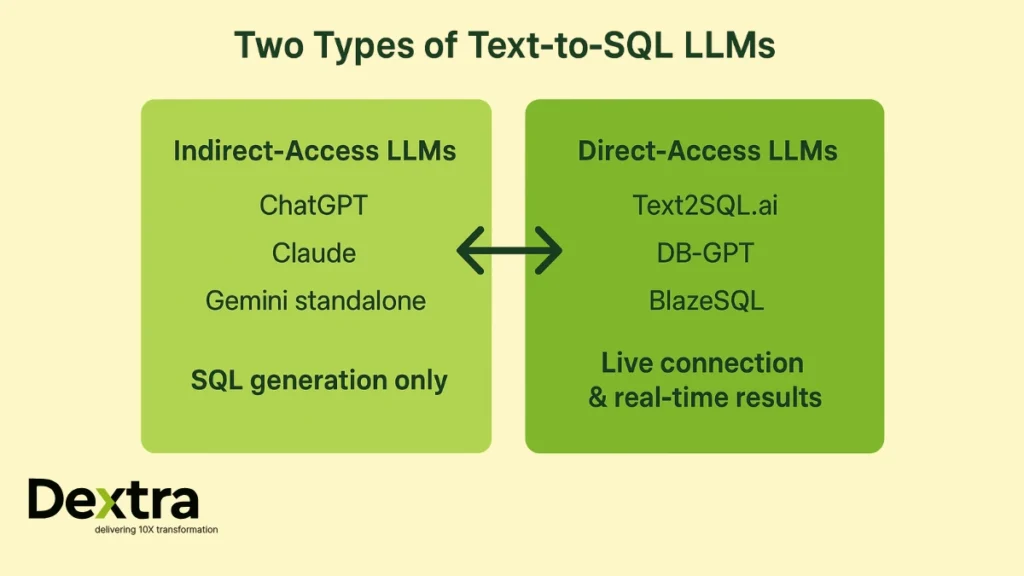

Two Types of Text-to-SQL LLMs:

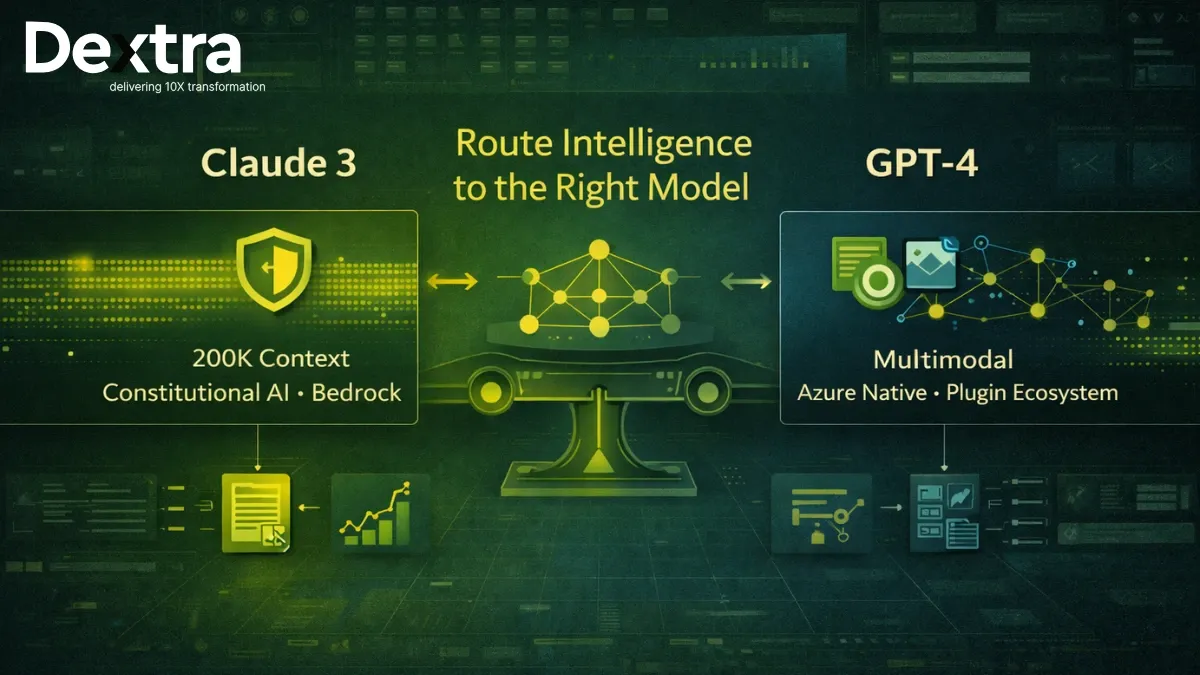

Not all LLMs handle SQL in the same way. They generally fall into two categories:

1. Indirect-Access LLMs (No Live Database Connection)

These models generate SQL based on schema details but do not run the queries themselves.

Examples:

ChatGPT (OpenAI)

Claude (Anthropic)

Google Gemini (standalone)

Phind

Use Cases:

SQL prototyping before production

Education and training

Code generation for developers

These are great for environments where security is critical—you generate the SQL but execute it manually in your own system.

2. Direct-Access LLMs (Live Database Connection)

These LLMs are designed to connect directly with databases such as PostgreSQL, BigQuery, and Snowflake, allowing them to generate and return live results instantly based on user requests.”

Text2SQL.ai

DB-GPT

DataPilot

Seek AI

BlazeSQL

ThoughtSpot Sage

Google Gemini (with Google Cloud data integration)

Use Cases:

Conversational business intelligence

Real-time analytics dashboards

Ad-hoc queries for live data exploration

These systems are powerful but raise more security and governance concerns since they execute queries directly.

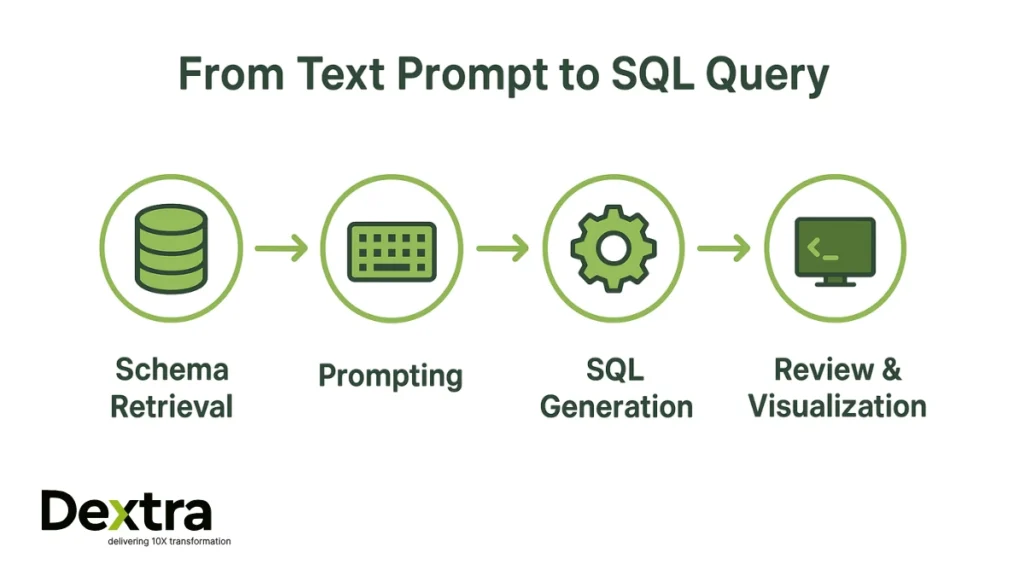

The Workflow: From Text Prompt to SQL Query

Transforming natural language into SQL involves five core steps.

1. Schema Retrieval

The model needs to know your database structure. Without schema details, it might guess incorrectly. Provide:

- Table names + descriptions

- Column names + data types

- Relationships (primary keys, foreign keys, joins)

This can be embedded in your prompt or dynamically retrieved using RAG (Retrieval-Augmented Generation).

2. Use a Natural Language Prompt

The user types a request combining schema context and the actual question.

Prompt Example:

Dataset: Customers & Orders tables.

Customers: id, first_name, last_name, city

Orders: id, cust_id, order_date, total_order_cost

Question: Retrieve the customers who had the highest total order amounts per day

between 2019-02-01 and 2019-05-01. Show first name, daily total, and order date.

Assumption: Each first name is unique.

Role: Take on the role of a SQL expert and write a PostgreSQL query.3. Generate the SQL Query

The LLM converts the prompt into executable SQL:

SELECT c.first_name, daily_totals.order_date, daily_totals.total_cost

FROM (

SELECT cust_id, order_date, SUM(total_order_cost) AS total_cost

FROM orders

WHERE order_date BETWEEN '2019-02-01' AND '2019-05-01'

GROUP BY cust_id, order_date

) AS daily_totals

JOIN customers c ON c.id = daily_totals.cust_id

WHERE (cust_id, order_date) IN (

SELECT cust_id, order_date

FROM (

SELECT cust_id, order_date, SUM(total_order_cost) AS total_cost,

RANK() OVER (ORDER BY SUM(total_order_cost) DESC) AS rnk

FROM orders

WHERE order_date BETWEEN '2019-02-01' AND '2019-05-01'

GROUP BY cust_id, order_date

) ranked

WHERE rnk = 1

);4. Execute the Query

Depending on the setup:

Copy/paste into a SQL editor for execution, or

Allow the LLM (if directly connected) to run it and return live results.

Sample Results:

| first_name | order_date | total_cost |

| Jill | 2019-04-19 | 275 |

| Mark | 2019-04-19 | 275 |

5. Review, Visualize & Refine

Validate the output for accuracy.

Visualize results as tables or dashboards.

Refine with follow-ups (e.g., “Also show the customer’s city”).

This iterative loop makes data analysis conversational and dynamic.

Challenges & Best Practices:

Even if LLMs are robust, they require guardrails.

| Challenge | Best Practice |

| Incorrect SQL | Use few-shot prompting with examples. |

| Ambiguous questions | Write clear, structured prompts. |

| Hallucinated columns/tables | Use schema search or RAG grounding. |

| Outdated schema info | Provide updated metadata. |

| Large schemas | Break queries into smaller, modular prompts. |

| Unsafe queries | Require approvals before execution. |

| Unauthorized access | Enforce role-based access controls. |

| Wrong results | Validate outputs against business logic and test cases. |

This is exactly where Dextralabs adds value, guiding businesses in designing workflows that maximize productivity while ensuring data security and integrity.

Real-World Scenarios:

Text-to-SQL isn’t a tech demo, it’s already transforming industries.

- Retail & E-commerce:

Querying customer purchase history, sales by region, or abandoned cart rates.

- Finance & Banking:

Fraud detection, transaction monitoring, or quick financial summaries.

- Healthcare:

Doctors ask, “What was the average length of stay for cardiac patients last year?” without needing IT support.

- Marketing & Sales:

Instant campaign performance dashboards.

- Internal Operations:

HR teams are querying staff turnover rates or training completion metrics.

Every use case reinforces the same point: data becomes accessible to everyone.

The Future of Text-to-SQL

Looking ahead, we’ll see:

- Interactive LLM agents – Models that ask clarifying questions when queries are vague.

- Multimodal inputs – Voice or visual prompts (e.g., dashboards or ER diagrams).

- Fine-tuned domain-specific models – Tailored for industries like finance, retail, or healthcare.

- Deeper BI integration – LLMs as the natural interface of analytics platforms.

In the end, SQL can be transparent—users specify what they want, and the system gives it to them right away.

Conclusion

Large language models are expanding the availability of data, speed, and usability. Anyone, not just SQL experts, can draw knowledge from databases within seconds after the right configuration.

But success requires:

- Clear prompts

- Schema grounding

- Validation layers

- Security controls

Here’s where Dextralabs comes in, enabling companies to adopt text-to-SQL solutions which are technologically robust, secure, and scalable.

Text-to-SQL with LLMs isn’t just about convenience; it’s about empowering everyone to work with data smarter and faster..