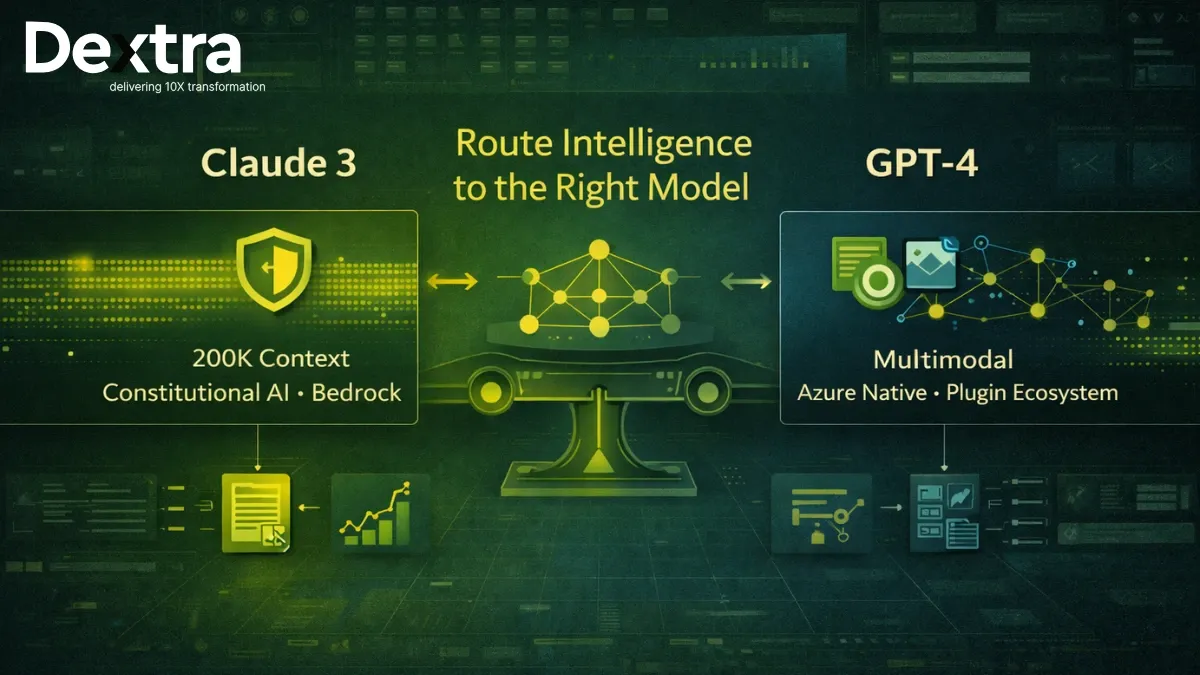

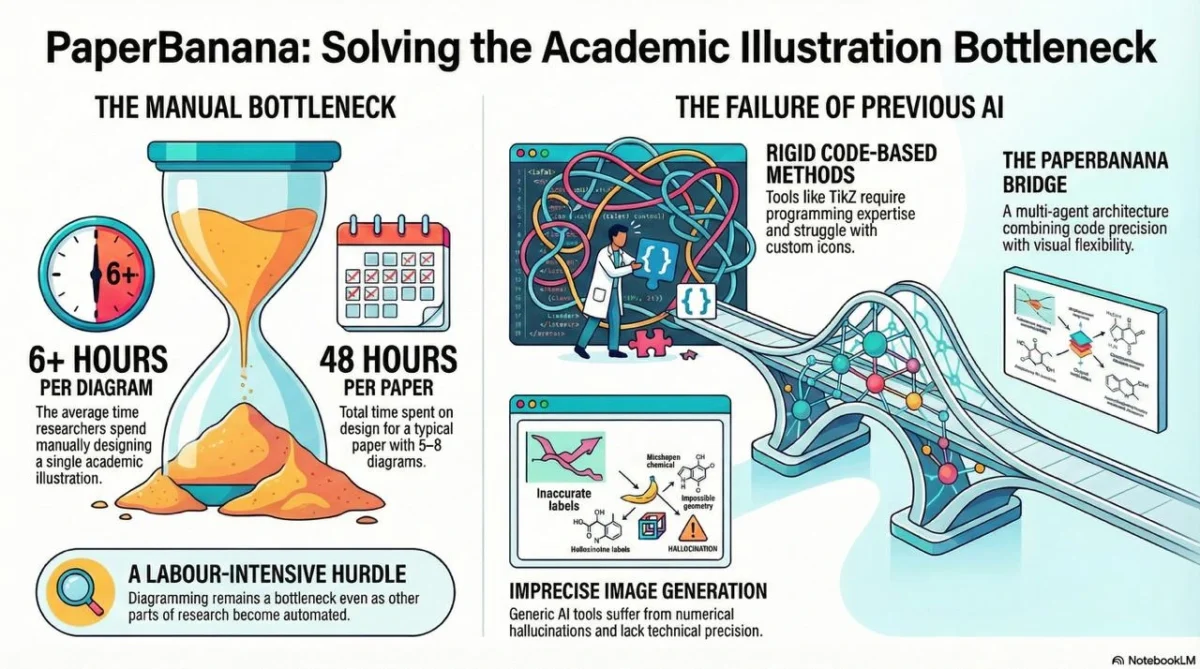

Small Language Models (SLMs) are quickly becoming the new face of AI in 2025. For years, the spotlight was on massive Large Language Models (LLMs), systems like GPT-4 that impressed us with their capabilities but cost enormous sums to build and run. Training GPT-4 alone reportedly cost more than $100 million in compute, and serving it is said to cost thousands of dollars every day. Impressive? Yes. Practical for most businesses? Not even close.

That's why the momentum has shifted. The future of AI is no longer about being the biggest; it's about being smarter, lighter, and faster. SLMs embrace this shift. They deliver real power without the baggage: startups can deploy them on modest budgets, enterprises can run them on-device to keep data private, and individuals can use them without access to large GPU clusters.

At Dextralabs, we believe SLMs unlock the next stage of AI adoption: accessible, affordable, and built for real‑world impact. In this guide, we’ll introduce you to the Top 15 Small Language Models for 2025, highlighting their strengths, unique features, and the industries where they’re making a difference.

Why Are Small Language Models on the Rise?

While popular language models like GPT-4, Claude, and Gemini dominate the headlines, small language models (SLMs) are quietly becoming the go-to choice for businesses that value speed, efficiency, and control.

Unlike massive large language models, SLMs are designed for practical, resource-conscious deployments, from on-device AI to privacy-first enterprise systems.

Key advantages of small language models include:

- Faster inference: Low latency makes SLMs well suited to real-time applications such as chatbots, virtual assistants, and interactive tools.

- Lower costs: Less compute translates to cheaper deployments while still handling many core tasks well.

- On-device capabilities: They can run directly on mobile, IoT, or edge devices without a constant cloud connection.

- Customizability: They are easier to fine-tune for specialized industries, from medicine to finance.
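One quick way to see why smaller models are cheaper to run and fit on devices: the weights alone need roughly (parameters × bytes per parameter) of memory. The sketch below uses the nominal sizes quoted in this article and deliberately ignores activations, the KV cache, and runtime overhead, so treat its numbers as lower bounds rather than deployment requirements.

```python
# Approximate storage cost of one weight at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Rough memory for the model weights alone, in gigabytes (1e9 bytes).

    Activations, the KV cache, and runtime overhead are ignored, so real
    usage is higher than this figure.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Nominal parameter counts as quoted in this article.
    models = {"Phi-2": 2.7e9, "Mistral 7B": 7e9, "LLaMA 3 8B": 8e9}
    for name, params in models.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name:<11} {sizes}")
```

For example, a 7B model stored in fp16 needs about 14 GB for its weights, while a 4-bit version of Phi-2 needs about 1.4 GB, small enough for many laptops and recent phones.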

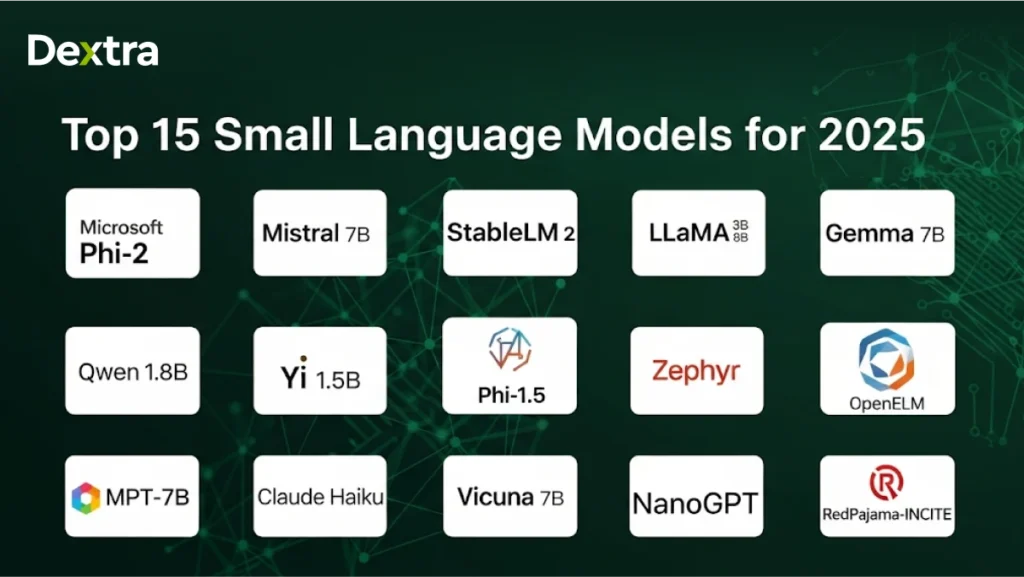

Top 15 Small Language Models in 2025

These compact models are redefining AI in 2025 by combining speed, efficiency, and accuracy in small architectures. From open-weight community favorites to proprietary offerings, they deliver strong results with modest compute.

Here is an overview of the top 15 SLMs, with their developer, type, key strengths, and primary use cases.

| Model Name | Developer | Type | Key Strengths | Primary Use Cases |
|---|---|---|---|---|
| Microsoft Phi-2 | Microsoft | Open-weight SLM (MIT license) | Strong reasoning, coding, and comprehension for its size; reported to match or beat larger models such as Mistral 7B and Llama 2 13B on several benchmarks | Edge AI, on-device assistants, enterprise copilots |
| Mistral 7B | Mistral AI | Open-source small LLM | Efficient inference, strong reasoning for its size | Global chatbots, content generation, knowledge retrieval |
| StableLM 2 | Stability AI | Open-weight ChatGPT alternative | Compact sizes, cost-efficient, fine-tuning ready | Startups, open-source AI tools, niche applications |
| LLaMA 3 8B | Meta | Open-weight LLaMA model | High accuracy with low compute demand | Research, internal AI copilots, academic tools |
| Gemma 7B | Google DeepMind | Lightweight open-weight model | Strong summarization, well suited to RAG | Legal research, scientific data analysis |
| Qwen 1.8B | Alibaba | Multilingual SLM for enterprise | Localized fluency, e-commerce search | Global business chatbots, recommendation systems |
| Yi-1.5 6B | 01.AI (China) | Bilingual (English/Chinese) open-weight LLM | Self-hostable, efficient inference | Healthcare, finance, lightweight chatbots |
| Phi-1.5 | Microsoft | Earlier, smaller Phi model | Fast, lightweight inference | IoT devices, virtual assistants, smart tools |
| Zephyr | Hugging Face (H4 team) | Chat model fine-tuned from Mistral 7B | Rich, natural dialogue generation | Customer support, AI tutoring, learning platforms |
| OpenELM | Apple | On-device small LLM family | Small footprint, offline use | Mobile AI, wearables, offline AI assistants |
| MPT-7B | MosaicML | Flexible, commercially usable small LLM | Highly customizable, versatile | Document processing, RAG pipelines |
| Claude Haiku | Anthropic | Proprietary, smallest Claude tier | Fast, safety-focused, compliance-friendly | Finance, education, healthcare |
| Vicuna 7B | LMSYS | Conversational small LLM | Human-like chat quality | Hobby projects, educational tools |
| NanoGPT | Andrej Karpathy (open source) | Minimal GPT training codebase | Easy to train on small datasets | Research, education, domain-specific experiments |
| RedPajama-INCITE | Together Computer | Open-data small LLM | Open training data, permissive licensing | Research, summarization, open-source AI projects |

Here is a closer look at each model:

1. Microsoft Phi-2

- Developer: Microsoft

- Parameters: ~2.7B

- Access: Hugging Face and the Azure AI model catalog

- Open Source: Yes, open weights under the MIT license

- Detailed Overview: Microsoft Phi-2 is a 2.7B-parameter small language model that delivers strong results in reasoning, code generation, and language understanding. Microsoft reports that it matches or outperforms considerably larger models, including Mistral 7B and Llama 2 13B, on several common benchmarks. Its small size keeps inference latency low, which suits edge AI and on-device deployment where memory and speed matter most. Because the weights are openly available, Phi-2 can be used through Azure AI or self-hosted, which helps compliance-driven industries such as healthcare and finance keep data under their own control.

- Ideal Use Cases: Enterprise copilots, private AI agents, and edge AI assistants.

2. Mistral 7B

- Developer: Mistral AI

- Parameters: 7B

- Access: Hugging Face / Mistral website download

- Open Source: Apache 2.0

- Detailed Overview: Mistral 7B is an open-source small LLM known for strong reasoning relative to its size; at release, its developers reported that it outperformed Llama 2 13B across their benchmarks. It performs well at question answering, summarization, and structured output generation while keeping inference fast. It runs on consumer-grade GPUs, which makes it a go-to for startups and research groups that need capable AI without costly infrastructure. Its permissive Apache 2.0 license allows unrestricted commercial use, so developers are free to customize and integrate it.

- Ideal Use Cases: Multilingual chatbots, cross-border customer service, global content generation.
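The Mistral 7B paper attributes part of the model's efficiency to grouped-query attention and sliding-window attention (with a window of 4,096 tokens in the released model). The minimal sketch below builds a sliding-window attention mask; the convention that each token sees itself plus the `window - 1` tokens before it is an assumption made here for illustration, and real implementations apply the mask inside optimized attention kernels rather than as Python lists.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask where mask[i][j] is True if query i may attend to key j.

    The mask is causal (j <= i) and limited to the `window` most recent
    positions, including i itself, so every row has at most `window` Trues.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def count_attended(mask: list[list[bool]]) -> int:
    """Total number of (query, key) pairs the mask allows."""
    return sum(row.count(True) for row in mask)


if __name__ == "__main__":
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))
    # x.....
    # xx....
    # xxx...
    # .xxx..
    # ..xxx.
    # ...xxx
```

Full causal attention over n tokens allows n(n+1)/2 pairs, which grows quadratically, while the windowed mask allows at most n × window, which grows linearly. Information from beyond the window can still propagate through stacked layers, which is the paper's argument for why a fixed window costs little quality.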

3. StableLM 2

- Developer: Stability AI

- Parameters: 1.6B and 12B (varies by release)

- Access: Hugging Face, Stability AI GitHub

- Open Source: Open weights under Stability AI's own license (commercial use may require a Stability AI membership)

- Detailed Overview: StableLM 2 is Stability AI’s answer to the demand for an open-source ChatGPT alternative. It prioritizes cost-efficiency and scalability, making it possible to run powerful AI models both on cloud infrastructure and on-device hardware. With open weights and robust fine-tuning capabilities, StableLM 2 empowers developers to create domain-specific assistants without being tied to a single vendor. The model is particularly popular among early-stage startups and independent developers who need affordable AI without sacrificing control.

- Ideal Use Cases: Domain-specific assistants, startup AI products, educational tools.
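Fine-tuning a small open-weight model such as StableLM 2 into a domain assistant is often done with a parameter-efficient method like LoRA (a general technique, not something specific to StableLM 2). The sketch below counts how many parameters LoRA trains compared with fully fine-tuning the same matrices. The dimensions (2048-wide, 24 layers, rank 8, adapters on two projections per layer) are hypothetical round numbers for illustration, not a published StableLM 2 configuration.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one linear layer of shape d_in -> d_out.

    The frozen weight W is left untouched; LoRA learns a low-rank update
    B @ A with A of shape (rank, d_in) and B of shape (d_out, rank).
    """
    return rank * (d_in + d_out)


def lora_vs_full(d_model: int, n_layers: int, rank: int, targets_per_layer: int = 2):
    """Compare LoRA's trainable parameters with fully fine-tuning the same matrices.

    Assumes `targets_per_layer` square d_model x d_model projections per layer
    (for example, the query and value projections).
    """
    n_matrices = n_layers * targets_per_layer
    lora = n_matrices * lora_params(d_model, d_model, rank)
    full = n_matrices * d_model * d_model
    return lora, full


if __name__ == "__main__":
    # Hypothetical dimensions chosen for illustration only.
    lora, full = lora_vs_full(d_model=2048, n_layers=24, rank=8)
    print(f"LoRA trains {lora:,} parameters vs {full:,} ({lora / full:.2%})")
```

The comparison covers only the targeted matrices, not the whole model, so LoRA's share of the model's total parameter count is smaller still.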

4. LLaMA 3 8B

- Developer: Meta

- Parameters: 8B

- Access: Gated download via Meta or Hugging Face after accepting the license

- Open Source: Open weights under the Meta Llama 3 Community License (commercial use permitted, with conditions)

- Detailed Overview: Meta's LLaMA 3 8B is a refined small LLaMA model that delivers high accuracy and versatility across a wide range of NLP tasks. Far smaller than the 70B model in the same family, it is easier to deploy while still offering strong performance for its size in reasoning, summarization, and dialogue. Its openly available weights make it a favorite for academic and research institutions looking for a balance between accessibility and capability.

- Ideal Use Cases: Research projects, AI copilots, and enterprise automation.
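Part of what makes LLaMA 3 8B easier to deploy is grouped-query attention (GQA): its 32 query heads share only 8 key/value heads, which shrinks the key/value cache held in memory during generation. The sketch below works through that arithmetic using the model's published configuration (32 layers, 8 KV heads, head dimension 128, 8,192-token context) at 16-bit precision; the "no GQA" line is a hypothetical comparison, not a real model.

```python
def kv_cache_bytes(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch: int = 1,
    bytes_per_value: int = 2,  # fp16 / bf16
) -> int:
    """Bytes needed to cache keys and values during generation.

    The leading factor of 2 counts one key and one value vector per
    KV head, per layer, per token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_value


if __name__ == "__main__":
    GIB = 2**30
    # Published LLaMA 3 8B shape: 32 layers, 8 KV heads, head_dim 128, 8,192-token context.
    gqa = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=8192)
    # Hypothetical: the same model if each of the 32 query heads had its own K/V head.
    mha = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=8192)
    print(f"GQA: {gqa / GIB:.1f} GiB, full multi-head: {mha / GIB:.1f} GiB")
```

At a full 8,192-token context this comes to 1 GiB of cache per sequence with GQA versus 4 GiB without it, on top of roughly 16 GB of fp16 weights.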

5. Gemma 7B

- Developer: Google DeepMind

- Parameters: 7B

- Access: Hugging Face, Kaggle, and Google Cloud Vertex AI

- Open Source: Open weights under Google's Gemma Terms of Use

- Detailed Overview: Gemma 7B is a lightweight open model built from the same research and technology as Google's Gemini models. It performs well at summarization and question answering, and with an 8,192-token context window it fits naturally into retrieval-augmented generation (RAG) pipelines, where relevant passages from large document collections are retrieved and passed to the model. That makes it a strong candidate for industries where contextual precision is of utmost importance. It can run on local hardware or on Google Cloud infrastructure for enterprise-grade reliability and scalability.

- Ideal Use Cases: Legal document analysis, scientific research, enterprise knowledge systems.
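Gemma 7B's context window is 8,192 tokens, so long legal or scientific documents usually need to be split before summarization or RAG indexing. The minimal sketch below splits a token list into overlapping windows. It assumes whitespace-separated words as a stand-in for Gemma's actual tokenizer; in practice you would count real tokens and leave headroom for the prompt and the generated answer.

```python
def chunk_tokens(tokens: list[str], max_len: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of at most `max_len`, sharing `overlap` tokens.

    Overlap keeps a sentence that straddles a boundary visible in both chunks.
    """
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must satisfy 0 <= overlap < max_len")
    step = max_len - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this chunk already reaches the end of the document
    return chunks


if __name__ == "__main__":
    # Whitespace words stand in for real model tokens (an assumption).
    document = " ".join(f"w{i}" for i in range(20))
    for chunk in chunk_tokens(document.split(), max_len=8, overlap=2):
        print(chunk[0], "...", chunk[-1], f"({len(chunk)} tokens)")
```

The resulting chunks can be summarized independently and the summaries combined, or embedded and indexed so a RAG pipeline retrieves only the relevant ones.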

6. Qwen 1.8B

- Developer: Alibaba

- Parameters: 1.8B

- Access: Hugging Face, Alibaba Cloud

- Open Source: Open weights (license terms vary by release; check the model card for commercial use)

- Detailed Overview: Alibaba's Qwen 1.8B is a compact multilingual model suited to enterprise settings. It performs well in e-commerce product search, recommendation systems, and conversational AI, with strong fluency in Chinese and English. Its small size enables real-time responses in global customer service applications.

- Ideal Use Cases: E-commerce platforms, multilingual customer support, global business tools.

7. Yi-1.5 6B

- Developer: 01.AI (China)

- Parameters: 6B (the smallest Yi-1.5 release; 9B and 34B versions also exist)

- Access: Hugging Face

- Open Source: Apache 2.0

- Detailed Overview: Yi-1.5 6B is a bilingual (English and Chinese) open-weight model small enough to self-host, including on-premises. Its modest compute needs make it a good fit for industries where data sovereignty and regulatory compliance are non-negotiable, because text conversation and structured output tasks can run without sending data to a third-party cloud.

- Ideal Use Cases: Healthcare, fintech, and government AI applications.

8. Phi-1.5

- Developer: Microsoft

- Parameters: ~1.3B

- Access: Hugging Face and Azure AI

- Open Source: Yes, open weights under the MIT license

- Detailed Overview: Phi-1.5, the predecessor to Phi-2 in Microsoft's Phi series, remains a good fit for light AI workloads. It is well suited to IoT devices and embedded smart systems where fast responses are essential. Despite its compact size, it provides competitive accuracy for short-text Q&A, summarization, and structured data generation.

- Ideal Use Cases: IoT devices, wearable AI assistants, smart appliances.

9. Zephyr

- Developer: Hugging Face (H4 team)

- Parameters: ~7B

- Access: Hugging Face

- Open Source: MIT license

- Detailed Overview: Zephyr is a conversational model that Hugging Face fine-tuned from Mistral 7B, using distilled supervised fine-tuning followed by direct preference optimization (DPO). The result is context-rich, natural dialogue, which suits customer service bots, AI tutors, and interactive apps. Being open source, it is easy for developers to modify and integrate.

- Ideal Use Cases: AI tutoring, customer service chatbots, educational tools.
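Zephyr's developers aligned it with direct preference optimization (DPO): the model is trained to prefer the chosen response over the rejected one in each preference pair, measured relative to a frozen reference copy of itself. The sketch below computes the standard per-pair DPO loss from precomputed log-probabilities. The default `beta = 0.1` is an assumption, and real training computes these log-probabilities from batched model forward passes.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Direct Preference Optimization loss for a single preference pair.

    Each argument is the summed log-probability of a full response under
    the model being trained (policy) or the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) = softplus(-margin), computed in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Identical policy and reference: margin 0, so the loss is log 2.
    print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))
    # Policy favors the chosen answer more than the reference does: loss falls.
    print(round(dpo_loss(-8.0, -12.0, -10.0, -12.0), 4))
```

Minimizing this loss pushes the policy to raise the chosen response's probability relative to the rejected one, while the reference terms keep it from drifting arbitrarily far from the starting model.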

10. OpenELM

- Developer: Apple

- Parameters: 270M, 450M, 1.1B, and 3B variants

- Access: Hugging Face

- Open Source: Open weights and training code under Apple's own license

- Detailed Overview: OpenELM is a family of compact open language models from Apple, designed with efficient on-device use in mind. It uses a layer-wise scaling strategy to allocate parameters efficiently across layers. Its smaller variants suit offline applications on mobile devices and wearables, where battery life and memory are limited.

- Ideal Use Cases: Wearable AI, offline travel assistants, mobile productivity tools.

11. MPT-7B

- Developer: MosaicML (now part of Databricks)

- Parameters: 7B

- Access: Hugging Face

- Open Source: Apache 2.0

- Detailed Overview: MPT-7B is a highly customizable small LLM trained on 1 trillion tokens of text and code and released for commercial use. It is well suited to retrieval-augmented generation pipelines, document summarization, and automated report generation.

- Ideal Use Cases: Enterprise automation, RAG knowledge engines, and document-heavy workflows.
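In a RAG pipeline, MPT-7B (or any model in this list) only generates text; a separate retrieval step picks relevant passages and places them in the prompt. The minimal sketch below shows that retrieval-and-prompt step. It assumes bag-of-words cosine similarity as a stand-in for a real embedding model and vector store, and it leaves out the final call to the model because serving setups vary.

```python
import math
import re
from collections import Counter


def _bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = _bag_of_words(query)
    return sorted(docs, key=lambda d: _cosine(q, _bag_of_words(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Assemble a grounded prompt to send to the language model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "Invoices are due within 30 days of issue.",
        "The office is closed on public holidays.",
        "Late invoices incur a 2% monthly fee.",
    ]
    print(build_prompt("When are invoices due?", docs))
```

Swapping `_bag_of_words` and `_cosine` for a real embedding model and vector index changes retrieval quality but not the overall shape of the pipeline.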

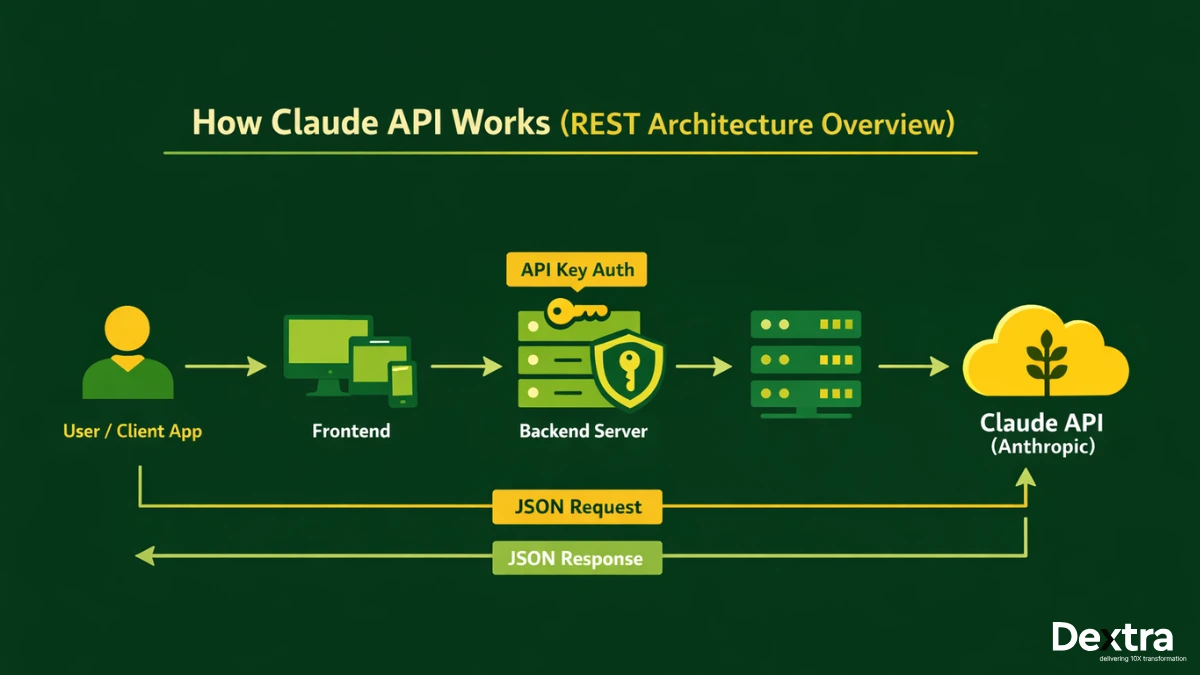

12. Claude Haiku

- Developer: Anthropic

- Parameters: Not disclosed by Anthropic

- Access: Anthropic API

- Open Source: No (proprietary)

- Detailed Overview: Claude Haiku is the fastest and most compact tier of Anthropic's Claude model family, built with the same safety focus as its larger siblings. It suits compliance-heavy industries where safe, predictable AI behavior is paramount.

- Ideal Use Cases: Finance, healthcare, and education.
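Claude Haiku is reached through Anthropic's Messages API rather than downloaded. The minimal standard-library sketch below builds one request; the endpoint, headers, and body fields follow Anthropic's published API reference, and `claude-3-haiku-20240307` is one Haiku model ID, so check Anthropic's current model list before relying on it. The official `anthropic` Python SDK is the more usual route in production.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_haiku_request(
    prompt: str,
    api_key: str,
    model: str = "claude-3-haiku-20240307",
    max_tokens: int = 256,
) -> urllib.request.Request:
    """Build (but do not send) a Messages API request for a Haiku model."""
    body = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and join the text blocks of the model's reply."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.load(resp)
    return "".join(
        block["text"] for block in reply["content"] if block.get("type") == "text"
    )


# Usage (requires network access and a real key):
#   import os
#   req = build_haiku_request("Summarize our refund policy.", os.environ["ANTHROPIC_API_KEY"])
#   print(send(req))
```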

13. Vicuna 7B

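- Developer: LMSYS (community research project)

- Parameters: 7B

- Access: Hugging Face

- Open Source: Non-commercial license for early versions; v1.5 follows the Llama 2 Community License

- Detailed Overview: Vicuna 7B is a chat model that LMSYS fine-tuned from LLaMA (Llama 2 for v1.5) on user-shared ChatGPT conversations collected from ShareGPT, giving it human-like conversational ability. It serves as a good foundation for interactive AI projects.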

- Ideal Use Cases: Educational bots, conversational R&D, and community-driven AI projects.

14. NanoGPT

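- Developer: Andrej Karpathy, with open-source contributors

- Parameters: Variable (custom-trainable)

- Access: GitHub

- Open Source: MIT license

- Detailed Overview: NanoGPT is a minimal, readable codebase for training and fine-tuning GPT-style models. It can reproduce GPT-2 (124M) given sufficient GPUs, and it lets developers train small models from scratch, such as its character-level Shakespeare example, on modest hardware. That makes it ideal for education and for experiments on domain-specific datasets.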

- Ideal Use Cases: AI learning, research experiments, niche AI models.
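Training a tiny model from scratch with nanoGPT starts with data preparation; its character-level Shakespeare example builds a vocabulary from the distinct characters in the corpus and maps text to integer ids. The sketch below re-creates that idea in plain Python. It is not nanoGPT's actual script, which also writes the ids to binary files for the training loop.

```python
def build_char_vocab(text: str) -> tuple[dict[str, int], dict[int, str]]:
    """Map each distinct character to an integer id, and back."""
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos


def encode(s: str, stoi: dict[str, int]) -> list[int]:
    """Convert a string to a list of character ids."""
    return [stoi[c] for c in s]


def decode(ids: list[int], itos: dict[int, str]) -> str:
    """Convert a list of character ids back to a string."""
    return "".join(itos[i] for i in ids)


def train_val_split(ids: list[int], train_frac: float = 0.9) -> tuple[list[int], list[int]]:
    """Hold out the tail of the data for validation."""
    n = int(len(ids) * train_frac)
    return ids[:n], ids[n:]


if __name__ == "__main__":
    text = "to be or not to be"
    stoi, itos = build_char_vocab(text)
    ids = encode(text, stoi)
    train, val = train_val_split(ids)
    print(len(stoi), "distinct characters;", len(train), "train ids,", len(val), "val ids")
```

A character-level vocabulary keeps the model's embedding table tiny, which is one reason such models train quickly on modest hardware, at the cost of longer sequences than a subword tokenizer would produce.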

15. RedPajama-INCITE

- Developer: Together Computer & collaborators

- Parameters: 3B and 7B variants

- Access: Hugging Face

- Open Source: Apache 2.0

- Detailed Overview: RedPajama-INCITE is an open-data, transparent small LLM trained on the RedPajama dataset, an open reproduction of the LLaMA training data. It provides balanced performance in conversational AI, summarization, and reasoning, with complete transparency about its training data sources.

- Ideal Use Cases: Academic research, transparent AI projects, summarization tools.

Why Choose Dextralabs for Small Language Model Solutions?

At Dextralabs, we empower companies to leverage the full potential of small language models (SLMs) by creating AI that is lightweight, fast, affordable, and optimized for real-world applications. Our process goes beyond theory, coupling deep technical knowledge with hands-on product delivery so you receive AI solutions that are actionable from day one.

Here’s why companies in various industries choose us to implement top-notch SLM AI solutions:

1. Expertise in Lightweight AI Model Integration

- We specialize in integrating small LLM models for real-time performance, edge computing, and resource-constrained environments.

- Our engineers understand the unique optimization needs of SLM AI, from reducing model latency to compressing memory footprints with minimal loss of accuracy.

- Whether you’re deploying on mobile apps, internal enterprise tools, or embedded IoT systems, we fine-tune every model for seamless user experiences and maximum efficiency.
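Compressing a model's memory footprint usually means quantization: storing weights as low-bit integers plus a scale factor. The minimal sketch below shows the simplest variant, symmetric per-tensor int8 quantization on a plain Python list. Production toolchains typically use per-channel or per-group scales and 4-bit formats to keep accuracy loss small, so treat this as an illustration of the idea rather than a deployment recipe.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization.

    Stores one float scale plus one signed 8-bit integer per weight, so
    storage drops to roughly a quarter of fp32 (half of fp16).
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximately reconstruct the original weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.82, -1.27, 0.05, 0.0, 0.4]
    q, scale = quantize_int8(w)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(w, restored))
    print("int8 values:", q)
    print(f"scale={scale:.5f}, worst-case error={worst:.5f}")
```

Each weight's rounding error is at most half the scale, so a single large outlier weight inflates the error for the whole tensor; that is the main reason practical schemes use finer-grained scales.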

2. Custom-Built SLM Pipelines for Startups and SMEs

- We don’t believe in one-size-fits-all AI. Every SLM solution we build is co-created with your team to align perfectly with your product’s lifecycle and growth strategy.

- From prototype to production, our pipelines are designed to scale effortlessly as your business grows.

- We factor in future model updates, integration with existing tools, and ongoing maintenance so your investment keeps delivering value.

3. Privacy-Focused, On-Prem & Air-Gapped Deployments

- For industries where data privacy is non-negotiable, we offer on-device inference and fully air-gapped deployments, ensuring sensitive data never leaves your environment.

- We work extensively with regulated sectors like healthcare, fintech, and defense, where compliance and security are paramount.

- Our philosophy is “No vendor lock-in”; your models remain under your full control, giving you long-term flexibility and independence.

4. Multi-Region Delivery Excellence (USA, UAE, Singapore)

- Our delivery footprint spans three regions (the USA, the UAE, and Singapore), enabling us to serve AI-first startups and global enterprises with the same level of precision.

- We adapt to local compliance requirements while maintaining global AI development standards, ensuring smooth, legally compliant deployments anywhere.

- Our regional expertise allows faster deployment timelines and reduced operational friction.

5. Proven Track Record in Low-Latency LLM Use Cases

- We’ve successfully built and deployed low-latency AI applications ranging from RAG chatbots to internal copilots, knowledge engines, and autonomous agents.

- Our deep understanding of small LLMs means we can deliver the speed, responsiveness, and reliability your users expect.

- By optimizing inference pipelines and model architecture, we enable faster time-to-market and higher adoption rates.

6. AI Consulting That’s Product-Led, Not Just Theoretical

- At Dextralabs, we don’t just consult; we build. Our AI experts are hands-on engineers who work directly on your codebase, product architecture, and deployment pipelines.

- We ensure that every recommendation is backed by real implementation experience, not just strategy slides.

- This approach eliminates the gap between planning and execution, saving you time and reducing project risk.

7. Seamless DevOps & MLOps Integration

- We deploy small language models using robust CI/CD pipelines, containerized with Docker, orchestrated on Kubernetes (K8s), and monitored using enterprise-grade observability tools.

- Our MLOps best practices ensure continuous improvement; models stay updated, optimized, and reliable over time.

- From version control to scalable deployments, we build AI infrastructure that’s production-ready from day one.

Conclusion

In 2025, small language models are no longer a niche choice; they are shaping the future of AI. By combining speed, efficiency, flexibility, and lower compute demands, SLM AI lets companies innovate without the constraints of massive models.

From Microsoft Phi-2 to RedPajama-INCITE, the 15 small language models we've highlighted show that compact architectures can deliver strong performance for industries ranging from healthcare to fintech and from education to e-commerce.

However, selecting the right model is only the beginning. To unlock its full potential, you need a seasoned partner who knows how to integrate, optimize, and scale small language models according to your specific business requirements.

That’s where Dextralabs stands out, blending technical expertise, privacy-first deployment strategies, and product-led AI consulting to deliver AI that works, scales, and lasts. Whether you need on-device AI assistants, low-latency copilots, or custom SLM pipelines, Dextralabs ensures your AI vision becomes a high-performing reality.

The future of AI is small, smart, and unstoppable, and it’s ready for you to build it.