In just a few years, Large Language Models (LLMs) have transformed software development. Not long ago, LLMs could only offer autocomplete suggestions for a few lines of code. They have since evolved into full coding copilots that can scaffold entire projects, debug errors, and refactor legacy systems.

Coding-focused LLMs have fundamentally changed how developers work. Whether you are a solo developer building a startup project or a large enterprise managing millions of lines of production code, LLMs can improve the speed, accuracy, and productivity of your coding work. In GitHub’s 2023 developer survey, 92% of U.S.-based developers reported using AI coding tools, a sign that coding assistants and LLMs have become mainstream tools in software development.

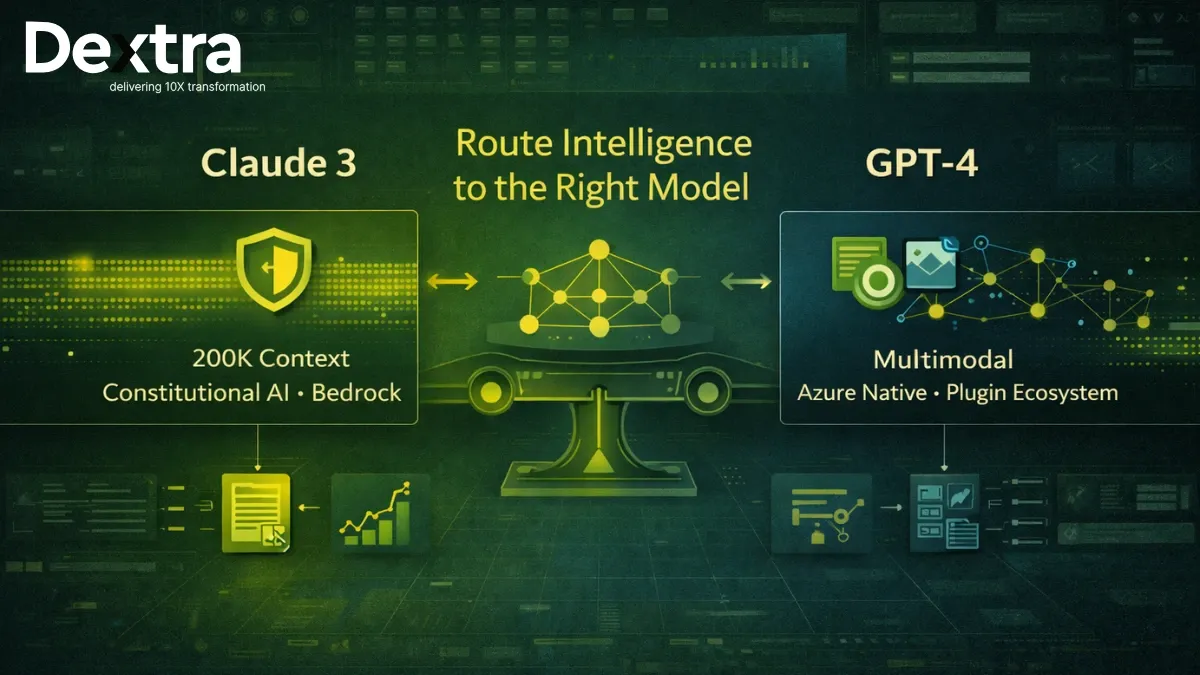

In 2026, the question most teams and businesses are asking is not whether to use an LLM, but which LLM is best for coding given their needs. Finding the best coding LLM comes down to context length, IDE integration, inference speed, privacy, deployment options (local vs. cloud), and several other factors. The field now has plenty of players, including OpenAI, Anthropic, Google, and powerful open-source competitors such as Meta’s Code Llama, leaving developers with more choices than ever.

That is where Dextralabs comes in. As a trusted partner for enterprises and developer teams, Dextralabs enables businesses to use, customize, and scale LLMs for coding workflows, whether deployed in the cloud, in a hybrid setup, or fully privately. Search data reflects this demand: queries such as “best LLM for coding in 2026” and “which LLM is best for coding” are rising quickly, showing that developers are actively looking for coding assistance for their projects.

This guide covers the best LLMs for coding in 2026 and walks through each one’s strengths, weaknesses, and use cases, so you can make the right choice for your team.

What Makes an LLM Ideal for Coding?

Not all Large Language Models are the same. Some excel at generating natural language but struggle with complex code generation, while others are built for programming workflows. The best LLMs for coding in 2026 stand out by excelling in several key technical areas:

Key Technical Considerations

- Multi-Language Support – The best coding LLMs support many languages, including Python, JavaScript, Java, C++, and Go. Most modern enterprises run polyglot systems, so this flexibility is essential.

- Deep Code Reasoning – Beyond generating syntax, the model should understand architecture, logic, and dependencies across large projects.

- Long Context Understanding – An LLM with a long context window (128K–200K tokens) can analyze large portions of a codebase, or a smaller codebase in full, without losing coherence.

- Real-Time Inline Suggestions – Autocompletion, refactoring, and debugging inside the IDE let developers get help with little to no friction.

- IDE Integrations – Compatibility with environments such as VS Code, JetBrains IDEs, and Android Studio lets developer teams adopt generative AI broadly without friction.

- Model Size vs Inference Latency – Larger models are generally more accurate but slower and more expensive to run, so balancing size against inference speed is vital for real-world usage.

- Local vs. Cloud Deployment – Cloud APIs are quick to adopt and may suit many startups, but enterprises with strict data-privacy and compliance requirements often prefer local deployment.

- Cost-Performance Trade-off – API-based solutions can become expensive at scale, while open-source or locally deployed models may bring long-term savings.

- Privacy & Enterprise Control – For organizations handling sensitive IP, full control over LLM deployment, whether on-premise or hybrid, is a must.

That’s exactly where Dextralabs provides value. By deploying secure, private LLMs on-premise or in a hybrid cloud setup, Dextralabs ensures that enterprises not only choose the best coding LLM but also deploy it effectively, with built-in privacy, scalability, and performance optimization.
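To make the long-context consideration concrete, the sketch below estimates whether a set of source files fits into a model’s context window. It assumes the common rule of thumb of roughly 4 characters per token; real tokenizers vary by model and language, so treat the result as a ballpark. The function names, file suffixes, and the 4,096-token output reserve are illustrative assumptions, not any vendor’s API.

```python
from pathlib import Path

# Rule-of-thumb assumption: ~4 characters per token. Real tokenizers differ by model.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a string (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(
    texts: list[str], context_window: int, reserve_for_output: int = 4_096
) -> bool:
    """Return True if all texts, plus room for the model's reply, fit in the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_for_output <= context_window


def load_sources(root: str, suffixes: tuple[str, ...] = (".py", ".js", ".java")) -> list[str]:
    """Read every source file under root whose suffix is in suffixes."""
    return [
        p.read_text(encoding="utf-8", errors="ignore")
        for p in Path(root).rglob("*")
        if p.is_file() and p.suffix in suffixes
    ]


if __name__ == "__main__":
    # 600,000 characters is roughly 150K tokens under the 4-chars-per-token assumption.
    fake_repo = ["x" * 600_000]
    print(fits_in_context(fake_repo, context_window=128_000))  # False
    print(fits_in_context(fake_repo, context_window=200_000))  # True
```

Reserving part of the window for the reply matters because the prompt and the response share the same token budget.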

Best LLMs for Coding in 2026: Quick Overview

| Model | Type | Best For | Context Length | Notes |
|---|---|---|---|---|
| GPT-4.1 (API) | Cloud (Closed) | Deep reasoning, multi-language efficiency | ~1M | Excellent multi-language reasoning, available in GitHub Copilot, best for back-end logic and refactoring, but costly and API-only. |
| Claude 3.5 Sonnet | Cloud (Closed) | Large codebase understanding, full-stack workflows | 200K | 200K context window, great for massive codebases and documentation, ideal for enterprises, but expensive and closed-source. |
| Gemini 1.5 Pro | Cloud (Closed) | Java/C++, heavy workflows, system-level and Android development | 128K standard, up to 2M | Strong for system-level programming (C++/Java), diagram-to-code tasks, and backend modernization, though costly and inconsistent at times. |
| Code Llama 70B | Open Source | Code generation, private/local deployment | 100K+ | Meta-backed open-source model, powerful in code generation and debugging, highly customizable, but demands heavy computing resources. |
| DeepSeek Coder | Open Source | Fast, affordable, flexible coding tasks | 16K–32K | Budget-friendly option for startups and small teams, good at debugging and documentation, though limited in context length. |
| Mistral Codestral | Local (Open) | Fast, lightweight inference, edge deployment | 32K | Lightweight and low-latency, ideal for offline or resource-constrained setups, but less suited for large enterprise projects. |
| Qwen 2.5 Coder | Local (Open) | Custom fine-tuning for dev workflows | 32K–64K | Highly customizable for enterprise workflows, great for building domain-specific copilots, but requires dedicated ML pipelines. |

Cloud-Based Coding LLMs

1. GPT-4.1 (API only)

GPT-4.1 is the next step in the evolution of OpenAI’s models, with deep reasoning and multi-language capabilities. It is one of the models powering GitHub Copilot, one of the most widely adopted coding assistants.

- Strengths: Strong at backend logic, code reviews, bug detection, and refactoring, and well suited to complex, multi-language applications.

- Weaknesses: Expensive at scale, available only through an API, and raises privacy concerns for sensitive projects.

- Dextralabs Tip: GPT-4.1 is a good choice for TDD teams that want rapid prototyping through APIs, but it may not suit enterprises with strict IP-protection rules.

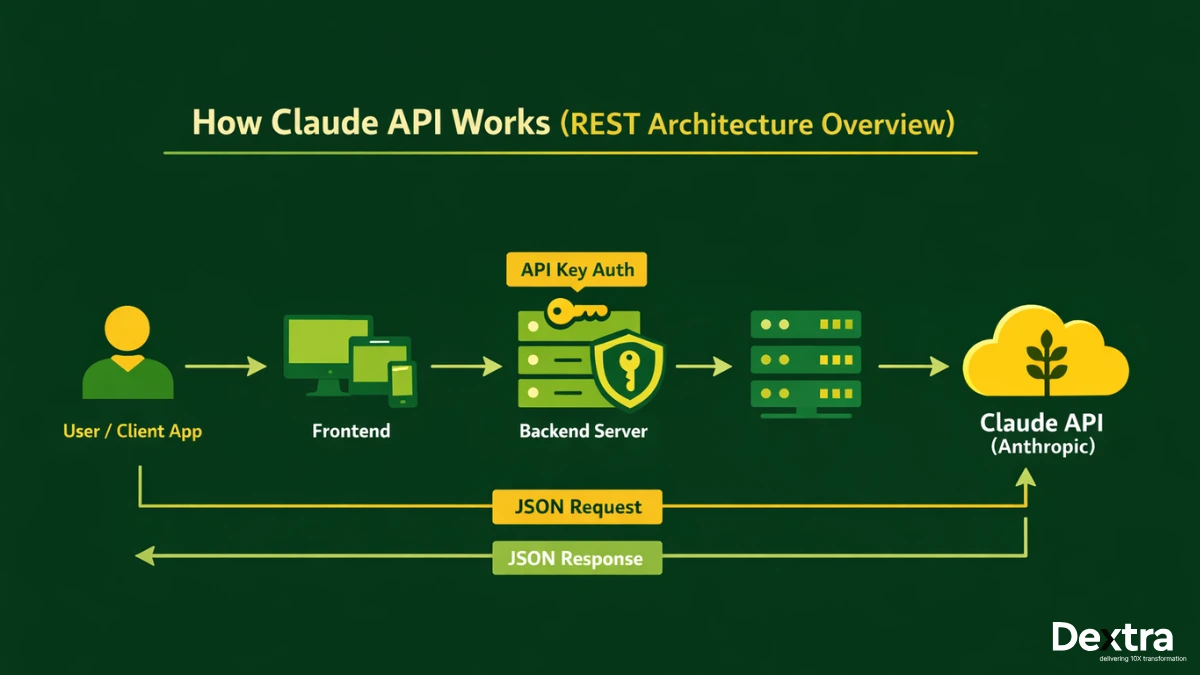

2. Claude 3.5 Sonnet

Anthropic’s Claude 3.5 Sonnet is a developer favorite because its 200K context window makes working on massive codebases easier. It excels at full-stack development, documentation, and cross-language translation.

- Strengths: Handles enterprise-size repositories and long dependency chains without losing context.

- Weaknesses: Like GPT-4.1, it is closed-source, and its pricing can add up at scale.

- Community Pick: Developers often say that “Claude reads your intent better than other tools,” making it a preferred coding assistant for tasks described in natural language.

3. Gemini 1.5 Pro

Google’s Gemini 1.5 Pro shines in C++, Java, and Android workflows, making it a strong option for system-level development. A distinctive strength is diagram-to-code work, where visual logic diagrams are translated into working code.

- Strengths: Legacy code modernization, enterprise backends, and Android apps.

- Weaknesses: Inconsistent results across sessions; pricing comparable to GPT-4.1 and Claude.

- Use Case: Companies with Java-heavy back ends or teams doing system-level work.

Best Open Source & Local LLMs for Coding

Open-source and local LLMs for coding are gaining popularity among developers and enterprises for their privacy, control, and cost efficiency. They let teams run code generation securely on private infrastructure, fine-tune models for niche workflows, and avoid recurring cloud expenses. Here are some popular options:

1. Code Llama 70B

Code Llama 70B has become one of the leading open-source LLMs for coding. It delivers highly contextual, accurate code completion, strong reasoning, and fine-tuning flexibility across multiple programming languages, making it a versatile choice for most developers. Because its weights are openly available, teams also have full control over deployment and customization.

At Dextralabs, we help teams deploy Code Llama on private cloud setups or local GPU clusters with ease, maximizing performance and data security.
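When sizing local hardware, a useful first check is how much memory the model weights alone need: parameter count times bytes per parameter. The sketch below does that arithmetic for a 70B-parameter model such as Code Llama 70B. It deliberately ignores the KV cache, activations, and framework overhead, which all add to the total, so treat the figures as lower bounds rather than hardware recommendations.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for model weights only, in decimal gigabytes (1 GB = 1e9 bytes).

    Excludes KV cache, activations, and runtime overhead, so real usage is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params_70b = 70e9  # a 70B-parameter model
    for precision in ("fp16", "int8", "int4"):
        print(f"{precision}: ~{weight_memory_gb(params_70b, precision):.0f} GB")
    # fp16: ~140 GB, int8: ~70 GB, int4: ~35 GB
```

This is why quantization (int8 or int4) is often what makes a 70B model practical on a single server rather than a multi-GPU cluster.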

2. DeepSeek Coder

If cost efficiency is top of mind, DeepSeek Coder could be a great option. It was pre-trained on roughly 2 trillion tokens of code and code-related natural language, which lets it handle code completion, bug fixing, and documentation generation well. DeepSeek Coder is a very competitive option for startups and scaling teams that want strong performance without the cost of a proprietary LLM.

3. Mistral Codestral

Mistral Codestral prioritizes low-latency performance. It targets local, edge, and disconnected use, making it useful for startups and developers with limited infrastructure. Its fast responses suit tight development cycles where iteration speed is critical, without relying on cloud infrastructure.

4. Qwen 2.5 Coder

Qwen 2.5 Coder is optimized for developer workflows and performs strongly in both general-purpose coding and specialized use cases. It is an excellent candidate for fine-tuning on custom codebases, giving enterprises the flexibility to adapt it to internal requirements.

Dextralabs provides personalized fine-tuning pipelines for Qwen 2.5 through our MLOps suite, allowing teams to align the model closely with their coding stacks and workflows.

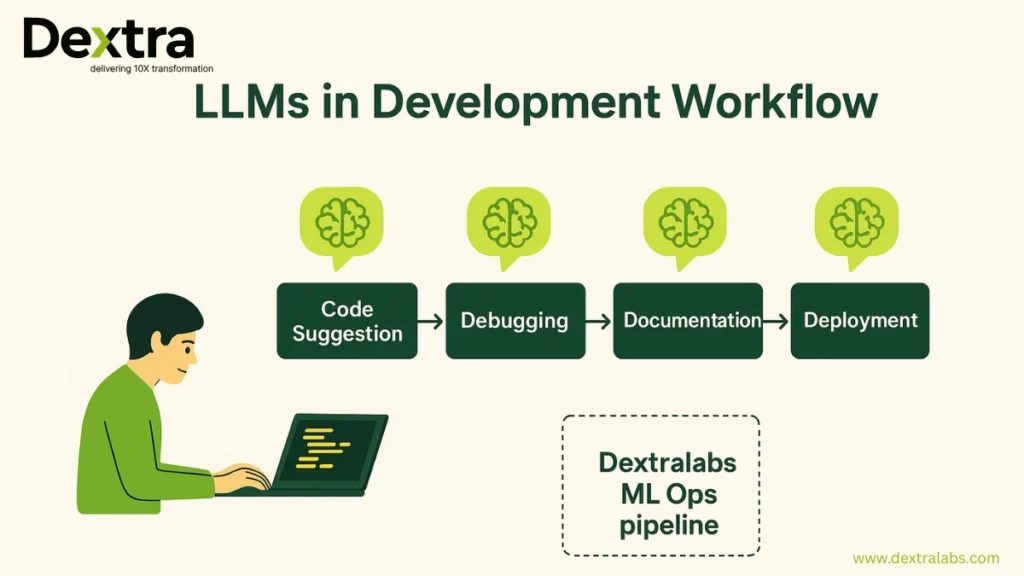

Dextralabs: Powering the Future of Code-Ready LLMs

Dextralabs supports developers, startups, and enterprises that want to work with Large Language Models (LLMs) for coding. Whether your team is experimenting with the best open-source LLM for coding or building a best-in-class enterprise copilot, Dextralabs offers the tools, infrastructure, and expertise to innovate quickly, deploy intelligently, and maintain complete control of your AI.

Our Core Services:

1. LLM Deployment

You can run your LLMs in three ways: locally, when you need to own your data; in the cloud, when you need to scale a new workload quickly; or in a hybrid setup that combines both when speed and compliance both matter. With Dextralabs, you keep flexibility and control without sacrificing one for the other.
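One simple way to implement the hybrid option is a routing rule: requests that touch sensitive code go to a locally hosted model, and everything else may go to a cloud API. The sketch below shows only that decision. The path prefixes, the `Request` type, and the `"local"`/`"cloud"` labels are hypothetical placeholders, not Dextralabs configuration or any provider’s API.

```python
from dataclasses import dataclass

# Hypothetical policy: code under these path prefixes must never leave the premises.
SENSITIVE_PREFIXES = ("payments/", "patient_records/", "secrets/")


@dataclass
class Request:
    file_path: str  # repository-relative path of the file the prompt concerns
    prompt: str


def choose_backend(req: Request) -> str:
    """Return 'local' for sensitive code and 'cloud' otherwise (hypothetical labels)."""
    if req.file_path.startswith(SENSITIVE_PREFIXES):
        return "local"
    return "cloud"


if __name__ == "__main__":
    print(choose_backend(Request("payments/ledger.java", "Refactor this")))  # local
    print(choose_backend(Request("docs/README.md", "Summarize this")))  # cloud
```

The default here is `"cloud"`; a stricter policy would flip it, keeping everything local unless a path is explicitly allow-listed.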

2. Custom Fine-Tuning

Every industry has its own workflows. Dextralabs lets you fine-tune a model on your proprietary codebase, whether that’s fintech Java back ends, healthcare APIs, or IoT firmware, so your LLM understands your context and generates relevant code.
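Fine-tuning on a proprietary codebase usually starts with turning source files into training examples. The sketch below writes one JSON Lines record per file, pairing a hypothetical instruction prompt with the file’s contents. The `prompt`/`completion` field names and the size limit are assumptions; each fine-tuning framework or provider defines its own expected format, so check the one you use.

```python
import json
from pathlib import Path


def build_records(root: str, suffix: str = ".java", max_chars: int = 8_000) -> list[dict]:
    """Create one prompt/completion record per source file under root.

    Files that are empty or longer than max_chars are skipped; the 8,000-character
    limit is an illustrative assumption, not a framework requirement.
    """
    records = []
    for path in sorted(Path(root).rglob(f"*{suffix}")):
        if not path.is_file():
            continue
        code = path.read_text(encoding="utf-8", errors="ignore")
        if not code.strip() or len(code) > max_chars:
            continue
        records.append({
            # Hypothetical instruction template; adapt it to your own task framing.
            "prompt": f"Write the file {path.relative_to(root)} following our internal conventions.",
            "completion": code,
        })
    return records


def write_jsonl(records: list[dict], out_path: str) -> None:
    """Write records as JSON Lines: one JSON object per line."""
    with open(out_path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")
```

For example, `write_jsonl(build_records("backend/"), "train.jsonl")` would produce one training example per `.java` file under a hypothetical `backend/` directory.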

3. Agentic Coding Models

Generic AI assistants designed for everyone are not specific enough for your developer team. Dextralabs builds agentic AI coding copilots that can autonomously analyze repositories, suggest fixes, optimize workflows, and handle multi-step coding tasks, making your development teams more productive.
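At its core, an agentic coding copilot runs a loop: propose a change, check it, and feed the result back until the checks pass or a step budget runs out. The sketch below shows only that control flow. `propose_patch` and `run_tests` are hypothetical callables standing in for a model call and a test runner, and the stubs in the usage example are toy stand-ins, not a real model.

```python
from typing import Callable, Optional


def agent_loop(
    task: str,
    propose_patch: Callable[[str, str], str],  # (task, feedback) -> patch
    run_tests: Callable[[str], tuple[bool, str]],  # patch -> (passed, log)
    max_steps: int = 5,
) -> Optional[str]:
    """Iterate propose -> test -> feedback; return the first passing patch, or None."""
    feedback = ""
    for _ in range(max_steps):
        patch = propose_patch(task, feedback)
        passed, log = run_tests(patch)
        if passed:
            return patch
        feedback = log  # hand the test output back to the model on the next attempt
    return None


if __name__ == "__main__":
    attempts = iter(["return a - b", "return a + b"])  # toy "model" outputs
    result = agent_loop(
        "Fix add(a, b)",
        propose_patch=lambda task, fb: next(attempts),
        run_tests=lambda patch: (patch == "return a + b", "expected 3 for add(1, 2)"),
    )
    print(result)  # return a + b
```

Capping the loop with `max_steps` keeps a stuck agent from consuming tokens indefinitely.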

4. Monitoring & Optimization

AI models are powerful, but they can become very expensive without proper oversight. Dextralabs provides real-time monitoring dashboards that track model performance, latency, and usage, along with methods to optimize costs and maximize output quality.
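Monitoring can start as simply as recording latency and token counts for each request and summarizing them. The sketch below tracks those numbers, reports a nearest-rank p95 latency, and estimates spend. The per-1K-token prices are placeholder parameters, not any provider’s actual rates, and the class is a hypothetical illustration rather than a Dextralabs dashboard API.

```python
from dataclasses import dataclass, field


@dataclass
class UsageTracker:
    """Accumulates per-call latency and token counts for one model endpoint."""

    price_per_1k_input: float  # placeholder rate, e.g. USD per 1K input tokens
    price_per_1k_output: float  # placeholder rate, e.g. USD per 1K output tokens
    latencies_ms: list[float] = field(default_factory=list)
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, latency_ms: float, tokens_in: int, tokens_out: int) -> None:
        """Log one model call."""
        self.latencies_ms.append(latency_ms)
        self.input_tokens += tokens_in
        self.output_tokens += tokens_out

    def p95_latency_ms(self) -> float:
        """95th-percentile latency using the nearest-rank method."""
        if not self.latencies_ms:
            raise ValueError("no calls recorded yet")
        ordered = sorted(self.latencies_ms)
        rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
        return ordered[rank - 1]

    def estimated_cost(self) -> float:
        """Spend implied by the recorded token counts at the configured rates."""
        return (
            self.input_tokens / 1000 * self.price_per_1k_input
            + self.output_tokens / 1000 * self.price_per_1k_output
        )


if __name__ == "__main__":
    tracker = UsageTracker(price_per_1k_input=0.01, price_per_1k_output=0.03)
    tracker.record(420.0, tokens_in=1200, tokens_out=300)
    tracker.record(650.0, tokens_in=800, tokens_out=450)
    print(tracker.p95_latency_ms(), round(tracker.estimated_cost(), 4))
```

Tail latency such as p95 is usually a better guide than the average for inline suggestions, because a few slow responses are what developers notice.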

5. IDE/API Integrations

Development teams shouldn’t have to leave their favorite tools. Dextralabs integrates with IDEs such as VS Code and JetBrains IDEs like IntelliJ IDEA, as well as with CI/CD pipelines when you are ready to roll out AI across your workflow. This lets your AI copilots fit naturally into the way your team already works.

Real-World Use Cases

- DevOps Startup: A DevOps-centric startup worked with Dextralabs to deploy DeepSeek Coder locally. Thanks to enterprise-grade token gating and access controls, they achieved airtight security while allowing their team to generate, test, and deploy scripts more quickly—without ever transmitting their sensitive code to the cloud.

- Fintech Company: A rapidly growing fintech company used Dextralabs’ MLOps pipelines to fine-tune Code Llama on its proprietary Java backend systems. The result was a 37% increase in developer velocity, with faster bug resolution, faster feature rollout, and reduced tech debt.

Cloud vs Local LLM for Coding: Which One to Choose?

| Feature | Cloud LLMs (GPT-4.1, Claude, Gemini) | Local/Open-Source LLMs (Code Llama, Qwen, DeepSeek) |
|---|---|---|
| Cost | Higher ongoing cost due to API pricing; affordable for small operations but pricey at scale. | Higher upfront cost (GPUs/servers) but lower marginal cost per request, so it can become cheaper over time at high volume. |
| Privacy & Security | Your data passes through the provider and you have limited control, which can cause compliance issues in regulated industries such as finance and healthcare. | You have full enterprise control and your data stays within your environment, which makes GDPR/HIPAA/SOC 2 compliance easier to achieve. |
| Customization | General-purpose models with limited fine-tuning options, constrained by the provider. | Highly customizable through fine-tuning and training on domain-specific data, making models context-aware for proprietary workflows. |
| Deployment & Scalability | Instant deployment via APIs with no infrastructure to manage, but you depend on the vendor’s uptime and policies. | Requires initial infrastructure setup (GPUs, monitoring, scaling) and significant upfront work, but provides scalable service and independence in the long run. |
| Best Use Cases | Rapid prototyping; hackathons/MVPs | Enterprise-scale apps; high-volume, cost-sensitive usage |
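The cost row above comes down to a break-even calculation: a local deployment pays off once the API fees it replaces have covered the upfront hardware cost plus local running costs. The sketch below computes that point in whole months. Every figure in the usage example is a made-up placeholder to show the arithmetic, not a benchmark or a price quote.

```python
import math
from typing import Optional


def breakeven_months(
    upfront_cost: float, local_monthly_cost: float, api_monthly_cost: float
) -> Optional[int]:
    """Smallest whole number of months after which local total cost <= API total cost.

    Local total after m months: upfront_cost + m * local_monthly_cost
    API total after m months:   m * api_monthly_cost
    """
    monthly_savings = api_monthly_cost - local_monthly_cost
    if monthly_savings <= 0:
        return None  # local never catches up if it costs as much or more per month
    return math.ceil(upfront_cost / monthly_savings)


if __name__ == "__main__":
    # Placeholder figures: $60,000 of GPUs, $2,000/month to run them, $8,000/month in API fees.
    print(breakeven_months(60_000, 2_000, 8_000))  # 10
```

If local running costs match or exceed the API bill, there is no break-even point, which the function signals by returning `None`.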

Best LLMs by Use Case:

Here are the best LLMs for common coding tasks and industry needs:

1. For IDE Integration & Inline Suggestions → GPT-4.1 (Copilot), Claude 3.5

For real-time coding assistance inside your editor (VS Code, JetBrains, etc.), GPT-4.1 (GitHub Copilot) and Claude 3.5 are the best picks.

- With its strong reasoning engine, GPT-4.1 is good at catching bugs, completing functions, and offering smart inline suggestions in multiple languages.

- Developers love Claude 3.5 for its natural understanding of intent, making it especially useful for smoother, human-like suggestions.

- Best for individual developers and teams looking for speed and accuracy in their everyday coding tasks.

2. Full Project Generation → Claude 3.5, Gemini 1.5 Pro

When the task is to generate entire project structures instead of only completing a single function, these models truly shine.

- With its 200K context window, Claude 3.5 can reason over large repositories and keep continuity across files as it generates code.

- Gemini 1.5 Pro is strong for system-level and Android workflows, making it a good fit for scaffolding new projects, migrating legacy code, or building large-scale solutions.

- Best for startups or teams looking to bootstrap new apps quickly, migrate old systems, and build prototypes end-to-end.

3. For Localized Offline Use → Mistral Codestral, Qwen 2.5

When privacy and compliance outweigh the convenience of a cloud-based LLM, a local LLM for coding is the answer. Mistral Codestral and Qwen 2.5 both fit into this category.

- Mistral Codestral is lightweight and built for efficiency, running easily on local hardware.

- Qwen 2.5 was built for multilingual coding, making it useful in a global enterprise team that seeks offline solutions.

- Ideal for enterprises in regulated industries (e.g., finance, healthcare, defense) where data must never leave on-premises systems.

4. Enterprise-Scale Contexts → Claude 3.5, GPT-4.1

Enterprises need models that can comprehend massive codebases, complex workstreams, and support long-term code maintainability.

- Claude 3.5 offers a 200K-token context window and handles large repositories and dependency chains without breaking continuity.

- GPT-4.1 excels at reasoning across multi-language, multi-module enterprise applications.

- Best for large organizations that need top-tier reliability, documentation, and scalability, including across global teams.

5. For Affordable OSS Development → Code Llama, DeepSeek Coder

For open-source enthusiasts or cost-sensitive teams, these models provide a strong performance to accessibility ratio.

- Code Llama (by Meta) is versatile and fine-tunable, and it comes in sizes from 7B to 70B parameters, so smaller variants can run on modest hardware. That makes it a reliable go-to for OSS projects.

- DeepSeek Coder provides excellent code generation while staying light on computing power for local deployments, helping developers save on costs.

- Best for indie devs, open-source contributors, and startups who want flexibility without the high API costs.

How to Get Started with the Best Coding LLM via Dextralabs?

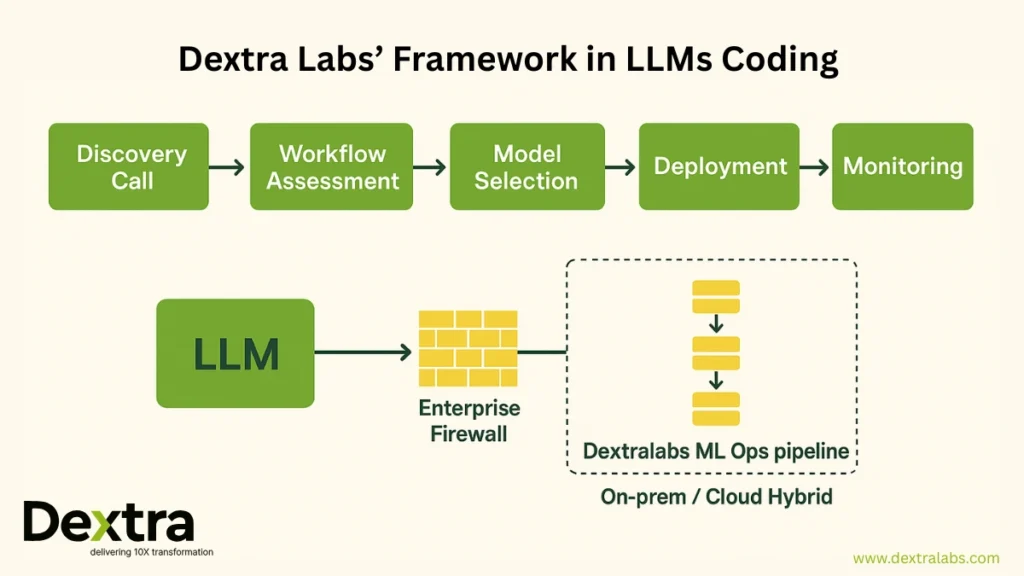

Choosing the best coding LLM does not have to be overwhelming. Dextralabs makes the process easy by starting where it makes sense for your team. It begins with a discovery call in which our experts learn your goals, current development workflows, and existing infrastructure.

Next, we conduct a deep assessment of your coding stack and workflows to identify bottlenecks and opportunities where an LLM can add value, whether that means improving code generation, enhancing debugging, or scaling enterprise applications.

Based on these insights, our team helps you select the right LLM for your use case (e.g., a high-performing cloud model such as GPT-4.1 or Claude 3.5, or a customizable open-source model such as Code Llama, Qwen, or DeepSeek). Once it is selected, we handle deployment and monitoring through our enterprise-grade MLOps platform, ensuring performance, reliability, and cost optimization at every stage.

Finally, we provide custom fine-tuning so that your LLM is adapted to your domain-specific codebase, frameworks, and industry requirements. Your LLM will not just be powerful; it will also be context-aware and optimized for your use case.

Enhance your development pipeline with the best coding LLM for your needs. Talk to Dextralabs today and future-proof your engineering workflows.

Final Verdict: Which LLM Is Best for Coding Right Now?

The best LLM for coding is not simply the most capable model; the choice depends largely on your workflows, infrastructure, priorities, and supporting ecosystem. Here’s the breakdown:

- Overall Best (Cloud): GPT-4.1 & Claude 3.5

Top-tier accuracy, reasoning, and inline coding support for developers who want immediate productivity with minimal setup.

- Best Enterprise & Large Context: Claude 3.5

With its extended context window, Claude 3.5 is ideal for organizations working with large repositories and complex dependencies, where losing track of details is costly.

- Best Open Source: Code Llama 70B

A consistent, flexible choice with a relatively low cost of entry and strong performance on common coding tasks, especially for developers who prefer open-source ecosystems.

- Best Local + Customizable: Qwen 2.5 & Mistral Codestral

Perfect for enterprises that want local deployment under stringent data-privacy and compliance requirements, with room for custom fine-tuning while maintaining high coding efficiency.

- Best Value Pick: DeepSeek Coder

Its low relative cost and flexible performance make it a reasonable alternative for startups and small teams, and it is well optimized for cost efficiency.

Final Takeaway:

If you prioritize speed and convenience, go with cloud-based coding models such as GPT-4.1 or Claude. If you want privacy and customization, look at open-source leaders such as Code Llama or Qwen. Ultimately, the best LLM for coding is the one that fits your project’s unique requirements.