Generative AI is shaking up the way businesses work. According to AmplifAI’s Generative AI Statistics, the generative AI market is expanding rapidly, with an annual growth rate of 46%, and is expected to reach $356 billion by 2030.

From new startups to the biggest names around, companies are putting large language models (LLMs) to work across support desks, marketing, research, and more. You’ve probably heard about ChatGPT’s huge user base. Now, models like Claude and Gemini are gaining ground just as quickly. But here’s the thing: most teams only tap into a slice of these tools’ true power.

Here’s the secret behind AI that actually makes a difference: prompt engineering for ChatGPT, Claude, and Gemini. Asking something simple might get you a passable answer. But with the right prompt, you unlock advanced reasoning and creativity that can really move the needle. Companies that put effort into prompt design, including the big names we work with at Dextralabs AI consulting, see a real change. Think: faster results, better insights, and less wasted time. That’s why LLM consulting services matter.

LLM consulting services can help organizations avoid generic, one-size-fits-all approaches and maximize the value of their AI investments. Whether you’re implementing a new workflow, building out a powerful knowledge base, or optimizing for regulatory compliance, LLM consulting services ensure your prompt engineering strategies are tuned specifically for your business goals.

Let’s break it all down. This guide will show you what makes each model unique, why prompt engineering is essential, and how you can master it with a few best practices.

What is Prompt Engineering?

Prompt engineering is all about shaping your AI’s answers by crafting smarter questions or instructions. Unlike with traditional code, you talk to the model in natural language, sometimes with a bit of structure, sometimes in a simple, direct way. Either way, your words matter.

Imagine you’re working with a very smart assistant. If you just say, “Write about marketing,” you’ll get something so-so. But if you get specific (“Act as a B2B marketing strategist. Write three email subject lines for HR leaders that promise to cut employee turnover by 25%”), you’ll get results you can use.

Types of Prompts:

Here are the main types you’ll run into:

- System Prompts set the ground rules and the AI’s “personality” before the chat starts.

- Role-Based Prompts cast the model in a specific role, like “Act as a financial analyst.”

- Zero-Shot Prompts ask for answers without any examples.

- Few-Shot Prompts give examples so the model knows what kind of answer you want.

- Chain-of-Thought Prompts ask the AI to work through a problem step by step before giving its final answer.
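To make the list above concrete, here is a minimal sketch of each prompt type as plain Python. Nothing here calls a real API. The helper names are made up for illustration, and the "role"/"content" message shape is an assumption borrowed from a common chat convention, so check your provider's documentation before relying on it.

```python
# Illustrative only: plain Python data, no model or API calls.
# All function names are hypothetical helpers for this sketch.

def system_prompt(rules: str) -> dict:
    """Ground rules set before the conversation starts."""
    return {"role": "system", "content": rules}

def role_based(role: str, task: str) -> str:
    """Cast the model in a specific role, then state the task."""
    return f"Act as {role}. {task}"

def zero_shot(task: str) -> str:
    """Just the task, with no examples attached."""
    return task

def few_shot(task: str, examples: list) -> str:
    """Show (input, output) pairs first so the model can copy the pattern."""
    shots = "\n".join(f"Input: {i}\nOutput: {o}" for i, o in examples)
    return f"{shots}\nInput: {task}\nOutput:"

def chain_of_thought(task: str) -> str:
    """Ask for step-by-step reasoning before the final answer."""
    return f"{task}\nThink through the problem step by step, then give the final answer."

print(role_based("a financial analyst", "Summarize Q3 revenue."))
print(few_shot("great service", [("slow delivery", "negative")]))
```

In practice these get combined: a system prompt for the ground rules, a role for the persona, and a few examples when the output format matters.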

Why Does It Matter in Business?

Enterprise AI prompt optimization changes how every team works. Marketing teams brainstorm new ideas, sales gets help with proposals, and research teams speed up analysis. The key difference from home use? Prompts need to be reliable, easy for others to reuse or adapt, and designed with business goals in mind. That’s where the real value lives.
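One simple way to make prompts reusable is to keep them as shared templates instead of retyping them. The sketch below uses Python's standard `string.Template` and reuses the subject-line prompt from earlier. The template name and field names are assumptions chosen for illustration, not a prescribed format.

```python
from string import Template

# Hypothetical shared template; the field names are illustrative.
SUBJECT_LINE_PROMPT = Template(
    "Act as a $role. Write $count email subject lines for $audience "
    "that promise to $benefit."
)

def render(template: Template, **fields: str) -> str:
    # substitute() raises KeyError when a field is missing, so an
    # incomplete prompt fails loudly instead of reaching the model.
    return template.substitute(**fields)

prompt = render(
    SUBJECT_LINE_PROMPT,
    role="B2B marketing strategist",
    count="three",
    audience="HR leaders",
    benefit="cut employee turnover by 25%",
)
print(prompt)
```

A teammate can adapt the same template for a different audience by changing one field, and a forgotten field raises an error rather than producing a half-finished prompt.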

Key Large Language Models in Focus

The adoption of generative AI in enterprises has surged, with usage increasing from 33% in 2023 to 71% in 2024, according to McKinsey’s State of AI report. Let’s look at the big three.

1. ChatGPT (OpenAI)

ChatGPT is known for smooth, friendly conversations. It takes instructions well, and its ecosystem (with plugins and APIs) is huge. It’s great at juggling lots of information and changing topics on the fly.

Strengths: Fluid back-and-forth, wide range of plugins, adaptable to all sorts of tasks.

Limitations: Sometimes makes things up or gives out-of-date info. Double-checking facts is a must.

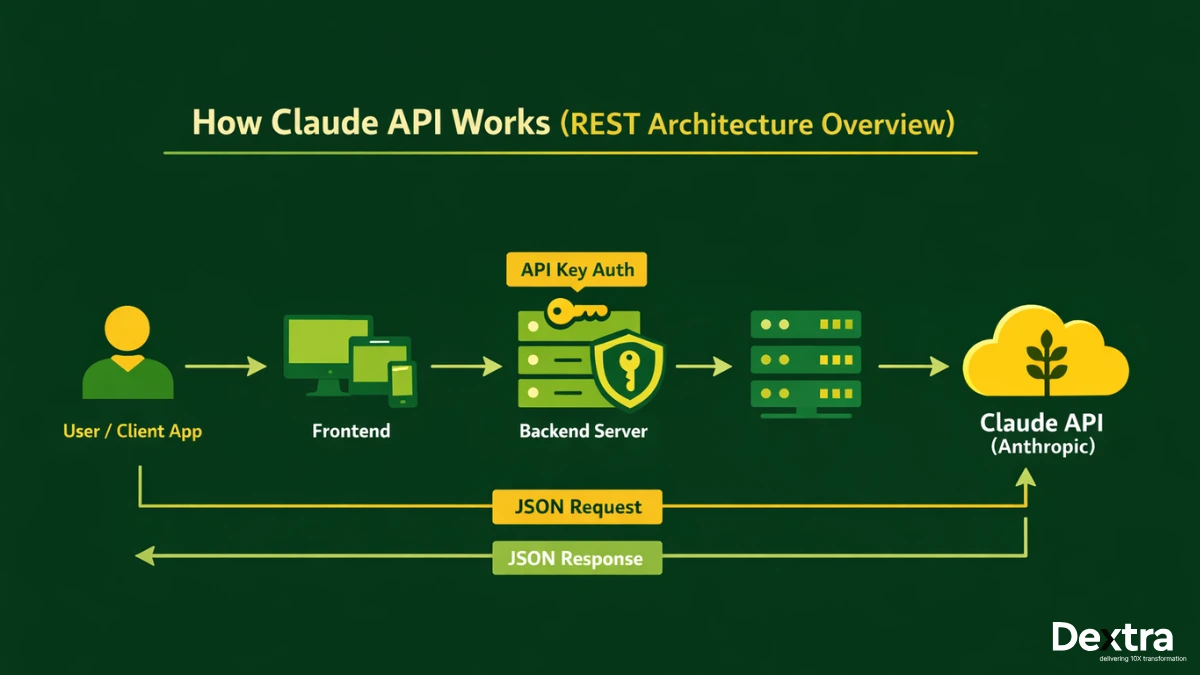

2. Claude (Anthropic)

Claude is all about being safe, helpful, and reliable. It remembers a lot at once, making it excellent for tasks like reading long documents or summarizing big reports.

Strengths: Handles tons of information, sticks to the rules, avoids risky answers, really shines with nuanced tasks.

Limitations: Sometimes less creative or bold, and its ecosystem isn’t as big as ChatGPT’s.

3. Gemini (Google DeepMind)

Gemini isn’t just text; it understands images, charts, and more, and prompts that combine text with visuals make the most of that multimodal design. It plugs right into Google Workspace, so if your company is already using Google tools, Gemini fits right in.

Strengths: Reads and uses both text and visuals, taps Google’s massive ecosystem, and brings in real-time data.

Limitations: Still new, so it’s evolving. The user and developer ecosystem is growing, but not huge yet.

Prompt Engineering: Comparing What Works Across Models

For the best results, you have to use a custom approach with each model.

For ChatGPT

Prompting ChatGPT isn’t the same as prompting Claude. ChatGPT likes clarity and structure. Need a brainstorm? Spell out your ask. Want a summary or a list? Let it know. It’s good to use system prompts and tell the AI what “role” it’s taking. Bullet points or sections work great.

For Claude

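Claude prompt engineering best practices involve detailed background info and careful instructions. Claude can take in large amounts of material, keep the context together, and keep answers safe. State your instructions explicitly and keep them clearly separated from the background material, which leads to more focused and consistent results. For very long documents, Anthropic’s own guidance is to place the document near the top of the prompt and the question after it. The more details and guardrails you give, the better Claude performs.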

If you’re engineering prompts for enterprise use, following these practices means not only specifying output formats but also stating any policy boundaries or ethical guidelines upfront. Doing so helps companies get reliable, on-brand, and safe results from their enterprise AI deployments.
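Here is one way that advice could look in practice: a small helper that states policy boundaries and the output format before the background material and the task. This is a sketch under assumptions. The function name, the XML-style tag names, and the section order are illustrative choices, not an official Claude format.

```python
def build_guarded_prompt(task, background, output_format, policies):
    """Assemble a prompt with policies and output format stated upfront."""
    policy_lines = "\n".join(f"- {p}" for p in policies)
    # Tag names are hypothetical; they only serve to keep sections apart.
    sections = [
        ("policies", policy_lines),
        ("output_format", output_format),
        ("background", background),
        ("task", task),
    ]
    return "\n".join(f"<{name}>\n{body}\n</{name}>" for name, body in sections)

prompt = build_guarded_prompt(
    task="Summarize the report for the compliance team.",
    background="(paste the report text here)",
    output_format="Five bullet points, plain language.",
    policies=["Do not give legal advice.", "Do not include customer names."],
)
print(prompt)
```

Keeping the guardrails in their own section makes them easy to review and update without touching the rest of the prompt.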

For Gemini

Prompt engineering for Gemini works best when you take advantage of its strengths. Mix text with images or charts, use clear formatting, and make requests that tap into real-time data or Google apps. It’s built for multimodal and integrated tasks, so don’t treat it like a basic chatbot.

Quick Table: Prompt Engineering at a Glance

| Model | Prompt Length | Context Handling | Shines At | Strategy |
| --- | --- | --- | --- | --- |
| ChatGPT | 100–500 words | Great for dialogue | Creative, multi-step tasks | Roles + clear asks |
| Claude | 500–2000 words | Holds lots of data | Summaries, deep analysis | Detailed context |
| Gemini | Variable + visual | Multimodal | Data/visual, Google tasks | Structure + media |
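If your team wants these rules of thumb in a form a script can check, the table can be written as a small lookup. This is a minimal sketch: the values are copied from the table above, while the dictionary layout and the `check_length` helper are assumptions made for illustration. The word ranges are guidelines, not hard limits.

```python
# Values copied from the table above; treat them as rules of thumb.
STRATEGIES = {
    "ChatGPT": {"words": (100, 500), "strategy": "Roles + clear asks"},
    "Claude": {"words": (500, 2000), "strategy": "Detailed context"},
    # The table lists Gemini as "Variable + visual", so no range is set.
    "Gemini": {"words": None, "strategy": "Structure + media"},
}

def check_length(model: str, prompt: str) -> str:
    """Compare a prompt's word count with the suggested range for a model."""
    bounds = STRATEGIES[model]["words"]
    if bounds is None:
        return "no fixed range"
    low, high = bounds
    count = len(prompt.split())
    if count < low:
        return "shorter than suggested"
    if count > high:
        return "longer than suggested"
    return "within suggested range"

print(check_length("ChatGPT", "Write three subject lines for HR leaders."))
print(STRATEGIES["Claude"]["strategy"])
```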

Enterprise Impact and Use Cases:

Here’s where things get exciting:

Market Research & Summarization

Custom enterprise AI solutions make it easy to pull meaningful insights from a mountain of data. Imagine taking a hundred-page competitor report and boiling it down to an action plan in minutes. That’s possible with the right prompt, especially when you use Dextralabs LLM prompt engineering services. One client in pharma sped up their market analysis by 400%, just by rethinking how they asked the AI to process information.

Customer Support Automation

The differences between ChatGPT, Claude, and Gemini become clear here. ChatGPT can run a helpful, friendly Q&A. Claude is best for answers that really need to follow company policy. Gemini shines if your support team runs on Google Workspace and needs to blend text and visuals in its responses.

Knowledge Base Assistants

Got a giant FAQ or documentation site? A well-designed prompt can turn that into a smart helper that guides users and answers questions instantly. This is where well-crafted AI prompt design strategies matter most.
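A rough sketch of the idea: pick the FAQ entries that look most relevant, then build a prompt that tells the model to answer only from them. Everything here is an assumption for illustration. The FAQ entries are invented, the function names are hypothetical, and the word-overlap ranking is a deliberately naive stand-in for the search or embedding lookup a production system would more likely use.

```python
def top_entries(question, faq, k=2):
    """Rank FAQ entries by naive word overlap with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        faq.items(),
        key=lambda item: len(q_words & set(item[0].lower().split())),
        reverse=True,
    )
    return ranked[:k]

def build_kb_prompt(question, faq, k=2):
    """Build a prompt that grounds the answer in the selected entries."""
    context = "\n".join(
        f"Q: {q}\nA: {a}" for q, a in top_entries(question, faq, k)
    )
    return (
        "Answer using only the reference entries below. "
        "If they do not cover the question, say you do not know.\n\n"
        f"{context}\n\nUser question: {question}"
    )

# Invented sample data, not from any real knowledge base.
FAQ = {
    "How do I reset my password?": "Use the 'Forgot password' link on the login page.",
    "How do I export a report?": "Open the report and choose Export.",
    "What are your support hours?": "Weekdays, 9 to 5.",
}

print(build_kb_prompt("I need to reset my password", FAQ, k=1))
```

The instruction to admit when the entries do not cover a question is what keeps the assistant from guessing.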

Creative Workflows

Whether you need fresh campaign ideas or copy, these models help, but in different ways. ChatGPT produces fast variations for writers. Claude gives thoughtful, polished content that’s on-brand. Gemini pairs strategy with visuals for marketing teams.

Common Pitfalls to Avoid

Even smart companies slip up:

- Using the same prompt everywhere: One-size-fits-all doesn’t work. Each model is different.

- Ignoring model differences: Don’t treat Gemini like ChatGPT or vice versa.

- No evaluation framework: If you don’t test and measure, you can’t know what’s working. Generative AI consulting services can help set up these systems.

- Skipping compliance: Poorly designed prompts can break rules or leak data. Always keep regulations and company policies in mind.
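An evaluation framework does not have to be elaborate to be useful. The sketch below runs a handful of test cases through a model function and checks each output for phrases that must or must not appear. It is a starting point under assumptions: `fake_model` is a stand-in so the example runs offline, and the case fields (`must_include`, `must_not_include`) are hypothetical names, not a standard.

```python
def evaluate(generate, cases):
    """Run each case through `generate` and record simple pass/fail checks."""
    results = []
    for case in cases:
        output = generate(case["prompt"]).lower()
        missing = [t for t in case.get("must_include", []) if t.lower() not in output]
        leaked = [t for t in case.get("must_not_include", []) if t.lower() in output]
        results.append({
            "name": case["name"],
            "passed": not missing and not leaked,
            "missing": missing,
            "leaked": leaked,
        })
    return results

def pass_rate(results):
    """Fraction of cases that passed every check."""
    return sum(r["passed"] for r in results) / len(results)

# Stand-in for a real model call, so the sketch runs offline.
def fake_model(prompt):
    return "Refunds are available within 30 days of purchase."

CASES = [
    {"name": "mentions refund window", "prompt": "What is the refund policy?",
     "must_include": ["30 days"]},
    {"name": "no internal data", "prompt": "What is the refund policy?",
     "must_not_include": ["internal"]},
    {"name": "gives contact channel", "prompt": "How do I get a refund?",
     "must_include": ["support@"]},
]

results = evaluate(fake_model, CASES)
for r in results:
    print(r["name"], "PASS" if r["passed"] else "FAIL")
print(f"{pass_rate(results):.0%} of cases passed")
```

Swapping `fake_model` for a real call and rerunning the same cases after every prompt change gives you a before-and-after comparison instead of a gut feeling. The forbidden-phrase check is also a simple first line of defense for the compliance point above.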

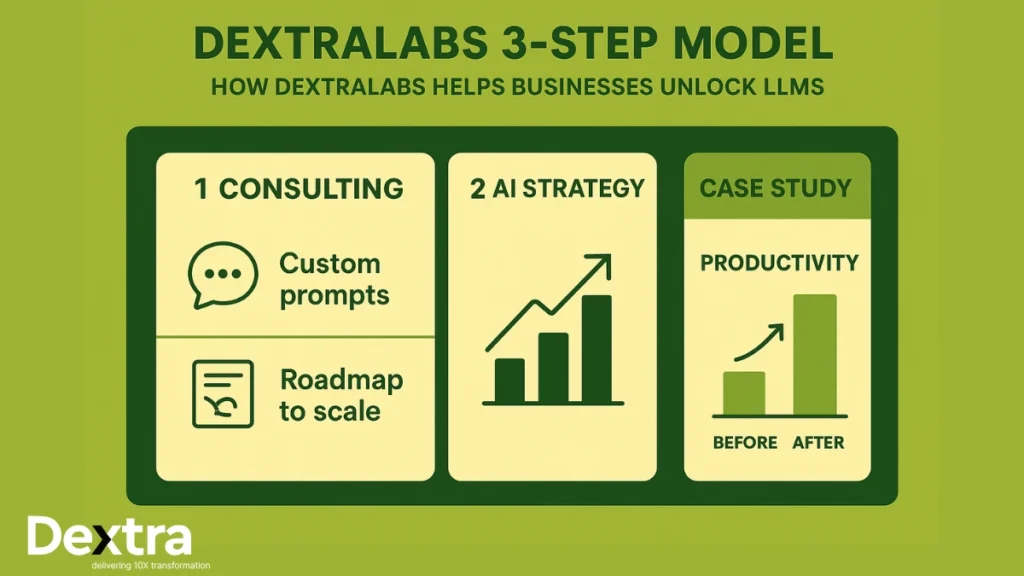

How Dextralabs Helps Businesses Unlock LLMs

We don’t just give advice; we partner with your team from start to finish and make sure AI actually moves the needle. As a trusted prompt engineering company, Dextralabs specializes in creating tailored AI solutions that maximize the potential of ChatGPT, Claude, and Gemini for enterprises.

Consulting That Makes a Difference

Dextralabs AI consulting doesn’t believe in templates or shortcuts. We listen, learn your needs, and design custom enterprise AI solutions that fit real business problems.

Prompt Engineering Done Right

Our experienced prompt engineers create prompts for ChatGPT, Claude, and Gemini that don’t just work; they drive real impact. We blend industry experience with technical know-how so you get results that matter.

Strategic Roadmaps

Scaling from a proof-of-concept to running AI at enterprise scale is tough. We’ll help you plan the journey so you get the ROI you want.

Hands-On Training

Your team should feel confident using AI. Our workshops upskill everyone, from analysts to managers, so prompt engineering becomes second nature.

Client Story: Real-World Results

A financial services client struggled with inconsistent research reports across teams. Our Dextralabs AI consulting team developed role-specific prompts for ChatGPT and Claude. Research quality improved by 250%, while production time decreased by 60%. The standardized approach ensured compliance with industry regulations.

Partner with Dextralabs for AI impact that goes beyond basic implementation. We help enterprises move from one-size-fits-all prompting into tailored AI workflows that drive measurable business results.

Conclusion

The differences between ChatGPT, Claude, and Gemini create unique opportunities for businesses willing to invest in proper prompt engineering. Each model offers distinct advantages when approached with platform-specific strategies.

Model-specific prompt design isn’t just a technical consideration; it’s a competitive advantage. Organizations that master these differences are well placed to outperform competitors relying on generic approaches.

Ready to unlock your AI investment’s full potential? Partner with Dextralabs for AI impact that transforms how your business operates. Our Dextralabs LLM prompt engineering services provide the expertise, training, and ongoing support needed to maximize results from ChatGPT, Claude, Gemini, and emerging AI platforms.

Contact Dextralabs today to discover how proper prompt engineering can revolutionize your business workflows and drive measurable growth through intelligent AI implementation.

FAQs on Prompt Engineering for ChatGPT, Claude & Gemini:

Q1. What exactly is “prompt engineering”? Isn’t it just typing better questions?

Not quite. While anyone can type a question, prompt engineering is about structuring instructions so that ChatGPT, Claude, or Gemini give you precise, useful, and reliable outputs. Think of it as the difference between ordering “some food” versus asking for “a medium thin-crust Margherita pizza with extra olives, delivered before 9 PM.” The clarity changes the outcome.

Q2. Why do I need different prompting strategies for ChatGPT, Claude, and Gemini?

Because each LLM has its own “personality” and design philosophy.

– ChatGPT is like a smart, chatty colleague who thrives on variety.

– Claude is the meticulous, thoughtful researcher who handles context like a pro.

– Gemini is the futuristic multitasker — juggling text, images, and data in one go.

If you talk to them all the same way, you’re leaving a lot of power unused.

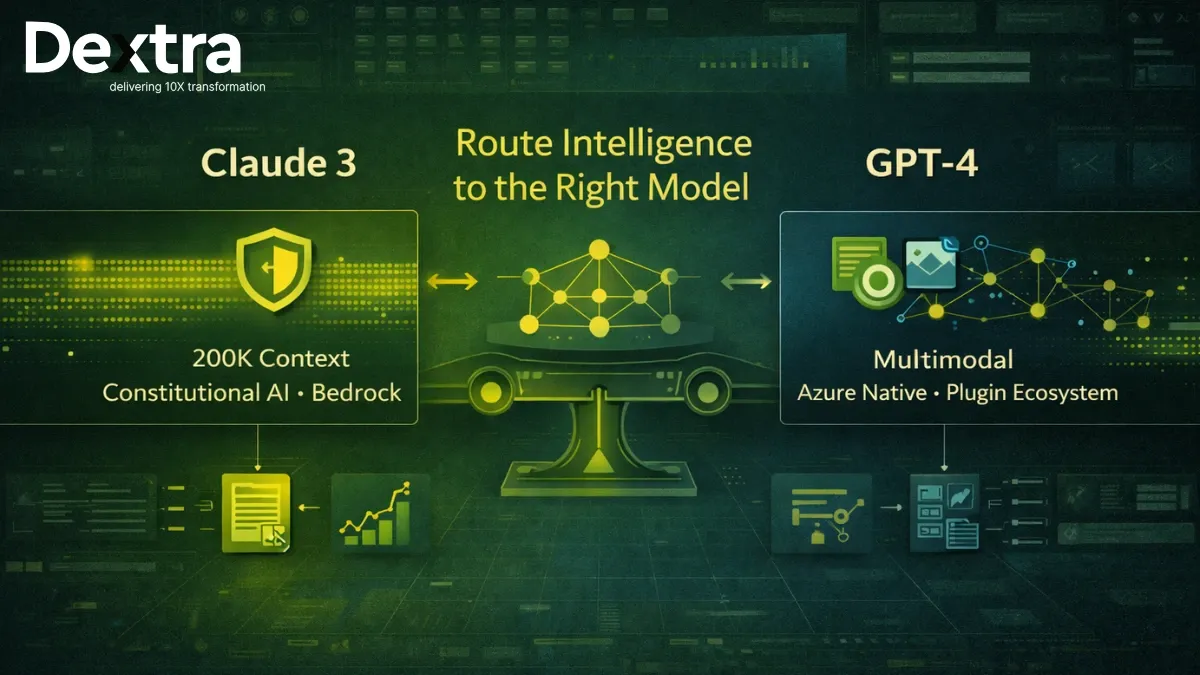

Q3. Which model is best for enterprise use — ChatGPT, Claude, or Gemini?

It depends on your business goal:

Use ChatGPT if your focus is wide-ranging tasks, customer interaction, and ecosystem integrations.

Use Claude if you handle long reports, compliance-heavy tasks, or sensitive content.

Use Gemini if you live inside the Google suite (Docs, Sheets, Search) or need multimodal analysis.

In practice, enterprises often blend them — the real value lies in knowing when to use which model.

Q4. What are some common mistakes teams make with prompt engineering?

– Copy-pasting generic prompts from the internet.

– Forgetting that each LLM “thinks” differently.

– Skipping measurement: prompts need testing for accuracy, compliance, and ROI (not just “does it sound cool”).

– Ignoring safety frameworks, especially with customer-facing bots.

Bottom line: random prompts won’t scale; tailored design will.

Q5. Can I train my team to do prompt engineering in-house?

Absolutely — but there’s a learning curve. Teams need to understand not only how to write prompts, but also how to evaluate outputs against business goals. That’s why workshops and structured training (like Dextralabs offers) speed things up. Otherwise, you risk months of trial-and-error.

Q6. What does Dextralabs actually do differently in this space?

We don’t hand you a generic “prompt pack.” We analyze your workflows, pick the right model mix (ChatGPT, Claude, Gemini), and build prompts tailored to your business outcomes. Then we train your team so they’re not dependent on consultants forever. It’s about scalable prompt design, not one-time hacks.

Q7. What kind of ROI can I expect from optimized prompting?

Enterprises we work with typically see major gains in:

– Speed (reports generated in hours instead of weeks).

– Accuracy (fewer hallucinations = less fact-checking time).

– Productivity (customer agents, analysts, and marketers scale without burning out).

The ROI isn’t just cost-cutting — it’s unlocking new workflows that weren’t even possible pre-AI.

Q8. Is prompt engineering still relevant if models keep getting “smarter”?

Yes, maybe even more relevant. Smarter models mean more capabilities, but also more complexity. Prompt engineering evolves into prompt strategy: aligning each model’s power with your enterprise goals. It’s like driving a sports car: just because it’s advanced doesn’t mean you can drive it without skill.