For most organizations, the first interaction with artificial intelligence feels deceptively simple. A user enters a prompt, the model responds, and value appears to be created instantly. This ease of use has driven rapid experimentation across enterprises, but it has also created a dangerous illusion: that AI success is primarily a matter of better prompts.

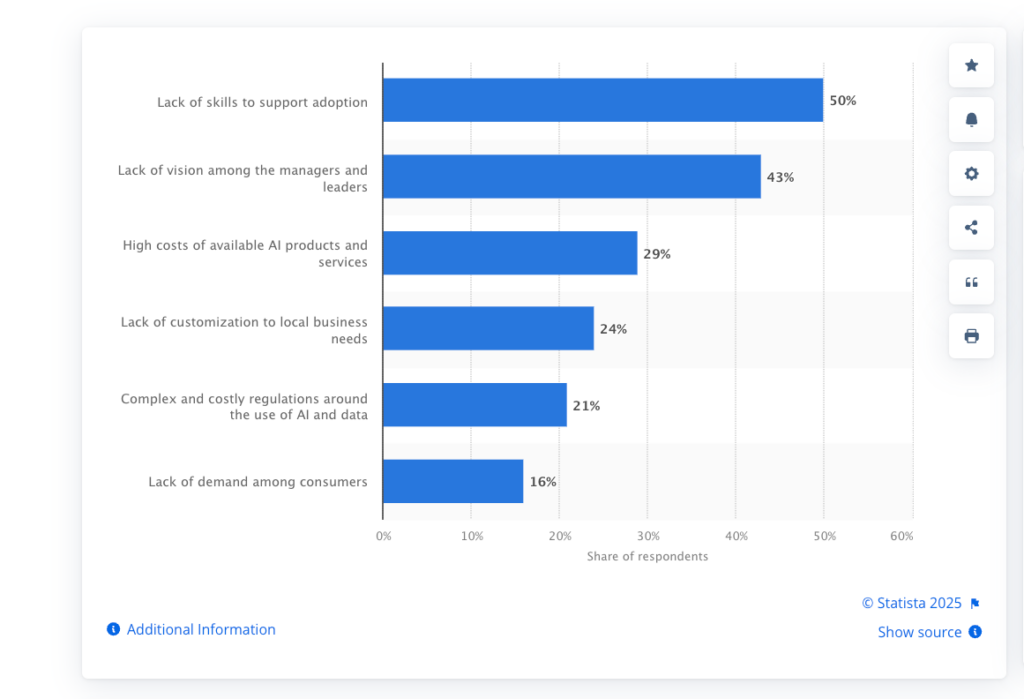

In reality, prompt-centric AI collapses under real-world complexity. Enterprises do not operate in single turns. They operate in environments filled with uncertainty, incomplete information, regulatory constraints, evolving data, and high consequences for error. According to Statista, in 2025 the biggest barriers to AI adoption were lack of skilled professionals (50%), lack of management vision (43%), and high costs (29%). This highlights that structural and organizational challenges, not just prompts, limit enterprise AI success.

When AI systems are expected to analyze markets, draft policies, investigate incidents, or support decision-making, single-shot responses quickly reveal their limitations. At Dextralabs, we’ve observed a consistent pattern across industries: AI initiatives stall not because models lack intelligence, but because intelligence is deployed without structure, autonomy, or feedback loops.

Agentic workflows solve this problem. By introducing planning, tool use, reflection, and iteration, agentic workflows transform large language models from passive responders into autonomous, goal-driven systems. This article explores the core agentic workflow patterns Dextralabs uses to design enterprise-grade AI systems in 2026 and why they are rapidly becoming the foundation of serious AI deployments.

Main obstacles to artificial intelligence (AI) adoption in global business in 2025.

The Enterprise AI Bottleneck: Why Non-Agentic Systems Fail?

Despite major improvements in model accuracy, reasoning, and context length, enterprises continue to struggle with AI reliability and ROI. The bottleneck is architectural, not algorithmic.

1. One-Shot Reasoning Cannot Handle Enterprise Complexity

Most LLM deployments rely on a single generation pass. This approach assumes that all necessary reasoning can happen internally, instantly, and correctly. In practice, enterprise tasks require:

- Multiple intermediate steps

- Validation of assumptions

- Cross-checking against data

- Iterative refinement

Without iteration, AI outputs tend to be plausible but fragile. They sound confident while masking subtle errors, an unacceptable risk in domains like finance, healthcare, legal, and operations.

2. Lack of Strategic Autonomy

Traditional LLM systems do not decide how to solve a problem. They do not evaluate alternative strategies or adapt when conditions change. This absence of meta-reasoning makes systems brittle.

For example:

- If a data source is incomplete, the model does not know to search elsewhere.

- If a calculation fails, it cannot retry using a different method.

- If confidence is low, it cannot escalate or ask for clarification.

Agentic workflows introduce decision-making as a first-class capability.

3. Disconnection from Enterprise Reality

LLMs without tools are fundamentally disconnected from the environments they are meant to support. They cannot access internal systems, retrieve live data, or execute actions. This isolation is the primary cause of hallucinations in production systems.

Dextralabs Principle: Intelligence without grounding, feedback, and control is not enterprise-ready.

What Are Agentic Workflows? The Dextralabs Definition

At Dextralabs, we define an agentic workflow as a goal-oriented AI system capable of planning actions, using tools, evaluating outcomes, and iterating until predefined success conditions are met.

Agentic workflows introduce control loops into AI behavior, mirroring how experienced human operators solve complex problems. Have a look at agentic AI vs traditional AI:

| Traditional AI | Agentic AI |

| Prompt → Response | Goal → Plan → Act → Evaluate |

| Stateless | Persistent memory |

| No self-checks | Built-in verification |

| Model-centric | System-centric |

This shift reframes AI from a content generator into a decision-making system.

The Dextralabs Agentic Architecture Stack

Every production-grade agentic system we deploy is built on a layered architecture designed for reliability, observability, and scale.

1. LLM Layer

- Multi-model orchestration (reasoning vs execution models)

- Cost–accuracy tradeoff optimization

- Domain-specific model selection

2. Orchestration Layer

- Workflow control and sequencing

- Agent state management

- Retry, fallback, and escalation logic

3. Tooling Layer

- Retrieval-Augmented Generation (RAG)

- Internal APIs and microservices

- Databases and data warehouses

- Code execution environments

- Workflow and automation engines

4. Memory & Context Layer

- Short-term working memory

- Long-term vector memory

- Metadata and task state tracking

- Historical performance signals

5. Evaluation & Guardrails Layer

- Confidence scoring

- Policy and compliance enforcement

- Observability, logging, and tracing

- Human-in-the-loop triggers

This architecture enables the five foundational agentic patterns below.

Pattern #1: Reflection Pattern — Self-Improving Intelligence

The reflection pattern enables an agent to critically evaluate its own output before finalizing a response.

Why Reflection Matters?

First-pass AI outputs are rarely optimal. Reflection introduces:

- Error detection

- Clarity and structure improvement

- Alignment with domain constraints

Instead of assuming correctness, the system treats generation as a draft.

Dextralabs Reflection Loop:

- Generate initial output

- Apply role-based critique (legal, technical, strategic)

- Identify gaps, risks, and ambiguities

- Regenerate with targeted improvements

- Assign a confidence score

Enterprise Applications

- Regulatory and compliance documentation

- Technical specifications and SOPs

- Code generation and review pipelines

- Executive reports and decision memos

Reflection converts AI from a generator into a self-correcting system, dramatically improving reliability in high-stakes contexts.

Pattern #2: Tool Use Pattern — Grounding Intelligence in Reality

Tool use is the bridge between reasoning and reality.

Why Tools Are Essential?

Without tools, AI systems operate on probability rather than truth. Tool use allows agents to:

- Retrieve current and proprietary data

- Query internal systems

- Perform precise calculations

- Validate claims against sources

Dextralabs Tool Ecosystem

- RAG pipelines for enterprise knowledge

- SQL and NoSQL databases

- Internal APIs and SaaS integrations

- Code interpreters and execution sandboxes

- Workflow automation engines

Key Differentiator

Dextralabs agents dynamically decide when a tool is required and which tool best serves the task. This decision-making capability distinguishes agentic systems from scripted automation.

Pattern #3: ReAct — Adaptive Intelligence Under Uncertainty

ReAct (Reason + Act) interleaves reasoning with execution.

The ReAct Cycle

- Reason about the current state

- Act using tools or decisions

- Observe results

- Re-reason and adapt

Why Dextralabs Uses ReAct?

ReAct excels when:

- Information must be discovered

- Assumptions may be wrong

- The environment changes mid-task

It prevents both over-planning and blind execution, enabling adaptive problem-solving.

Ideal Use Cases:

- Market and competitive research

- Incident investigation

- Root-cause analysis

- Exploratory data analysis

ReAct produces transparent reasoning trails that improve trust, debugging, and governance.

Pattern #4: Planning Pattern — Structured Intelligence at Scale

Some enterprise workflows demand predictability, sequencing, and dependency management.

Dextralabs Planning Framework

- Goal formalization

- Task decomposition

- Dependency mapping

- Resource and tool allocation

- Controlled execution

Strengths

- Reduced execution errors

- Better coordination

- Clear accountability

Limitations

- Less flexible under high uncertainty

Dextralabs typically combines planning with ReAct to balance structure and adaptability.

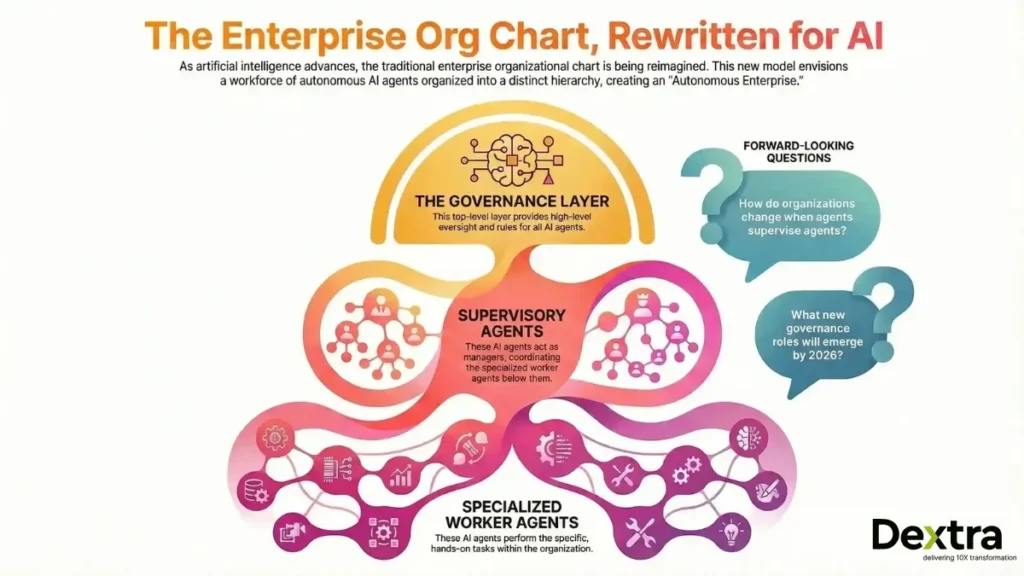

Pattern #5: Multi-Agent Systems — Engineering AI Teams

As problem complexity increases, specialization becomes unavoidable.

The Multi-Agent Principle

- One generalist agent cannot optimize simultaneously for research depth, execution speed, verification rigor, and strategic oversight.

Common Agent Roles

- Research agent

- Execution agent

- Critic or verifier agent

- Orchestrator agent

Coordination Models

- Centralized orchestration

- Hierarchical delegation

- Event-driven collaboration

Multi-agent systems introduce complexity, but for large-scale enterprise workflows, the performance gains far outweigh the overhead.

Hybrid Agentic Architectures in Production

Real-world systems rarely rely on a single pattern.

Dextralabs-Proven Combinations

- Reflection + RAG: High-accuracy knowledge systems

- ReAct + Tool Use: Autonomous research agents

- Planning + Multi-Agent: Enterprise-scale automation

Example: An AI compliance officer may plan audit steps, retrieve evidence via RAG, reason iteratively with ReAct, and validate conclusions using reflection.

Enterprise Use Cases Powered by Dextralabs Agentic Systems

These systems do not merely assist humans; they operate alongside them as autonomous contributors. Take a look:

- Autonomous market and competitive intelligence

- AI-driven compliance and risk monitoring

- Internal AI copilots for operations and strategy

- Intelligent customer support platforms

- DevOps and incident-response agents

Measuring ROI: How Dextralabs Evaluates Agentic AI?

Let’s have a look at how Dextralabs evaluates agentic AI:

Core Metrics

- Task success rate

- Hallucination reduction

- Cost per successful outcome

- Time-to-decision

- Human intervention rate

Continuous Optimization

- Agent performance dashboards

- A/B testing of agent strategies

- Feedback-driven improvement loops

Agentic systems are living systems. Measurement and iteration are non-negotiable.

The Future of Agentic AI: Dextralabs 2026 Outlook

The future belongs to systems that think, act, and adapt. Key trends shaping enterprise AI:

- Agentic RAG as the default architecture

- Self-healing and self-optimizing workflows

- Multimodal agents (text, image, audio)

- Governance layers for autonomous decision-making

- Agents managing and supervising other agents

Conclusion: Engineering Intelligence, Not Chatbots

Agentic workflows represent a fundamental shift in enterprise AI. They replace fragile prompt-based interactions with resilient, autonomous systems capable of real work.

At Dextralabs, we don’t build chatbots or demos; we engineer intelligent systems designed for scale, trust, and impact.

In 2026, competitive advantage will not come from better prompts or larger models. It will come from better-designed agentic workflows.