For many CIOs and CTOs, the challenge at the intersection of AI innovation and legacy system stability is very real. Replacing core ERP or CRM platforms is disruptive and often carries more risk than reward. Today, a more modern, controlled path is available: layering Large Language Models (LLMs) over your existing environment to deliver rapid, secure, and measurable business value.

With proven integration techniques, such as robust API facades, event-driven infrastructure, and secure Retrieval-Augmented Generation (RAG), you can unlock the power of AI without destabilizing the systems you rely on daily. This isn’t just theory. Dextralabs works with organizations globally, delivering outcome-first pilots that prove rapid return on investment while respecting the integrity of your foundational systems.

The Case for “Augment, Not Replace”

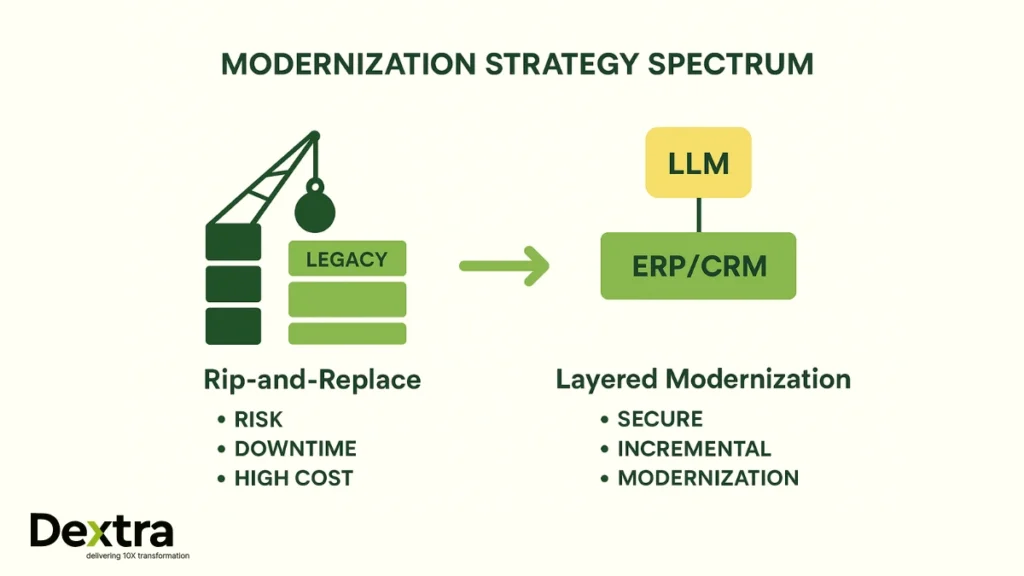

Traditional “rip-and-replace” migrations have become synonymous with delayed timelines, unexpected cost overruns, and operational risk. Organizations that embark on such journeys often find themselves chasing a moving target, while new business needs emerge before the initial transformation is complete.

A deliberate augmentation strategy, layering modern intelligence over your ERP and CRM systems, offers a tangible alternative. By building on your existing infrastructure, you preserve investments and accelerate time-to-value. For example, deploying LLM-powered natural language interfaces enables business units to access complex data rapidly, while AI agents can automate triage, summarization, or workflow routing without changing foundational workflows or compliance structures.

Event-driven patterns are central to this approach. With direct, synchronous integration, AI models call legacy platforms in real time, which can slow those platforms down or make them less reliable. Instead, AI capabilities are triggered by separate, asynchronous events. Your core systems keep their reliability and performance while you still gain the speed and flexibility of modern AI.

Integration Patterns That Work

Connecting advanced AI to established enterprise systems calls for thoughtful partitioning, not force-fitting. These integration patterns enable enterprise-grade modernization while reducing risk and maintaining governance.

API Facades and Adapter Layers

The most robust integrations begin with an API facade, modernizing your legacy interface by exposing clean REST or OData endpoints. This removes the complexity of direct, fragile integrations, providing a secure, authenticated boundary between legacy systems and new AI capabilities. Adapter layers standardize contracts and shield your core from inadvertent changes, ensuring long-term system reliability.
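To make the adapter idea concrete, here is a minimal Python sketch. The legacy field names (`CUSTNO`, `CUSTNM`, `CRLIM`, `STAT`) and the `CustomerV1` contract are hypothetical, invented for illustration; a real facade would expose a contract like this over REST or OData behind your gateway.

```python
from dataclasses import dataclass, asdict

# Hypothetical raw record, shaped the way a legacy ERP might return it.
LEGACY_RECORD = {
    "CUSTNO": "000421",
    "CUSTNM": "ACME GMBH      ",
    "CRLIM": "0015000",
    "STAT": "A",
}


@dataclass(frozen=True)
class CustomerV1:
    """Clean, versioned contract exposed to AI consumers (assumed shape)."""
    customer_id: str
    name: str
    credit_limit: int
    active: bool


def adapt_customer(raw: dict) -> CustomerV1:
    """Translate legacy field names and encodings into the stable contract."""
    return CustomerV1(
        customer_id=raw["CUSTNO"].lstrip("0") or "0",  # drop zero padding
        name=raw["CUSTNM"].strip().title(),            # trim fixed-width padding
        credit_limit=int(raw["CRLIM"]),
        active=raw["STAT"] == "A",                     # assumed status code
    )


customer = adapt_customer(LEGACY_RECORD)
print(asdict(customer))
```

Because AI consumers only ever see `CustomerV1`, a change to the legacy record layout is absorbed in `adapt_customer` rather than rippling into prompts or agents.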

Event-Driven Middleware

An event-driven middleware layer, built on a broker such as RabbitMQ or Kafka, decouples legacy producers (such as transactional ERP events) from AI consumers (such as LLM-driven analytics and summarization). Because the architecture is asynchronous, AI services can scale up and down independently and consume from legacy systems at a rate those systems can sustain, which keeps operations stable even when AI-driven demand surges.
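The decoupling can be sketched in a few lines of Python. This example uses the standard library's in-process `queue.Queue` as a stand-in for a real broker such as Kafka or RabbitMQ, and `summarize` is a hypothetical placeholder for an LLM call; both are assumptions made so the sketch runs on its own.

```python
import queue
import threading

# In-process stand-in for a broker topic (assumption: production would use
# Kafka or RabbitMQ instead of queue.Queue).
erp_events = queue.Queue()
summaries = []


def summarize(event: dict) -> str:
    """Hypothetical placeholder for an LLM call; returns a fixed-format string."""
    return f"Order {event['order_id']} changed status to {event['status']}"


def ai_consumer() -> None:
    """Drains events at its own pace, independent of the producer."""
    while True:
        event = erp_events.get()
        if event is None:  # sentinel: no more events
            break
        summaries.append(summarize(event))


worker = threading.Thread(target=ai_consumer)
worker.start()

# The legacy side only publishes and moves on; it does not wait for AI results.
for order_id, status in [(1001, "SHIPPED"), (1002, "ON_HOLD")]:
    erp_events.put({"order_id": order_id, "status": status})

erp_events.put(None)
worker.join()
print(summaries)
```

The producer loop finishes as soon as the events are enqueued, so a slow or unavailable model delays only the summaries, not the transactional system.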

Secure RAG over Operational Data

LLMs are at their most valuable when grounded in relevant, up-to-date operational data. Achieving this in a secure, auditable fashion calls for RAG deployed over a managed API gateway. Rather than direct database access, data retrieval is parameterized, RBAC-enforced, and subject to comprehensive logging. Sensitive information can be masked or tokenized at the gateway, ensuring privacy without losing contextual relevance.
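As a rough illustration of gateway-mediated retrieval, the sketch below filters documents by role, masks email addresses, and records every access. The in-memory `DOCUMENTS` store, the role names, and the keyword match (standing in for vector search) are all assumptions for the example, not a prescribed design.

```python
import re

# Hypothetical document store; each entry lists the roles allowed to read it.
DOCUMENTS = [
    {"id": "inv-7", "roles": {"finance"},
     "text": "Invoice 7 overdue; contact jane.doe@example.com"},
    {"id": "hr-2", "roles": {"hr"},
     "text": "Salary review scheduled for Q3"},
    {"id": "inv-9", "roles": {"finance", "sales"},
     "text": "Invoice 9 paid in full"},
]
AUDIT_LOG = []
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def retrieve(user: str, role: str, keyword: str) -> list:
    """Gateway-side retrieval: enforce role access, log the call, mask PII."""
    allowed = [
        d for d in DOCUMENTS
        if role in d["roles"] and keyword.lower() in d["text"].lower()
    ]
    AUDIT_LOG.append({
        "user": user,
        "role": role,
        "keyword": keyword,
        "doc_ids": [d["id"] for d in allowed],
    })
    # Mask email addresses before the text is handed to the model.
    return [EMAIL.sub("[EMAIL]", d["text"]) for d in allowed]


context = retrieve(user="u123", role="finance", keyword="invoice")
print(context)
```

The model only ever receives `context`; it never holds a database connection, and the audit log captures which documents were released to which caller.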

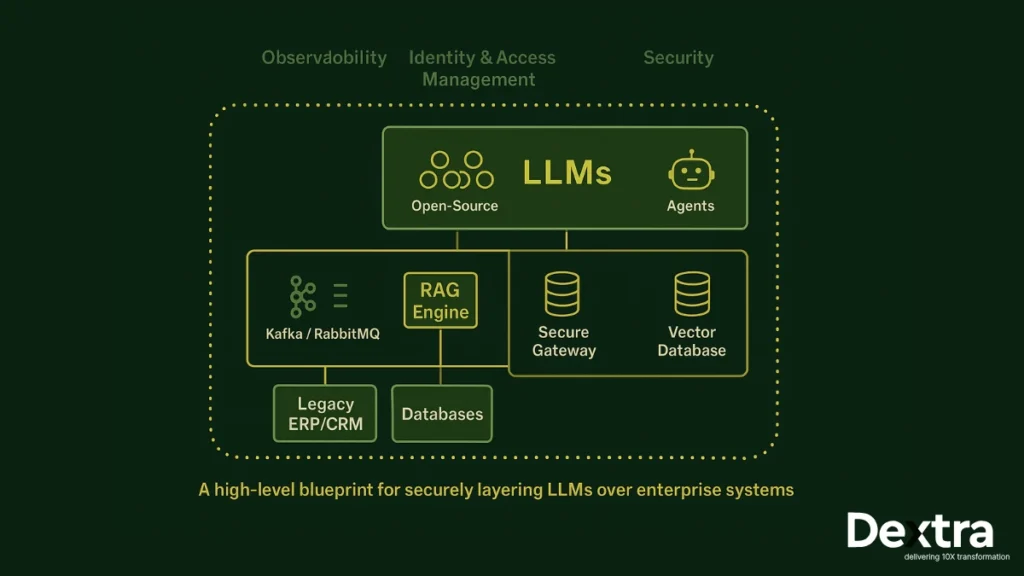

Architecture Blueprint

A successful LLM integration draws clear boundaries for security, scalability, and observability. The following high-level architecture blueprint illustrates a pragmatic approach:

- Ingress Layer: All external and internal requests flow through a unified API gateway. This enforces security policies, supports SSO, and serves as the single point for authentication and authorization.

- Identity Management: Integrate with established identity providers over standard protocols such as OAuth2 and SAML. Every model, agent, and consumer operates on the principle of least privilege, reducing exposure risk.

- Data Plane: Events are managed asynchronously on an event bus. A vector database underpins secure, efficient RAG by indexing and retrieving business context for the LLM while respecting access controls.

- Model Plane: Use a mix of open- and closed-source LLMs as needed. On-prem models handle sensitive data, while cloud-hosted models scale for general workloads. This hybrid cloud/on-premises approach balances compliance and performance.

- Observability: Set up robust logging, tracing, and cost management. Use prompt tracing, evaluation harnesses, and real-time alerts to catch model drift, integration issues, or budget overruns before they affect production.
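The model-plane split described above can be expressed as a small routing rule. The sketch below is one possible shape, assuming hypothetical classification labels (`public`, `internal`, `restricted`) and placeholder target names (`on_prem_llm`, `cloud_llm`); your own policy would define the real categories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    prompt: str
    contains_pii: bool
    data_classification: str  # assumed labels: "public", "internal", "restricted"


def choose_model(req: Request) -> str:
    """Return the deployment target for a request based on data sensitivity."""
    if req.contains_pii or req.data_classification == "restricted":
        return "on_prem_llm"  # sensitive data stays inside the perimeter
    return "cloud_llm"        # general workloads use cloud-hosted capacity


print(choose_model(Request("Summarize payroll exceptions", True, "internal")))
print(choose_model(Request("Draft a product FAQ", False, "public")))
```

Keeping this decision in one function, enforced at the gateway, makes the routing policy auditable and easy to tighten when compliance requirements change.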

ERP/CRM Use Cases:

Business impact is realized when technical capability translates directly into operational results. Below are practical applications of LLM augmentation, now running in live settings across industries:

ERP Modernization

Typical ERP modernization use cases include:

- Intelligent Demand Forecasting: LLMs analyze structured and unstructured signals (sales notes, market indicators, and historical records) to produce actionable forecasts that are delivered by API directly to inventory or finance modules.

- Automated Exception Summaries: AI-powered agents review exception logs, summarize root causes, and recommend remediation, reducing time-to-resolution for supply chain and financial incidents.

- Natural Language Reporting: Users query operational or financial data in plain language. A request such as “Show me top-margin clients in EMEA last quarter” is translated into an actionable, governed report.
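One way to keep natural language reporting governed is to have the LLM emit a structured request rather than SQL, then validate that request against allowlists before building a parameterized query. The sketch below assumes a hypothetical `sales` table, hypothetical allowlists, and a hand-written `spec` standing in for model output; it uses an in-memory SQLite database only so the example is runnable.

```python
import sqlite3

# Assumed governance allowlists; the LLM can only pick from these.
ALLOWED_METRICS = {"margin": "SUM(revenue - cost)"}
ALLOWED_REGIONS = {"EMEA", "APAC", "AMER"}


def build_report_query(spec: dict):
    """Validate an LLM-produced spec and return parameterized SQL plus values."""
    if spec["metric"] not in ALLOWED_METRICS or spec["region"] not in ALLOWED_REGIONS:
        raise ValueError("spec requests a metric or region outside the governed set")
    limit = min(int(spec.get("limit", 10)), 100)  # hard ceiling on result size
    sql = (
        f"SELECT client, {ALLOWED_METRICS[spec['metric']]} AS value FROM sales "
        "WHERE region = ? AND quarter = ? "
        "GROUP BY client ORDER BY value DESC LIMIT ?"
    )
    return sql, (spec["region"], spec["quarter"], limit)


# Hypothetical spec for: "Show me top-margin clients in EMEA last quarter"
spec = {"metric": "margin", "region": "EMEA", "quarter": "2024-Q4", "limit": 2}

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (client TEXT, region TEXT, quarter TEXT, revenue REAL, cost REAL)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        ("Alpha", "EMEA", "2024-Q4", 100.0, 60.0),
        ("Beta", "EMEA", "2024-Q4", 200.0, 120.0),
        ("Gamma", "APAC", "2024-Q4", 500.0, 100.0),
    ],
)
sql, params = build_report_query(spec)
rows = conn.execute(sql, params).fetchall()
print(rows)
```

The only text interpolated into the SQL comes from the allowlist, never from the model or the user, and all user-influenced values travel as bound parameters.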

CRM Enhancement

Typical CRM enhancement use cases include:

- Next-Best-Action Recommendations: LLMs recommend proactive, individualized sales or support actions based on past customer interactions, case histories, and open tickets.

- Case Deflection and Triage: The LLM categorizes incoming support issues, surfaces relevant resources, and drafts initial responses, reducing resolution times and human workload.

- Automated Call Summaries: AI transcribes and summarizes calls, extracts next steps, and writes them back to the CRM, freeing staff for high-value work.

Even for mainframe and COBOL environments, integration is viable. Event streams derived from system logs can be analyzed by RAG-enabled agents, and the results posted back to legacy systems without any codebase changes.

Security and Compliance by Design

Security and compliance requirements cannot be sacrificed in the pursuit of AI agility. Dextralabs delivers “security by design,” embedding best practices at every step:

- All LLM interactions are mediated through zero-trust API layers; raw SQL is never exposed to AI processes.

- Every data access and transaction is auditable, with detailed records of who accessed what, when, and why.

- Before any data leaves your controlled perimeter, PII is redacted and tokenized.

- Row- and field-based access controls are rigorously enforced. Inference services operate in dedicated, isolated contexts, and all credentials are routinely rotated and tracked for anomalous usage.

- Architectures are tailored: highly regulated workflows may be restricted to on-premises models, while others adopt hybrid cloud, always in accordance with your data residency and vendor risk requirements.
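The redact-and-tokenize step in the list above might look like the following sketch. It handles only email addresses, uses a hard-coded demo key, and keeps the token vault in memory; all three are simplifying assumptions, and a production system would cover more PII types and draw keys from a secrets manager.

```python
import hashlib
import hmac
import re

SECRET_KEY = b"demo-key-rotate-me"  # assumption: real keys come from a secrets manager
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
VAULT = {}  # token -> original value; never leaves the controlled perimeter


def tokenize(value: str) -> str:
    """Replace a value with a deterministic, keyed token and remember the mapping."""
    digest = hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()[:12]
    token = f"<EMAIL_{digest}>"
    VAULT[token] = value
    return token


def redact(text: str) -> str:
    """Swap every email address for its token before text leaves the perimeter."""
    return EMAIL.sub(lambda m: tokenize(m.group()), text)


def restore(text: str) -> str:
    """Map tokens in a model response back to the original values."""
    for token, value in VAULT.items():
        text = text.replace(token, value)
    return text


original = "Escalate to jane.doe@example.com and cc jane.doe@example.com"
safe = redact(original)
print(safe)
print(restore(safe) == original)
```

Because the token is deterministic, the same address always maps to the same placeholder, so the model can still tell that two mentions refer to one person without ever seeing the address.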

Performance and Scaling

Efficiency and predictability are hallmarks of enterprise integrations at scale. To deliver high performance and control costs:

- Prefer asynchronous integration patterns; batch and cache calls where possible to optimize usage and reduce LLM latency.

- Right-size vector indexes, applying hybrid (vector plus keyword) retrieval strategies for fast, accurate search across large datasets.

- Adopt indexing strategies like HNSW or IVF appropriate to your data and access patterns. Use storage tiers to balance performance and cost.

- Tune underlying databases and event buses to handle modern AI-driven workflows without interfering with transaction processing.

- Deploy automated monitoring and controls to cap LLM usage and prevent unexpected cost overruns. Observability should provide both operational intelligence and proactive anomaly detection.
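Two of the controls above, caching repeated calls and capping usage, can be combined in a thin wrapper. In this sketch `call_model` is a hypothetical placeholder for a real LLM request and the cap of two calls is arbitrary; a production meter would more likely track tokens or spend per time window.

```python
from functools import lru_cache


class BudgetExceeded(RuntimeError):
    """Raised when the configured model-call cap has been reached."""


class UsageMeter:
    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.calls = 0

    def charge(self) -> None:
        """Count one model call, refusing it if the cap is already reached."""
        if self.calls >= self.max_calls:
            raise BudgetExceeded(f"cap of {self.max_calls} model calls reached")
        self.calls += 1


meter = UsageMeter(max_calls=2)


def call_model(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM request."""
    meter.charge()
    return prompt.upper()


@lru_cache(maxsize=1024)
def cached_completion(prompt: str) -> str:
    """Serve repeated prompts from cache so they are not charged again."""
    return call_model(prompt)


cached_completion("summarize order 1001")
cached_completion("summarize order 1001")  # cache hit: not charged
cached_completion("summarize order 1002")
print(meter.calls)
```

A third distinct prompt would raise `BudgetExceeded`, which gives operations a clear signal to alert on instead of a surprise on the invoice.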

Build vs. Buy vs. Integrate

Every modernization journey faces the fundamental question: build, buy, or integrate?

- Integrate using proven platforms like MuleSoft, Boomi, or SAP Integration Suite for orchestration, governance, and accelerated time-to-value.

- Buy or host RAG and vector solutions considering your privacy, latency, and TCO criteria. Managed cloud can be effective for rapid pilots, while self-hosted supports maximum control.

- Phase your delivery: wrap APIs and establish event-driven integration first, then tactically add LLM-based enhancements. This incremental approach reduces program risk and enables quick wins that build executive and stakeholder support.

The optimal path often combines all three. Tailor your mix to align with internal strengths, critical workloads, and strategic objectives.

Dextralabs Delivery Approach

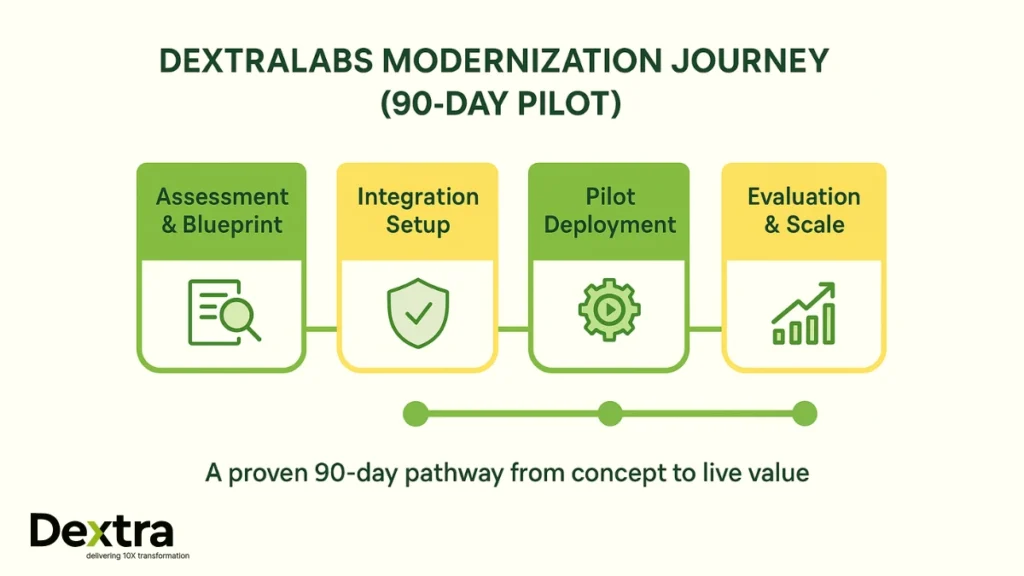

Dextralabs brings a disciplined, evidence-based methodology to modernizing legacy systems with LLMs:

- Service Portfolio: We enable legacy-aware API facades, design and implement event-driven architectures, deliver secure RAG, and provide expert guidance for LLM and deployment strategy selection. Our LLMOps and AgentOps practices ensure efficiency, performance tracking, and cost control.

- 90-Day Pilot Model: Instead of protracted scoping, Dextralabs launches productive pilots in three months, establishing core integration, delivering one operational use case, and baselining KPIs. The result is a live demonstration of value, minimizing disruption and building an ironclad business case for broader adoption.

- Outcome Orientation: Our main goal is to generate business value quickly by reducing cost-to-serve, cycle time, and error rates, while preparing your organization for governed, enterprise-wide LLM adoption.

Implementation Checklists:

Before you start, confirm readiness with an actionable checklist:

- Have all critical APIs been inventoried and secured with a modern facade?

- Is an event bus provisioned and validated for production traffic?

- Are data contracts (for APIs and event streams) well-defined and version-controlled?

- Is PII redaction strictly enforced in every transaction?

- Does the RAG pipeline retrieve data only through a secure API gateway, with no direct database access?

- Are vector indexes right-sized for your search and performance requirements?

- Are model evaluation, governance, and observability in place end-to-end?

- Are usage and cost monitors configured, with alerts for limits or anomalies?

- Are cutover, rollback, and change management procedures documented and tested?

Partner With Dextralabs: Blueprint Your Modernization Strategy

Your ERP and CRM systems represent your organization’s operational foundation: stable, secure, and deeply integrated. Modernizing does not have to mean taking on unnecessary risk or discarding your most valuable technology assets. With LLMs, you can unlock intelligence and automation, elevating user experience and efficiency while shielding your core from disruption.

Dextralabs invites you to a Modernization Assessment and Integration Blueprint for one ERP or CRM workflow, complete with data security review, KPI baseline, and actionable architecture diagrams.

Take the first step. Book a session today and let us map the modernization risks and opportunities specific to your environment. In 90 days, you’ll witness the real benefits of AI for yourself, along with a plan for enterprise scale that includes control and compliance.

Let’s build your AI future on the strength of your existing systems, backed by Dextralabs’ experience.

FAQs on Modernizing Legacy Systems with AI & LLMs:

Q1. What is the difference between legacy and modern ERP systems?

Legacy ERP systems are traditional, on-premise platforms built years ago to manage finance, inventory, and operations. They often have rigid architectures, limited scalability, and minimal integration options. Modern ERP systems, on the other hand, are cloud-native, API-driven, and AI-augmented. They enable real-time analytics, automation, and seamless connectivity with other business tools. Essentially, legacy ERPs run your data — while modern ERPs learn from it.

Q2. What is a legacy ERP?

A legacy ERP is an older enterprise resource planning system that may still function well but lacks modern capabilities like real-time data access, API integration, or AI-driven insights. These systems were designed for stability and control — not agility. Over time, they become harder to maintain, upgrade, or integrate with cloud services or AI models.

Q3. What does it mean to modernize legacy systems?

Modernizing a legacy system means upgrading it without tearing it apart. Instead of replacing everything, modern teams often build an intelligent overlay — such as an LLM (Large Language Model) or AI layer — on top of the existing system. This “augmentation” approach improves data access, automation, and decision-making, while keeping the core ERP or CRM stable and secure.

Q4. Why do 75% of all ERP projects fail?

Most ERP projects fail because they’re treated as technology migrations, not business transformations. Common pitfalls include:

- Ripping out old systems too quickly (rip-and-replace).

- Poor change management and user training.

- Lack of alignment between IT and business goals.

- Ignoring data quality and integration challenges.

Dextralabs’ approach to modernization focuses on incremental transformation — layering AI capabilities over existing systems to deliver value fast, without disrupting operations.

Q5. What is the difference between legacy and modern authentication?

Legacy authentication often relies on outdated methods like passwords or simple tokens stored on local servers. Modern authentication uses multi-factor authentication (MFA), OAuth, biometrics, and zero-trust security principles. It’s designed for distributed environments where users access systems from multiple devices and locations, which is essential for today’s hybrid enterprises.

Q6. What are modern ERP systems?

Modern ERP systems are cloud-first, API-centric, and AI-enhanced platforms that unify data, processes, and analytics across an organization. They integrate with CRM, HR, supply chain, and financial tools — enabling predictive insights, automation, and scalability. Examples include SAP S/4HANA Cloud, Oracle Fusion Cloud ERP, and Microsoft Dynamics 365 — all of which can now integrate with LLM-driven copilots.

Q7. What is the difference between legacy and modern devices?

The difference lies in connectivity and intelligence. A legacy device is older hardware or an embedded system that lacks network connectivity or modern firmware support. A modern device connects via APIs or IoT platforms, supports real-time data exchange, and can integrate with AI or edge analytics. In modernization terms, the goal is to connect legacy devices through middleware or AI bridges, not necessarily to replace them.