You know what’s interesting? We’re living through something pretty remarkable right now. Just a couple of years ago, if you wanted cutting-edge AI for your business, you had to shell out serious money to the big tech companies. Now? Some of the most powerful open source large language models are completely free to download – and honestly, many of the best open source LLMs are giving the paid alternatives a real run for their money.

I’ve been watching this space closely, and 2025 feels like the year when open source AI models really hit their stride for enterprise use. Companies aren’t just experimenting anymore; they’re building real, production-grade systems with these models. And there are some pretty compelling reasons why.

The numbers are compelling:

- McKinsey’s Global AI Survey indicates that businesses that utilize open source AI models experience a 23% quicker time-to-market for their AI projects.

- Gartner forecasts that more than 60% of businesses will adopt open-source LLMs for at least one AI application by 2025, increasing from 25% in 2023.

- Deloitte’s “State of AI in the Enterprise” report emphasizes that companies using open source LLMs can save 40% in costs while achieving performance similar to proprietary options.

This guide explores the best open source large language models in 2025, comparing features, performance, use cases, and ecosystem support for informed decision-making.

At Dextralabs, we’ve helped enterprises implement the right LLM stack, from initial model selection through production deployment, and I’ve seen firsthand how the right open source AI strategy becomes a genuine competitive differentiator, not just a cost-cutting measure.

Build Smarter with the Right Open Source LLM

Struggling to choose between LLaMA, Mixtral, or Qwen? Let Dextralabs help you identify, fine-tune, and deploy the perfect open source model for your business.

Talk to Our LLM Architects

Why Smart Companies Are Going Open Source?

Let’s be honest about something – choosing between the top open source LLMs and proprietary AI isn’t just a technical decision. It’s a business strategy decision that’ll impact your company for years to come.

The Money Talk: Those per-token pricing models from the big providers? They can get expensive fast. Like, surprisingly expensive. I’ve seen companies get sticker shock when their usage scales up. With free LLMs, you’re looking at infrastructure costs instead of licensing fees. Sure, you still need to run the hardware, but at least you can predict and control those costs.
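To make that trade-off concrete, here is a minimal sketch of a break-even calculation in Python. The API price and the monthly infrastructure figure are hypothetical placeholders made up for illustration, not quotes from any provider, so plug in your own numbers.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Cost of a pay-per-token API for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(monthly_infra_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which self-hosting costs the same as the API."""
    return monthly_infra_cost / price_per_million_tokens * 1_000_000


# Hypothetical figures, for illustration only.
API_PRICE = 10.0       # dollars per million tokens (assumed)
INFRA_COST = 6_000.0   # dollars per month for GPUs and operations (assumed)

threshold = break_even_tokens(INFRA_COST, API_PRICE)
print(f"Break-even volume: {threshold:,.0f} tokens/month")

for volume in (100e6, 1e9, 5e9):
    api = monthly_api_cost(volume, API_PRICE)
    cheaper = "self-hosting" if INFRA_COST < api else "API"
    print(f"{volume:>15,.0f} tokens: API ${api:,.0f} vs infra ${INFRA_COST:,.0f} -> {cheaper}")
```

The sketch treats infrastructure as a flat monthly cost, which is the "predictable" part of the argument. In practice you would also add engineering time and step increases when you need more GPUs.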

Your Data Stays Yours: This is huge, especially if you’re in a regulated industry. When you use a proprietary API, your data goes through someone else’s servers. That might be fine for some use cases, but what if you’re a law firm handling sensitive client information? Or a healthcare company dealing with patient records? Open source models let you keep everything in-house.

Customization That Actually Matters: Here’s where things get really interesting. With the best open source LLMs, you can fine-tune them on your specific data. Imagine a customer service bot that actually understands your products, your policies, and your customers’ common issues. That’s not just possible – it’s becoming standard practice.

The Community Factor: Something I find fascinating about the open source AI community is how fast things move. When someone discovers a bug or develops an improvement, it spreads quickly. You’re not waiting for a vendor’s quarterly update cycle.

What to Consider Before Making the Jump?

If you’re a CTO or tech lead thinking about this, here are the real questions you need to ask:

Do You Have the Team for This? Implementing open source LLMs is not a straightforward process. You need people who understand machine learning, can handle deployment, and know how to fine-tune models. If you don’t have the necessary expertise in-house, consider training or forming partnerships.

What About Compliance? Every industry has its rules. Healthcare has HIPAA, finance has all sorts of regulations, and don’t get me started on GDPR. Make sure your chosen open source large language model and deployment approach actually meet your compliance requirements.

Can It Scale? That model that works great in testing might struggle when you have thousands of users hitting it simultaneously. Think through your scaling needs early.
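One rough way to think through scaling early is Little's law: the average number of requests in flight equals the arrival rate times the average latency. The sketch below uses that to estimate how many model replicas you would need. The function name and the traffic figures are assumptions for illustration, and real capacity depends heavily on batching and prompt length.

```python
import math


def replicas_needed(requests_per_second: float,
                    avg_latency_seconds: float,
                    concurrent_per_replica: int) -> int:
    """Estimate replicas from Little's law: in-flight = rate * latency."""
    in_flight = requests_per_second * avg_latency_seconds
    return max(1, math.ceil(in_flight / concurrent_per_replica))


# Hypothetical load: 50 requests/s, 2 s average response time,
# and each replica comfortably serving 16 requests at once.
print(replicas_needed(50, 2.0, 16))
```

Treat the result as a starting point for load testing, not a guarantee.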

How to Pick the Best Open Source LLM?

Choosing an open source LLM isn’t like picking a database where you can compare clear performance metrics. There are several factors that matter:

Performance Where It Counts: Don’t just look at general benchmarks. If you’re building a coding assistant, you care about code generation quality. If it’s customer support, you need strong conversational abilities. Match the model’s strengths to your actual use case.
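Here is a minimal sketch of what "match the model to your use case" can look like in practice: a tiny evaluation harness that scores any model on your own examples. The `toy_model` function and the sample questions are hypothetical stand-ins; in a real test you would pass a function that calls the model you are evaluating, and exact match is only one (fairly strict) way to score answers.

```python
from typing import Callable, Iterable, Tuple


def exact_match_accuracy(generate: Callable[[str], str],
                         examples: Iterable[Tuple[str, str]]) -> float:
    """Fraction of prompts whose answer matches the expected text.

    Comparison ignores case and surrounding whitespace.
    """
    total = 0
    correct = 0
    for prompt, expected in examples:
        total += 1
        if generate(prompt).strip().lower() == expected.strip().lower():
            correct += 1
    return correct / total if total else 0.0


# Hypothetical task-specific examples (prompt, expected answer).
EXAMPLES = [
    ("What is your refund window?", "30 days"),
    ("Do you ship internationally?", "Yes"),
    ("Which plan includes SSO?", "Enterprise"),
    ("Can I pay by invoice?", "Yes"),
]


def toy_model(prompt: str) -> str:
    """Stand-in for a real model call, with canned answers."""
    canned = {
        "What is your refund window?": "30 days",
        "Do you ship internationally?": " yes ",
        "Which plan includes SSO?": "Business",
    }
    return canned.get(prompt, "I don't know")


score = exact_match_accuracy(toy_model, EXAMPLES)
print(f"Exact-match accuracy: {score:.0%}")
```

Running the same examples against two or three candidate models gives you a comparison that general benchmarks cannot.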

License Compatibility: This might sound boring, but it’s crucial. Apache 2.0 and MIT licenses are pretty permissive for commercial use. Some other licenses have restrictions you need to know about upfront.

Resource Requirements: Some of these models are absolute beasts when it comes to hardware requirements. A 70-billion parameter model might perform amazingly, but can your infrastructure handle it? Sometimes a smaller, more efficient model is the smarter choice.
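A quick back-of-the-envelope check helps here. The sketch below estimates memory for the model weights alone as parameter count times bytes per parameter. It deliberately ignores the KV cache, activations, and framework overhead, so real requirements are higher; the function name is my own, not from any library.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


for size in (8, 70):
    for precision in ("fp16", "int8", "int4"):
        gb = weight_memory_gb(size, precision)
        print(f"{size}B model at {precision}: ~{gb:.0f} GB for weights")
```

At 16-bit precision, a 70B model needs about 140 GB for weights, which is more than a single 80 GB GPU holds. That is why quantization or a smaller model is often the practical choice.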

Community Health: Active GitHub repos, responsive maintainers, and regular updates are good signs. You want a model that’s going to be supported and improved over time.

Best Open Source Large Language Models:

Let me walk you through the models that are really making waves in 2025:

1. Meta’s Llama 3 Series

These are probably the most well-known open source models right now, and for good reason. The 8B, 70B, and larger variants offer solid performance across almost any task you throw at them. They’re particularly good for companies that want to fine-tune for specific domains.

What I like: Great base performance, strong community support, regular updates

What to watch: The Meta license has some specific terms you should review for commercial use

2. Mistral and Mixtral

Mistral AI is doing some clever things with the “Mixture of Experts” architecture in its Mixtral models. Basically, only a few expert sub-networks run for each token, so these models can achieve great performance while being more efficient than their total parameter count suggests. The Mixtral 8x7B is particularly impressive.

Best for: Companies that need multilingual support or want to optimize for efficiency

License: Apache 2.0 (which is great for commercial use)

3. Google’s Gemma 2

Google’s entry into open models is interesting because it brings some of the technology from their Gemini models to the community. As one of the top open source LLMs, Gemma 2 is designed to be lightweight and fast, which makes it great for applications where response time matters.

Best for: High-throughput applications, chatbots, quick summarization tasks

4. Qwen Series from Alibaba

Don’t sleep on these models. Qwen 2.5 and especially Qwen2.5-Coder are getting really good results, particularly for code generation and multilingual tasks. If you’re doing anything involving Chinese language processing, these are worth a serious look.

Standout feature: Exceptional Chinese-English performance

5. Vicuna (LMSys)

Here’s an interesting one – Vicuna is built on top of LLaMA but has been specifically fine-tuned for conversations. The team at LMSys has done excellent work making it really good at following instructions and maintaining context in dialogues.

Best for: Chat interfaces, helpdesks, virtual assistants where conversation quality matters

The catch: Non-commercial license, so it’s great for research and academic use but not for business deployment

Why it matters: Shows what’s possible with good fine-tuning approaches

6. Falcon Series (Technology Innovation Institute)

The Falcon models from TII in the UAE have been making serious waves. The 180B parameter version was a real statement when it launched, and even the smaller 7B and 40B versions punch above their weight. They’re designed to be production-ready from the start.

Best for: Content generation, summarization, general-purpose applications

License: Apache 2.0 for the 7B and 40B versions; the 180B model ships under TII’s own Falcon 180B license, so review its terms

Regional note: Particularly popular in Middle East applications, but gaining traction globally

7. BLOOM (BigScience Project)

The fact that this is genuinely a community effort makes it unique. The design of BLOOM reflects the collaborative efforts of hundreds of scholars. It was developed from the ground up using ethical AI principles and excels in multilingual tasks.

Ideal for: Businesses that value ethical AI development, multilingual applications

License: BigScience RAIL (Responsible AI License), which restricts certain uses

Distinct angle: Supports 46 natural languages and 13 programming languages, which makes it useful for applications that are truly worldwide

8. MosaicML MPT Series

The MPT series comes from MosaicML, which is now part of Databricks, and was created with enterprise deployment in mind. These models are designed to be as efficient as possible in both inference and training. Companies that want to get up and running fast without a lot of ML knowledge will find them especially interesting.

Ideal for: Businesses seeking a quicker time to production and cost-effective corporate deployments

License: Apache 2.0 for the base models

Business appeal: Commercial deployment and support were considered from the beginning

9. Microsoft’s Phi-3 Series

Here’s proof that bigger isn’t always better. Microsoft’s Phi-3 models (3.8B, 7B, 14B parameters) deliver surprisingly strong performance despite their compact size. They’re optimized for edge deployment and scenarios where you can’t throw massive hardware at the problem.

Best for: Mobile applications, edge AI, private on-device agents

License: MIT License (very permissive)

Innovation: Shows how much you can achieve with smaller, more efficient architectures

10. DeepSeek R1

This is one to watch closely. DeepSeek R1 from China is gaining serious momentum, especially for its mathematical reasoning capabilities. The full model is a 671B-parameter Mixture of Experts, and the distilled versions (1.5B to 70B) bring much of that reasoning ability to hardware most teams can actually run.

Best for: Mathematical reasoning, educational applications, any use case requiring strong logical capabilities

License: MIT License

Growing fast: Particularly popular in Asia and academic settings, but expanding globally

Bonus: Grok (xAI)

I had to mention Elon Musk’s Grok because it’s… different. It’s designed to have personality and can be quite witty (sometimes controversially so). While it’s capable, its unconventional style makes it more suitable for creative applications than serious business use.

Best for: Creative content, experimental applications where personality matters

Note: More of a wildcard option – great for specific creative use cases but not your typical enterprise deployment

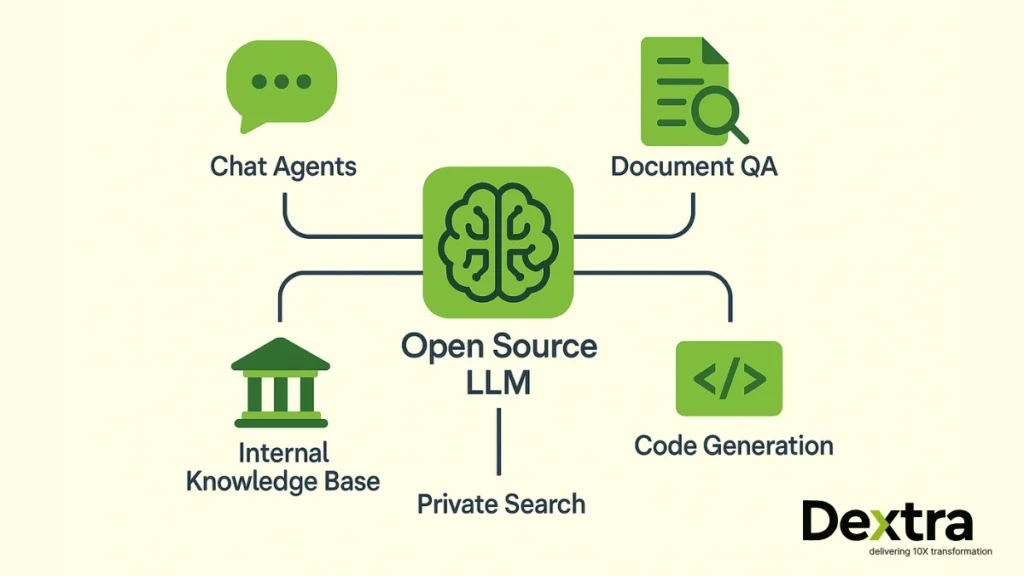

Real-World Applications That Actually Work

Let’s talk about how companies are actually using these open source LLMs:

Internal Knowledge Bases: Instead of employees digging through endless documentation, they can ask natural language questions and get instant answers. One company I know fine-tuned a model on their internal wiki and procedures – now new hires get up to speed much faster.

Document Processing: Legal firms are using these models to summarize contracts and identify key clauses. Financial services companies are processing research reports. The time savings are substantial.

Code Assistance: Development teams are using models like Qwen2.5-Coder for everything from code completion to explaining complex legacy code. It’s not replacing developers, but it’s definitely making them more productive.

Customer Support: This is probably the most common use case. Fine-tune a model on your support tickets and product documentation, and you can handle a lot of routine inquiries automatically.

Getting Implementation Right

Here’s what I’ve seen from companies that have done this successfully:

Start with Private Infrastructure: Even if it costs more initially, deploying on your own infrastructure gives you control and peace of mind. You can always optimize costs later.

Fine-tuning Is Where the Magic Happens: A base model is just the starting point. The real value comes when you fine-tune it on your specific data and use cases. This is what transforms a general-purpose model into something that actually understands your business.
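Most of the fine-tuning work is data preparation. As a minimal sketch, here is how resolved support tickets might be turned into training records in JSONL form. The `messages` layout with `system`, `user`, and `assistant` roles is one common convention that I am assuming for illustration, and the ticket field names are hypothetical, so check what your training toolkit actually expects.

```python
import json
from typing import Dict, Iterable, List


def ticket_to_record(ticket: Dict[str, str],
                     system_prompt: str) -> Dict[str, List[Dict[str, str]]]:
    """Turn one resolved ticket into a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": ticket["question"].strip()},
            {"role": "assistant", "content": ticket["resolution"].strip()},
        ]
    }


def tickets_to_jsonl(tickets: Iterable[Dict[str, str]], system_prompt: str) -> str:
    """Serialize tickets as JSON Lines, skipping incomplete ones."""
    lines = []
    for ticket in tickets:
        question = ticket.get("question", "").strip()
        resolution = ticket.get("resolution", "").strip()
        if not question or not resolution:
            continue  # an unanswered ticket teaches the model nothing
        lines.append(json.dumps(ticket_to_record(ticket, system_prompt),
                                ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical sample data.
SYSTEM_PROMPT = "You are a support assistant for Acme Cloud."
TICKETS = [
    {"question": "How do I reset my password?",
     "resolution": "Use the 'Forgot password' link on the sign-in page."},
    {"question": "Why was I charged twice?", "resolution": ""},
    {"question": "Can I export my data?",
     "resolution": "Yes, go to Settings > Export and choose CSV."},
]

print(tickets_to_jsonl(TICKETS, SYSTEM_PROMPT))
```

Before training on real tickets, you would also want to strip personal data and review a sample of records by hand.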

Plan for Iteration: Your first deployment won’t be perfect. Build systems that let you easily update models, adjust parameters, and incorporate new training data.

Why Enterprises Trust Dextralabs?

At Dextralabs, we don’t just talk about AI – we deliver it. We help businesses across sectors transform open source LLMs into production-ready systems that actually move the needle.

What We Do:

- Custom AI Deployments: We tailor models to your industry and goals – no cookie-cutter setups.

- Secure Fine-tuning: We build private, compliant fine-tuning pipelines so your data stays protected.

- Enterprise-Ready Agents: From chatbots to intelligent assistants, we craft AI agents that are scalable, reliable, and fully integrated.

- Full-Org Integration: We help deploy LLM-powered tools across departments – from customer support to R&D – ensuring AI augments every corner of your business.

Our clients – from fintech in the UAE to e-commerce giants in the U.S. – have seen major wins, from faster response times to boosted team productivity. Let us help you build your next competitive advantage.

Looking Ahead

What excites me most about where we’re heading is that this technology is becoming democratized. You don’t need to be Google or OpenAI to build sophisticated AI applications anymore. Small companies can compete with large enterprises on AI capabilities.

The models are getting better, the tooling is improving, and the community is growing. We’re seeing more specialized models for specific tasks, better efficiency, and easier deployment options.

For businesses, the question isn’t whether to explore the best open source LLMs – it’s how quickly you can get started and what competitive advantages you can build with them.

The companies that figure this out early are going to have a significant advantage. The technology is ready, the models are available, and the community is there to help. What are you waiting for?

Empower your enterprise AI with the right open source LLM models and partner – start your journey with Dextralabs today.

This guide reflects the current state of open source LLMs as of 2025. The landscape moves quickly, so always verify the latest information about models, licenses, and capabilities before making implementation decisions.

Your Enterprise LLM Stack, Built for Scale

From private AI agents to secure on-prem deployments, Dextralabs turns open source models into production-ready tools for high-growth enterprises.

Book a Free AI Consultation

FAQs on Top Open Source LLMs:

Why are open-source LLMs important?

Open-source LLMs are reshaping how companies build with AI. They give teams full control over data, security, and custom features—which is a game-changer in regulated industries. Plus, they slash costs (no API paywalls!) and offer faster innovation cycles because you can fine-tune and iterate without waiting on closed ecosystems. In short: they put the power of AI in your hands, not behind someone else’s firewall.

What are the common challenges with open-source LLMs?

It’s not all sunshine and token streams. Open-source LLMs come with their own challenges:

- Compute costs: Hosting and fine-tuning require solid hardware or cloud GPUs.

- Model selection fatigue: With so many choices (LLaMA, Mistral, Gemma…), picking the right one can be overwhelming.

- Security & governance: You’re responsible for alignment, bias mitigation, and compliance.

- Lack of guardrails: Unlike ChatGPT or Claude, many open models don’t come with built-in moderation or safe outputs.

But with the right partner (like Dextralabs 👋), these hurdles become manageable—and even opportunities.

Are there FREE resources to learn open-source LLMs?

Absolutely. The open-source ecosystem thrives on community learning. Here are a few places to start:

- Hugging Face Transformers Course – Beginner-friendly and hands-on

- Mistral & Meta GitHub repos – Real-world LLM implementations

- YouTube channels like Yannic Kilcher & AssemblyAI – Deep dives, no fluff

- Papers with Code – Benchmark-driven learning by doing

- Dextralabs Blog & GitHub – (yes, ours!) Tutorials, guides, and fine-tuning blueprints from real-world enterprise projects

Open-source isn’t just a tool—it’s a mindset. And there’s never been a better time to dive in.