Ever pushed a build to production, only to watch it break in minutes?

If you’re reading this and nodding your head, you’re not alone. In an era of fast sprints, lean QA teams, and ever-growing software stacks, one missed bug can cost millions or, worse, user trust. To address this, modern teams are looking to AI in QA to transform the way they validate builds, review code, and detect defects before they hit production.

With leaner teams, tighter timelines, and intricate microservices, quality assurance must change. This is where AI-driven QA goes beyond just another buzzword; it becomes imperative. At Dextralabs, we assist engineering teams in transitioning from reactive QA to proactive, AI-driven release pipelines so they can deploy more quickly with fewer defects.

Understanding AI in QA: What It Means Today?

The software industry has relied on structured quality assurance approaches since the days of manual testing, through the rise of automation frameworks, and is now moving toward intelligent, adaptive systems. The goal is not just speed but smarter, more predictive QA pipelines.

What is AI in QA?

AI in QA (Quality Assurance) refers to the application of artificial intelligence techniques such as machine learning (ML), natural language processing (NLP), and generative AI to increase the accuracy, efficiency, and coverage of software testing processes.

Unlike rule-based automation tools, AI-driven QA systems can learn from historical test data, predict where bugs are most likely to occur, and autonomously generate and maintain test cases. They shift QA from validating reactively to building in quality proactively.

The Shift from Manual to Intelligent QA

QA has transitioned across three significant phases:

- Manual Testing: Human testers write and run tests by hand, which is slow and error-prone.

- Test Automation: Tools such as Selenium and Appium brought speed and repeatability, but their scripts required regular maintenance.

- AI-Driven QA: AI systems uncover flaws early in the development cycle, examine logs using natural language processing, and maintain self-healing test scripts.

Emerging Trends: Generative and Agentic AI

Two key advancements are reshaping the way we think about AI in QA automation:

1. Generative AI in QA Testing

By analyzing user stories, past errors, and application behavior, generative AI tools can now automatically create test scripts, data sets, and bug reports. This enhances coverage and speeds up test creation, particularly for edge cases.

For example, a generative model can simulate user paths that weren’t explicitly written, helping QA teams uncover issues before users do.

2. Agentic AI in QA Automation

Agentic AI systems go beyond generation. These AI agents do not simply assist; they work actively, monitoring, responding to, and refining testing strategies within sprints. They decide which areas require additional coverage and whether a test case is stale and needs rewriting.

These agentic models make QA processes more independent, adaptable, and robust, particularly for teams following continuous integration and delivery (CI/CD).

Key Use Cases: How AI Improves Pre-Production QA?

Despite being one of the most important stages of the software development lifecycle, pre-production testing is frequently not optimized because of manual restrictions and time constraints. By adding intelligence, flexibility, and automation to fundamental QA procedures, AI in QA is changing this phase.

Let’s examine the main areas in which engineering teams are immediately benefiting from AI QA tools.

1. Automated Test Case Generation

In QA, creating and maintaining test cases is one of the most frequent bottlenecks. AI in QA automation reduces the need for manual test creation.

AI models can automatically create test scripts that correspond with real-world usage by analyzing user flows, application behavior, and past defects.

- Generative AI in QA testing can simulate rare edge cases that human testers might overlook.

- This reduces test authoring time and improves functional coverage across releases.

By leveraging AI-driven test creation early in the AI deployment checklist, teams can significantly speed up onboarding for new features and product updates.
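To make the idea of deriving tests from user flows concrete, here is a minimal sketch. It is not a generative model: it assumes recorded flows are available as lists of screen names (a hypothetical format) and simply enumerates paths that the observed transitions allow but that no recorded session actually followed. A real tool would rank or filter these candidates with a learned model.

```python
from collections import defaultdict


def build_transitions(flows):
    """Map each screen to the set of screens users moved to next."""
    transitions = defaultdict(set)
    for flow in flows:
        for current, nxt in zip(flow, flow[1:]):
            transitions[current].add(nxt)
    return transitions


def candidate_paths(transitions, start, max_len):
    """Enumerate loop-free paths from `start` with at most `max_len` screens."""
    paths = []
    stack = [[start]]
    while stack:
        path = stack.pop()
        if len(path) > 1:
            paths.append(path)
        if len(path) == max_len:
            continue
        for nxt in sorted(transitions.get(path[-1], ())):
            if nxt not in path:  # skip loops so enumeration terminates
                stack.append(path + [nxt])
    return paths


def unseen_paths(flows, start, max_len=4):
    """Return plausible paths that are not a prefix of any recorded flow."""
    transitions = build_transitions(flows)
    seen = {tuple(flow[:i]) for flow in flows for i in range(1, len(flow) + 1)}
    return [p for p in candidate_paths(transitions, start, max_len)
            if tuple(p) not in seen]
```

Given the recorded flows `login > search > cart > checkout` and `login > cart > search`, the only untested candidate starting at `login` is `login > cart > checkout`, which could then be turned into a new test case.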

2. Predictive Failure Analytics

Traditional testing finds errors after they happen. By anticipating errors before testing even starts, AI enables teams to shift left.

To identify high-risk modules in the codebase, predictive analytics in QA leverages historical data, including issue trends, commit frequency, and test failure rates.

- By utilizing AI in QA testing, teams may reduce effort waste on low-risk regions by prioritizing testing where it’s most needed.

- Predictive models evolve and adapt over time, resulting in a more intelligent and targeted QA cycle.

This use of AI in QA improves confidence in production readiness while also cutting down on release delays.
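As a rough illustration of how historical signals can be combined into a risk ranking, the sketch below uses a hand-weighted heuristic in place of a trained model. The field names, the weights, and the caps (50 commits, 20 open issues) are all assumptions made for the example, not values from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    name: str
    commits_last_30d: int
    test_runs: int
    test_failures: int
    open_issues: int


def risk_score(m, weights=(0.4, 0.4, 0.2)):
    """Combine churn, failure rate, and open issues into a score in [0, 1]."""
    w_churn, w_fail, w_issues = weights
    churn = min(m.commits_last_30d / 50, 1.0)  # assumed cap: 50 commits
    fail_rate = m.test_failures / m.test_runs if m.test_runs else 0.0
    issues = min(m.open_issues / 20, 1.0)  # assumed cap: 20 open issues
    return w_churn * churn + w_fail * fail_rate + w_issues * issues


def rank_modules(history):
    """Order modules from highest to lowest estimated risk."""
    return sorted(history, key=risk_score, reverse=True)
```

In practice the weights would be learned from past releases rather than set by hand, but the ranking step, testing the highest-scoring modules first, stays the same.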

3. Self-Healing Test Scripts

In CI/CD environments, where code changes daily (or even hourly), static test scripts often fail. Self-healing automation, powered by AI, solves this problem.

These AI QA tools detect changes in application UI or API structure and update broken test cases automatically, eliminating the need for constant manual maintenance.

- By using generative AI in QA automation, teams can ensure scripts remain functional even as the product evolves.

- This drastically reduces test flakiness and increases release velocity.

Self-healing is now a must-have feature in any modern, AI-powered QA delivery pipeline.
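The core of a self-healing locator can be sketched in a few lines. This example assumes page elements are available as plain dictionaries of attributes (a simplification of a real DOM) and that the test stored the element's attributes when it was recorded. Commercial tools use richer signals and learned models; the attribute-overlap score and the 0.6 threshold here are illustrative assumptions.

```python
def similarity(saved, candidate):
    """Fraction of the saved attributes that the candidate still matches."""
    if not saved:
        return 0.0
    matches = sum(1 for key, value in saved.items() if candidate.get(key) == value)
    return matches / len(saved)


def find_element(page, saved, threshold=0.6):
    """Return (element, healed). Falls back to the closest match if the id changed."""
    saved_id = saved.get("id")
    for element in page:
        if saved_id is not None and element.get("id") == saved_id:
            return element, False  # original locator still works
    best = max(page, key=lambda element: similarity(saved, element), default=None)
    if best is not None and similarity(saved, best) >= threshold:
        return best, True  # locator was "healed" to a similar element
    return None, False
```

When `healed` is true, a tool would typically log the substitution and update the stored locator so that a human can review the change later.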

4. AI-Powered Test Data Generation

Good testing needs a variety of high-quality data. Datasets created manually take a lot of effort and are frequently incomplete.

AI can automatically generate dynamic, privacy-compliant test data that resembles real user behavior without revealing personal information.

- This ensures that test scenarios are robust and realistic while reducing the need to copy production data.

- AI-generated data adapts to different input types and edge cases, reducing gaps in test coverage.

For regulated industries, using AI in QA for data generation also supports compliance by enforcing guardrails around user privacy.
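The sketch below shows the shape of a synthetic data generator. It is a seeded, rule-based stand-in for a learned generator, and the record fields and edge cases are hypothetical. No production data is read, and email addresses use the reserved `.test` top-level domain so they can never reach a real inbox.

```python
import random
import string

# Hand-picked boundary records that random sampling is unlikely to produce.
EDGE_CASES = [
    {"username": "", "email": "@example.test", "age": 18, "balance_cents": 0},
    {"username": "x" * 64, "email": "x@example.test", "age": 90,
     "balance_cents": 1_000_000},
]


def synthetic_user(rng):
    """Build one random but well-formed user record."""
    name = "".join(rng.choices(string.ascii_lowercase, k=8))
    return {
        "username": name,
        "email": f"{name}@example.test",
        "age": rng.randint(18, 90),
        "balance_cents": rng.randint(0, 1_000_000),
    }


def generate_dataset(n, seed=0):
    """Return the edge cases plus n random records; the same seed gives the same data."""
    rng = random.Random(seed)
    return EDGE_CASES + [synthetic_user(rng) for _ in range(n)]
```

Seeding the generator keeps test runs reproducible, which matters when a failure has to be replayed against the exact same data.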

5. Smart Defect Detection & Reporting

A bug can be corrected more quickly if it is identified and replicated more quickly. AI can examine logs, exceptions, and stack traces using NLP-driven QA tools to automatically provide thorough, repeatable issue reports.

- Defects are also categorized and prioritized by these tools according to their frequency, severity, and modules that are impacted.

- As part of a complete AI QA process, this ensures better alignment between developers and testers, reducing triage cycles.

This kind of intelligent automation turns noisy logs into actionable insights, bringing a new level of speed and clarity to AI-enhanced engineering teams.
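A simplified version of this triage step can be written with regular expressions instead of a full NLP model. The log line format (`LEVEL [module] message`), the severity weights, and the priority formula are assumptions for illustration only.

```python
import re
from collections import Counter

SEVERITY = {"CRITICAL": 3, "ERROR": 2, "WARNING": 1}
LINE_RE = re.compile(
    r"^(?P<level>CRITICAL|ERROR|WARNING)\s+\[(?P<module>[\w.]+)\]\s+(?P<msg>.*)$"
)


def signature(msg):
    """Replace numbers so that one defect is not split into many reports."""
    return re.sub(r"\d+", "<n>", msg)


def triage(lines):
    """Group matching log lines by defect signature and sort by priority."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:  # lines at other levels (e.g. INFO) are ignored
            key = (match["level"], match["module"], signature(match["msg"]))
            counts[key] += 1
    reports = [
        {"level": level, "module": module, "signature": sig,
         "count": n, "priority": SEVERITY[level] * n}
        for (level, module, sig), n in counts.items()
    ]
    return sorted(reports, key=lambda report: report["priority"], reverse=True)
```

Each resulting report carries the module, a normalized message, and a count, which is enough to file one ticket per defect rather than one per log line.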

What are the AI Deployment Checklists for QA Teams?

AI in QA is a strategy change rather than merely a tooling choice. To get the most out of AI automation, teams must prepare their workflows, infrastructure, and mindset.

QA leaders can use this AI deployment checklist to assess if their team is prepared to incorporate AI into testing procedures and how best to scale it.

1. Readiness Assessment

Before bringing AI into your QA workflow, assess the current landscape:

- Team AI maturity: Do your engineers understand how AI tools work and where they fit into the test lifecycle? If not, some basic enablement and training go a long way.

- Tool compatibility: For smooth automation and tracking, make sure your current stack, such as JIRA, Selenium, or Jenkins, can interface with AI QA technologies.

Teams that evaluate preparedness in advance avoid foreseeable implementation and onboarding issues.

2. Infrastructure Checklist

AI-powered testing often requires different infrastructure capabilities than traditional testing.

- Do you need GPU-based infrastructure to support model training or inference? This is especially important for teams deploying large-scale generative or agentic models.

- What are your CI/CD integration paths? AI testing tools should plug into your existing pipelines without disrupting workflows.

Having the right infra in place ensures your AI in QA automation runs efficiently and scales with product growth.

3. Model + Tool Selection

Choosing the right AI QA tools and language models is critical to success.

- Tools like Testim, Mabl, or Applitools offer varying degrees of automation, UI support, and flexibility.

- For more tailored needs, custom Dextralabs solutions combine LLMs with QA frameworks to deliver purpose-built test agents.

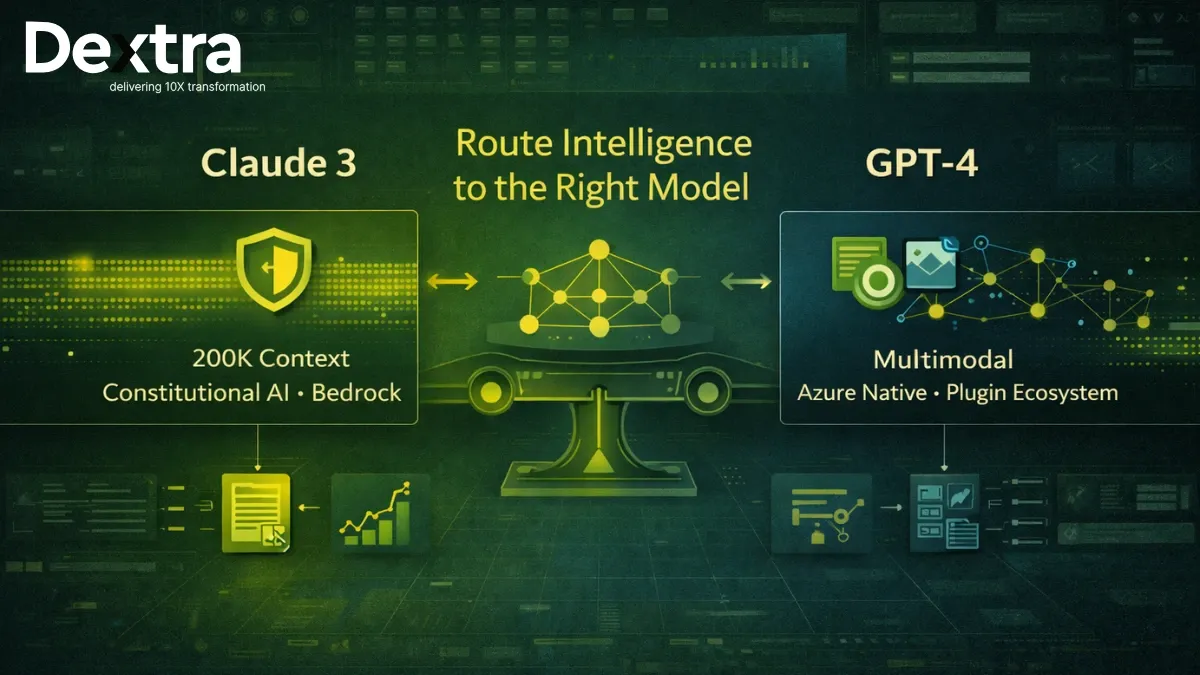

- Select LLMs (large language models) based on your use case:

- Use Meta’s open models for customizable QA agents

- Choose Mistral AI for high performance in smaller environments

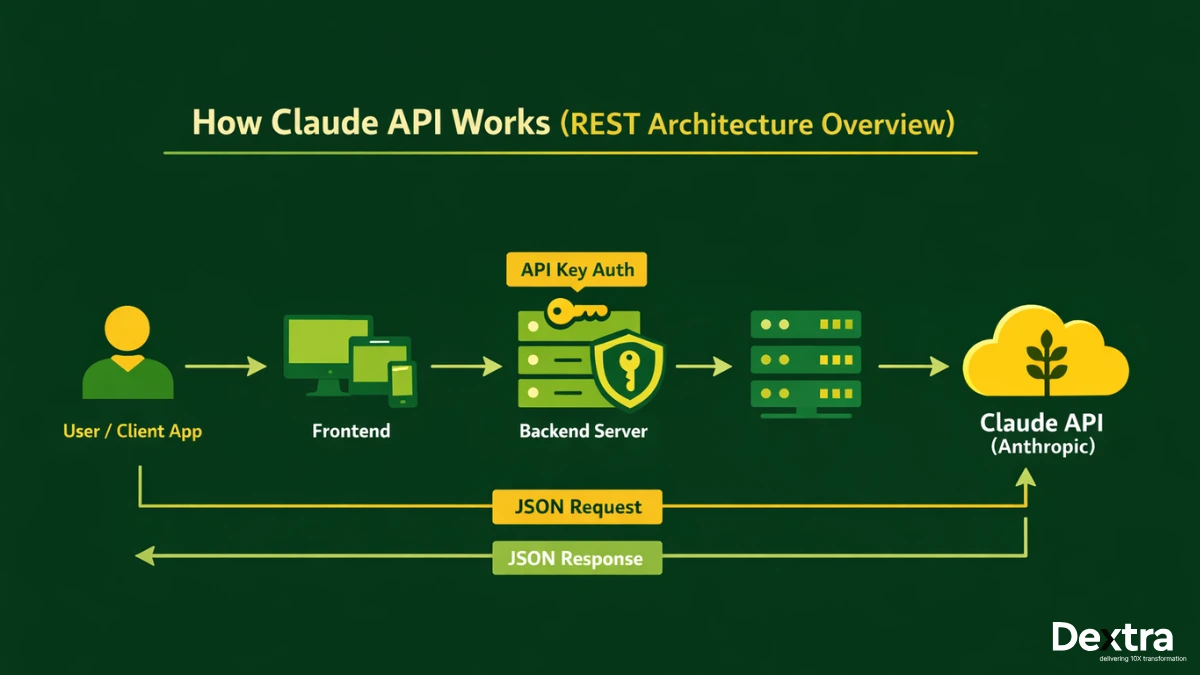

- Opt for Anthropic’s Claude when explainability and safety guardrails are essential

This model-agnostic approach gives teams the flexibility to evolve their stack over time.

4. Privacy & Compliance

Working with sensitive data? AI doesn’t exempt you from compliance requirements.

- Handle data responsibly in regulated industries, especially in finance, healthcare, or EdTech.

- Set access controls and use role-based permissions for test environments.

- Build in guardrails for how AI interacts with sensitive data, particularly when generating synthetic test cases.

A secure AI QA process isn’t just a technical requirement; it’s a trust signal to customers and stakeholders.
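One simple guardrail is to mask obvious personal data before logs or fixtures are passed to an AI tool. The sketch below uses two regular expressions, one for email addresses and one for 13 to 16 digit card-like numbers. These patterns are deliberately rough assumptions: they will miss some PII and may mask long numeric IDs, so they complement rather than replace a proper data governance process.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def mask_pii(text):
    """Replace email addresses and card-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return CARD_RE.sub("[CARD]", text)
```

Running every prompt, log excerpt, or fixture through a filter like this before it leaves the test environment gives auditors a single place to inspect.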

5. Monitoring & Continuous Learning

AI doesn’t stop learning once it’s deployed, and neither should your QA strategy.

- Set up feedback loops to retrain or fine-tune models based on outcomes (e.g., failed tests, false positives).

- Use alerting mechanisms to monitor for irregularities in test results, model behavior, or integration points.

Continuous learning helps AI evolve with your product, making it an increasingly valuable part of your AI-enhanced QA delivery.
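An alerting rule for irregular test results can start out very simple. This sketch flags a run whose failure rate sits more than `k` standard deviations above the historical mean. The threshold of three deviations and the minimum of five prior runs are assumptions to tune per project.

```python
from statistics import mean, pstdev


def is_anomalous(history, latest, k=3.0, min_runs=5):
    """Return True if `latest` is unusually high compared with past failure rates."""
    if len(history) < min_runs:
        return False  # not enough data to judge
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return latest > mu  # any increase over a perfectly flat history
    return latest > mu + k * sigma
```

A pipeline step could call this after each run and page the team, or trigger model retraining, only when it returns true.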

Choosing the Right AI QA Tools for Automation:

With the growing interest in AI in QA, a wide variety of tools have emerged, each offering unique capabilities across the testing spectrum. However, selecting the right solution isn’t just about features. It’s about finding the best fit for your workflows, release velocity, and team structure.

This section breaks down how to evaluate AI QA tools effectively and use them strategically across your testing pipeline.

Aligning Tools with Use Cases

Not all testing needs are equal. Some teams require full UI testing support, while others are focused on backend workflows or mobile coverage.

Here’s how leading tools map to real-world use cases:

| Tool | Best For | Key Features |
| --- | --- | --- |
| Mabl | UI and regression testing | Self-healing scripts, visual testing, low-code workflows |
| Testim | Agile and fast-moving teams | AI-assisted test authoring, dynamic locators, CI integrations |
| Katalon | API and data-driven testing | Scripting flexibility, analytics dashboard, built-in reporting |
| Dextralabs | Custom AI QA deployments | Agentic QA systems, LLM integration, model-agnostic tooling |

When choosing a tool, think beyond just automation. Consider how easily it fits into your CI/CD setup, the learning curve for your QA team, and how much manual work it can realistically offload.

What to Prioritize in AI QA Tools?

To make the most of AI in QA automation, here are some key capabilities to look for:

- Self-healing test scripts: Adjust to changes in UI, structure, or APIs without breaking

- NLP-driven test creation: Auto-generate test cases based on user stories or requirements

- Visual testing: Compare UI snapshots across builds to catch rendering issues

- No-code/low-code interfaces: Empower non-engineers to contribute to test automation

- Cross-platform support: Ensure coverage for mobile, web, and backend systems

Selecting a tool that supports these functions ensures your AI investment delivers tangible value across releases.

Why Customization Matters?

In many cases, off-the-shelf AI tools solve general problems but not domain-specific challenges. That’s why some teams opt for custom QA AI agents built on models from Meta, Mistral, or Anthropic.

At Dextralabs, we help teams build tailored AI QA solutions that:

- Integrate seamlessly into existing test infrastructure

- Support LLM-driven generation, triage, and learning

- Offer flexibility to evolve with product complexity

Whether you’re starting small or scaling fast, choosing the right AI QA tool can determine the success of your QA transformation.

Gen AI and Agentic AI in QA: The Next Frontier

As AI becomes more embedded in the software development lifecycle, the next evolution of QA is no longer just about automation; it’s about autonomy.

Generative AI and agentic AI systems are transforming how QA teams work by enabling machines not only to support testing but to actively participate in it. These technologies are opening the door to faster releases, higher test coverage, and fewer manual interventions across the board.

What is generative AI in QA?

Generative AI in QA refers to the ability of AI models to create meaningful testing assets like test scripts, datasets, bug summaries, or even test plans based on inputs such as user stories, past issues, or code changes.

These models can:

- Generate test cases for both typical and edge user behaviors

- Automatically document test outcomes and exceptions

- Simulate real-world user interactions without pre-programmed scenarios

Instead of relying on static test suites, generative AI in QA evolves alongside your product, filling in test gaps and increasing quality coverage.

Introducing Agentic AI in QA Automation

Agentic AI goes one step further.

Unlike traditional AI models that require human prompts, agentic AI systems operate independently. They monitor systems, make decisions, learn from feedback, and execute actions without needing constant direction.

In the context of QA, these AI agents can:

- Decide which modules to test based on recent code changes

- Adjust testing priorities in real-time during sprint cycles

- Alert teams when anomaly patterns emerge in test results

- Recommend fixes or improvements by learning from past bugs

This approach makes agentic AI in QA automation ideal for teams practicing continuous testing or managing microservice-heavy architectures.
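The first of these decisions, choosing what to test from recent code changes, can be sketched as a lookup against a coverage map. The map from test names to covered files is assumed to exist already (for example, exported from a coverage tool), and the names used here are hypothetical. An agent would add learning and prioritization on top of this basic selection.

```python
def select_tests(changed_files, coverage_map, always_run=()):
    """Pick tests whose covered files overlap the changed files."""
    changed = set(changed_files)
    selected = {
        test for test, files in coverage_map.items() if changed & set(files)
    }
    # Smoke tests or similar run regardless of what changed.
    return sorted(selected | set(always_run))
```

For a change touching only `payment.py`, a map in which `test_checkout` covers `cart.py` and `payment.py` yields just `test_checkout` plus anything listed in `always_run`.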

Dextralabs in Action: Real-World Results

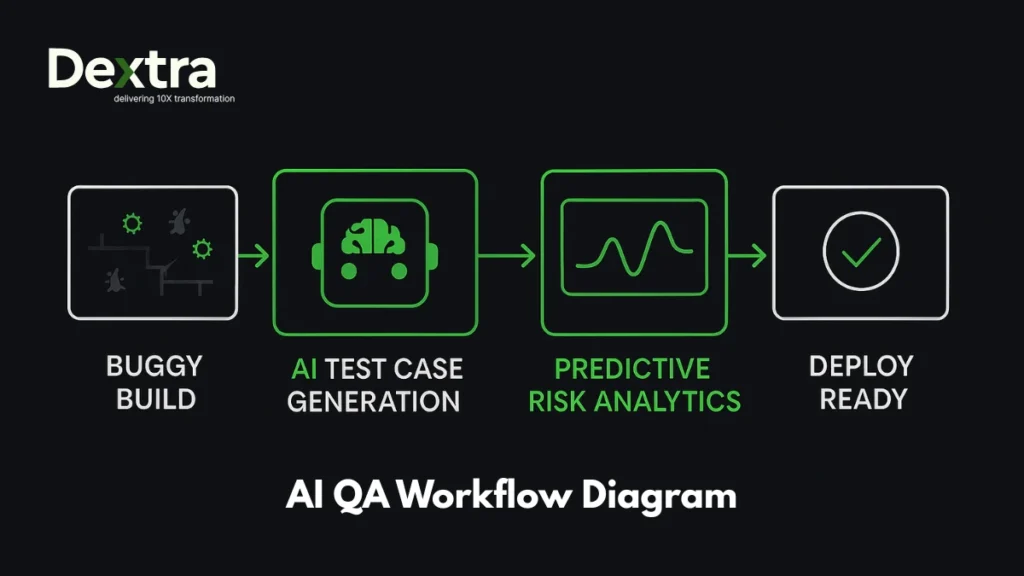

At Dextralabs, we recently deployed an agentic QA system for a SaaS startup struggling with flaky tests and unstable release cycles. Let’s have a look at our Ai QA “Workflow Diagram” given below:

Within three months of integrating autonomous AI agents into their CI/CD pipeline:

- Production bugs dropped by 60%

- Regression testing time reduced by 40%

- Test suite maintenance time decreased significantly

The system didn’t just improve outcomes; it changed how the QA team worked, giving them time to focus on exploratory testing and strategic improvements.

Why Does This Matter?

Generative and agentic AI are not just new tools; they represent a shift in mindset. QA is no longer a passive safety net. With the right AI models and tooling in place, it becomes a proactive, evolving partner in software delivery.

This is the future of AI in QA: not just fast, but smart. Not just scalable, but strategic.

What are the Challenges of Using AI in QA?

Though the advantages of AI in QA range from speed to coverage to predictive insights, adoption does have its challenges. Teams moving from manual or script-based testing can experience resistance, both technical and cultural.

Recognizing these challenges early helps QA leaders prepare better AI deployment checklists and avoid implementation setbacks.

1. Learning Curve and Adoption Resistance

Even the most capable AI QA tools won’t provide value if the team is not ready to employ them. QA engineers and automation testers will experience a learning curve while working with machine learning models, NLP-based test platforms, or generative systems.

Some teams might also resist replacing workflows they’ve had in place for years. That’s why successful AI rollouts frequently pair tooling with upskilling, internal enablement, and cross-functional alignment.

2. False Positives and Negatives

AI models learn from data, and like any learning system, they make mistakes early on. In QA, this can show up as:

- False positives: Flagging issues where none exist, creating unnecessary noise

- False negatives: Missing actual bugs due to lack of training or context

Early-stage models may also misclassify defects or suggest incorrect test paths. Continuous feedback loops and domain-specific tuning are essential to improving accuracy over time.
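To track whether tuning is actually improving accuracy, teams can measure precision (how many flagged issues were real) and recall (how many real bugs were flagged). The sketch below assumes two parallel lists of booleans, one for what the model flagged and one for what reviewers later confirmed. The input format is an assumption for illustration.

```python
def precision_recall(predicted, actual):
    """Compute precision and recall from parallel lists of booleans."""
    tp = fp = fn = 0
    for flagged, real in zip(predicted, actual):
        if flagged and real:
            tp += 1  # correctly flagged bug
        elif flagged and not real:
            fp += 1  # false positive: noise
        elif real and not flagged:
            fn += 1  # false negative: missed bug
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Low precision points to too much noise, while low recall points to missed bugs. Tracking both per release shows which of the two problems the feedback loop should target.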

3. Data Privacy and Compliance

Working with AI during testing often includes examining user flows, logs, and datasets that can include sensitive data. This raises data handling concerns, particularly in regulated sectors.

Without proper guardrails, teams can be at risk of exposing personally identifiable information (PII) or breaking compliance regulations such as GDPR, HIPAA, or SOC 2.

QA AI systems must be designed with data governance in mind, from anonymizing test data to enforcing strict access controls.

4. Integration with Legacy Systems

Not all applications are cloud-native. Older monoliths, in-house QA tools, or heavily customized systems can be difficult to integrate with new AI platforms.

Although some AI QA automation tools provide REST APIs and plug-ins for legacy environments, others might need considerable setup or refactoring.

Teams must factor these infrastructure and integration requirements into their overall AI in QA planning, particularly before scaling.

5. Overreliance on AI

AI is a tool, not a replacement for human judgment.

It’s tempting to let AI take over large parts of the QA process, but without human-in-the-loop supervision, automation can spiral into poor decisions or blind spots.

The most effective QA strategies use AI to enhance, not replace, human testers. For example, AI might prioritize defects, while humans assess business impact and customer experience.

Future Scope: What’s Next for AI in QA?

The use of AI in QA is not just a passing trend; it’s laying the foundation for the future of software delivery. As development cycles become even faster and software complexity increases, QA must grow from being a reactive gatekeeper to an intelligent, proactive system.

Here’s a look at what’s next for AI in QA, and how current transformations are shaping tomorrow’s best practices.

1. Rise of Autonomous Testing Pipelines

We’re moving beyond AI-assisted testing to fully autonomous QA workflows. These systems will not just execute tests but also decide what to test, when, and how, all without human intervention.

- AI agents will monitor sprint activity, code churn, and feature flags to determine dynamic testing needs.

- Generative models will build and execute test plans that adapt in real time.

- Anomaly detection systems will proactively flag release risks before deployment.

This level of automation will redefine how AI QA tools are positioned, not as helpers, but as virtual teammates.

2. QA as a Strategic Function

As QA AI capabilities grow, quality assurance will shift from a cost center to a strategic differentiator.

- Insights from AI models can inform product decisions, UX improvements, and release readiness.

- Predictive analytics will allow leadership to visualize quality trends across product lines, helping teams make data-driven decisions around resourcing and timelines.

As the use and scope of AI in QA expand, QA will play a more central role in business outcomes, not just technical validation.

3. AI Copilots for Testers and Developers

Just as developers now use GitHub Copilot, Cursor, or ChatGPT for coding, QA professionals will work side by side with AI copilots that suggest test cases, summarize results, and recommend fixes.

- These copilots will reduce repetitive work and allow testers to focus on exploratory testing, usability, and edge cases.

- Developers will also benefit from receiving inline QA suggestions during code commits, bridging the gap between testing and development.

4. Intelligent Collaboration Between Humans and Machines

The future isn’t about replacing people; it’s about better collaboration.

- AI will manage the operational layer: test creation, data generation, error logging

- Humans will provide context, make judgment calls, and guide the system over time

This AI in QA process will create feedback loops that continuously improve both the AI systems and the products being tested.

At Dextralabs, we’re already working with teams to prepare for this future: building AI-powered pipelines, deploying agentic QA systems, and designing LLM-based copilots tailored to their workflows.

The question isn’t if AI will become a core part of your QA team; it’s how soon you’re ready to embrace it.

Why Partner with Dextralabs for AI-Powered QA?

At Dextralabs, we understand that every software team is different. That’s why we offer custom QA solutions powered by AI agents, LLMs, and automation frameworks built around your unique needs.

Our approach includes:

- Full-stack AI integration (UI, API, data, compliance)

- Support for models from OpenAI, Anthropic, Mistral, and Meta

- Tools like RAG workflows, prompt guardrails, and fine-tuned LLMs

- Flexible support for startups, scale-ups, and enterprise teams

Conclusion

AI won’t replace your QA team, but it will enhance it.

By adopting AI in QA early, you can shift left, catch issues faster, and deploy with confidence. Whether you’re running weekly sprints or managing pipelines, AI can help you go from buggy builds to clean, reliable deploys without burning out your team.

Want to explore how Dextralabs can help integrate AI into your QA process?

Let’s talk. We’ll help you build smarter testing systems tailored to your stack.

FAQs on AI in QA:

Q. What tools can simulate AI brand output in different languages for QA purposes?

Tools like Google’s Vertex AI, Amazon Translate with Amazon Comprehend, and OpenAI’s GPT models with multilingual prompts can simulate brand-specific tone and content in multiple languages for QA. When combined with LLM fine-tuning or prompt engineering frameworks, testers can verify localization accuracy, brand alignment, and tone-of-voice across markets. QA teams often use snapshot diff tools (e.g., Percy) alongside LLM APIs to auto-check semantic drift, cultural missteps, and translation fidelity at scale.

For enterprise-grade needs, platforms like DeepL API, Unbabel, and custom RAG pipelines (Retrieval-Augmented Generation) enable context-aware multilingual QA simulations, especially when tuned with company-specific knowledge bases.

Q. How is AI used in QA?

AI augments QA by automating and intelligently optimizing every stage of the testing lifecycle. This includes:

- Test case generation: Generative AI creates test scenarios from user stories or code changes.

- Predictive analytics: ML models detect modules likely to fail using historical bug data.

- Self-healing tests: AI automatically updates broken scripts when UI or API structures change.

- Defect triage: NLP-driven tools auto-classify and prioritize bugs by parsing logs and user reports.

- Synthetic data generation: AI tools generate realistic, privacy-safe test datasets.

The outcome is fewer manual bottlenecks, faster regression cycles, and higher release confidence in CI/CD pipelines.

Q. Will QA testers be replaced by AI?

Not replaced, but redefined. AI doesn’t eliminate the QA role—it elevates it. While AI can write test scripts, triage bugs, or simulate user flows, critical thinking, exploratory testing, and ethical oversight remain human-driven.

Modern QA testers are becoming AI-augmented engineers, who design feedback loops, audit AI behavior, and validate that automated decisions are contextually correct. Think of AI as a copilot—one that helps you go faster, but not without a pilot in the seat.

Q. Which AI tool is best for QA testing?

The “best” tool depends on your tech stack and QA maturity. Here’s a quick breakdown:

1. Testim (by Tricentis): Best for AI-driven UI testing with self-healing capabilities.

2. Mabl: Great for cloud-native teams needing AI-assisted test creation + execution.

3. Katalon Studio: Affordable, end-to-end with AI for visual and regression testing.

4. Applitools: Visual AI testing at pixel-perfect accuracy.

5. Dextralabs Solutions: Ideal for companies needing custom AI agents, RAG-powered validations, or domain-specific QA copilots.

Choose tools that support integrations (JIRA, Jenkins, GitHub), provide explainable AI, and adapt to your CI/CD rhythm.

Q. How to become an AI/QA tester?

To thrive in AI-driven QA, combine traditional testing expertise with AI literacy:

1. Master core QA principles: Test design, SDLC, automation frameworks (Selenium, Cypress).

2. Learn AI concepts: ML, NLP, generative AI, prompt engineering.

3. Get hands-on with tools: Try Mabl, Testim, and GPT-based QA agents.

4. Understand model behavior: Learn how LLMs make decisions—bias, hallucinations, guardrails.

5. Build Python + API skills: Most AI QA tools expose APIs for test orchestration.

Courses on Coursera, Udacity, or even Dextralabs’ upcoming AI QA bootcamp can jumpstart your journey.

Q. How can AI help in quality assurance?

AI enhances QA by adding speed, scale, and intelligence to traditional testing:

- Faster feedback loops via intelligent test selection and defect prediction.

- Scalable test coverage using autonomous agents that simulate thousands of scenarios.

- Smarter insights from log parsing, anomaly detection, and user behavior mapping.

Instead of waiting for errors to surface in production, AI helps you preemptively strike by understanding patterns, intent, and risk.

Q. What is an AI testing tool?

An AI testing tool is a software platform that uses artificial intelligence (often ML or LLMs) to:

- Generate test cases based on app logic or user flows.

- Detect UI/API changes and auto-adjust test scripts.

- Identify bugs by learning from past test cycles and logs.

- Create or sanitize data intelligently for edge case testing.

These tools extend beyond traditional automation—they learn, adapt, and optimize. Some also integrate Gen AI to simulate real-world conversations or behaviors during testing.

Q. How is AI used in QA automation?

AI enhances QA automation by making it resilient and context-aware. Here’s how:

- Test Case Authoring: LLMs interpret requirements and auto-generate test logic.

- Script Maintenance: Tools like Testim use AI to “heal” broken selectors.

- Failure Prediction: AI predicts flaky tests and isolates root causes.

- Conversational Test Design: Testers can describe what they want, and NLP engines build tests on the fly.

These innovations reduce test flakiness, increase velocity, and cut maintenance costs by over 50% in some teams.

Q. What is the role of AI in quality assurance?

AI plays a strategic role in QA by shifting it from reactive testing to proactive quality engineering. It helps in:

- Prevention over detection: Predict bugs before they occur.

- Continuous quality: Embedded testing in DevOps with autonomous validation.

- Augmenting testers: Providing AI copilots that suggest, test, and even fix issues.

In essence, AI turns QA into a continuous intelligence layer across the software lifecycle.

Q. How is AI changing test automation?

AI is transforming test automation from a rules-based engine to a learning system:

- Scripts are now dynamic, not hardcoded.

- Test selection is driven by risk, not randomness.

- Bugs are traced via semantic understanding, not static logs.

AI-driven automation also enables codeless test authoring, multilingual simulation, and autonomous agentic QA, redefining how and when testing happens—often without human prompting.