Artificial intelligence is rapidly becoming an essential part of daily life. Large Language Models (LLMs) are increasingly used within organizations to inform decisions and support staff in their work. They are also being deployed for customer support and for processing large data sets.

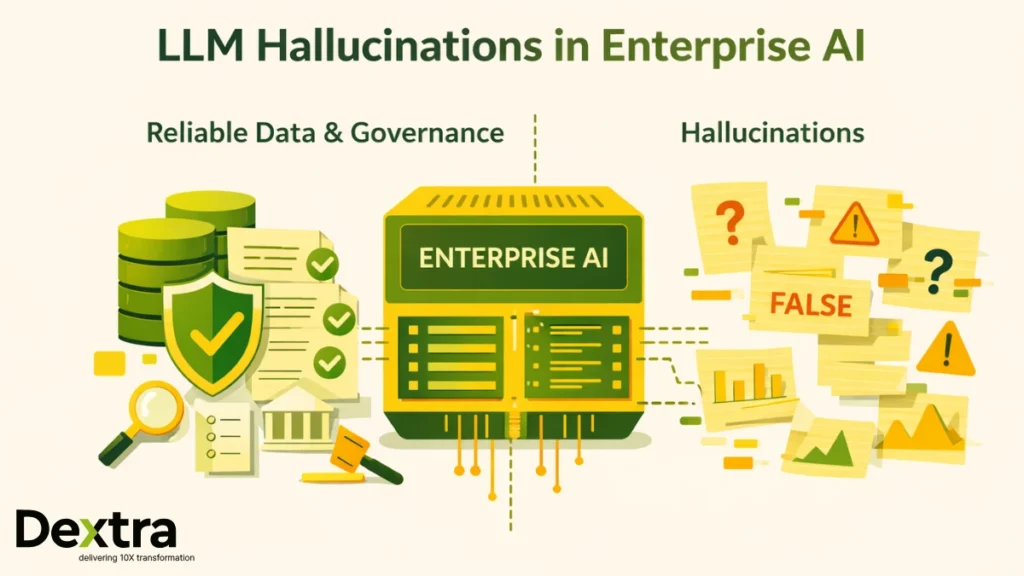

While these systems offer speed and efficiency, they also introduce a serious and often misunderstood challenge — LLM hallucinations. An AI hallucination occurs when a model produces information that sounds confident and correct but is factually wrong. In enterprise environments, this is not just a technical issue. It is a business risk.

Industry research reinforces this concern. According to AIMultiple’s research on AI hallucinations, large language models can generate responses that appear plausible but are factually incorrect, and even the latest models still exhibit notable error rates across diverse tasks.

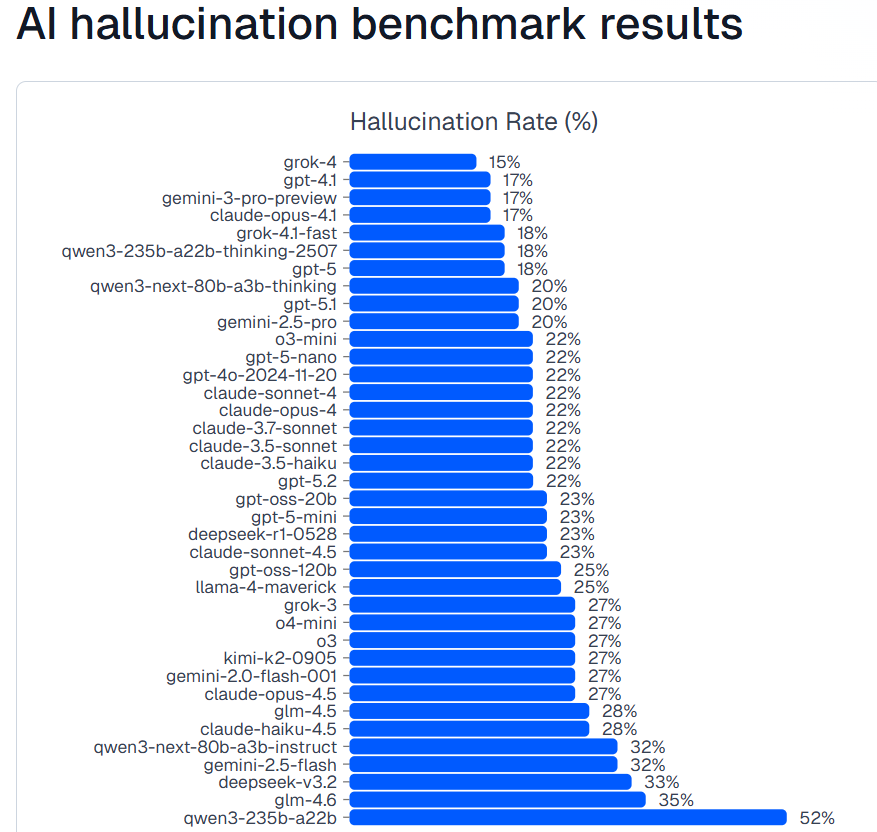

In benchmarking studies of multiple LLMs, overall hallucination rates often exceed 15%, reflecting persistent challenges in accuracy across models and domains.

For organizations using AI in real workflows, hallucinations can lead to financial loss, compliance failures, damaged customer trust, and poor strategic decisions. This is why enterprises are shifting their focus from “how powerful the model is” to how reliable, controllable, and auditable the AI system truly is.

Dextralabs helps organizations design and deploy enterprise AI systems that prioritize trust, accuracy, and governance, not just automation.

Why LLM Hallucinations Are an Enterprise Risk, Not a Model Bug

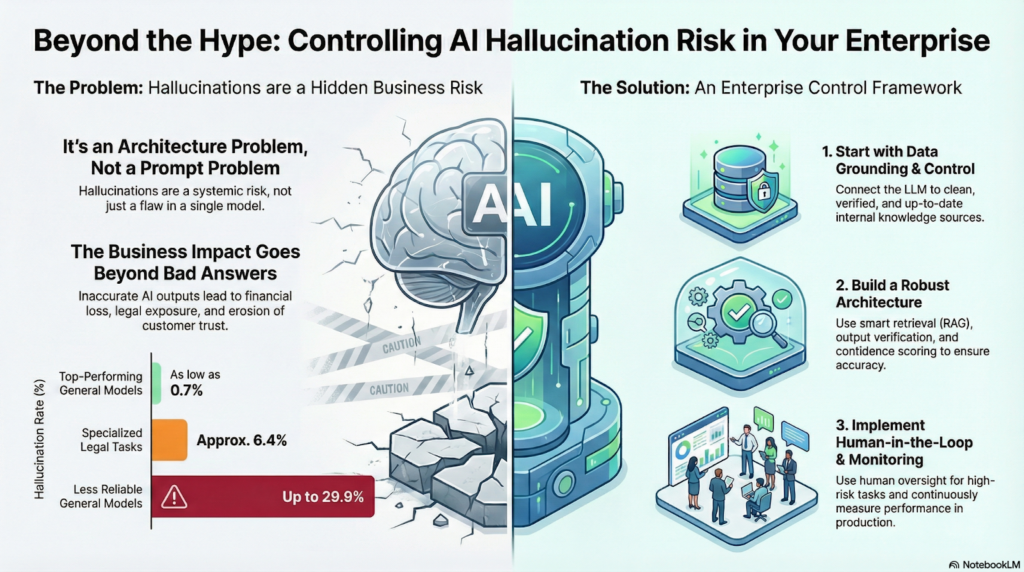

Many teams assume hallucinations happen because a model is “not smart enough” or because prompts are poorly written. In reality, hallucinations are a systemic issue, not a single model flaw.

LLMs are probabilistic systems. They generate responses based on patterns in data, not verified truth. When enterprises rely on these outputs for real decisions — approving transactions, responding to customers, generating reports, or guiding operations — even a small error rate can create major consequences.

This is why LLM hallucinations in enterprise AI must be treated as a risk management problem, similar to cybersecurity, data privacy, or financial controls.
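
To make the "small error rate" point concrete, here is a minimal arithmetic sketch. It assumes every output is wrong independently and with the same probability, which is a simplification, and the 1% rate and the volumes are illustrative numbers rather than measurements.

```python
def prob_at_least_one_error(error_rate: float, num_outputs: int) -> float:
    """Chance that at least one of num_outputs is wrong, assuming independent errors."""
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return 1.0 - (1.0 - error_rate) ** num_outputs


# Illustrative numbers only: a hypothetical 1% per-output error rate.
for volume in (10, 100, 1000):
    print(volume, round(prob_at_least_one_error(0.01, volume), 3))
```

Under these assumptions, a workflow that produces 100 outputs has roughly a 63% chance of containing at least one error, even though each individual output is 99% likely to be correct.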

Why Enterprises Should Be Concerned

- AI systems now influence business decisions, not just content creation

- Hallucinations often go unnoticed because the outputs sound confident

- Verification costs increase as teams double-check AI results

- Regulatory bodies are paying closer attention to AI accuracy and accountability

These risks are not theoretical.

Without the right safeguards, hallucinations become a hidden operational risk.

The Real Cost of False AI Outputs in Business Automation

When AI systems hallucinate in consumer chatbots, the impact may be limited. In enterprise workflows, the cost is much higher.

Financial Impact

Inaccurate AI-generated insights have an immediate, tangible financial impact. Enterprises widely use AI systems for pricing, forecasting, and operations, so inaccurate details can spread and cause revenue loss.

- Wrong pricing decisions can cause loss of competitiveness or reduced profits.

- Inaccurate financial projections may mislead management, leading to poor investment or budget decisions.

- Revenue can be lost when automated business processes act on incorrect AI-generated results.

These mistakes compound over time, raising operating costs and undermining the integrity of AI-driven decisions.

Compliance and Legal Risk

In regulated domains, AI hallucinations create serious compliance challenges.

- Incorrect legal citations can expose the organization to legal disputes.

- Non-compliant documentation can breach regulatory requirements.

- Audits can fail when the authenticity and traceability of AI-generated data are questioned.

Brand and Trust Damage

Customer trust erodes quickly when AI systems deliver inconsistent or incorrect information.

- Support agents sharing inaccurate answers

- AI responses contradicting company policies

- Errors repeating at scale

In many cases, the long-term cost of lost trust is far greater than the cost of fixing the AI system itself.

What Causes LLM Hallucinations Beyond Prompt Engineering?

Prompt engineering alone cannot solve hallucinations. Enterprises that rely only on prompts are treating symptoms, not root causes.

1. Lack of Grounded Knowledge

LLMs do not “know” your internal data unless it is provided correctly. Without grounding, models guess.

2. Poor Retrieval-Augmented Generation (RAG) Design

RAG systems can reduce hallucinations, but only when:

- Retrieval is accurate

- Context is relevant

- Sources are validated

Poor retrieval increases hallucinations instead of reducing them.

3. Data Quality and Context Gaps

Outdated, incomplete, or faulty data feeds lead to inaccurate or inconsistent outputs.

4. Over-Automation Without Validation

When AI outputs trigger actions automatically, errors spread faster and cost more.

This is why AI hallucinations are an architecture problem, not just a model problem.

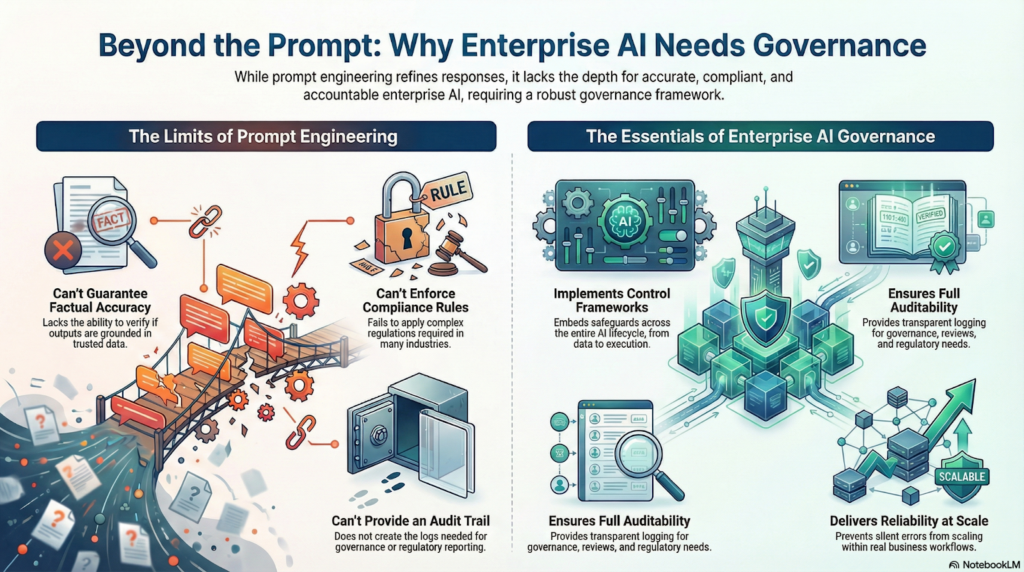

Why Prompt Engineering Is Not Enough for Enterprise AI

Prompt engineering can improve AI responses, but it works only at the surface of the model. It does not address the larger problems that arise when such models are deployed in business environments, where accuracy, accountability, and compliance matter more than well-phrased outputs.

While prompts can guide tone and structure, they cannot:

- Verify factual accuracy or confirm whether an output is grounded in trusted data

- Enforce compliance rules required in regulated industries

- Measure hallucination risk across different use cases and workflows

- Provide audit trails needed for governance, reviews, and regulatory reporting

Relying solely on prompts leaves organizations exposed to silent errors that scale quickly.

Enterprises require control frameworks, not clever prompts. Dextralabs focuses on AI orchestration services that embed safeguards across every layer of the AI lifecycle — from data and retrieval to reasoning, evaluation, and execution — ensuring AI systems remain reliable, auditable, and safe at scale.

Enterprise Control Frameworks That Actually Reduce Hallucinations

Reducing hallucinations requires a multi-layered approach. There is no single fix.

1. Data Grounding and Context Control

Reliable AI starts with reliable data.

- Clean, structured knowledge sources

- Clear ownership of data accuracy

- Metadata tagging and versioning
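
As a rough illustration of the ownership, tagging, and versioning points above, the sketch below attaches metadata to each knowledge chunk and keeps only the newest, recently reviewed version per source. The `KnowledgeChunk` fields, the `select_current` helper, and the 365-day review window are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class KnowledgeChunk:
    text: str
    source: str          # logical document the chunk belongs to
    owner: str           # team accountable for its accuracy
    version: int
    last_reviewed: date


def select_current(chunks, today, max_age_days=365):
    """Keep the highest version per source, then drop chunks not reviewed recently."""
    latest = {}
    for chunk in chunks:
        best = latest.get(chunk.source)
        if best is None or chunk.version > best.version:
            latest[chunk.source] = chunk
    return [c for c in latest.values()
            if (today - c.last_reviewed).days <= max_age_days]


chunks = [
    KnowledgeChunk("Refunds within 14 days.", "refund-policy", "support-ops", 1, date(2024, 1, 10)),
    KnowledgeChunk("Refunds within 30 days.", "refund-policy", "support-ops", 2, date(2025, 1, 10)),
    KnowledgeChunk("Legacy price list.", "pricing", "finance", 3, date(2020, 5, 1)),
]
for chunk in select_current(chunks, today=date(2025, 6, 1)):
    print(chunk.source, chunk.version, chunk.owner)
```

Only chunks that pass this filter would be offered to the model as context, so superseded or unreviewed content never reaches the prompt.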

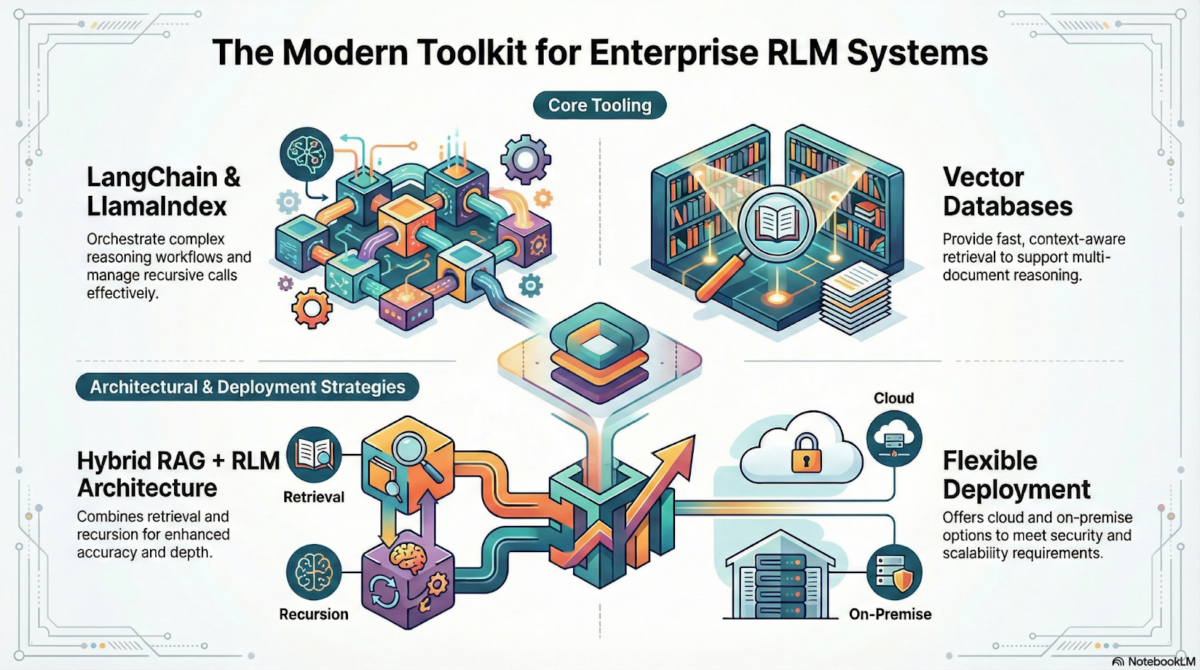

2. Robust RAG Architecture

Effective RAG systems include:

- Hybrid retrieval (vector + keyword search)

- Context filtering and reranking

- Source relevance scoring

This ensures the model responds based on facts, not assumptions.
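
One common way to combine vector and keyword results is reciprocal rank fusion (RRF). The sketch below uses RRF as an example technique, not as the method prescribed by this article. The document IDs, the `k=60` constant, and the `min_score` cutoff are assumptions for illustration, and the two input rankings stand in for whatever a real vector index and keyword index would return.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one scored ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for stable output.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


def select_context(fused, min_score, top_n):
    """Context filtering: drop weakly supported documents, keep at most top_n."""
    return [doc_id for doc_id, score in fused if score >= min_score][:top_n]


# Hypothetical results from a vector index and a keyword index.
vector_hits = ["doc_a", "doc_b", "doc_c"]
keyword_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
context = select_context(fused, min_score=0.02, top_n=2)
print(context)
```

Documents found by only one retriever score lower and fall below the cutoff here, which is one simple form of source relevance scoring. A production system would typically add a dedicated reranking model on top.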

3. Output Verification and Confidence Scoring

AI systems should evaluate their own responses.

- Confidence thresholds

- Contradiction checks

- Factual validation layers

Low-confidence outputs can be flagged or routed for human review.
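
The routing rule described above can be sketched as follows. This is a minimal example under stated assumptions: the confidence score is taken to come from some upstream verifier, the 0.8 threshold is arbitrary, and the `CheckedOutput` type and `route` function are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class CheckedOutput:
    answer: str
    confidence: float                                   # assumed upstream verifier score, 0.0 to 1.0
    cited_sources: list = field(default_factory=list)   # source IDs the answer relies on


def route(output, retrieved_source_ids, threshold=0.8):
    """Return 'auto_release' only when every check passes, otherwise 'human_review'."""
    if output.confidence < threshold:
        return "human_review"            # confidence threshold
    if not output.cited_sources:
        return "human_review"            # nothing to validate the answer against
    if any(src not in retrieved_source_ids for src in output.cited_sources):
        return "human_review"            # cites a source that was never retrieved
    return "auto_release"


retrieved = {"policy-v2", "faq-12"}
print(route(CheckedOutput("Refunds take 30 days.", 0.93, ["policy-v2"]), retrieved))
print(route(CheckedOutput("Refunds take 5 days.", 0.55, ["policy-v2"]), retrieved))
```

The checks are deliberately conservative: any single failure sends the output to a reviewer rather than to the customer.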

4. Human-in-the-Loop Systems

For high-risk use cases, human oversight is essential.

- Approval workflows

- Escalation mechanisms

- Feedback loops to improve models

This balance between automation and control is critical.
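
A minimal sketch of an approval workflow with a feedback record might look like this. The set of high-risk use cases, the `ApprovalQueue` class, and its method names are hypothetical. A real deployment would persist the queue and integrate with a ticketing or review tool.

```python
from dataclasses import dataclass, field

# Hypothetical risk policy: these use cases always require human approval.
HIGH_RISK_USE_CASES = {"finance", "legal", "healthcare"}


@dataclass
class ApprovalQueue:
    pending: list = field(default_factory=list)
    feedback: list = field(default_factory=list)

    def submit(self, use_case, action):
        """Hold high-risk actions for review; let low-risk ones proceed."""
        if use_case in HIGH_RISK_USE_CASES:
            self.pending.append((use_case, action))
            return "pending_approval"
        return "executed"

    def review(self, approved, note=""):
        """Record the reviewer's decision on the oldest pending action."""
        use_case, action = self.pending.pop(0)
        self.feedback.append(
            {"use_case": use_case, "action": action, "approved": approved, "note": note}
        )
        return "executed" if approved else "rejected"


queue = ApprovalQueue()
print(queue.submit("customer_support", "send FAQ answer"))
print(queue.submit("finance", "approve refund of 4,000 USD"))
print(queue.review(approved=False, note="amount exceeds policy limit"))
```

The `feedback` list is the raw material for the feedback loop: rejected actions and reviewer notes can be used later to adjust retrieval, prompts, or thresholds.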

Measuring and Monitoring Hallucination Risk in Production

The most significant gap in enterprise AI adoption may be a lack of insight into how AI systems behave once deployed in production. Most organizations are applying LLMs without knowing whether the results are safe.

Organizations must be able to answer key questions like:

- How often does the system hallucinate in real business situations?

- Which use cases carry the highest risk, such as finance, legal, or customer flows?

- How does accuracy change over time as new data emerges?

To answer these questions, organizations need to track the following key performance indicators:

- Hallucination rate by use case

- Source citation accuracy

- Confidence versus correctness scores

- Human override frequency

With continuous monitoring and feedback loops, hallucinations become measurable — and more importantly, manageable at enterprise scale.
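
The indicators listed above can be computed from a log of human-reviewed outputs. The sketch below assumes such a log exists and that each entry carries the fields shown. The `ReviewedOutput` record and both function names are hypothetical, and the sample data is made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ReviewedOutput:
    use_case: str
    confidence: float   # score the system assigned to its own answer, 0.0 to 1.0
    correct: bool       # reviewer verdict on factual correctness
    citation_ok: bool   # the cited source actually supports the answer
    overridden: bool    # a human replaced or rejected the answer


def hallucination_rate_by_use_case(records):
    """Share of reviewed outputs judged incorrect, per use case."""
    totals, wrong = defaultdict(int), defaultdict(int)
    for r in records:
        totals[r.use_case] += 1
        if not r.correct:
            wrong[r.use_case] += 1
    return {use_case: wrong[use_case] / totals[use_case] for use_case in totals}


def overall_kpis(records):
    if not records:
        raise ValueError("need at least one reviewed output")
    n = len(records)
    accuracy = sum(r.correct for r in records) / n
    mean_confidence = sum(r.confidence for r in records) / n
    return {
        "citation_accuracy": sum(r.citation_ok for r in records) / n,
        "human_override_frequency": sum(r.overridden for r in records) / n,
        # A positive gap means the system is more confident than it is correct.
        "confidence_minus_correctness": mean_confidence - accuracy,
    }


log = [
    ReviewedOutput("finance", 0.9, False, False, True),
    ReviewedOutput("finance", 0.9, True, True, False),
    ReviewedOutput("support", 0.9, True, True, False),
    ReviewedOutput("support", 0.9, True, True, False),
]
print(hallucination_rate_by_use_case(log))
print(overall_kpis(log))
```

Tracking these numbers per release or per week makes it possible to see whether a change to data, retrieval, or prompts actually moved the hallucination rate.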

Industry-Specific Hallucination Risks

AI hallucinations do not affect all industries in the same way. The level of risk depends on how AI outputs are used and the real-world consequences of incorrect information. This makes industry-specific governance essential.

- Healthcare

A patient’s safety can be directly affected by incorrect recommendations, summaries, and insights from an AI system. Even minor errors may lead to incorrect decisions, which makes validation and source checking mandatory.

- Finance

Hallucinations may affect financial forecasting, compliance reporting, and risk evaluation. Incorrect results may lead to regulatory violations, economic losses, and loss of market confidence.

- Legal

Fabricated legal citations or incorrect interpretations of laws can expose organizations to serious legal and compliance risks, especially when AI-generated content is used in documentation or advisory roles.

- Customer Support

Ineffective or inconsistent AI responses increase customer uncertainty and distrust, creating additional challenges for support staff.

Because of these differences, enterprise AI governance must be tailored to each industry and application.

How Dextralabs Helps Businesses Trust AI Models

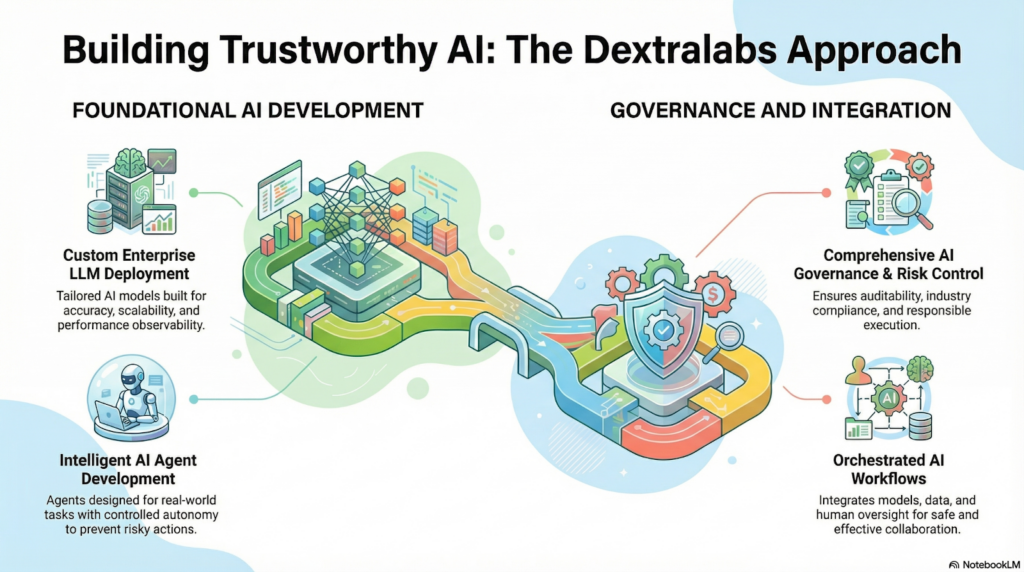

Dextralabs collaborates with organizations to move beyond AI research and development and build production-grade, reliable, and responsible AI. The work is not limited to developing highly capable models; it also covers making them operate dependably and safely.

Enterprise LLM Deployment

Dextralabs designs and deploys custom LLM architectures tailored to enterprise needs, with a strong emphasis on:

- Accuracy, ensuring outputs are grounded in trusted data

- Scalability, so systems perform reliably as usage grows

- Observability, providing visibility into model behavior and performance

AI Agent Development Services

Dextralabs builds intelligent AI agents designed for real-world execution, not experimentation. These agents include:

- Task-specific reasoning aligned to defined business goals

- Feedback loops to continuously improve accuracy

- Controlled autonomy to prevent unchecked or risky actions

As a result, AI agents are designed to act responsibly — not hallucinate.

AI Governance and Risk Control

From governance frameworks to technical safeguards, Dextralabs ensures:

- Auditability for enterprise oversight

- Compliance alignment across industries

- Responsible AI execution at scale

AI Orchestration Services

Rather than relying on isolated tools, Dextralabs designs orchestrated AI workflows where models, data, humans, and systems work together safely and effectively.

Why Control-First AI Is the Long-Term Winner

Enterprises that achieve long-term success with AI do not focus on adopting the newest or most popular models. Instead, they prioritize control, reliability, and accountability from the start. This approach ensures AI systems deliver consistent value rather than unpredictable outcomes.

Successful organizations focus on:

- Reliability over novelty, choosing stable and well-governed systems instead of experimental technology

- Governance over shortcuts, embedding rules, validation, and oversight into every AI workflow

- Outcomes over demos, measuring real business value instead of one-off performance

Treating AI hallucinations as a systemic risk rather than as isolated mistakes brings significant benefits:

- Predictable AI behavior to enable informed decision-making

- Reduced operational risk in automated business flows

- Increased customer, partner, and governmental trust

A control-first strategy therefore turns the potential risk of AI into lasting value that makes enterprises more competitive over the long term.

Conclusion

LLM hallucinations are not a temporary issue. They are a permanent challenge that comes with probabilistic AI systems. Enterprises that ignore this risk pay for it later — in cost, trust, and compliance failures. But organizations that get it right take a different route. They design AI systems with control, accountability, and governance at the core.

At Dextralabs, we enable enterprises to shift from experimental AI to trusted, production-grade AI systems that unlock real business value without taking on unnecessary risk. If your organization is deploying AI agents, decision automation, or enterprise LLM systems, now is the time to address hallucination risk properly.

Partner with Dextralabs to architect, deploy, and govern accurate, auditable AI systems engineered to succeed over the long term.

FAQs:

What are LLM hallucinations?

They occur when AI generates incorrect information with high confidence.

Can hallucinations be fully eliminated?

No, but they can be significantly reduced and controlled with the right architecture.

Is RAG enough to prevent hallucinations?

RAG helps, but only when designed and monitored properly.

Which industries face the highest risk?

Healthcare, finance, legal, and enterprise decision automation are especially vulnerable.