AI agents have moved rapidly from experimentation into widespread business use. According to a Gartner forecast, over 40% of agentic AI projects may be canceled by the end of 2027 due to unclear business value, high costs, or governance and risk issues. At the same time, Gartner predicts that 40% of enterprise applications will feature task‑specific AI agents by 2026, up from less than 5% in 2025, showing a clear trend toward embedding intelligent agents in core systems.

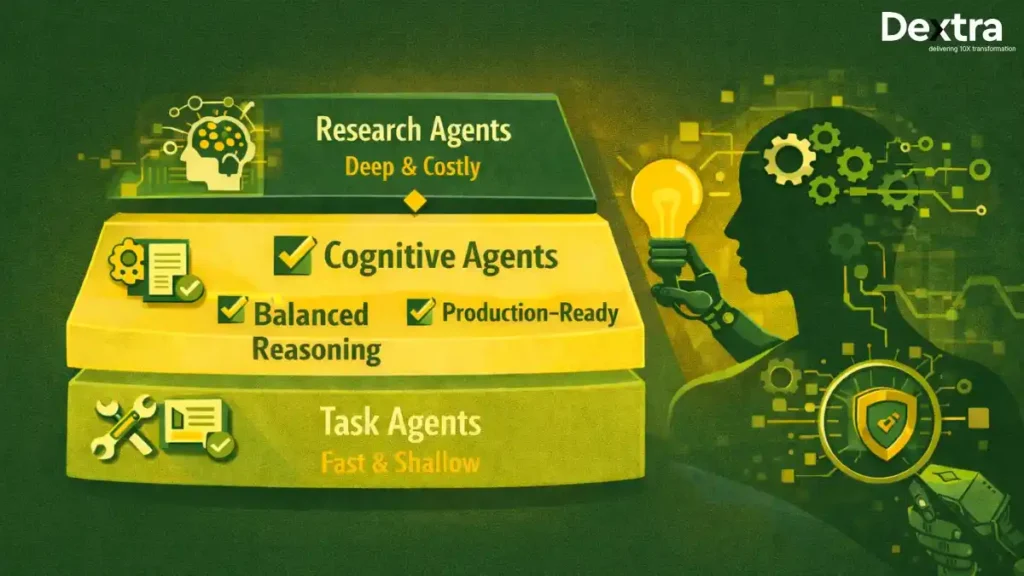

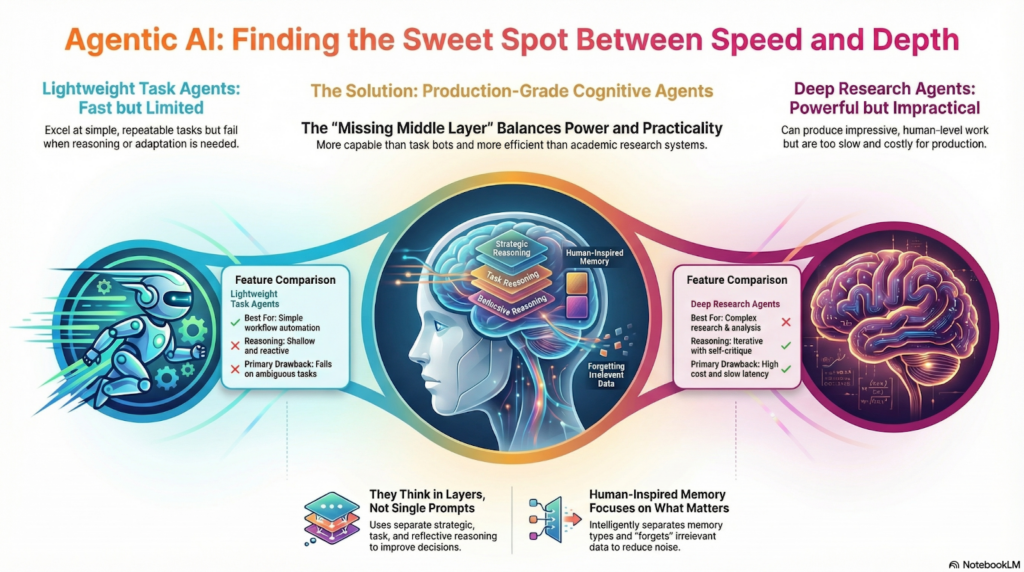

This data highlights a divide: task‑based agents are being adopted rapidly for routine work, but many initiatives fail to scale or deliver value on complex problems. Advanced “human‑level” research agents promise deeper reasoning but remain costly, slow, and difficult to deploy in production.

This creates a clear need for a practical, production‑ready layer of cognitive agentic systems, neither overly simplistic nor overly complex. This is where Dextralabs excels, by building scalable, enterprise‑ready agentic AI solutions that bridge basic automation and research‑focused intelligence.

The Two Extremes of Today’s Agentic AI Landscape

1. Lightweight Task-Oriented Agents: Fast but Limited

Lightweight AI agents are widely used today. These systems are typically built around tools, workflows, and retrieval-augmented generation (RAG). They are designed to perform clear, repeatable actions with speed and efficiency.

What these agents do well

- Call external tools and APIs

- Retrieve information using memory and RAG

- Automate workflows like ticket routing, content generation, or data extraction

For many short tasks, these agents deliver immediate value. They are easy to deploy, cost-effective, and well-suited for operational automation.

Where they break down

- Reasoning remains shallow and reactive

- There is no long-term planning or strategy

- Agents rarely critique their own outputs

- They struggle with multi-step decision-making

As soon as a task requires judgment, prioritization, or adaptation, these agents often fail or produce unreliable results.

2. Deep Research Agentic Systems: Powerful but Impractical

At the other end of the spectrum are deep research agentic systems inspired by human reasoning. These systems aim to replicate how people research, write, and refine complex work.

What human-level research agents do well

- Start with rough drafts and refine them iteratively

- Use supervisor and worker agents to divide tasks

- Critique their own reasoning and fill knowledge gaps

These systems can generate impressive research outputs. However, they come with serious trade-offs.

Key limitations

- High computational and financial cost

- Increased latency due to multiple reasoning loops

- Complex engineering and orchestration

- Difficult to scale across everyday business tasks

For most organizations, these systems are too heavy for production use.

The Missing Layer: Production-Grade Cognitive Agents

Between these two extremes lies the real opportunity.

Most agentic systems today lack:

- A clear reasoning hierarchy

- Adaptive memory strategies

- Separation between planning and execution

- Cost-aware intelligence

Dextralabs addresses this gap with cognitive-grade agentic systems—agents designed to think deeper than workflow bots while remaining practical enough for real-world deployment.

These systems are:

- More capable than task-based agents

- More efficient than academic research systems

- Built for scalability, reliability, and business impact

How Cognitive Agentic Systems Actually Think

1. Reasoning Layers Instead of Single Prompts

Traditional agents rely on a single prompt-response cycle. Cognitive agents operate across layered reasoning.

- Task reasoning handles immediate actions

- Strategic reasoning evaluates goals and priorities

- Reflective reasoning reviews outputs and detects gaps

This layered structure mirrors how people think and reduces errors caused by shallow reasoning.

2. Planning Before Acting

Humans plan before acting, especially when tasks are complex. Cognitive agentic systems follow the same principle.

Responsibilities are divided clearly:

- Planner agents decide what needs to be done

- Executor agents perform specific actions

- Validator agents review results for quality and accuracy

This separation improves reliability and reduces unnecessary retries.
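The planner, executor, and validator split described above can be sketched in a few lines. This is a minimal illustration, not Dextralabs' implementation: the function names (`plan`, `execute`, `validate`, `run_pipeline`), the stubbed step logic, and the `max_retries` parameter are all hypothetical, and a real system would replace the stubs with model and tool calls.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepResult:
    step: str
    output: str
    valid: bool


def plan(goal: str) -> List[str]:
    """Planner: decide what needs to be done (stubbed as a fixed decomposition)."""
    return [f"gather data for {goal}", f"summarize findings for {goal}"]


def execute(step: str) -> str:
    """Executor: perform one specific action (stub; a real one would call tools)."""
    return f"done: {step}"


def validate(step: str, output: str) -> bool:
    """Validator: accept the output only if it matches the expected shape."""
    return output == f"done: {step}"


def run_pipeline(goal, planner=plan, executor=execute, validator=validate,
                 max_retries=1) -> List[StepResult]:
    """Run each planned step, retrying a bounded number of times on failure."""
    results = []
    for step in planner(goal):
        attempts = 0
        output = executor(step)
        # Retry only while validation fails and the retry budget allows it.
        while not validator(step, output) and attempts < max_retries:
            attempts += 1
            output = executor(step)
        results.append(StepResult(step, output, validator(step, output)))
    return results


if __name__ == "__main__":
    for result in run_pipeline("Q3 churn"):
        print(result)
```

Because each role is a plain function passed in as an argument, any one of them can be swapped or tested on its own, which is the practical benefit of keeping planning, execution, and validation separate.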

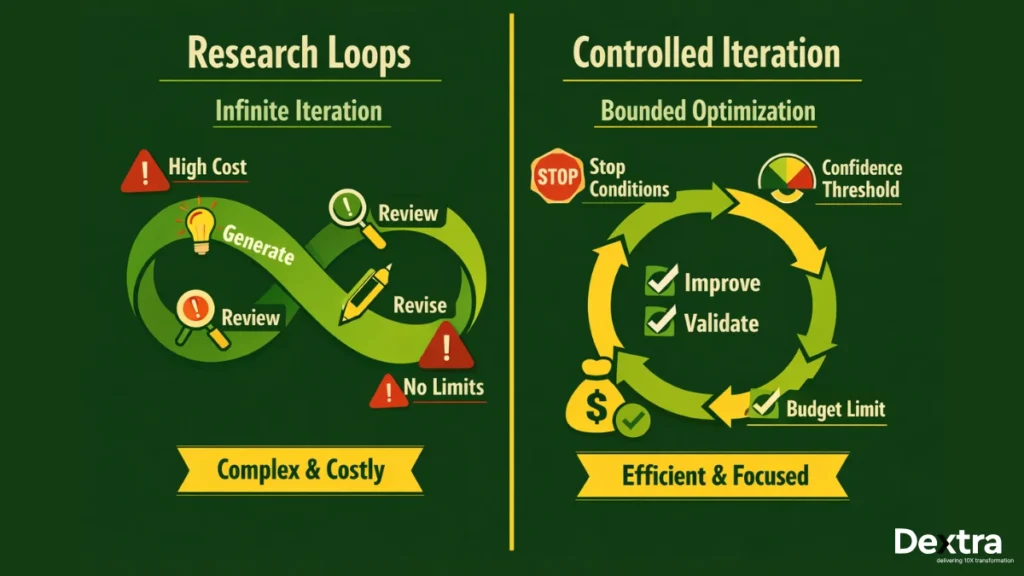

3. Iteration Without Infinite Loops

Self-improvement is valuable, but unchecked iteration leads to wasted resources.

Cognitive agents use:

- Confidence thresholds

- Stop conditions

- Controlled refinement cycles

This ensures agents improve results without running endlessly or increasing costs.
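As a rough sketch of how a confidence threshold, stop conditions, and a capped number of refinement cycles fit together, consider the loop below. The function name `refine_until_confident`, its parameters, and the default values are assumptions chosen for illustration; `critique` and `improve` stand in for whatever scoring and rewriting steps a real agent would use.

```python
from typing import Callable, Tuple


def refine_until_confident(
    draft: str,
    critique: Callable[[str], float],   # returns a confidence score in [0, 1]
    improve: Callable[[str], str],      # returns a revised draft
    confidence_threshold: float = 0.8,
    max_cycles: int = 3,
    min_gain: float = 0.01,
) -> Tuple[str, float, str]:
    """Refine a draft until it is good enough, stalls, or the cycle cap is hit."""
    score = critique(draft)
    for _ in range(max_cycles):
        # Stop condition 1: the draft already meets the confidence threshold.
        if score >= confidence_threshold:
            return draft, score, "confident"
        candidate = improve(draft)
        new_score = critique(candidate)
        # Stop condition 2: the revision did not help enough to justify more cost.
        if new_score - score < min_gain:
            return draft, score, "stalled"
        draft, score = candidate, new_score
    # Stop condition 3: the cycle budget is used up.
    reason = "confident" if score >= confidence_threshold else "budget_exhausted"
    return draft, score, reason


if __name__ == "__main__":
    # Toy scorer and improver: longer drafts score higher.
    toy_critique = lambda text: min(1.0, len(text) / 10)
    toy_improve = lambda text: text + "xx"
    print(refine_until_confident("abcd", toy_critique, toy_improve))
```

Returning the stop reason alongside the result makes it easy to log how often the agent finishes confidently versus running out of budget, which is useful when tuning the threshold.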

Memory Beyond RAG: What Most Agents Get Wrong

Short-Term, Long-Term, and Episodic Memory

Many systems store everything in vector databases, assuming more memory means better performance. In reality, this often increases noise and confusion.

Human-inspired memory design separates:

- Short-term working context

- Long-term factual knowledge

- Episodic memory from past tasks

This structure allows agents to retrieve the right information at the right time.
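One way to picture the three-way separation is a small container with a distinct store for each kind of memory. The class below is a hypothetical sketch: `AgentMemory`, its method names, and the working-memory size of five are illustrative assumptions, and a production system would likely back the long-term and episodic stores with a database rather than in-process structures.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List


@dataclass
class AgentMemory:
    # Short-term working context: bounded, oldest entries drop off automatically.
    working: Deque[str] = field(default_factory=lambda: deque(maxlen=5))
    # Long-term factual knowledge: keyed facts that persist across tasks.
    facts: Dict[str, str] = field(default_factory=dict)
    # Episodic memory: records of past tasks and how they turned out.
    episodes: List[Dict[str, str]] = field(default_factory=list)

    def observe(self, message: str) -> None:
        self.working.append(message)

    def learn_fact(self, key: str, value: str) -> None:
        self.facts[key] = value

    def record_episode(self, task: str, outcome: str) -> None:
        self.episodes.append({"task": task, "outcome": outcome})

    def recall(self, task: str, fact_keys: List[str]) -> Dict[str, object]:
        """Assemble only what the current task needs from each store."""
        return {
            "working": list(self.working),
            "facts": {k: self.facts[k] for k in fact_keys if k in self.facts},
            "episodes": [e for e in self.episodes if e["task"] == task],
        }


if __name__ == "__main__":
    memory = AgentMemory()
    for i in range(7):
        memory.observe(f"message {i}")
    memory.learn_fact("fiscal_year_start", "April")
    memory.record_episode("quarterly report", "approved after one revision")
    print(memory.recall("quarterly report", ["fiscal_year_start"]))
```

The point of `recall` is that retrieval is scoped: the agent asks each store for what the task requires instead of searching one undifferentiated pool.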

Context Compression and Forgetting

Forgetting is not a weakness. It is a strength.

Selective memory helps agents:

- Focus on relevant details

- Avoid outdated or conflicting data

- Improve response accuracy

Cognitive agents compress context intelligently instead of accumulating unnecessary information.
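A simple way to illustrate selective forgetting is to score each stored item by relevance with a recency decay, then keep only what fits a fixed budget. Everything here is an assumption made for the sketch: the `MemoryItem` fields, the exponential half-life decay, and the use of whitespace word counts as a stand-in for token counts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryItem:
    text: str
    relevance: float  # 0..1, assumed to come from a retriever or scorer
    age_steps: int    # how many agent steps ago the item was stored


def compress_context(items: List[MemoryItem], token_budget: int,
                     half_life: float = 10.0) -> List[MemoryItem]:
    """Keep the highest-value items that fit within the budget."""

    def score(item: MemoryItem) -> float:
        # Relevance halves every `half_life` steps, so stale items fade out.
        return item.relevance * 0.5 ** (item.age_steps / half_life)

    kept, used = [], 0
    for item in sorted(items, key=score, reverse=True):
        cost = len(item.text.split())  # crude proxy for token count
        if used + cost <= token_budget:
            kept.append(item)
            used += cost
    return kept


if __name__ == "__main__":
    items = [
        MemoryItem("pricing changed last week", relevance=0.9, age_steps=1),
        MemoryItem("old pricing table from 2021", relevance=0.9, age_steps=40),
        MemoryItem("office lunch menu", relevance=0.1, age_steps=0),
    ]
    for item in compress_context(items, token_budget=5):
        print(item.text)
```

In this toy run the outdated pricing table loses to the recent pricing note even though both have the same raw relevance, which is the behavior the "avoid outdated or conflicting data" point calls for.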

Learning Across Tasks

Most agents reset after each conversation. Cognitive systems learn across tasks by storing outcomes, patterns, and feedback. Over time, this improves consistency and performance across similar use cases.
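To make "storing outcomes, patterns, and feedback" concrete, here is a minimal sketch of an outcome log that remembers which approach worked for which kind of task. The class `OutcomeLog`, the notion of a named "strategy", and the success-rate rule are hypothetical choices for illustration only.

```python
from collections import defaultdict


class OutcomeLog:
    """Tracks success counts per task type and strategy across tasks."""

    def __init__(self):
        # task_type -> strategy -> [successes, attempts]
        self._stats = defaultdict(lambda: defaultdict(lambda: [0, 0]))

    def record(self, task_type: str, strategy: str, success: bool) -> None:
        entry = self._stats[task_type][strategy]
        entry[0] += int(success)
        entry[1] += 1

    def best_strategy(self, task_type: str, default: str) -> str:
        """Return the strategy with the highest success rate, or the default."""
        strategies = self._stats.get(task_type)
        if not strategies:
            return default
        return max(strategies, key=lambda s: strategies[s][0] / strategies[s][1])


if __name__ == "__main__":
    log = OutcomeLog()
    log.record("summarize", "outline_first", True)
    log.record("summarize", "outline_first", True)
    log.record("summarize", "single_pass", False)
    log.record("summarize", "single_pass", True)
    print(log.best_strategy("summarize", default="single_pass"))
```

A real system would also need to handle small sample sizes and changing conditions, but even this simple record shows how feedback from earlier tasks can shape later ones.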

Research-Grade Agents Without Research-Grade Complexity

Borrowing the Right Ideas from Test-Time Diffusion

Advanced research systems introduce valuable concepts:

- Draft-first reasoning

- Iterative refinement

- Self-critique loops

Dextralabs incorporates these ideas in a simplified, controlled form suitable for production.

What to Avoid from Academic Systems

Some research practices do not translate well to real-world environments:

- Excessive parallel agent spawning

- Unbounded reasoning steps

- Over-engineered orchestration

These approaches increase cost and reduce predictability.

Dextralabs’ Balanced Architecture Approach

Dextralabs designs modular agentic systems with:

- Budget-aware reasoning

- Progressive depth based on task complexity

- Scalable orchestration

Not every task needs deep research. Cognitive agents adapt depth dynamically.
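The idea of adapting depth to task complexity under a budget can be sketched as a small routing function. The profile names, step counts, complexity cutoffs (0.3 and 0.7), and cost units below are invented for the example and are not taken from any Dextralabs system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DepthProfile:
    name: str
    max_reasoning_steps: int
    cost_per_step: float

    @property
    def max_cost(self) -> float:
        return self.max_reasoning_steps * self.cost_per_step


# Ordered from cheapest to most expensive.
PROFILES = [
    DepthProfile("shallow", max_reasoning_steps=1, cost_per_step=1.0),
    DepthProfile("standard", max_reasoning_steps=3, cost_per_step=1.0),
    DepthProfile("deep", max_reasoning_steps=8, cost_per_step=1.0),
]


def choose_depth(complexity: float, budget: float) -> DepthProfile:
    """Pick the depth the task calls for, stepping down if the budget is tight."""
    if complexity < 0.3:
        wanted = 0
    elif complexity < 0.7:
        wanted = 1
    else:
        wanted = 2
    # Progressive fallback: try the wanted depth, then cheaper ones.
    for index in range(wanted, -1, -1):
        profile = PROFILES[index]
        if profile.max_cost <= budget:
            return profile
    raise ValueError("budget too small for even the shallowest profile")


if __name__ == "__main__":
    print(choose_depth(complexity=0.9, budget=10).name)
    print(choose_depth(complexity=0.9, budget=5).name)
```

The second call shows the budget-aware part: a task that would normally get the deep profile is downgraded to the standard one because the deep profile's worst-case cost exceeds the budget.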

Real-World Use Cases Where This Middle Layer Wins:

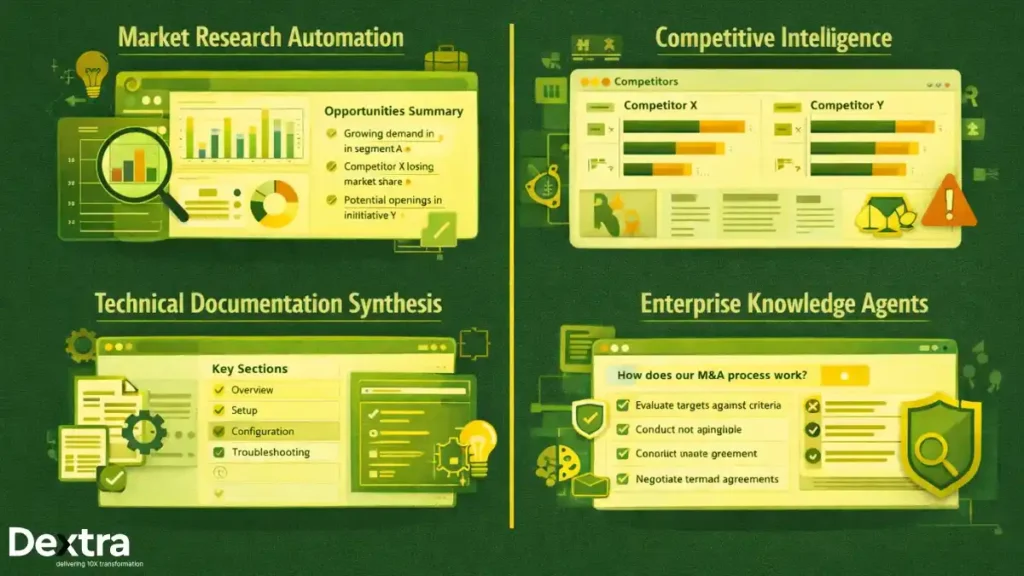

The middle layer of agentic AI, often referred to as cognitive or hybrid agents, combines structured execution with controlled reasoning, making it ideal for real-world applications:

- Market Research Automation: Simple agents often miss deeper trends, while deep research agents slow down delivery. Cognitive agents balance speed and reasoning to surface actionable insights efficiently.

- Competitive Intelligence: Task agents collect data but fail to interpret it. Cognitive agents analyze patterns, compare sources, and highlight strategic risks.

- Technical Documentation Synthesis: Workflow agents struggle with accuracy, and research agents over-engineer the process. Cognitive systems generate clear, structured documentation at scale.

- Legal or Policy Research (Non-Diagnostic): Cognitive agents summarize and organize information responsibly without replacing human judgment.

- Enterprise Knowledge Agents: Instead of static search tools, cognitive agents understand intent, context, and relevance across organizational knowledge.

How Dextralabs Builds Scalable Agentic Systems

Building scalable agentic AI systems requires more than intelligence; it demands engineering discipline, structured workflows, and governance. Dextralabs approaches this challenge by implementing a set of core architectural pillars that ensure agents remain effective, reliable, and safe, even as they operate at scale. Each layer is designed to address a specific operational need while maintaining overall system integrity.

- Orchestration Layer: Manages agent roles, task sequencing, and lifecycle control to ensure predictable behavior and smooth operation across multiple agents.

- Reasoning Control: Guides agent planning, decision-making, and validation, balancing simple and deep reasoning for efficient yet intelligent actions.

- Memory Governance: Maintains relevant context and filters outdated or low-value information, ensuring continuity and accurate decision-making.

- Secure Tool Execution: Applies safeguards, permissions, and access controls to prevent risky actions and protect organizational data.

- Evaluation & Feedback Loops: Continuously monitors performance, quality, and reliability, enabling iterative improvements and trustworthy operation.
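Of these pillars, secure tool execution is the easiest to show in code. The sketch below gates every tool call behind an explicit permission check. The `ToolRegistry` class, the permission strings, and the example tools are hypothetical and only illustrate the pattern of checking access before running anything.

```python
from typing import Callable, Dict, Set, Tuple


class ToolPermissionError(Exception):
    """Raised when a tool is unknown or the caller lacks the required permission."""


class ToolRegistry:
    def __init__(self) -> None:
        # tool name -> (function, permission required to call it)
        self._tools: Dict[str, Tuple[Callable, str]] = {}

    def register(self, name: str, func: Callable, required_permission: str) -> None:
        self._tools[name] = (func, required_permission)

    def call(self, name: str, granted_permissions: Set[str], *args, **kwargs):
        """Run a tool only if it is registered and the caller is allowed to use it."""
        if name not in self._tools:
            raise ToolPermissionError(f"unknown tool: {name}")
        func, required = self._tools[name]
        if required not in granted_permissions:
            raise ToolPermissionError(f"{name} requires permission '{required}'")
        return func(*args, **kwargs)


if __name__ == "__main__":
    registry = ToolRegistry()
    registry.register("read_ticket", lambda ticket_id: f"ticket {ticket_id}", "tickets:read")
    registry.register("delete_ticket", lambda ticket_id: f"deleted {ticket_id}", "tickets:admin")

    agent_permissions = {"tickets:read"}
    print(registry.call("read_ticket", agent_permissions, 42))
    try:
        registry.call("delete_ticket", agent_permissions, 42)
    except ToolPermissionError as error:
        print("blocked:", error)
```

Denying by default (unknown tools and missing permissions both raise) keeps a misbehaving agent from reaching actions it was never granted.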

Key Takeaways for Businesses Building AI Agents in 2026:

As organizations adopt agentic AI, it is important to recognize that not every task requires a deep research agent. Simple, execution-focused agents can handle routine tasks efficiently, while complex, high-stakes decisions demand agents capable of deeper reasoning.

Intelligence should scale with task complexity, adapting reasoning depth and decision-making power based on the problem. Adaptive agent depth allows businesses to deploy AI that is both cost-effective and insightful. Companies that implement this balance can maximize value while minimizing risk and inefficiency, gaining a clear competitive advantage.

Key Points:

- Not every task needs a deep research agent; simple agents are sufficient for routine work.

- Complex decisions require agents with deeper reasoning capabilities.

- Intelligence should scale with task complexity for efficiency and accuracy.

- Adaptive agent depth enables cost-effective and insightful AI deployment.

- Balancing depth and efficiency provides a competitive advantage.

Why Dextralabs Is Positioned for the Next Phase of Agentic AI

Dextralabs stands out in the rapidly evolving world of agentic AI by combining practical engineering, research-informed reasoning, and production-ready scalability. Unlike many AI solutions that focus on demos or experimental prototypes, Dextralabs builds systems designed for real-world business impact.

Practical engineering ensures that agents operate reliably under real-world constraints, with predictable behavior, robust error handling, and safe execution. Research-informed reasoning allows agents to leverage advanced cognitive approaches, such as multi-step planning and iterative analysis, without compromising efficiency or control. Production-ready scalability guarantees that these systems can grow with organizational needs, handling larger datasets, more users, and increasingly complex tasks without performance degradation.

By integrating these three pillars, Dextralabs delivers forward-looking, grounded, and outcome-driven agentic AI solutions. Organizations deploying these systems can confidently leverage AI to improve decision-making, automate workflows, and gain a sustainable competitive advantage.

Wrapping Up

Agentic AI is evolving quickly, but success depends on balance. Organizations need systems that think deeper than simple automation while remaining practical, scalable, and cost-aware.

Dextralabs focuses on this missing middle layer, cognitive agentic systems built for real-world complexity. Explore Dextralabs’ enterprise AI solutions, or connect with the team to design agentic systems tailored to your goals.

FAQs:

Q. How is this different from RAG?

RAG retrieves information, while cognitive agentic systems reason, plan, and adapt beyond simple retrieval.

Q. Are deep research agents always better?

No. They are powerful but often unnecessary for routine or low-complexity tasks.

Q. How do agentic systems reduce hallucinations?

Layered reasoning, validation agents, and controlled memory usage minimize errors and maintain accuracy.

Q. Can these agents scale for large enterprises?

Yes. Dextralabs designs production-ready systems that scale efficiently across users, datasets, and workflows.

Q. How do these agents balance speed and intelligence?

Adaptive reasoning depth ensures agents act quickly for simple tasks and deeply for complex problems.