Imagine a future where your business runs more smoothly, your interactions with customers are easier, and you can make decisions more quickly than before. This isn’t a far-off dream; it’s what large language models (LLMs) are making available to businesses right now.

LLM integration is changing the way businesses function by automating tasks and improving customer experiences. Gartner says that 72% of businesses will invest in generative AI by 2026. But despite the excitement, scaling AI across a business is not easy.

According to McKinsey, only 18% of generative AI initiatives successfully reach production. It’s easy to see why: using LLMs in a business is very different from standard machine learning (ML) work. Without the right plan, businesses can waste money, run into regulatory problems, and give users bad experiences.

We at Dextralabs help businesses in the US, UAE, and Singapore deal with these problems. We make sure that your enterprise LLM implementation is smooth, scalable, and useful, whether you’re making a chatbot, automating tasks, or adding AI to your product.

What are Common Pitfalls in LLM Deployment and How to Avoid Them?

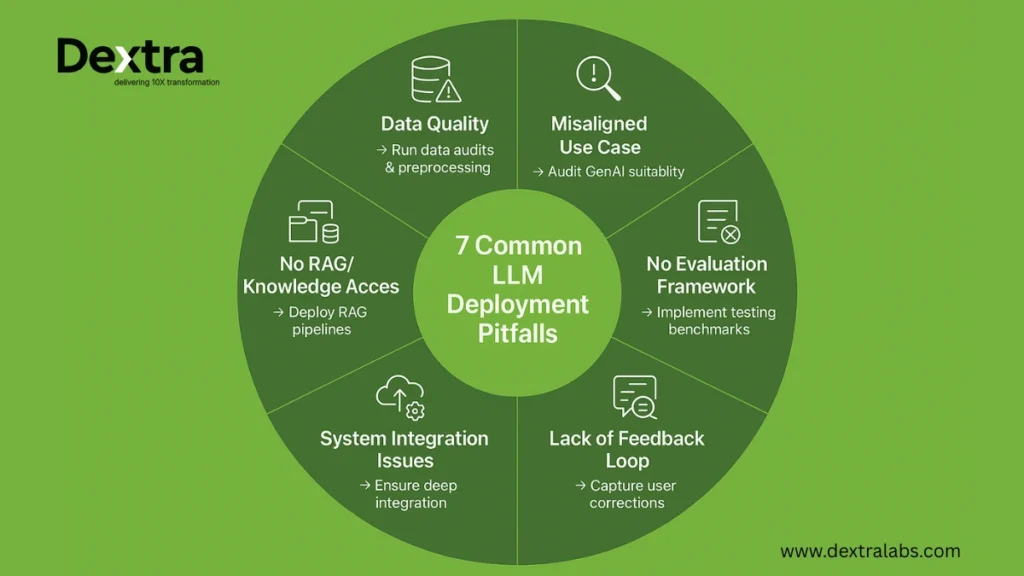

Although a growing number of businesses use LLMs, many fail to reap the benefits because of avoidable errors. Below, we examine the most common LLM deployment pitfalls and how Dextralabs can help prevent them.

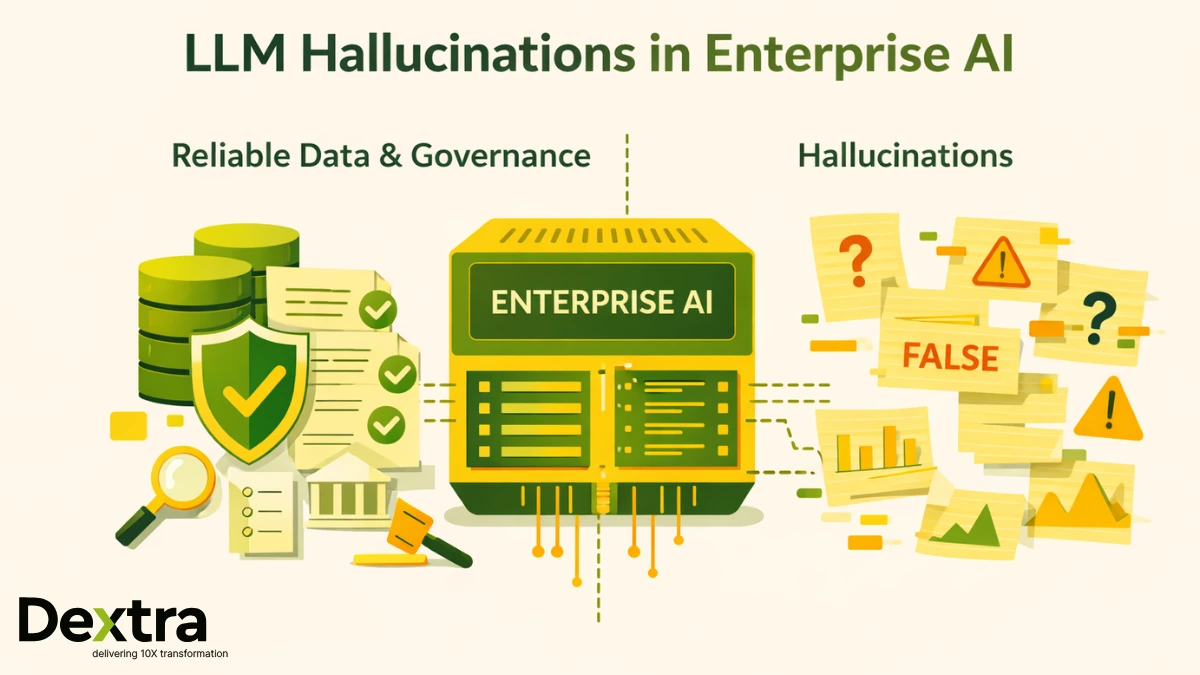

1. Insufficient Data Preparation and Quality Control

High-quality data is the most crucial component of any successful enterprise LLM implementation. An LLM’s performance depends on the data behind it. Sadly, many businesses feed their models outdated, biased, or unstructured data, which makes the results useless or even harmful. According to a report by Accenture, 60% of failed AI deployments cite poor prompt quality or unclear use cases.

For example, poor data in healthcare or finance could lead to wrong predictions or compliance risks. Moreover, unstructured data frequently introduces noise, making it harder for the model to identify meaningful information.

How does Dextralabs fix this? We conduct thorough data audits and preprocessing to make sure that your models are based on high-quality, well-organized, and up-to-date data. Here are some of the things our pipelines do:

- Data Cleaning: Getting rid of duplicates, inconsistencies, and information that isn’t needed.

- Bias Detection: Finding and fixing biases in training datasets.

- Data Structuring: Changing unstructured data into representations that LLMs can work with easily.

By putting data hygiene first, we help businesses avoid making expensive mistakes and make sure their models produce correct results.
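
To make the data cleaning step more concrete, here is a minimal sketch using only the Python standard library. The function name `clean_records` and the sample records are assumptions made for illustration; a production pipeline would add checks specific to your data.

```python
import re
import unicodedata


def clean_records(records):
    """Normalize text records, drop empties, and remove duplicates.

    The order of first appearance is preserved.
    """
    seen = set()
    cleaned = []
    for text in records:
        # Normalize Unicode forms and collapse runs of whitespace.
        normalized = unicodedata.normalize("NFKC", text)
        normalized = re.sub(r"\s+", " ", normalized).strip()
        if not normalized:
            continue  # skip records that are empty after cleaning
        # Compare case-insensitively so trivial variants count as duplicates.
        key = normalized.casefold()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


# Hypothetical sample records.
raw = [
    "Refund policy:  30 days ",
    "refund policy: 30 days",
    "",
    "Shipping takes 3-5 business days",
]
print(clean_records(raw))
# ['Refund policy: 30 days', 'Shipping takes 3-5 business days']
```

Exact-match deduplication like this only catches trivial variants. Near-duplicates and bias checks need heavier tooling and human review.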

2. Misalignment Between Business Use Case and LLM Capabilities

You don’t always need an LLM to solve a business problem. For tasks that need complex human reasoning, long-term memory, or emotional judgment, an LLM is often not the right tool, and traditional machine learning or rule-based systems may be better. Using LLMs where they don’t fit wastes resources, frustrates users, and fails to meet their needs.

For example, if you use an LLM for financial forecasting or legal contract analysis without fine-tuning it, the results may be inaccurate.

Dextralabs Fix:

We help enterprises run a GenAI use case audit to align their business goals with the most effective AI approach. Our process for addressing these LLM deployment challenges includes:

- Finding high-impact, repetitive tasks where LLMs can add measurable value.

- Suggesting other technologies (like classical ML) for tasks that are better suited to them.

- Making sure that LLMs are deployed only where they make sense, which will give the best return on investment.

3. Lack of Robust Testing and Evaluation Frameworks

Many companies deploy LLMs without first putting the necessary testing and evaluation systems in place. This makes it hard to predict how models will behave in the real world, and it is one of the most avoidable LLM deployment mistakes.

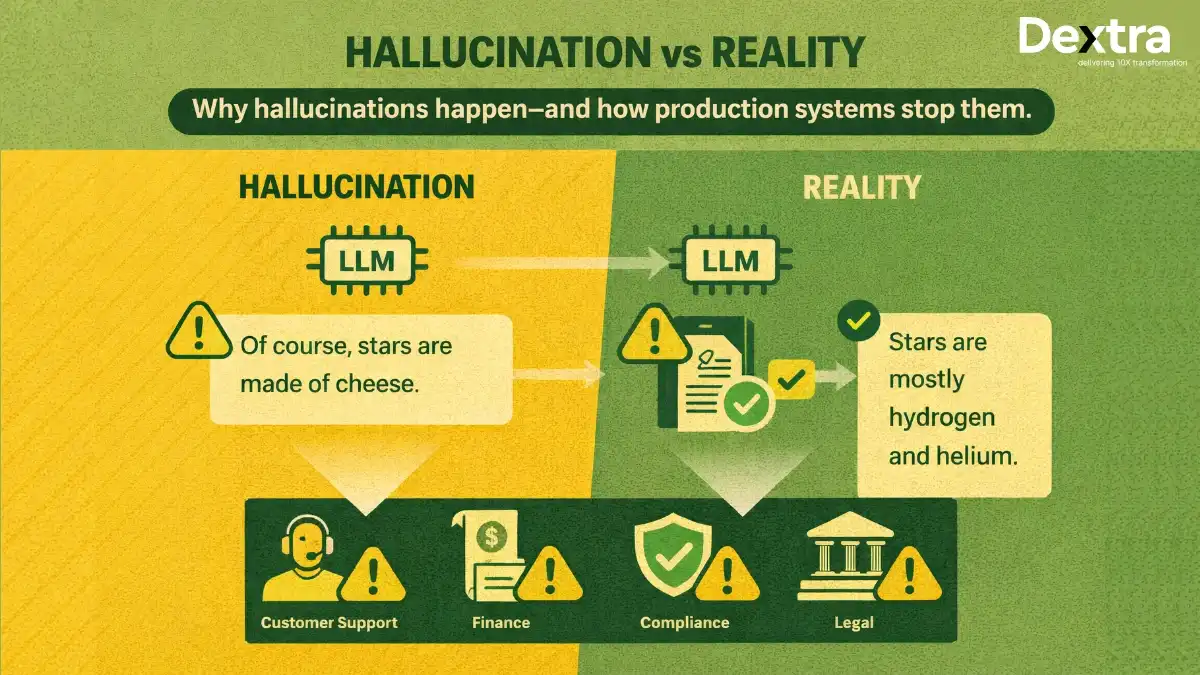

For example, without baseline accuracy criteria or fail-safe mechanisms, an LLM could hallucinate and produce outputs that are inaccurate or misleading.

How does Dextralabs fix this? We use pre-launch evaluation suites to make sure your models are robust and trustworthy. These include:

- Prompt tests: Checking how the model reacts to different types of input.

- Safety audits: Finding and reducing risks related to bias, ethics, and regulatory compliance.

- Hallucination benchmarks: Checking how reliably the model produces factually correct outputs.
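
A prompt test suite of the kind listed above can start very small. The sketch below is an illustration, not our production tooling: `PromptCase`, `run_suite`, and the canned `fake_model` are all hypothetical names, and `fake_model` stands in for a real LLM client so the example runs on its own.

```python
from dataclasses import dataclass, field


@dataclass
class PromptCase:
    prompt: str
    # Substrings the answer is expected to include.
    must_contain: list = field(default_factory=list)
    # Substrings that would indicate a bad answer.
    must_not_contain: list = field(default_factory=list)


def fake_model(prompt):
    """Stand-in for a real LLM call; replace with your own client."""
    canned = {
        "What is the refund window?": "Refunds are accepted within 30 days.",
    }
    return canned.get(prompt, "I don't know based on the provided documents.")


def run_suite(model, cases):
    """Return (pass_rate, failed_prompts) for a list of PromptCase objects."""
    failures = []
    for case in cases:
        answer = model(case.prompt).lower()
        has_required = all(s.lower() in answer for s in case.must_contain)
        has_forbidden = any(s.lower() in answer for s in case.must_not_contain)
        if not has_required or has_forbidden:
            failures.append(case.prompt)
    pass_rate = (len(cases) - len(failures)) / len(cases) if cases else 0.0
    return pass_rate, failures


cases = [
    PromptCase("What is the refund window?", ["30 days"], ["60 days"]),
    # The model should admit ignorance rather than invent a name.
    PromptCase("Who is the CEO?", ["don't know"]),
]
pass_rate, failures = run_suite(fake_model, cases)
print(pass_rate, failures)  # 1.0 []
```

Substring checks are a crude baseline. They catch regressions cheaply, but judging factual accuracy at scale usually needs reference answers or human graders.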

4. Ignoring Feedback Loops and User Data Signals

An LLM deployment should not be static; it needs to improve over time. However, many companies overlook the importance of feedback loops, so their systems stagnate and keep repeating the same errors. After launch, an LLM application won’t improve unless it receives data signals or user corrections.

Dextralabs Fix:

We build closed-loop systems that enable continuous learning and improvement. To tackle these LLM deployment challenges, we focus on:

- Capturing feedback from users to improve prompts and outputs.

- Using human-in-the-loop (HITL) processes for critical tasks.

- Making models work better based on data from real-world use.
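
As a rough illustration of capturing user feedback, the sketch below records thumbs-up or thumbs-down ratings and optional corrections per prompt version, so that two versions of a prompt can be compared. The `FeedbackLog` class and the version labels are assumptions for this example; a real system would persist the data rather than keep it in memory.

```python
from collections import defaultdict


class FeedbackLog:
    """Collects helpful/unhelpful ratings and corrections per prompt version."""

    def __init__(self):
        self._ratings = defaultdict(list)

    def record(self, prompt_version, helpful, correction=None):
        """Store one rating; `correction` is optional free text from the user."""
        self._ratings[prompt_version].append((helpful, correction))

    def helpful_rate(self, prompt_version):
        """Share of ratings marked helpful, or None if there are no ratings."""
        ratings = self._ratings.get(prompt_version, [])
        if not ratings:
            return None
        return sum(1 for helpful, _ in ratings if helpful) / len(ratings)

    def corrections(self, prompt_version):
        """User corrections that can feed the next prompt revision."""
        return [c for _, c in self._ratings.get(prompt_version, []) if c]


log = FeedbackLog()
log.record("v1", True)
log.record("v1", False, "The refund window is 30 days, not 60.")
log.record("v2", True)
log.record("v2", True)

print(log.helpful_rate("v1"))  # 0.5
print(log.helpful_rate("v2"))  # 1.0
print(log.corrections("v1"))
```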

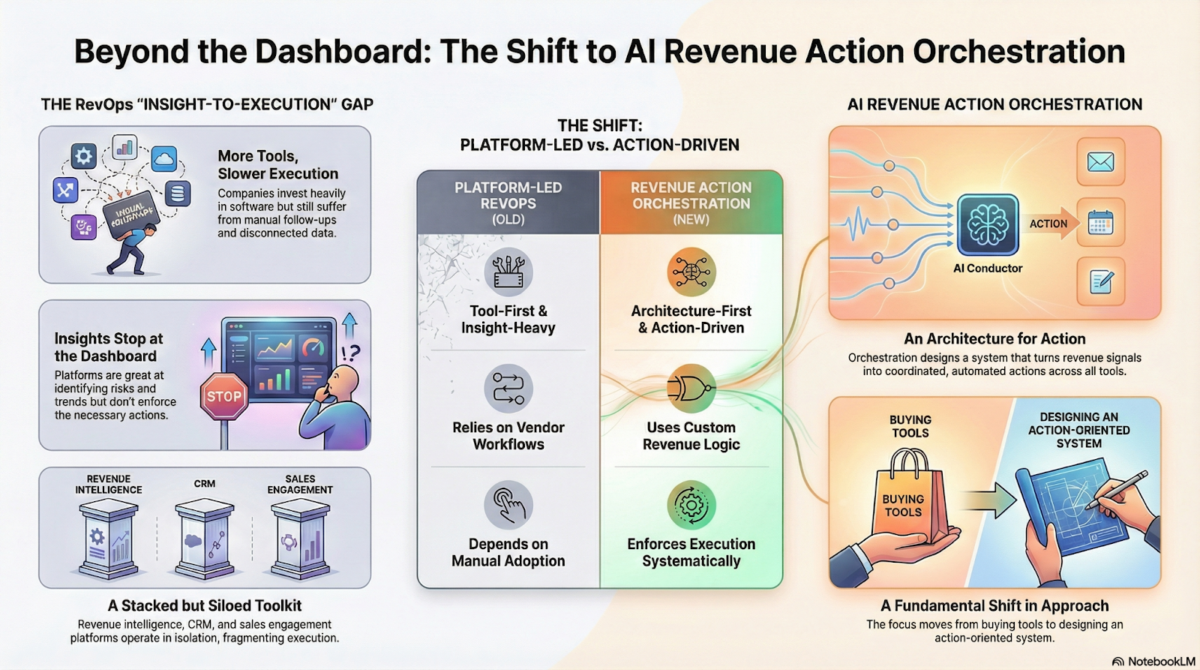

5. Poor Integration with Existing Systems and Workflows

Low adoption and siloed data are common outcomes of deploying LLMs as stand-alone tools. If companies don’t fully integrate AI into their current systems, they won’t be able to use all of its benefits. It is one of the biggest AI integration challenges.

For example, a chatbot powered by an LLM that isn’t linked to your knowledge base or CRM will have a hard time giving users accurate, relevant information.

How does Dextralabs help with LLM integration?

We ensure deep integration with your existing systems and workflows. This includes:

- Mapping LLM workflows to business processes.

- Connecting the LLM to CRMs, ERPs, and internal databases.

- Enabling real-time data sharing between systems for a consistent user experience.

By embedding LLMs into your core ecosystem, we transform them into productivity amplifiers, not just standalone tools.

6. Underestimating Infrastructure and Computational Costs

Operating LLMs takes a lot of money and processing power. Many businesses fail to plan for scaling, monitoring, and optimization, and unexpected cost overruns are the result.

For instance, if models aren’t configured to run efficiently, inference costs can skyrocket.

Dextralabs Fix:

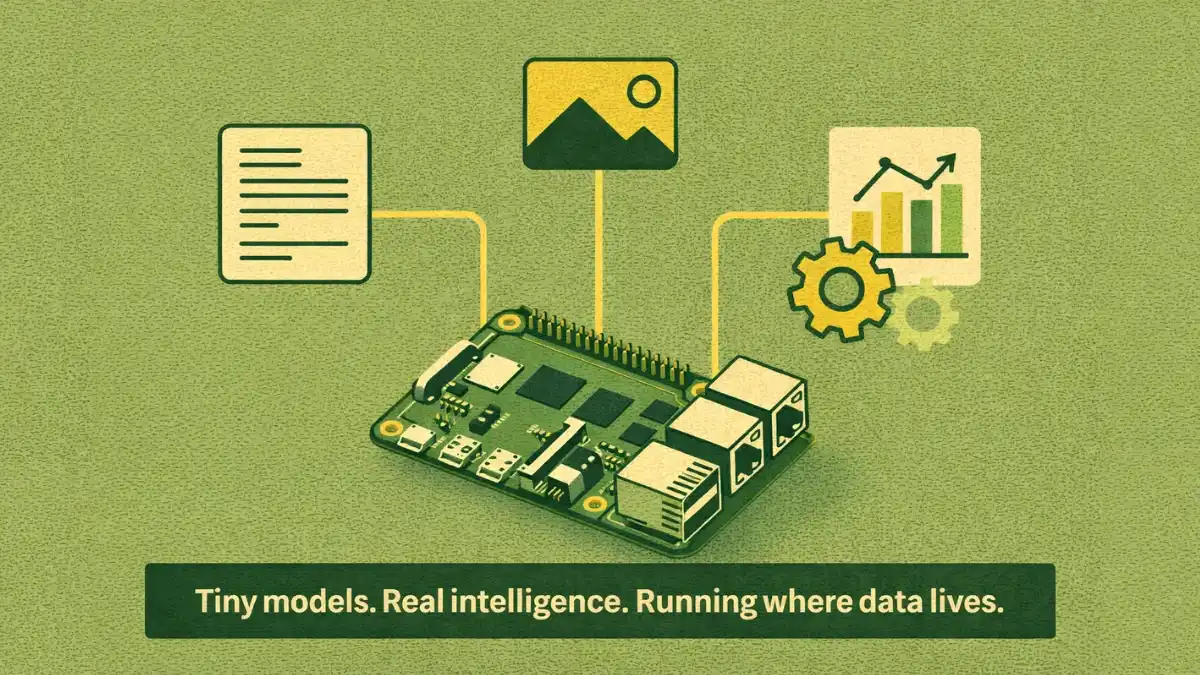

We use cutting-edge methods to create cost-optimized LLM stacks, such as:

- Caching: Reusing earlier responses to avoid repeated computation.

- Quantization: Storing model weights at lower numerical precision to save memory and compute, with little loss of accuracy.

- Model distillation: Training smaller, more efficient models to handle particular tasks.

- Usage analytics: Tracking and optimizing the use of resources.
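
Of the methods above, response caching is the simplest to show in code. This is a minimal in-memory sketch: the `CachedModel` wrapper is a hypothetical name, and the lambda stands in for a real (and expensive) LLM call.

```python
import hashlib


class CachedModel:
    """Wraps a model callable and reuses answers for repeated prompts."""

    def __init__(self, model):
        self._model = model
        self._cache = {}
        self.calls = 0  # how many times the underlying model actually ran

    @staticmethod
    def _key(prompt):
        # Normalize case and whitespace so trivial variants share one entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def __call__(self, prompt):
        key = self._key(prompt)
        if key not in self._cache:
            self.calls += 1
            self._cache[key] = self._model(prompt)
        return self._cache[key]


# Stand-in for an expensive LLM call.
model = CachedModel(lambda prompt: f"answer to: {prompt}")

first = model("What is RAG?")
second = model("  what is rag? ")  # served from the cache
print(model.calls)  # 1
```

Exact-match caching like this suits prompts whose answers do not depend on the user or the current time. Personalized or time-sensitive queries need a cache key that includes that context, or no caching at all.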

7. Overreliance on Pre-Trained LLMs Without Retrieval-Augmented Generation

A pre-trained LLM often hallucinates answers to questions about topics it wasn’t trained on. Without Retrieval-Augmented Generation (RAG), these models can’t access real-time internal knowledge or documents, so they give wrong or incomplete answers.

Dextralabs Fix:

We use RAG pipelines to make your LLM work better. This means:

- Connecting internal databases, documents, and wikis to the model’s response engine.

- Making it possible to get domain-specific knowledge in real time.

- Lowering the number of hallucinations and raising the level of accuracy.

By integrating LLMs with RAG, we help your models produce results that are both dependable and relevant to the situation.
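
The core RAG idea can be sketched in a few lines: retrieve the most relevant internal documents, then place them in the prompt. The example below is a toy, assuming word-count cosine similarity in place of the embedding models and vector stores that real pipelines typically use. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query, documents, k=2):
    """Ground the model by placing retrieved context ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical internal knowledge base.
docs = [
    "Refund requests are accepted within 30 days of purchase.",
    "Support is available on weekdays from 9am to 6pm.",
    "Enterprise plans include a dedicated account manager.",
]
query = "How long is the refund window after purchase?"
print(build_prompt(query, docs, k=1))
```

The prompt produced here would then be sent to the LLM. Instructing the model to say when the context is insufficient is one common way to discourage invented answers.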

What are the Best Strategies to Avoid These Pitfalls?

A successful enterprise LLM implementation requires smart and proactive planning. Here are the best practices for avoiding common LLM deployment pitfalls:

1. Invest in Data Hygiene

Any AI system relies on data quality. Clean, annotate, and update datasets regularly to remove errors, duplication, and useless information. Use data validation pipelines to make sure the data is correct and fair. For businesses, using master data management (MDM) methods makes sure that all teams and systems are on the same page. When data is clean, models are more accurate and there are fewer mistakes.

2. Select High-Impact Use Cases

An LLM isn’t always necessary. Concentrate on repetitive, important tasks where LLMs can have a big effect, such as automating answers to frequently asked questions, summarizing reports, or producing marketing materials tailored to each customer. Prioritize the use cases that matter most to your business goals and have clear success indicators. This helps your investment pay off and gives stakeholders more confidence in it.

3. Implement Continuous Testing

AI models need monitoring to work well. Set up automated pipelines that check how the model performs in different situations. Tools like PromptLayer or LangSmith can help you track metrics such as accuracy and response time. Collect user feedback to improve both prompts and outputs. Testing your LLM regularly helps it stay reliable and adapt to new needs.

4. Ensure Seamless Integration

LLMs function best when integrated into your existing systems. Link LLM processes to your company’s operations by integrating them with technologies such as knowledge bases, CRMs, and ERPs. To enable real-time data sharing between your systems and the LLM, use APIs. An LLM that works well with other systems boosts productivity and makes the user experience better. It also helps people work together better and breaks down barriers.

5. Optimize Costs

Running LLMs can be expensive, but good planning helps. Use a mix of large and small models, matched to the task. Use response caching to avoid repeating the same computation. Quantization and model distillation both lower computing costs with little loss of accuracy. Review usage often to spot problems and keep expenses down.
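
To see why quantization saves resources, it helps to do the arithmetic: the memory needed for model weights is roughly the parameter count times the bits per weight, divided by 8 to get bytes. The sketch below assumes a hypothetical 7-billion-parameter model and counts weights only. Activations, the key-value cache, and quantization metadata add to the real total.

```python
def weight_memory_gb(num_parameters, bits_per_weight):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_parameters * bits_per_weight / 8 / 1e9


params = 7_000_000_000  # hypothetical 7B-parameter model

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gb(params, bits):.1f} GB")
# 16-bit: 14.0 GB
# 8-bit: 7.0 GB
# 4-bit: 3.5 GB
```

Halving the precision halves the weight memory, which can be the difference between needing several accelerators and fitting on one. Whether accuracy holds up at lower precision has to be measured on your own tasks.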

6. Leverage Retrieval-Augmented Generation (RAG)

Pre-trained LLMs often have trouble with domain-specific questions, which can lead to hallucinations or irrelevant outputs. Pair your LLM with Retrieval-Augmented Generation (RAG) to make it more accurate. RAG pipelines let the model draw on internal data stores, like wikis or databases, in real time. This helps keep answers correct, appropriate to the situation, and trustworthy.

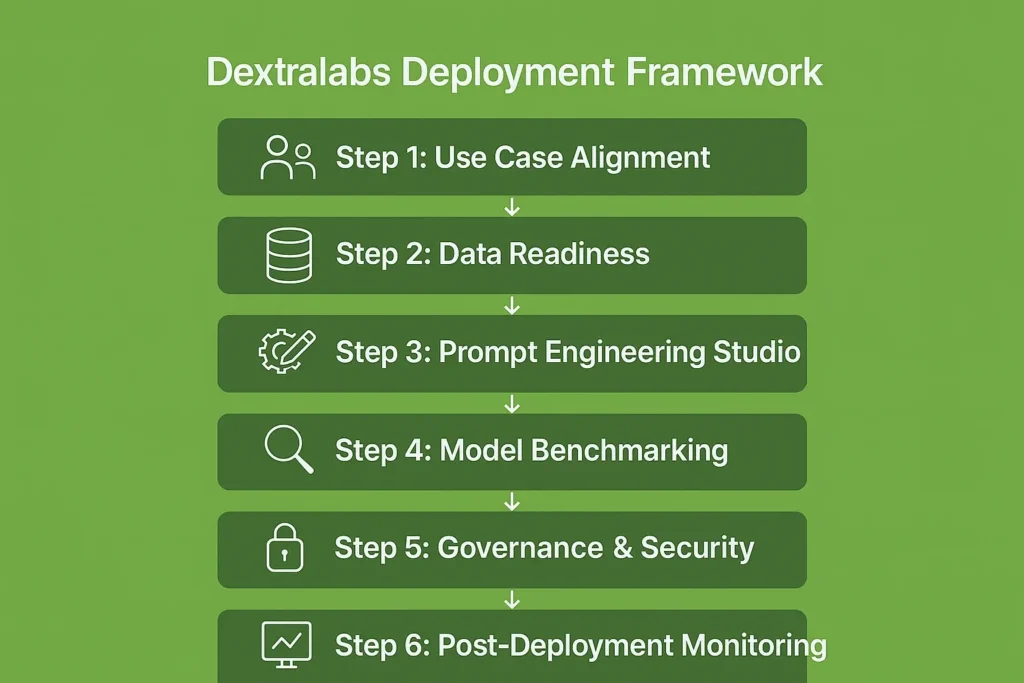

Pre-Deployment Best Practices by Dextralabs:

At Dextralabs, we follow a structured approach, built on proven LLM deployment best practices, to make your deployment successful from the start. Here’s how we prepare scalable and reliable AI implementations for enterprises:

1. Business-Aligned AI Strategy

Our first step is to match your business objectives with your AI use case. This entails determining the tasks, such as automating customer service or producing insights from sizable datasets, where LLMs can be most useful. We specify precise success criteria, like reduced expenses or increased productivity. This ensures your AI strategy is focused and delivers measurable results.

2. Data Readiness Checks

Data readiness is critical for effective enterprise LLM deployment. We conduct thorough data audits to clean, filter, and structure your internal knowledge base. Unstructured data is formatted for LLM processing, and we check for biases and inconsistencies to ensure data accuracy and reliability. This step makes sure your model works from high-quality inputs.

3. Prompt Engineering Studio

Prompts guide how an LLM behaves. At Dextralabs, we design reusable, structured prompts tailored to your specific use cases, such as generating reports or answering customer queries. We test and refine these prompts so that they deliver consistent and accurate outputs. This process helps the model perform better and reduces errors.

4. Model Benchmarking

It’s very important to pick the right model. We evaluate leading models such as GPT-4, Claude, Gemini, and Mistral to find the best one for your needs. Our benchmarking method judges models on accuracy, speed, and scalability. We also evaluate context retention and multimodal capabilities. This helps make sure that you use a model that is both strong and affordable.

5. Security & Governance Layer

Security is a top priority when scaling AI in the enterprise. We implement role-based access controls to protect sensitive data. Logging mechanisms track how the model is used, ensuring transparency. Fallback systems lower risk by handling errors gracefully. These precautions help keep your enterprise LLM deployment safe, compliant, and in line with industry norms.
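
As a simplified illustration of role-based access control with logging, the sketch below filters documents by the user’s role before anything reaches the model and logs each denial. The `Document` class, the role names, and the logger name are assumptions for this example, not a description of any specific product.

```python
import logging
from dataclasses import dataclass

logger = logging.getLogger("llm_access")  # hypothetical logger name


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset


def authorized_documents(user_role, documents):
    """Return only the documents the given role may read, logging denials."""
    visible = []
    for doc in documents:
        if user_role in doc.allowed_roles:
            visible.append(doc)
        else:
            logger.info("denied role=%s doc=%s", user_role, doc.doc_id)
    return visible


# Hypothetical documents and roles.
documents = [
    Document("faq", "Refunds are accepted within 30 days.",
             frozenset({"agent", "analyst"})),
    Document("ledger", "Q3 account balances by customer.",
             frozenset({"analyst"})),
]

visible = authorized_documents("agent", documents)
print([doc.doc_id for doc in visible])  # ['faq']
```

Filtering before retrieval means restricted text never enters the prompt, so the model cannot leak it in an answer.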

Post-Deployment Checklist for Enterprise-Grade LLMs

Large Language Model integration is just the first step. The real challenge is making sure it works well and meets the needs of your business. Without a strong post-deployment plan, even the most advanced models may not work. Use the following list to make sure that your enterprise LLM implementation is reliable and works well:

1. Continuous Evaluation of Output Quality

Regularly check model output quality. The information must be accurate, relevant, and in a suitable tone. PromptLayer and LangSmith are two tools that can help you keep track of performance metrics and find places where you can improve.

2. Feedback and Prompt Refinement Loop

Feedback from users is very important for making models work better. Set up systems to get feedback and corrections from users. Use this information to improve prompts and make the model more accurate.

3. Observability Tooling

Understanding how your LLM works in production requires observability. Tools such as Weights & Biases can help you identify and fix issues like latency and drift by tracking model performance. According to a study by Stanford CRFM, model drift and hallucination affect 45%+ of LLM applications within 6 months of launch.

4. Periodic Performance and Hallucination Audits

Models can drift or start to hallucinate over time. Audit the model regularly to make sure it still meets business goals and gives correct results.

5. Human-in-the-Loop (HITL) Systems

For critical tasks, incorporate HITL workflows. This ensures that sensitive or high-stakes outputs are reviewed by humans before being delivered to end-users.
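
A HITL workflow needs a rule for deciding which outputs go to a person. The sketch below is one hypothetical rule: send an answer to review if its confidence score is low or if it mentions a sensitive term. The `confidence` value is assumed to come from an upstream scoring step, and the threshold and term list are placeholders to tune for your own domain.

```python
def route_output(answer, confidence, sensitive_terms, threshold=0.8):
    """Return "human_review" or "auto_send" for a drafted answer."""
    if confidence < threshold:
        return "human_review"
    lowered = answer.lower()
    if any(term in lowered for term in sensitive_terms):
        return "human_review"
    return "auto_send"


# Hypothetical terms that should always trigger review.
SENSITIVE = ["wire transfer", "account number"]

print(route_output("Our office opens at 9am.", 0.95, SENSITIVE))
# auto_send
print(route_output("Please confirm the wire transfer.", 0.95, SENSITIVE))
# human_review
print(route_output("Our office opens at 9am.", 0.40, SENSITIVE))
# human_review
```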

6. Fallback Mechanisms

Always have a fallback system in place. For example, if the LLM fails to generate a satisfactory response, it should defer to a search engine or a predefined knowledge base.
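
A fallback chain along these lines might look like the sketch below. All names are hypothetical, and the minimum-length check is only a placeholder for whatever definition of a satisfactory response fits your application.

```python
def answer_with_fallback(question, llm, knowledge_base, min_length=20):
    """Try the LLM first, then a predefined knowledge base, then a default.

    Returns a (answer, source) tuple so callers can log which path was used.
    """
    try:
        draft = llm(question)
    except Exception:
        draft = None  # treat any model failure as "no answer"

    # Placeholder quality check: reject empty or very short drafts.
    if draft and len(draft.strip()) >= min_length:
        return draft, "llm"

    canned = knowledge_base.get(question.strip().lower())
    if canned:
        return canned, "knowledge_base"

    return "Sorry, I can't answer that yet. A team member will follow up.", "default"


def flaky_llm(question):
    """Stand-in for a model call that fails."""
    raise TimeoutError("model unavailable")


# Hypothetical predefined answers, keyed by lowercased question.
kb = {
    "what is the refund window?": "Refunds are accepted within 30 days of purchase.",
}

print(answer_with_fallback("What is the refund window?", flaky_llm, kb))
# ('Refunds are accepted within 30 days of purchase.', 'knowledge_base')
```

Returning the source alongside the answer makes it easy to track how often the fallback path is taken, which is itself a useful health signal.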

Case Study: How Dextralabs Helped a UAE FinTech Scale GenAI Securely

Let’s look at a real-life scenario that shows how important it is to have a plan for enterprise LLM deployment. A top finance firm in the UAE came to Dextralabs with an urgent problem: its AI-powered chatbot was giving inconsistent results, which made customers unhappy and exposed the firm to compliance risk.

The Challenge:

- The chatbot frequently hallucinated, giving customers erroneous or irrelevant answers to their questions.

- Insufficient role-based access controls put important financial data at risk.

- Users had bad experiences because the system couldn’t connect to the business’s knowledge base and CRM.

The Solution:

Dextralabs came up with a solution that addressed these problems in many ways:

1. Custom Prompt Engineering: We built custom prompts to help the chatbot answer questions in a way that was correct, context-aware, and fit with the company’s tone of voice.

2. Retrieval-Augmented Generation (RAG): We made it possible for the chatbot to get real-time, domain-specific information by connecting the company’s internal knowledge base to the LLM’s response engine.

3. Role-Based Access Control: We added a governance layer to limit access to sensitive data and make sure we followed industry rules.

4. System Integration: We integrated the chatbot with the company’s CRM and other internal tools, giving users a unified experience.

The Outcome:

- 38% improvement in CSAT (Customer Satisfaction Score): Customers got answers that were more accurate and helpful, which made their experience better.

- 64% reduction in hallucination errors: The RAG pipeline made the chatbot much more accurate, which lowered the risk of giving out wrong information.

- Improved Compliance: Role-based access controls made sure that sensitive data was handled safely, lowering the risk of not following the rules.

This case study shows that a strategic approach to enterprise LLM implementation can solve important problems and get results that can be measured.

Final Thoughts: Build AI That Actually Works at Scale

Scaling AI in enterprises requires more than new tools and technology. It needs a robust structure to work, grow, and meet organizational goals. That structure covers everything from preparing the data to monitoring the system after it has been deployed.

With the right plan, tools, and people, generative AI can make your business better. At Dextralabs, we help you find high-impact use cases and make sure they fit with your business goals from start to finish. We build structured, reusable workflows so that results are consistent and reliable. We handle everything from data preparation to integration and governance.

Avoiding these pitfalls isn’t just technical—it’s strategic. At Dextralabs, we don’t just build LLMs; we build enterprise-grade AI systems that work, scale, and evolve.

FAQs on Enterprise AI Pitfalls:

Q. What’s the #1 reason LLM projects fail?

Poor use case alignment is the most prevalent reason. A lot of businesses use LLMs without precisely stating the business problem they want to solve, which wastes resources and doesn’t meet expectations.

Q. Can open-source LLMs work for enterprises?

Yes, open-source LLMs can work well if you have the necessary security, infrastructure, and fine-tuning tools. They are frequently a cheaper option than proprietary models.

Q. How do I prevent hallucination in production?

To cut down on hallucinations, combine Retrieval-Augmented Generation (RAG) with prompt engineering. Regular audits and feedback loops also help keep things accurate.

Q. What if I don’t have a GenAI team?

If you don’t have the right skills in-house, partnering with an AI consulting firm like Dextralabs can help. We help with everything, from planning to deployment.

Q. What are the most common pitfalls in deploying LLMs at scale?

The biggest challenges in LLM integration include insufficient data preparation, poor integration with existing systems, and underestimating infrastructure costs.

Q. How can organizations reskill teams for AI adoption?

Invest in training programs that teach MLOps, prompt engineering, and AI governance. With more training, your employees will be better able to manage and grow AI systems.

Q. Which technical standards are crucial for AI scaling?

The most important standards for scaling AI cover security protocols, cost optimization techniques, and continuous evaluation frameworks. These help ensure your AI systems are reliable, efficient, and compliant.