The numbers don’t lie: 52% of Fortune 500 companies have deployed generative AI in dev workflows, according to the PwC AI Outlook. McKinsey’s latest Global Survey on AI reports that 71% of organizations regularly use generative AI in at least one business function. Even more striking, 92% of Fortune 500 companies now use technology from OpenAI (the company behind ChatGPT), according to a February 2024 Financial Times report. While the tech world debates AI’s long-term impact, smart engineering leaders are already using LLMs to reduce software development costs and make better use of their dev teams’ time.

This isn’t about replacing developers—it’s about freeing them from repetitive tasks while cutting operational costs. Companies working with Dextralabs are reporting significant cost savings within their first six months of LLM implementation.

The core question facing CTOs and engineering leads is simple: How can your team use generative AI to reduce engineering spend without sacrificing quality or innovation?

Ready to Cut Engineering Costs with LLMs?

Partner with Dextra Labs to deploy custom LLM solutions tailored to your startup or enterprise.

Book a Free AI Consultation Now

Why Engineering Teams Are Prime Targets for Cost Optimization

Engineering cost reduction with AI has become a critical focus because engineering talent is one of the largest cost centers in any tech company. Senior developers command six-figure salaries, and competition for top talent continues to drive compensation higher. Yet much of their time is consumed by routine tasks that don’t require their full expertise.

Consider the typical engineering workflow: developers spend hours writing documentation, creating internal tools, troubleshooting deployment issues, and answering repetitive questions from junior team members. These activities are necessary but don’t directly contribute to product innovation or customer value.

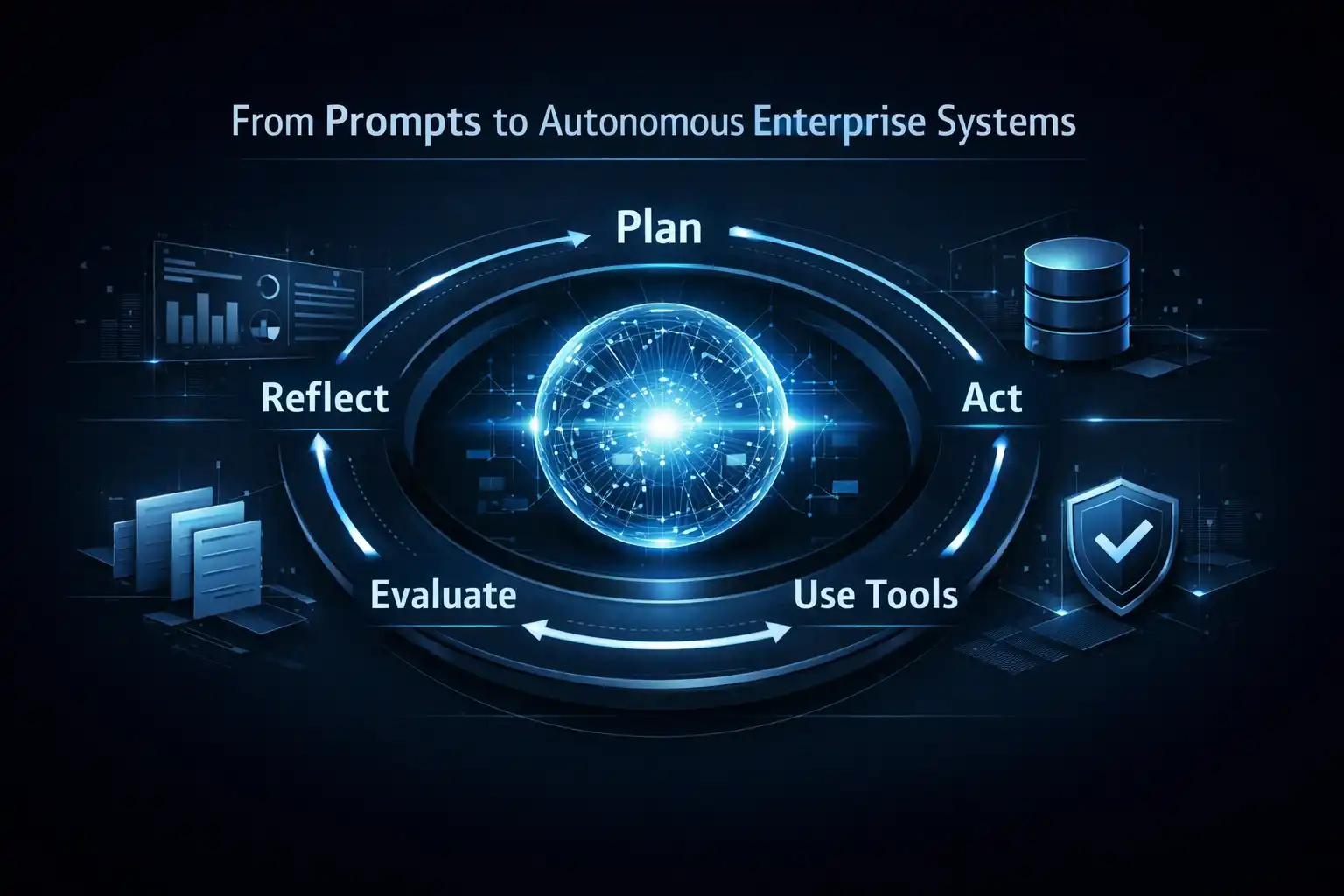

LLMs in software engineering excel at exactly these types of structured, knowledge-intensive tasks. LLM-driven automation can process context, follow patterns, and generate consistent output at scale—without requiring benefits, vacation time, or performance reviews.

The math becomes compelling when you compare the two options side by side: a $150,000-per-year engineer spending hours writing API documentation, versus an LLM generating the same content in minutes for an engineer to review.
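To make that comparison concrete, here is a rough back-of-the-envelope sketch. Only the $150,000 salary comes from the text above. The 2,080 paid hours per year, the six hours of manual writing, the one hour of review, and the $2 of API usage are illustrative assumptions that you should replace with your own numbers.

```python
# Back-of-the-envelope comparison of manual vs LLM-assisted documentation.
# Assumptions (illustrative, not from the article): 2,080 paid hours per year,
# 6 hours to write the docs by hand, 1 hour to review an LLM draft,
# and $2 of API usage per documentation task.
HOURS_PER_YEAR = 2080


def hourly_cost(annual_salary: float) -> float:
    """Convert an annual salary into an approximate hourly cost."""
    return annual_salary / HOURS_PER_YEAR


def task_cost(hours: float, annual_salary: float, api_cost: float = 0.0) -> float:
    """Engineer time spent on the task plus any API spend."""
    return hours * hourly_cost(annual_salary) + api_cost


manual = task_cost(hours=6, annual_salary=150_000)
assisted = task_cost(hours=1, annual_salary=150_000, api_cost=2.0)

print(f"manual: ${manual:.2f}")
print(f"LLM-assisted: ${assisted:.2f}")
print(f"saved per task: ${manual - assisted:.2f}")
```

Salary alone understates the fully loaded cost of an engineer, so the real gap is likely wider than this sketch suggests.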

Key Use Cases Where Fortune 500s Are Slashing Engineering Spend:

1. Code Generation & Internal Tooling

GitHub Copilot has become the poster child for AI-assisted development, but teams at startups and large corporations alike are implementing more sophisticated LLM applications. They’re using custom prompts and prompt engineering in their dev workflows to build entire internal applications, generate database migration scripts, and create administrative dashboards.

Dextralabs has developed prompt libraries specifically for common engineering tasks. These templates are tailored to your technology stack and can generate context-appropriate code that follows your team’s conventions and security requirements.

2. Automated Technical Documentation

Technical documentation often becomes the bottleneck that slows down development cycles. Engineers hate writing it, but it’s essential for maintenance and team knowledge sharing.

Generative AI can analyze codebases and generate comprehensive documentation, including architecture diagrams, API specifications, and onboarding guides. LLMs can also keep documentation current by automatically updating it when code changes.

A fintech startup client reduced their documentation overhead by 120+ hours per month using Claude to generate and maintain their internal technical docs. Their senior developers now focus on architecture decisions instead of explaining how their APIs work.

3. DevOps & Incident Handling with AI Agents

System outages and production issues require immediate attention, but much of the initial triage work follows predictable patterns. AI-driven DevOps automation allows LLMs to analyze log files, cross-reference known issues, and generate runbooks for common problems.

Fortune 500 companies are deploying ChatOps-style agents trained on their internal procedures as part of their generative AI strategy. When an alert fires, AI agents can immediately provide context about similar past incidents, suggest probable causes, and recommend initial troubleshooting steps.

This approach reduces escalation time and helps junior engineers resolve issues that previously required senior developer intervention. The cost benefit extends beyond salary savings: faster resolution times mean less downtime and happier customers.
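As a minimal sketch of the "similar past incidents" step, the snippet below ranks historical incidents by word overlap with a new alert and assembles a triage prompt. The incident records, the `build_triage_prompt` helper, and the Jaccard scoring are all illustrative assumptions; a production agent would more likely use embeddings and your real incident store.

```python
import re

# Hypothetical incident history; in practice this would come from your ticketing system.
HISTORY = [
    {"id": "INC-1", "summary": "Checkout service database connection pool exhausted",
     "fix": "Raised pool size and restarted workers"},
    {"id": "INC-2", "summary": "TLS certificate expired on API gateway",
     "fix": "Renewed certificate"},
    {"id": "INC-3", "summary": "Disk full on logging node",
     "fix": "Rotated logs and expanded volume"},
]


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard similarity: shared words divided by all distinct words."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def similar_incidents(alert: str, history: list[dict], top_k: int = 2) -> list[dict]:
    """Return the top_k past incidents most similar to the alert text."""
    ranked = sorted(history, key=lambda inc: similarity(alert, inc["summary"]), reverse=True)
    return ranked[:top_k]


def build_triage_prompt(alert: str, history: list[dict]) -> str:
    """Assemble a prompt that gives the model past incidents as context."""
    context = "\n".join(
        f"- {inc['id']}: {inc['summary']} (fix: {inc['fix']})"
        for inc in similar_incidents(alert, history)
    )
    return (
        f"New alert: {alert}\n"
        f"Similar past incidents:\n{context}\n"
        "Suggest probable causes and first troubleshooting steps."
    )


print(build_triage_prompt("database connection pool exhausted on checkout", HISTORY))
```

The resulting prompt would then be sent to whichever model your ChatOps agent uses; that call is left out here on purpose.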

4. Faster Onboarding with AI-Powered Internal Copilots

New developer onboarding typically takes 3-6 months before team members become fully productive. During this period, senior engineers spend significant time answering questions about codebases, explaining architectural decisions, and reviewing junior developers’ work.

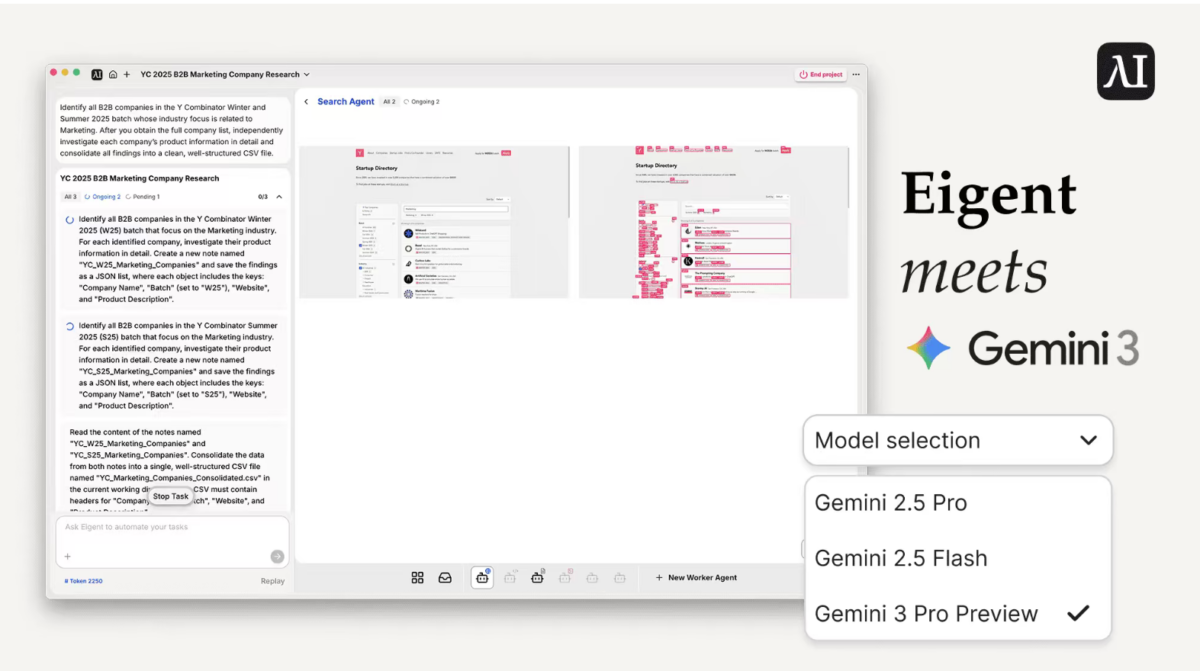

LLM-powered internal copilots can answer common questions instantly. These systems use RAG (Retrieval-Augmented Generation) to search through code repositories, documentation, and chat histories and then ground the model’s answer in what they find, which keeps responses accurate and contextual.

High-growth tech companies are building these systems using LangChain and similar frameworks, creating conversational interfaces that junior developers can query about internal patterns, deployment procedures, and coding standards. The result is faster time-to-productivity and reduced mentoring overhead, both of which lower burn rate.
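The retrieval half of RAG can be illustrated without any framework. The sketch below scores documents against a question with term-frequency cosine similarity and builds a grounded prompt. The document names and contents are made up, and real systems (including those built on LangChain) typically use vector embeddings rather than raw word counts.

```python
import math
import re
from collections import Counter

# Hypothetical internal docs; a real copilot would index repositories, wikis, and chat logs.
DOCS = {
    "deploy.md": "Deploy with make release after CI passes on the main branch",
    "style.md": "Python code uses black formatting and type hints",
    "oncall.md": "On-call rotation changes every Monday",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts for the text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], top_k: int = 2) -> list[tuple[str, str]]:
    """Return the top_k (name, text) pairs most similar to the question."""
    q = vectorize(question)
    ranked = sorted(docs.items(), key=lambda item: cosine(q, vectorize(item[1])), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, docs: dict[str, str]) -> str:
    """Ground the question in retrieved passages and ask for cited answers."""
    context = "\n".join(f"[{name}] {text}" for name, text in retrieve(question, docs))
    return (
        "Answer using only the context below and cite the source file.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_prompt("How do I deploy a release?", DOCS))
```

Asking the model to cite the source file makes it easier for a new hire to verify an answer against the original document.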

5. Prompt Engineering as a Replacement for Manual Scripting

Many engineering tasks involve repetitive scripting for testing, data validation, and quality assurance. Well-crafted prompts can replace these manual processes with intelligent automation that adapts to different scenarios.

Instead of writing custom scripts for each testing scenario, teams can use prompt workflows that understand the testing context and generate appropriate validation logic. This approach is particularly effective for integration testing, data pipeline validation, and project estimation.
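One way such a prompt workflow might look is sketched below: a reusable template turns column rules into a prompt, and the model’s output is checked for valid syntax before anyone relies on it. The template wording, the `generate_validator` helper, and the `fake_llm` stand-in are all hypothetical; swap in your real model client where `fake_llm` is used.

```python
import ast
from typing import Callable

# Hypothetical prompt template; adjust the wording to your own conventions.
PROMPT_TEMPLATE = """You are generating a data validation function.
Table: {table}
Columns and rules:
{rules}
Return only Python code defining `validate(row: dict) -> list[str]`."""


def build_validation_prompt(table: str, rules: dict[str, str]) -> str:
    """Fill the template with one bullet per column rule."""
    rule_lines = "\n".join(f"- {col}: {rule}" for col, rule in rules.items())
    return PROMPT_TEMPLATE.format(table=table, rules=rule_lines)


def generate_validator(llm: Callable[[str], str], table: str, rules: dict[str, str]) -> str:
    """Ask the model for validation code and reject malformed output."""
    code = llm(build_validation_prompt(table, rules))
    tree = ast.parse(code)  # raises SyntaxError if the output is not valid Python
    defined = {node.name for node in tree.body if isinstance(node, ast.FunctionDef)}
    if "validate" not in defined:
        raise ValueError("model output does not define validate()")
    return code


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return (
        "def validate(row):\n"
        "    return [] if row.get('amount', 0) >= 0 else ['amount must be >= 0']\n"
    )


print(generate_validator(fake_llm, "payments", {"amount": "must be non-negative"}))
```

The syntax check only catches malformed output. Generated validators still need the same review and testing as hand-written code before they run against production data.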

Dextralabs provides prompt optimization services that help teams identify these automation opportunities and implement high-performance prompt workflows integrated into their development lifecycle.

Case Studies: LLMs in Action

1. Fortune 500: Global Tech Giant Cuts DevOps Escalation Time by 60%

A major technology company was struggling with their DevOps escalation process. Level 1 support tickets often sat in queues for hours before reaching qualified engineers, and many issues required extensive context gathering before resolution could begin.

Dextralabs implemented a GPT-based ChatOps assistant that could instantly analyze support tickets, match them against historical incidents, and provide detailed troubleshooting guidance. The system integrated with their existing ticketing workflow and could escalate complex issues while providing comprehensive context to senior engineers.

Results after six months:

- 60% reduction in average resolution time

- 40% fewer tickets requiring senior engineer intervention

- Significant improvement in documentation quality and consistency

- Cost savings equivalent to hiring 1.5 additional senior DevOps engineers

2. Startup Unicorn: Freed Up 2 Full-Time Engineers with LLM Agents

A rapidly growing SaaS company found themselves constantly building internal tools and answering repetitive technical questions from their product and marketing teams. They had allocated two full-time engineers to handle these requests, but the workload kept growing.

The company built a custom internal assistant using prompt workflows and retrieval systems. The AI could generate database queries, create simple dashboards, explain technical concepts to non-technical team members, and even write basic automation scripts.

The implementation took 6 weeks and cost approximately $40,000 in development time. Within four months, they had reassigned both engineers to core product development while maintaining the same level of internal support.

Annual savings: $300,000+ in engineering salaries, plus the additional value generated by reassigning senior developers to revenue-generating activities.
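The payback math behind this case study can be checked in a few lines. The $40,000 build cost and $300,000 annual savings come from the figures above. The assumptions are that savings accrue evenly from day one (the case study says reassignment took about four months, so the real payback would be later) and that ongoing running costs default to zero.

```python
def payback_months(upfront_cost: float, annual_savings: float) -> float:
    """Months of savings needed to recover the upfront cost."""
    return upfront_cost / (annual_savings / 12)


def first_year_roi(upfront_cost: float, annual_savings: float,
                   monthly_running_cost: float = 0.0) -> float:
    """Net first-year gain divided by the upfront cost."""
    net = annual_savings - upfront_cost - 12 * monthly_running_cost
    return net / upfront_cost


# Figures from the case study; running cost is an assumption left at zero.
months = payback_months(upfront_cost=40_000, annual_savings=300_000)
roi = first_year_roi(upfront_cost=40_000, annual_savings=300_000)

print(f"payback: {months:.1f} months")
print(f"first-year ROI: {roi:.0%}")
```

Passing a non-zero `monthly_running_cost` (API fees, hosting, maintenance time) gives a more conservative estimate.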

LLMs vs Traditional Outsourcing: What’s More Cost-Efficient?

| Factor | Traditional Outsourcing | In-House Engineers | LLM Solutions |
| --- | --- | --- | --- |
| Setup Time | 4-8 weeks | 2-4 weeks | 1-3 weeks |
| Monthly Cost | $8,000-15,000 | $12,000-20,000 | $500-2,000 |
| Scalability | Limited by team size | Limited by hiring | Nearly unlimited |
| Quality Consistency | Variable | High | High with proper prompting |
| Communication Overhead | High | Low | Minimal |
| Time Zone Issues | Common | None | None |
| Knowledge Retention | Lost when contract ends | High | Permanent |

LLM solutions offer several advantages over traditional outsourcing models. Setup is short, there are no cultural or communication barriers, and there is no risk of losing institutional knowledge when contracts end.

The long-term ROI timeline becomes particularly compelling when you consider that LLM capabilities continue improving while costs decrease. A prompt workflow that costs $500 per month today might handle twice as much work for the same price next year.

Implementation Tips to Maximize ROI with LLMs:

Success with LLM cost optimization requires strategic thinking about which tasks to automate first. Start with high-volume, low-risk activities that follow predictable patterns.

A. Identify High-Value Opportunities: Look for tasks that consume significant developer time but don’t require creative problem-solving. Internal tooling development, documentation, and routine troubleshooting are ideal starting points.

B. Use Specialized Models and Prompts: Generic ChatGPT conversations won’t deliver enterprise-grade results. Invest in fine-tuned models or comprehensive prompt libraries that understand your specific technology stack and business context through custom prompt engineering.

C. Measure and Track ROI: Establish clear metrics before implementation. Track tokens consumed, hours saved, and changes in key performance indicators such as mean time to resolution (MTTR) and developer productivity.

D. Start Small and Scale Gradually: Begin with pilot projects that can demonstrate cost reduction opportunities quickly. Once you’ve proven ROI on simple use cases, expand to more complex automation scenarios using hybrid deployments.
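The metrics named in tip C can be captured in a small structure so every pilot is measured the same way. This is only a sketch: the field names, the sample values, and the per-1,000-token prices are placeholders, not real vendor pricing.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    """One month of measurements for an LLM pilot (all fields are illustrative)."""
    input_tokens: int
    output_tokens: int
    hours_saved: float
    mttr_before_min: float
    mttr_after_min: float


def token_cost(m: PilotMetrics, usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """API spend derived from token counts and per-1,000-token prices."""
    return (m.input_tokens / 1000) * usd_per_1k_input + (m.output_tokens / 1000) * usd_per_1k_output


def net_savings(m: PilotMetrics, hourly_rate: float,
                usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Value of engineer hours saved minus API spend."""
    return m.hours_saved * hourly_rate - token_cost(m, usd_per_1k_input, usd_per_1k_output)


def mttr_improvement(m: PilotMetrics) -> float:
    """Fractional reduction in mean time to resolution."""
    return (m.mttr_before_min - m.mttr_after_min) / m.mttr_before_min


# Placeholder numbers for a single pilot month.
pilot = PilotMetrics(input_tokens=2_000_000, output_tokens=500_000,
                     hours_saved=40, mttr_before_min=90, mttr_after_min=36)

print(f"API spend: ${token_cost(pilot, 0.01, 0.03):.2f}")
print(f"net savings: ${net_savings(pilot, 72.0, 0.01, 0.03):.2f}")
print(f"MTTR improvement: {mttr_improvement(pilot):.0%}")
```

Recording the "before" values ahead of the pilot matters most; without a baseline, none of these ratios can be computed afterward.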

Dextralabs has developed an enterprise LLM deployment blueprint that helps organizations identify optimization opportunities, implement proof-of-concept solutions, and scale successful pilots across their engineering organization while keeping Cloud FinOps for AI considerations in view.

How Dextralabs Can Help You Cut Costs with GenAI

Implementing LLM solutions requires more than just API access and basic prompts. Enterprise-grade LLM deployments need careful planning, domain expertise, and ongoing optimization to deliver consistent ROI and productivity improvement.

Custom Prompt Engineering: We develop prompt libraries specifically for your development workflow, technology stack, and business requirements. Our AI copilots, trained on your codebase, are optimized for consistency, accuracy, and cost-effectiveness.

AI Copilots for Your Codebase: We build internal assistants that understand your specific code patterns, architectural decisions, and development practices. These systems can answer questions, generate code, and provide guidance that is customized to your organization.

RAG Integration for Internal Knowledge: We implement retrieval-augmented generation (RAG) systems that can search through your documentation, code repositories, and chat histories to provide accurate, contextual answers to technical questions.

Cost Optimization Through Hybrid Deployments: We help you balance open-source and API-based LLMs to minimize costs while maintaining performance. Our approach includes local model deployment for sensitive data and API optimization for scalability, incorporating Cloud FinOps for AI best practices.
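A hybrid deployment ultimately needs a routing rule that decides which requests stay on a local model. The sketch below shows one simple version of that idea. The regex patterns and the `"local"` and `"api"` labels are illustrative assumptions and are far from a complete data-classification policy.

```python
import re

# Illustrative patterns only; a real policy would be broader and reviewed by security.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                   # US SSN-like number
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),                 # email address
    re.compile(r"(?i)\b(api[_-]?key|password|secret)\b"),   # credential keywords
]


def is_sensitive(text: str) -> bool:
    """True if any sensitive pattern appears in the text."""
    return any(pattern.search(text) for pattern in SENSITIVE_PATTERNS)


def route(prompt: str) -> str:
    """Send sensitive prompts to a local model and everything else to a hosted API."""
    return "local" if is_sensitive(prompt) else "api"


for example in [
    "Summarize this changelog for the release notes",
    "Reset the password for jane@example.com",
]:
    print(f"{route(example):5s} <- {example}")
```

Defaulting to the local model whenever a pattern matches trades some cost for safety, which is usually the right bias for sensitive data.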

Top 5 Engineering Tasks to Automate with LLMs:

| Task | Recommended Approach | Estimated Savings or Impact |
| --- | --- | --- |
| Internal Tool Development | GPT-4 + LangChain with custom prompts | 40-60 developer hours per month |
| Technical Documentation | Claude with automated generation workflows | 30% faster onboarding process |
| Runbook Creation & Updates | RAG + GPT for knowledge synthesis | Significant reduction in support escalations |
| QA Validation Scripts | Prompt-based workflow automation | 25% reduction in testing overhead |
| Technical Q&A Support | Custom LLM copilot with internal knowledge base | Eliminates daily developer interruptions |

Ready to see where your engineering team can save costs with LLM automation? Book a free GenAI Cost Audit with Dextralabs. We’ll analyze your current workflows and show you specific opportunities for cost reduction and productivity improvement.

Need Expert AI Consulting to Cut Engineering Costs?

Dextra Labs offers tailored AI consulting to help startups and enterprises deploy LLMs that save time, reduce costs, and scale faster.

Book a Free AI Consultation

Frequently Asked Questions:

Q. What’s the typical ROI timeline for LLM implementation in engineering teams?

Most organizations see initial cost savings within 4-6 weeks of implementation. Significant ROI (25-45% cost reduction) typically materializes within 3-6 months as teams adapt their workflows and expand automation to additional use cases.

Q. How do you ensure AI-generated code meets enterprise security and quality standards?

LLM-generated code should always go through the same review and testing processes as human-written code. We recommend implementing automated security scanning, comprehensive testing suites, and human oversight for critical systems. The goal is to speed up development, not bypass quality controls.

Q. Can LLMs completely replace developers?

No, and that’s not the goal. LLMs excel at routine, pattern-based tasks but struggle with creative problem-solving, architectural decisions, and complex debugging. The most successful implementations use AI to eliminate repetitive work so developers can focus on high-value activities like system design and innovation.

Q. What about data privacy and security concerns with enterprise LLM deployment?

Enterprise LLM deployments should include proper data handling protocols, access controls, and audit trails. Many organizations use hybrid approaches—leveraging cloud APIs for general tasks while running local models for sensitive data processing. Dextralabs helps implement security frameworks that meet enterprise compliance requirements.