In 2026, vision language models (VLMs) are redefining what artificial intelligence can do. These models combine visual understanding with language reasoning, linking two modes that earlier systems handled separately. VLMs interpret images, video, documents, and potentially the physical environments those inputs depict. Because they reason visually and linguistically at the same time, they can work on an X-ray, identify objects in a live feed, or parse a complex document, extending what people can accomplish with machine assistance.

This innovation comes amid momentous change. According to a report from Exploding Topics, the global artificial intelligence market is valued at an estimated $391 billion in 2025 and is projected to grow nearly fivefold by 2030, at a CAGR of 35.9%. Growth on this scale reflects how integral VLMs and generative AI are becoming to advances in technology and to industry disruption.
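As a quick sanity check on those figures, the sketch below compounds the quoted 2025 value at the quoted CAGR for five years. It assumes the rate applies annually from 2025 through 2030; the function and variable names are illustrative only.

```python
# Figures quoted in the paragraph above: $391B in 2025, 35.9% CAGR to 2030.
base_2025_billion = 391.0
cagr = 0.359
years = 2030 - 2025  # assumption: five full years of compounding


def project(value, rate, periods):
    """Compound a starting value at a fixed annual growth rate."""
    return value * (1.0 + rate) ** periods


growth_multiple = project(1.0, cagr, years)
market_2030_billion = project(base_2025_billion, cagr, years)

print(f"growth multiple: {growth_multiple:.2f}x")
print(f"implied 2030 market: ${market_2030_billion:,.0f}B")
```

Under that assumption the multiple comes out at roughly 4.6x, which is consistent with the "nearly fivefold" wording.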

Healthcare, robotics, content moderation, and virtual assistants are all being reshaped, each in its own way. For example, VLMs give robots the means to navigate their environments intelligently, give doctors the means to identify disease from medical imagery, and let software identify what is on a screen or in a picture.

Bring Vision-Language AI to Life with Dextralabs

From Gemini 2.5 Pro to the latest open-source VLMs, we help you deploy, fine-tune, and integrate cutting-edge multimodal AI for real-world impact.

Book Your Free VLM Consultation

In 2026, what will change? Three aspects will define the newest generation of VLMs:

- Long-context comprehension across pages, frames, or documents

- Video understanding that is frame-accurate and multi-language aware

- Lightweight edge models that deliver vision intelligence for phones, drones, and AR glasses

At Dextralabs, we aim to help teams move past the experimentation phase. Whether you need production LLM deployments, fine-tuning for your specific domain, or performance optimization for speed and efficiency, we help keep your vision-language stack future-proof and scalable.

What Defines a Top-Tier VLM in 2026?

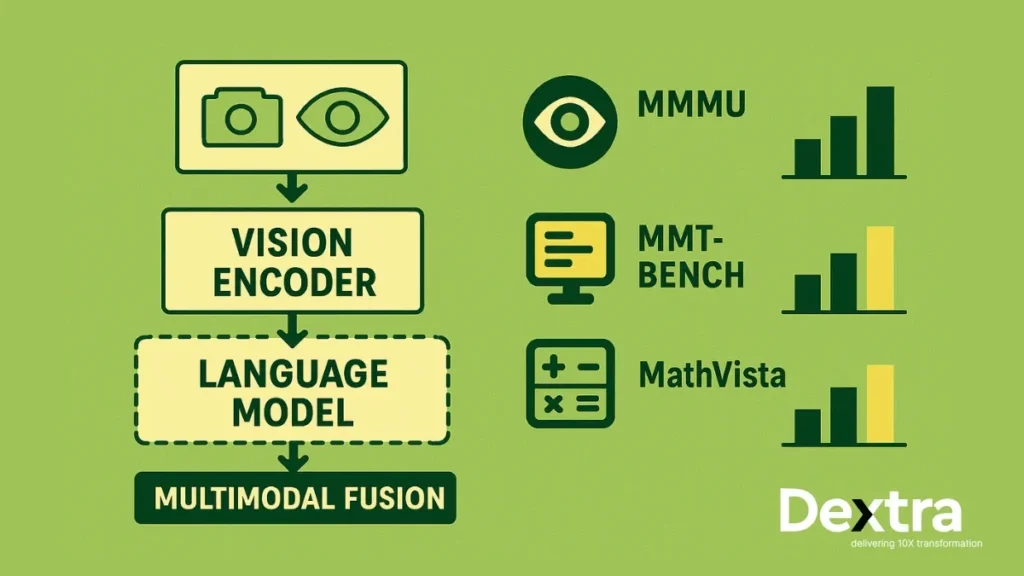

In 2026, vision language models (VLMs) are changing our understanding of how AI systems perceive and relate to the world around them. A top VLM is not defined by raw processing power alone. It combines a vision encoder, a language model, and a multimodal fusion strategy to produce results that are accurate, efficient, and broadly applicable.

At a high level, a VLM generally includes three components:

- A vision encoder that reads images and/or video,

- A language model that reads and produces text,

- A multimodal fusion mechanism that combines visual and textual representations.
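To make the three components concrete, here is a toy, standard-library-only sketch of how they can fit together. It assumes one common fusion strategy (projecting visual patch features into the text embedding space and concatenating them in front of the text tokens); real models may instead use cross-attention or other designs. Every name here (`vision_encoder`, `make_projector`, `embed_text`, `fuse`, `EMBED_DIM`) is hypothetical, and the random weights stand in for learned parameters.

```python
import random

EMBED_DIM = 8  # hypothetical width of the shared embedding space


def vision_encoder(image, patch=2):
    """Split a 2D grayscale image into patch x patch tiles, one flat vector per tile.

    Assumes the image height and width are divisible by `patch`.
    """
    features = []
    for r in range(0, len(image), patch):
        for c in range(0, len(image[0]), patch):
            features.append(
                [image[r + i][c + j] for i in range(patch) for j in range(patch)]
            )
    return features


def make_projector(in_dim, out_dim, seed=0):
    """Return a linear map from patch features to the text embedding space.

    Weights are random stand-ins for what would be learned in training.
    """
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]

    def project(vec):
        return [sum(w * x for w, x in zip(row, vec)) for row in weights]

    return project


def embed_text(tokens, dim=EMBED_DIM):
    """Toy text embedding: a deterministic pseudo-random vector per token string."""
    return [[random.Random(tok).uniform(-1, 1) for _ in range(dim)] for tok in tokens]


def fuse(visual_tokens, text_tokens):
    """Fusion by concatenation: visual tokens are placed before the text tokens."""
    return visual_tokens + text_tokens


image = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
patches = vision_encoder(image)                    # 1. vision encoder
projector = make_projector(in_dim=4, out_dim=EMBED_DIM)
visual_tokens = [projector(p) for p in patches]    # 3. fusion, step one: project
text_tokens = embed_text("what is in the image ?".split())
sequence = fuse(visual_tokens, text_tokens)        # 3. fusion, step two: concatenate

# `sequence` is what a language model (component 2) would consume.
print(len(visual_tokens), len(text_tokens), len(sequence))
```

The language model itself is left out on purpose: the point of the sketch is only the shape of the data that reaches it.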

Key Criteria to Assess the Best VLMs of 2026:

1. Accuracy of Vision-Text Reasoning

Vision-Language Models (VLMs) must handle demanding tasks such as Visual Question Answering (VQA), Optical Character Recognition (OCR), and image captioning. Models that can turn visual understanding into contextually relevant text responses enable intelligent applications in healthcare, robotics, content moderation, and other fields.

2. Long-Context Processing and Video Understanding

Modern use cases demand processing long sequences of images or video. The best VLMs hold context across thousands of tokens, which lets them process long videos and analyze video streams to deliver meaningful insights. This is a major advantage for surveillance systems, multimedia indexing and search, and autonomous systems.
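One practical consequence of a finite context window is that a video usually has to be subsampled before it fits. The sketch below picks evenly spaced frames under a token budget. It is a minimal illustration, not any particular model's preprocessing: the per-frame token cost, the budget, and the function name `sample_frame_indices` are all assumptions.

```python
def sample_frame_indices(total_frames, tokens_per_frame, token_budget,
                         reserved_for_text=0):
    """Pick evenly spaced frame indices whose combined token cost fits the budget."""
    if total_frames <= 0 or tokens_per_frame <= 0:
        return []
    available = token_budget - reserved_for_text
    max_frames = min(total_frames, available // tokens_per_frame)
    if max_frames <= 0:
        return []
    if max_frames == 1:
        return [total_frames // 2]  # a single frame: take the middle one
    step = (total_frames - 1) / (max_frames - 1)
    return [round(i * step) for i in range(max_frames)]


# Hypothetical numbers: a 5-minute clip at 30 fps, 256 tokens per frame,
# a 32,000-token window with 2,000 tokens kept back for the prompt and answer.
indices = sample_frame_indices(
    total_frames=9000, tokens_per_frame=256,
    token_budget=32000, reserved_for_text=2000,
)
print(len(indices), indices[:3], indices[-1])
```

Uniform sampling is the simplest choice; scene-change detection or query-aware selection are common alternatives when important events are short.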

3. Latency Engineering, Model Size, and Resource Efficiency

Latency engineering is the practice of balancing accuracy against speed, while a smaller, more efficient model reduces the computational load. With latency, size, and efficiency well tuned, a VLM can run real-time inference on resource-limited edge devices without a significant loss in quality.
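Latency work starts with measurement. The following sketch times repeated calls and reports median and tail latency using only the standard library. `fake_model` is a hypothetical stand-in for a real inference call, and the warmup and run counts are arbitrary choices.

```python
import statistics
import time


def measure_latency(fn, payload, warmup=2, runs=20):
    """Time repeated calls to fn(payload) and summarize latency in milliseconds."""
    for _ in range(warmup):  # warm caches before timing
        fn(payload)
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(payload)
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    samples_ms.sort()
    # Nearest-rank style index for the 95th percentile.
    p95_index = min(len(samples_ms) - 1, int(round(0.95 * (len(samples_ms) - 1))))
    return {
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }


def fake_model(payload):
    """Hypothetical stand-in for a VLM call; sleeps about 2 ms instead of inferring."""
    time.sleep(0.002)
    return "caption"


stats = measure_latency(fake_model, {"image": b"", "prompt": "describe"})
print({name: round(value, 2) for name, value in stats.items()})
```

Reporting p95 alongside the median matters for real-time use: a model with a good average but a long tail can still miss frame deadlines.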

4. Fine-Tuning Support and Open Source Licensing

The ability to customize models using domain-specific data is a must-have. Models that support fine-tuning through few-shot or instruction-tuning approaches let businesses tailor AI workflows. Open-source licenses drive transparency and innovation, giving developers full visibility into, and control over, their model deployments.

5. Deployment Flexibility: Cloud, On-Device, and Edge

A key feature of a top-tier VLM is the ability to deploy to any environment (cloud for scale, on-device for privacy, or edge for low latency) while preserving flexibility around procurement, runtime costs, and compliance.

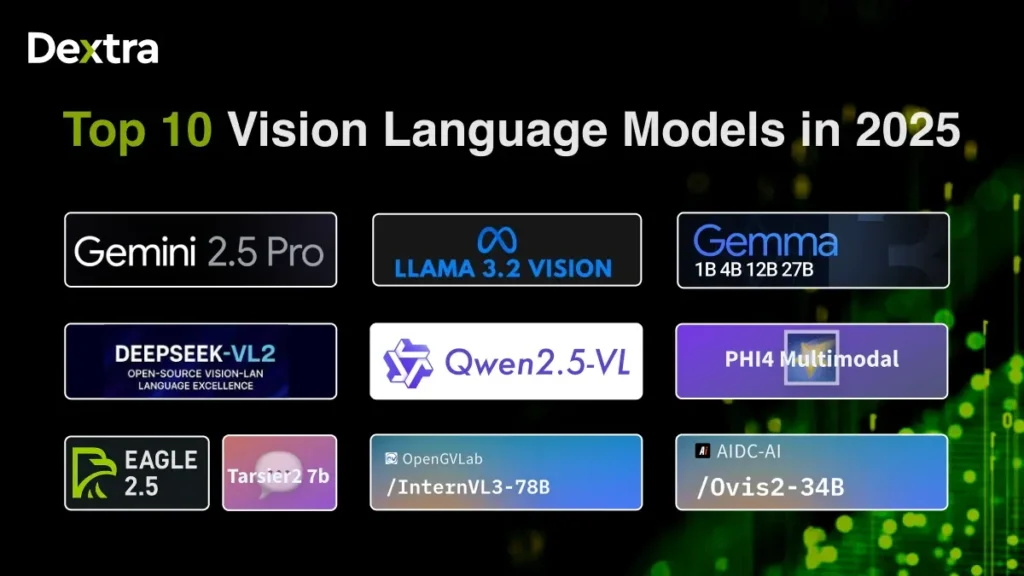

What are the 10 Best Vision Language Models of 2026?

Here is a more in-depth overview of the 10 most impactful Vision Language Models (VLMs) for 2026 and how they differ by use case, ranging from video to industrial work to lightweight edge processing.

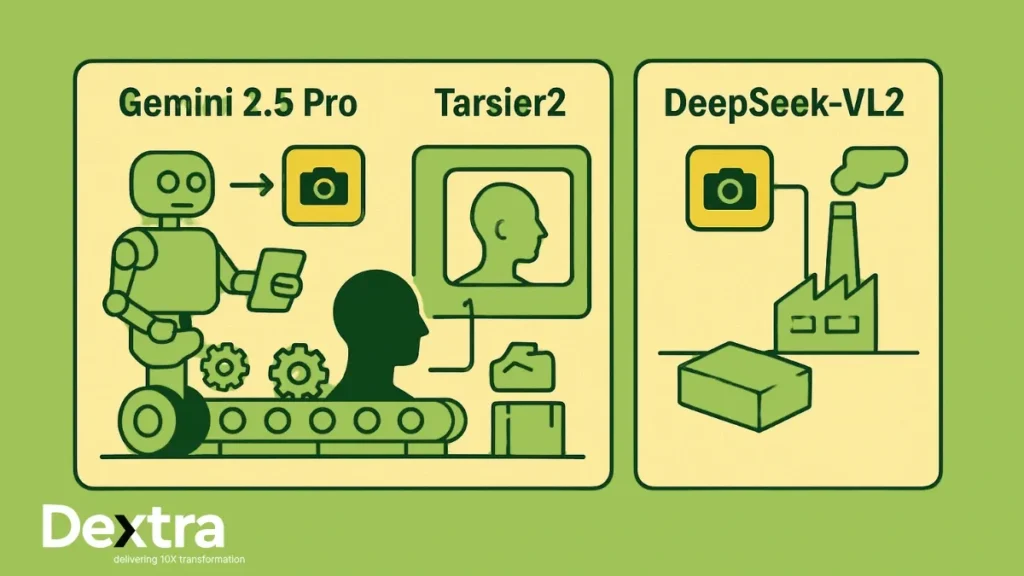

1. Gemini 2.5 Pro (Google)

This is Google’s most advanced proprietary VLM, distinguished by its “thinking-model” architecture, which reasons through a problem step by step before responding. It accepts input from multiple modalities (text, images, video, audio) and offers a context window of more than 1 million tokens, with 2 million tokens coming soon, as announced in the Google Blog on Gemini 2.5 updates.

It consistently tops leaderboards such as LMArena and WebDev Arena, and has performed well on reasoning, coding, math, and science benchmarks, including Humanity’s Last Exam, AIME 2025, and GPQA.

2. InternVL3-78B

InternVL3-78B is a popular and powerful open-source VLM aimed at industrial use, with industrial and 3D reasoning capabilities. It scores 72.2 on the MMMU benchmark, which the InternVL3 technical report on arXiv describes as a new record for an open-source model, not far behind proprietary leaders such as GPT-4o or Gemini 2.5 Pro.

In addition, InternVL3-78B improved by at least 4 points over earlier versions across many multimodal reasoning benchmarks using the MPO (Mixed Preference Optimization) training methodology.

3. Ovis2-34B (AIDC-AI)

This model balances computational efficiency with accurate performance. It performs well on MMBench-style evaluations, which makes it a good fit for teams looking for a solid performance-to-resource tradeoff. Detailed public benchmark scores are still forthcoming, but its lean architecture makes it especially valuable for teams that need to conserve computational resources.

4. Qwen2.5-VL-72B-Instruct (Alibaba)

This is a high-capacity open-source VLM licensed under Apache 2.0. Qwen2.5-VL-72B-Instruct supports video input and localization, and it is multilingual. It is geared toward global use cases that need versatile capabilities along with freedom to customize.

5. Gemma 3 (1B–27B)

Gemma 3 is an open-weight VLM series from Google DeepMind with context windows up to 128K tokens. It performs exceptionally well on OCR and multilingual understanding tasks, and it scales across a variety of deployment scenarios, from small-scale deployments to enterprise-level ones.

6. LLaMA 3.2-Vision (Meta)

LLaMA 3.2-Vision is a powerful open-source VLM with a very long context window for document understanding, including OCR and VQA applications. It offers strong accuracy while remaining flexible for custom fine-tuning.

7. DeepSeek-VL2 Series

Built on a Mixture-of-Experts (MoE) architecture, the models in this series activate between 1 and 4.5 billion parameters. Because only a fraction of the total parameters runs for each input, DeepSeek-VL2 models can reason over technical and scientific tasks with very low latency. These models are well suited to deployment in a lab, factory, or mobile setting.
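The idea behind "activated parameters" can be shown with a generic top-k gating sketch: a router scores every expert, but only the best k actually run. This is a textbook illustration of MoE routing, not DeepSeek-VL2's actual router; the toy experts, the logits, and the function names are all hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_logits, k=2):
    """Evaluate only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))


# Four toy "experts"; in a real model each would be a feed-forward sub-network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
gate_logits = [0.1, 2.0, -1.0, 1.5]  # would normally come from a learned router

routing = route_top_k(gate_logits, k=2)
output = moe_forward(3.0, experts, gate_logits, k=2)
print(routing, output)
```

With k=2 of 4 experts, half of the expert computation is skipped for this input, which is the source of the latency savings described above.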

8. Phi-4 Multimodal / Pixtral

These lightweight, edge-first VLMs are designed for speed and power efficiency. They follow instructions reliably while interpreting visual input, which makes them well suited to AR glasses, smartphones, or IoT gadgets.

9. Tarsier2-7B

Tarsier2-7B is a video specialist that excels at long-form video description, frame-level Q&A, and streaming comprehension. It is reported to outperform models like GPT-4o and Gemini on video benchmarks, which has made it one of the most preferred multimodal language models for video-intensive workflows.

10. Eagle 2.5-8B

Eagle 2.5-8B is a multimodal generalist that excels at high-resolution video and image reasoning with long context. As an open-source model, it strikes a strong balance between visual specialization and general-purpose capability.

Model Highlights & Use Cases:

The latest Vision Language Models (VLMs) are not just research projects; they are driving real systems today across industries. Here is a rundown of how each of the leading VLMs in 2026 is being used, along with the sectors currently getting the most from them.

1. Gemini 2.5 Pro (Google)

Key Strengths:

- Outstanding multimodal reasoning across images, text, audio, and video.

- Long-context capabilities for analyzing hours of video or hundreds of pages simultaneously.

- Step-by-step “Deep Think” reasoning before producing results.

Industry Applications:

- Multimodal AI Agents: Powering next-gen virtual assistants that can “see” a webpage, “hear” an audio clip, and “read” a PDF, all from one query.

- Robotics Platforms: Assisting autonomous robots on the factory floor or in the warehouse by supplying contextual awareness of their environment, as well as instructions.

- Web-scale Visual Assistants: Enabling enterprise tools to reason over large archives of visual and textual information and reach conclusions.

2. InternVL3-78B

Key Strengths:

- Top MMMU benchmark results among open-source models.

- Deep 3D reasoning and CAD-friendly spatial awareness.

Industry Applications:

- 3D Modeling & Simulation: Reading specifications and designs to create interactive 3D models from engineering blueprints.

- CAD Systems Integration: Automatic validation and error correction of designs in architectural or industrial projects.

- Factory Floor Vision AI: Identifying defects, tracking workflows, and monitoring for safety compliance on the factory floor during real-time operations.

3. Qwen2.5-VL-72B (Alibaba)

Key Strengths:

- Supports video comprehension, multilingual processing, and localization.

- Fully open-source under Apache 2.0, offering complete freedom for customization without additional licensing requirements.

Industry Use Cases:

- Video Understanding Pipelines: Automated highlight detection, frame-level QA, and long-form video summarization.

- Multilingual AI Agents: Virtual assistants that can describe images or videos across many languages.

- Localization Workflows: Translating & adapting video-based training content for a global audience.

4. Gemma 3 (Google DeepMind) & LLaMA 3.2 Vision (Meta)

Key Strengths:

- Superb OCR (Optical Character Recognition) capabilities.

- Large context windows for multi-document reasoning.

Industry Use Cases:

- Document Parsing: Converting scanned contracts, invoices, or legal filings into structured, searchable, actionable data.

- OCR Tasks: Extracting text from low-quality scans or historical archives, which often contain multiple languages.

- Government-Grade Data Processing: Handling sensitive records, compliance documentation, and classified records within a secure AI framework.

5. DeepSeek-VL2

Key Strengths:

- Low-latency mixture-of-experts model that performs well even on modest hardware.

- Strong inference on scientific and technical datasets.

Industry Applications:

- Scientific Research Assistant: Processing microscopy images, chemical structures, or astronomical datasets in real-time.

- Low-Latency Apps: Instant visual reasoning in AR glasses or handheld systems.

- Edge Deployments in Compute-Limited Environments: Use at field research stations, in rural diagnostics labs, or in disaster-affected areas.

6. Tarsier2-7B & Eagle 2.5-8B

Key Strengths:

- Expertise in long-form video understanding and high-resolution image reasoning.

- Superior frame-by-frame QA for dynamic events.

Industry Applications:

- Long-Video QA: Answering complicated, timeline-based questions about multi-hour video surveillance footage or interviews.

- News Summarization: Producing short summaries of entire broadcasts or event coverage.

- Sports/Event Commentary: Autonomously generating real-time commentary, play-by-play analysis, and highlight footage.

Open Source vs Proprietary: Which One Should You Select?

When deciding between open-source and proprietary Vision Language Models (VLMs) in 2026, you are making more than a technical choice. It is a business decision that weighs costs, compliance, performance, and scalability.

Let’s go through the differences, when to use each, the implications for performance and scalability, and how organizations are making the decision today.

| Feature | Proprietary VLMs | Open-Source VLMs |
| --- | --- | --- |
| Access & Cost | Paid API billed by usage, with hosting, maintenance, and support included. Costs rise with use and usually require a contract. | Free under open licenses, with no per-request charges. All hosting and compute costs fall on you. |
| Performance | Perform very well on many benchmarks, backed by complex architectures, large proprietary datasets, and features such as real-time video understanding. | Advancing quickly through community and research work, and rivaling proprietary models in certain areas. |
| Fine-Tuning | Mostly limited; custom training may cost more, and you may have to provide data to the vendor. | Fully customizable, with control over your proprietary data and full access to the architecture. |
| Deployment | Most are cloud-only via vendor API; very limited hybrid solutions. | On-premises, cloud, edge, or hybrid deployment with no vendor lock-in. |
| Data Control | Vendor-governed; the vendor may keep and analyze your data based on its policy and business needs. | You keep full control of data, which remains on your infrastructure. This is best when working in a regulated environment. |
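The Access & Cost row can be turned into a simple break-even calculation: usage-based API pricing scales with request volume, while self-hosting is closer to a fixed monthly cost. The sketch below is only an illustration. Every number in it (price per request, GPU hourly rate, GPU count, operations overhead) is a made-up placeholder, and the helper names are hypothetical; substitute your own quotes.

```python
def monthly_api_cost(requests, price_per_request):
    """Usage-based pricing: cost grows linearly with request volume."""
    return requests * price_per_request


def monthly_self_hosted_cost(gpu_hourly_rate, gpus, hours=730, ops_overhead=0.0):
    """Fixed cost of keeping GPUs running all month, plus operations overhead."""
    return gpu_hourly_rate * gpus * hours + ops_overhead


def break_even_requests(price_per_request, fixed_monthly_cost):
    """Monthly request volume at which the API bill equals the self-hosted bill."""
    return fixed_monthly_cost / price_per_request


# Hypothetical inputs, for illustration only.
price = 0.01  # dollars per API request
fixed = monthly_self_hosted_cost(gpu_hourly_rate=2.50, gpus=2, ops_overhead=1000.0)
threshold = break_even_requests(price, fixed)

print(f"self-hosted: ${fixed:,.0f}/month")
print(f"break-even at about {threshold:,.0f} requests/month")
```

Below the threshold the API is cheaper on these inputs; above it, self-hosting wins. The model ignores engineering time, autoscaling, and quality differences between models, all of which can shift the answer.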

Why Choose Dextralabs for VLMs?

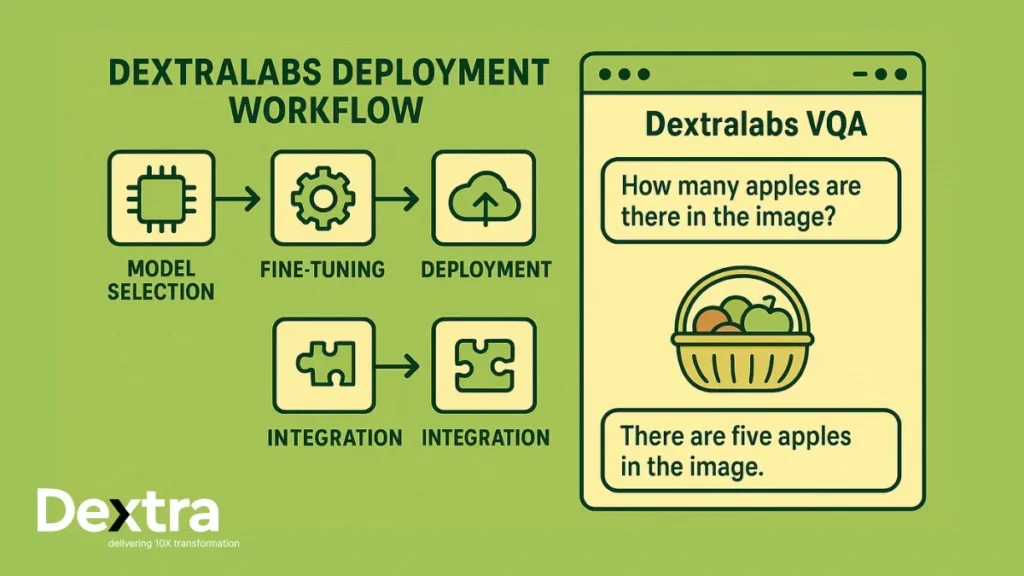

From healthcare to finance to robotics, logistics, and media, Dextralabs brings cutting-edge Vision Language Models into production systems. We provide the experience, tooling, and infrastructure to deploy, fine-tune, and iterate on VLMs at scale.

What Makes Us Different:

- Expert Deployment – Run anywhere: in any cloud, on premises, or on edge devices, with hardware acceleration (NVIDIA A100/H100, AMD, ARM). Everything is containerized with Docker and Kubernetes, with MLOps tooling to scale up and down easily.

- Fine-Tuning Pipelines – Instruction-tuning, few-shot learning, and privacy-preserving training tailored to your domain’s needs without large datasets.

- Vision Agent Workflows – Create VQA, image chat agents, and multimodal RAG systems for precise, contextually relevant responses.

- Performance Optimization – Measure and minimize latency, benchmark accuracy, and control hallucination rates with hybrid model validation layers.

- IDE & SDK Support – Python SDKs, REST APIs, and MLOps tools that let developers prototype and implement quickly.

Suggested Use-Cases by Model:

Picking the right Vision Language Model (VLM) is about more than performance metrics: it is about matching the strengths of the model to your own needs.

The following list breaks down the top VLMs of 2026 by practical application, with real-world examples.

1. Image Understanding & Visual Question Answering (VQA)

Recommended Models:

- Gemini 2.5 Pro – Outstanding reasoning across complex image scenes, making it perfect for technical inspections, product catalogs, and autonomous robot vision.

- Gemma 3 – Strong open-source option with OCR and multilingual support for document analysis, medical imaging, and content moderation.

- LLaMA 3.2 Vision – Extracts structured data from images very well, making it excellent for ID verification, invoice processing, and parsing legal documents.

Example Applications:

- Retail – Automated tagging of products and managing catalogs

- Healthcare – Anomaly detection for radiology scans

- Government – Passport and license OCR with comparison against databases

2. Video or Streaming Comprehension

Recommended Models:

- Tarsier2 – Built for long-form video QA, frame-level QA, and automated sports commentary generation.

- Eagle 2.5 – Handles high-resolution streams with real-time interpretation of scenes. Suitable for security monitoring and engaging with live events.

Example Applications:

- Media – Automatically turning live sporting events into stories, including highlight compilations

- Security – Real-time anomaly detection from CCTV feeds

- Education – Indexing lectures with AI-generated summaries linked to timestamped sections

3. Edge & Low-Latency Applications

Recommended Models:

- DeepSeek-VL2 – Efficient mixture-of-experts design. It’s a great fit for scientific fieldwork, industrial IoT devices, and portable diagnostic tools.

- Phi-4 Multimodal – Small but powerful, perfect for mobile AI assistants, AR glasses, and offline customer support kiosks.

- Pixtral – Lightweight VLM with strong visual reasoning capabilities for drones, smart cameras, and wearable devices.

Example Applications:

- Logistics – Package scanning and routing in warehouses without reliance on the cloud

- Field Services – Visual diagnostics for repair technicians

- Agriculture – On-device tracking of crop health in rural areas

4. Multilingual Agents & Global Workflows

Recommended Models:

- Qwen2.5-VL – Provides robust multilingual support with excellent capabilities in video understanding and localization. It’s an excellent fit for global e-commerce or multilingual customer care.

- Gemma 3 – Well suited to international document processing and cross-border compliance workflows, thanks to its support for dozens of languages and its strong OCR capability.

Examples of Applications:

- Travel – Multilingual reference guides for tourists

- E-commerce – Automatically localizing product images and associated marketing descriptions from market to market

- Finance – Processing global forms and contracts across multiple languages
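The four groupings above can be captured as a small lookup that shortlists models for a mix of requirements. The mapping mirrors the recommendations in this section; the category keys, the `shortlist` function, and the ranking rule (models matching more of the requested use cases come first) are illustrative assumptions rather than an established selection method.

```python
# Recommendations as listed in the four use-case groups above.
RECOMMENDATIONS = {
    "image_vqa": ["Gemini 2.5 Pro", "Gemma 3", "LLaMA 3.2 Vision"],
    "video": ["Tarsier2", "Eagle 2.5"],
    "edge": ["DeepSeek-VL2", "Phi-4 Multimodal", "Pixtral"],
    "multilingual": ["Qwen2.5-VL", "Gemma 3"],
}

# The only model this article describes as proprietary.
PROPRIETARY = {"Gemini 2.5 Pro"}


def shortlist(use_cases, open_only=False):
    """Rank models by how many of the requested use cases recommend them.

    Ties keep the order in which models were first encountered.
    Unknown use-case keys are ignored.
    """
    scores = {}
    for case in use_cases:
        for model in RECOMMENDATIONS.get(case, []):
            if open_only and model in PROPRIETARY:
                continue
            scores[model] = scores.get(model, 0) + 1
    return sorted(scores, key=lambda model: -scores[model])


print(shortlist(["image_vqa", "multilingual"]))
print(shortlist(["image_vqa", "multilingual"], open_only=True))
```

A shortlist like this is only a starting point; the final choice still depends on benchmarking against your own data and deployment constraints.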

Benchmarking & Community Views

The performance of the top Vision Language Models of 2026 is validated through rigorous testing on benchmarks such as:

- MMMU – Evaluates multimodal understanding across diverse areas of analysis

- MMT-Bench – Measures visual question answering and image-to-text reasoning accuracy

- Video-MME – Tests temporal understanding and video-based reasoning

- MathVista – Assesses visual and mathematics-based problem solving using diagrams

Interestingly, challenger models such as Tarsier2 and Eagle 2.5 are now outperforming GPT-4o and Gemini 2.5 Pro in video description and long-context reasoning.

Community discussion on Reddit and Hugging Face shows a growing preference for open-source models that deliver high performance while staying resource-efficient. In particular, developers find the benchmark reporting for these models more transparent and the models themselves more adaptable to real-world tasks.

At Dextralabs, we do more than hand you a leaderboard score: we help you understand the results, choose the right VLM solution, adapt the model to your domain, and tune it further to maximize ROI.

Final Thoughts & Recommendations

In 2026, the Vision Language Model space is more varied than ever. In terms of overall performance, Gemini 2.5 Pro stands out among proprietary options, while Qwen2.5-VL-72B and Gemma 3 are top performers in the open-source ecosystem. If the primary task is video understanding or retrieval-augmented generation (RAG), Tarsier2 and Eagle 2.5 offer impressive temporal reasoning and long-context abilities.

Where lightweight, resource-efficient deployments matter, DeepSeek-VL2, Phi-4, and Pixtral run well on edge devices. If your organization is looking for scalable workflows with documents, OCR, or a VQA solution, Gemma 3 and LLaMA 3.2 Vision are strong options for accuracy and flexibility.

In the end, it all comes down to your use case: video or OCR, cloud or edge, multilingual support or privacy requirements. At Dextralabs, we evaluate, deploy, and fine-tune the best VLM solution for your requirements, with a focus on performance, efficiency, and compliance after deployment.

How to Get Started with Dextralabs?

With dozens of Vision Language Models available in 2026, it can be challenging to know where to start. At Dextralabs, we make it easier. Our team works with you to understand your situation and establish what you want to accomplish, whether that is scaling document OCR, supporting real-time video insights, or providing multilingual VQA agents.

Once we understand your situation, we match you with the best model, fine-tune it to your domain, and deploy it in the environment of your choice (cloud, edge, or hybrid). After deployment, our team stays with you to optimize for speed, accuracy, and ROI, so your VLM investment keeps delivering returns over the long term.

Let us transform your applications and systems with next-generation vision intelligence. Partner with Dextralabs to deploy, configure, and scale the most relevant Vision-Language Models of 2026.

Your AI Vision, Delivered

Whether you need OCR, video understanding, or multilingual visual agents, Dextralabs ensures your VLM is production-ready and optimized for your domain.

Get a Free AI Consultancy