What is VLM technology, and why are VLM models becoming essential for modern AI applications? Vision language models (VLMs) are AI systems that combine computer vision with natural language processing, enabling machines to understand images and communicate about them in natural language.

At Dextra Labs, we specialize in developing advanced LLM solutions and have expanded our expertise into VLM software development. As a leading AI consulting firm, we recognize that vision models represent the next evolution in artificial intelligence applications. From custom VLM model development to integration consulting, we help businesses implement VLM AI capabilities.

This comprehensive guide examines VLM machine learning, visual LLM architectures, and practical applications. Whether you’re trying to understand what a VLM is or implementing solutions, we’ll cover technical foundations, training approaches including how to pretrain vision and large language models, and real-world examples of visual language applications across industries.

What Are Vision Language Models?

Vision language models are multimodal AI systems that process both visual and textual information simultaneously. In short, a VLM is AI that can see and understand images while communicating about them in natural language.

What are the Core Components of VLM Architecture?

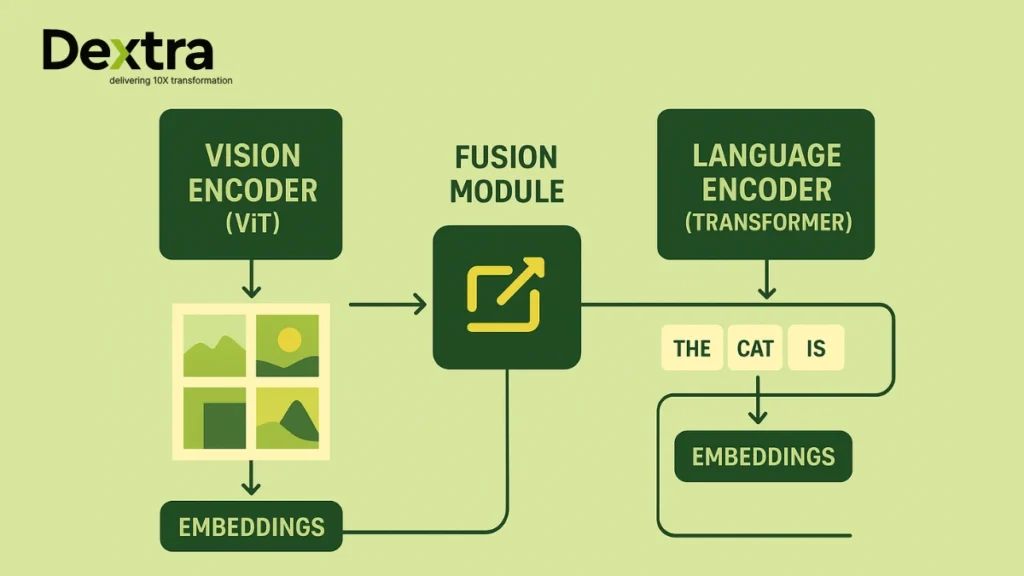

VLM models consist of two primary components working together:

1. Language Encoder: The language encoder captures semantic meaning and contextual associations between words and phrases, converting them into text embeddings for AI models to process. Most VLMs use transformer-based neural network architectures for this component; in generative VLMs it is usually a decoder-only large language model, connected to the vision encoder through a small projection layer.

2. Vision Encoder: The vision encoder extracts visual properties such as colors, shapes, and textures from images or videos, converting them into vector embeddings that machine learning models can process. Modern vision language models employ Vision Transformers (ViTs), which split images into fixed-size patches and treat them as a sequence, much like tokens in a language transformer.
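To make the patch idea concrete, here is a minimal pure-Python sketch of the first step of a ViT: cutting an image into non-overlapping square patches and flattening each one into a vector. It assumes the image is a nested list of shape height × width × channels whose dimensions are divisible by the patch size; `patchify` is a hypothetical helper, and a real ViT would then apply a learned linear projection and add position embeddings to each patch. For a 224×224 image with 16×16 patches, this yields (224/16)² = 196 patch tokens.

```python
from typing import List

# Hypothetical type alias: an image as nested lists, height x width x channels.
Image = List[List[List[float]]]


def patchify(image: Image, patch_size: int) -> List[List[float]]:
    """Split an image into non-overlapping patch_size x patch_size patches,
    scanning left to right, top to bottom, and flatten each patch into a
    single vector (all channels of each pixel, in row-major order)."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    patch.extend(image[row][col])  # every channel of this pixel
            patches.append(patch)
    return patches


if __name__ == "__main__":
    # Toy 4x4 RGB image whose pixel values encode (row, col, 0).
    toy = [[[float(r), float(c), 0.0] for c in range(4)] for r in range(4)]
    tokens = patchify(toy, patch_size=2)
    print(len(tokens), len(tokens[0]))  # 4 12  (four patches of 2*2*3 values)
```

The resulting list of patch vectors is what the transformer treats as its input sequence, the visual counterpart of a list of word tokens.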

How Does VLM Training Work?

Training VLM AI systems involves aligning and fusing information from both vision and language encoders so the model can correlate images with text. VLM machine learning training typically uses several approaches:

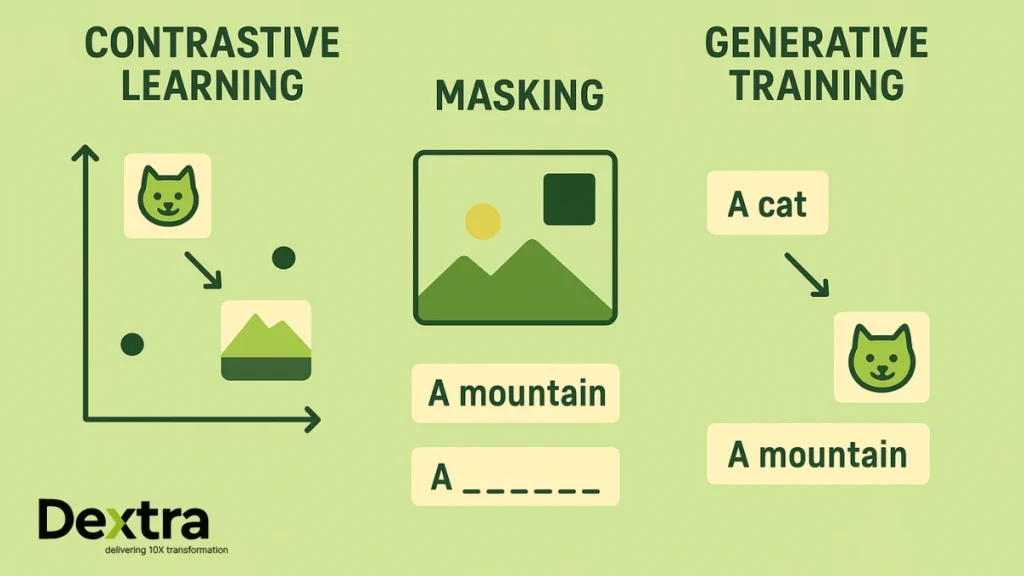

Contrastive Learning: Maps image and text embeddings into a shared space, training on image-text pairs so that matching pairs end up close together and mismatched pairs end up far apart. CLIP exemplifies this approach, having been trained on 400 million image-caption pairs.
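The contrastive objective can be sketched as a symmetric cross-entropy over a batch similarity matrix, in the style of CLIP. This is a simplified illustration under stated assumptions: embeddings are plain Python lists, every other caption in the batch serves as a negative, and the temperature is fixed at 0.07 (CLIP learns it, starting from that value). `contrastive_loss` is a hypothetical name, not a library API.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits: List[float], target: int) -> float:
    """Softmax cross-entropy for one row of logits, using the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(image_embs: List[Vector], text_embs: List[Vector],
                     temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: (image i, text i) is the positive pair, every other
    pairing in the batch is a negative. Assumed fixed temperature."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2
```

Lowering this loss requires each image to score its own caption above every other caption in the batch (and vice versa), which is why larger batches supply more negatives per step.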

Masking Techniques: Models learn to predict hidden parts of input text or images. In masked language modeling, VLMs fill in missing words given an image. In masked image modeling, they reconstruct hidden pixels given text captions.
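For masked language modeling, the data-side step is choosing which tokens to hide and recording what the model should recover. The sketch below assumes whitespace tokens, a `[MASK]` placeholder string, and a 15% masking rate borrowed from BERT-style training; it also skips BERT's refinement of sometimes substituting random or unchanged tokens. In a VLM, the corrupted caption would be paired with its image so the model can use visual evidence to fill the gaps. `mask_tokens` is a hypothetical helper.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"  # placeholder; real tokenizers define their own mask token


def mask_tokens(tokens: List[str], mask_prob: float = 0.15,
                seed: Optional[int] = None) -> Tuple[List[str], List[Optional[str]]]:
    """Replace a random subset of tokens with MASK.

    Returns the corrupted sequence plus per-position labels: the original
    token where a mask was applied, None where the loss should ignore it."""
    rng = random.Random(seed)
    corrupted: List[str] = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:
            corrupted.append(MASK)
            labels.append(tok)   # the model must predict this from image + context
        else:
            corrupted.append(tok)
            labels.append(None)  # not scored
    return corrupted, labels


if __name__ == "__main__":
    caption = "a dog catches a red frisbee".split()
    print(mask_tokens(caption, mask_prob=0.3, seed=7))
```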

Generative Training: Models learn to create new data, either generating images from text or producing text descriptions from images.
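The image-to-text direction of generative training is commonly framed as next-token prediction with teacher forcing: the model sees the image plus the caption so far and is scored on the next caption token. This sketch only builds those (context, target) examples; the `<img0>`-style image tokens and the `<s>`/`</s>` markers are hypothetical placeholders for whatever visual embeddings and special tokens a given model uses.

```python
from typing import List, Tuple

BOS, EOS = "<s>", "</s>"  # hypothetical start/end-of-caption markers


def captioning_examples(image_tokens: List[str],
                        caption: List[str]) -> List[Tuple[List[str], str]]:
    """Return (context, next_token) pairs for image-conditioned captioning.

    Each context is every image token followed by the caption tokens seen so
    far; the target is the caption token that comes next."""
    text = [BOS] + caption + [EOS]
    examples = []
    for i in range(1, len(text)):
        context = image_tokens + text[:i]
        examples.append((context, text[i]))
    return examples


if __name__ == "__main__":
    for context, target in captioning_examples(["<img0>", "<img1>"], ["a", "cat"]):
        print(context, "->", target)
```

In practice all of these predictions are computed in one forward pass using a causal attention mask, rather than as separate examples.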

Types of Vision Language Models: Popular VLM Architectures

Current State-of-the-Art Models:

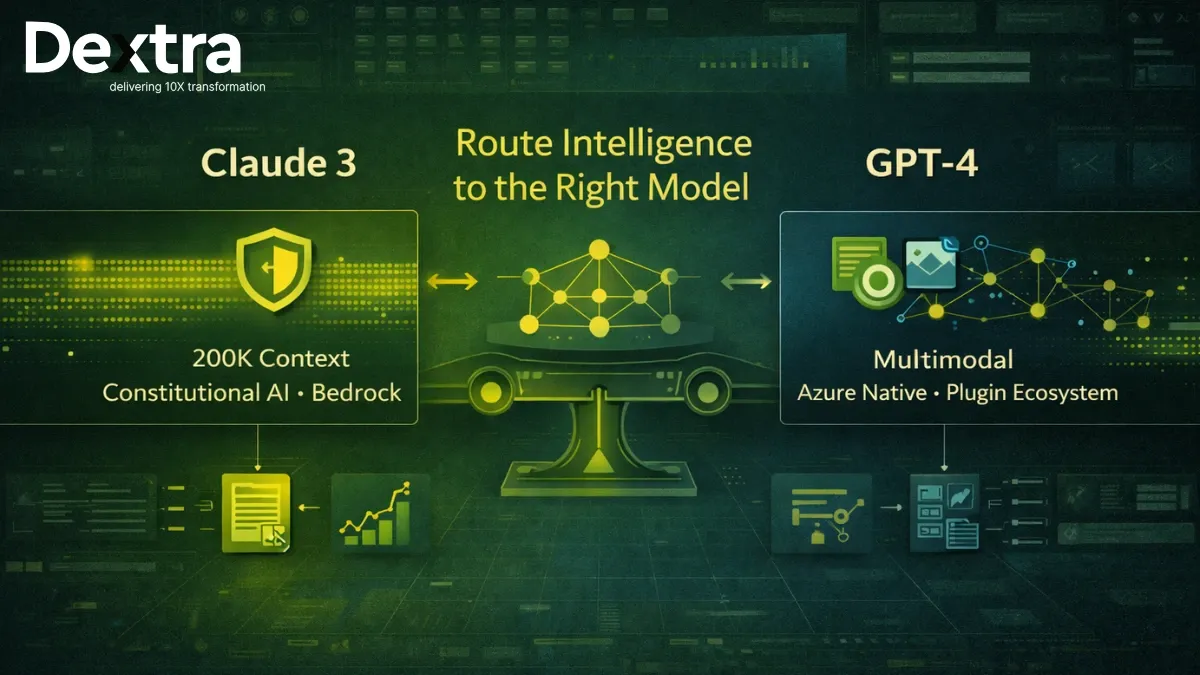

1. GPT-4o: OpenAI’s model trained end-to-end across audio, vision, and text data, accepting mixed media inputs and producing various output types.

2. Gemini 2.0 Flash: Google’s multimodal model supporting audio, image, text, and video inputs, with output limited to text at the time of writing.

3. LLaVA (Large Language and Vision Assistant): Open-source model combining the Vicuna LLM with a CLIP ViT vision encoder, connected through a linear projection layer.

4. CLIP (Contrastive Language-Image Pretraining): Demonstrates high zero-shot classification accuracy through contrastive learning on massive datasets.

5. Flamingo: Uses a CLIP-like vision encoder with the Chinchilla LLM, optimized for few-shot learning.
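LLaVA's connector (item 3 above) is simple enough to sketch directly: vision-encoder features for each patch are multiplied by one learned matrix so they land in the language model's embedding space, then placed in front of the text embeddings. The sketch below uses toy dimensions and random weights in place of trained ones; `project_visual_tokens` and `build_llm_input` are hypothetical helper names, and real implementations run this on tensors inside a deep learning framework.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: rows of a times columns of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def project_visual_tokens(patch_features: Matrix, weight: Matrix, bias: List[float]) -> Matrix:
    """Linear layer mapping (num_patches x d_vision) features to
    (num_patches x d_text), the language model's embedding width."""
    projected = matmul(patch_features, weight)
    return [[v + b for v, b in zip(row, bias)] for row in projected]


def build_llm_input(visual_tokens: Matrix, text_embeddings: Matrix) -> Matrix:
    """Prepend projected visual tokens so the LLM reads one combined sequence."""
    return visual_tokens + text_embeddings


if __name__ == "__main__":
    rng = random.Random(0)
    d_vision, d_text, num_patches = 6, 4, 3  # toy sizes, not real model dimensions
    feats = [[rng.gauss(0, 1) for _ in range(d_vision)] for _ in range(num_patches)]
    weight = [[rng.gauss(0, 0.1) for _ in range(d_text)] for _ in range(d_vision)]
    bias = [0.0] * d_text
    text = [[0.0] * d_text for _ in range(5)]  # five placeholder text embeddings
    seq = build_llm_input(project_visual_tokens(feats, weight, bias), text)
    print(len(seq), len(seq[0]))  # 8 4
```

Because only this small projection needs to learn the mapping at first, the pre-trained vision encoder and language model can be reused largely as-is.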

Training Methodologies Explained

PrefixLM Approach: Models like SimVLM predict the continuation of a text sequence given an image and a text prefix, using Vision Transformers to divide images into patch sequences.
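The key difference between PrefixLM and plain left-to-right language modeling is the attention mask. A minimal sketch, assuming the prefix (image patches plus any text prefix) occupies the first `prefix_len` positions: prefix positions attend to one another bidirectionally, while later positions attend causally. `prefix_lm_mask` is a hypothetical helper.

```python
from typing import List


def prefix_lm_mask(prefix_len: int, total_len: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j.

    Inside the prefix, attention is bidirectional over the whole prefix;
    after it, each position sees the prefix plus earlier tokens only."""
    mask = []
    for i in range(total_len):
        if i < prefix_len:
            row = [j < prefix_len for j in range(total_len)]
        else:
            row = [j <= i for j in range(total_len)]
        mask.append(row)
    return mask


if __name__ == "__main__":
    for row in prefix_lm_mask(prefix_len=2, total_len=4):
        print("".join("1" if allowed else "0" for allowed in row))
    # prints 1100, 1100, 1110, 1111 on separate lines
```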

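Frozen PrefixLM: Reuses a pre-trained language model, keeping its weights frozen and updating only the image encoder's parameters, as in the Frozen model. Flamingo follows a related strategy, freezing both its vision encoder and its language model and training only newly added layers that connect them.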

Knowledge Distillation: Transfers knowledge from large teacher models to smaller student models, enabling efficient VLM software deployment.
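A common form of the distillation objective compares temperature-softened output distributions from teacher and student. The sketch below computes KL(teacher ‖ student) at temperature T and scales it by T², the classic soft-target formulation; the default temperature of 2.0 is an arbitrary illustrative choice, and in practice this term is often mixed with an ordinary loss on ground-truth labels. Function names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2 so
    gradient magnitudes stay comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * (math.log(p) - math.log(q))
             for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2
```

The loss is zero only when the student reproduces the teacher's full softened distribution, not just its top prediction, which is what lets a smaller model absorb the teacher's learned similarities between outputs.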

Vision NLP: Connecting Visual and Language Processing

Vision NLP combines visual understanding with text processing capabilities. Unlike traditional NLP that handles only text, vision NLP integrates visual comprehension through specialized techniques.

Language Models Components in VLMs

Breaking down the language model components within a VLM, we see:

Tokenization Layers: Convert text into processable units for the model

Embedding Layers: Create numerical representations capturing semantic meaning

Attention Mechanisms: Allow models to focus on relevant parts of both images and text

Generation Layers: Produce coherent text outputs incorporating visual and textual information
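The tokenization and embedding steps can be sketched in a few lines. This toy version assumes a whitespace word-level vocabulary with an `[UNK]` id for unseen words; production VLMs use subword tokenizers such as BPE or SentencePiece, and the embedding table would be learned rather than hand-filled. All function names here are hypothetical.

```python
from typing import Dict, List


def build_vocab(corpus: List[str]) -> Dict[str, int]:
    """Assign an integer id to each lowercase word; id 0 is reserved for unknowns."""
    vocab = {"[UNK]": 0}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    """Map each word to its id, falling back to the [UNK] id."""
    return [vocab.get(word, 0) for word in text.lower().split()]


def embed(token_ids: List[int], table: List[List[float]]) -> List[List[float]]:
    """Embedding layer: look up one vector per token id."""
    return [table[i] for i in token_ids]


if __name__ == "__main__":
    vocab = build_vocab(["a cat on a mat"])
    ids = tokenize("A dog on a mat", vocab)
    print(ids)  # [1, 0, 3, 1, 4]  ("dog" is unknown)
```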

Cross-Modal Integration

Visual LLM systems use cross-attention mechanisms to connect visual and textual information. These mechanisms enable models to understand relationships between what they see and the language used to describe it.
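Cross-attention can be sketched as scaled dot-product attention in which the queries come from text positions and the keys and values come from image patches. This is a single-head illustration that assumes the queries, keys, and values have already been produced; it omits the learned projection matrices, multiple heads, and batching a real model would use. `cross_attention` is a hypothetical name.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_queries: Matrix, image_keys: Matrix,
                    image_values: Matrix) -> Tuple[Matrix, Matrix]:
    """For each text query, weight every image patch by softmax(q . k / sqrt(d))
    and return the weighted average of patch values, plus the weights."""
    d = len(image_keys[0])
    outputs, weights = [], []
    for q in text_queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in image_keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wi * v[c] for wi, v in zip(w, image_values))
                        for c in range(len(image_values[0]))])
    return outputs, weights
```

The returned weights show which image patches each word "looked at", which is how the model links a phrase in the text to a region of the image.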

Practical Applications: Visual Language Examples

Examples of visual language technology demonstrate practical value across industries:

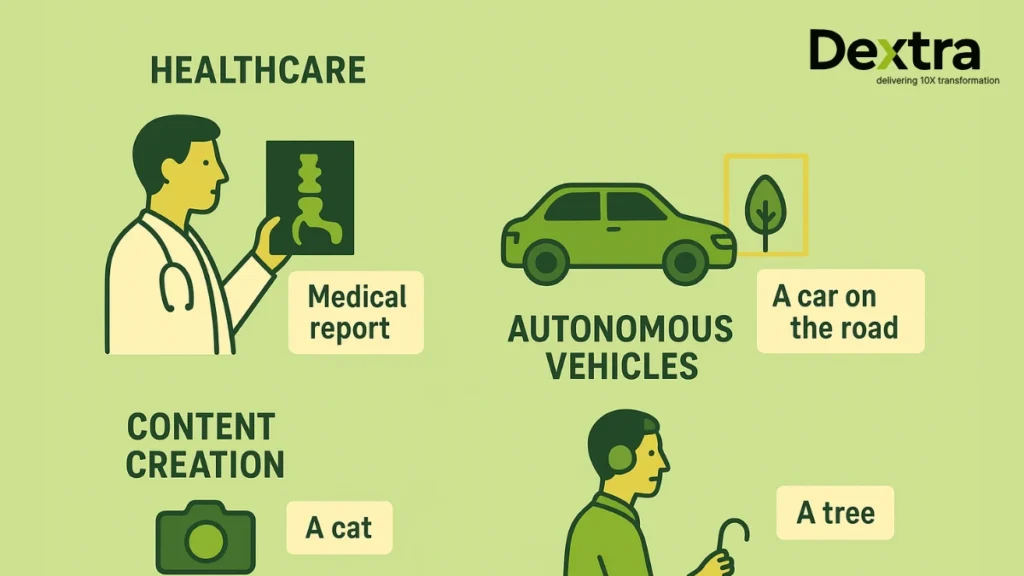

1. Healthcare Applications

- Medical image analysis with natural language reports

- Radiology assistance for X-ray and MRI interpretation

- Automated documentation from medical imagery

2. Autonomous Systems

- Vehicle perception with natural language reasoning

- Robot navigation using visual and linguistic instructions

- Real-time environment description for navigation systems

3. Content Creation

- Automated image captioning for social media

- Visual storytelling through image analysis

- Accessibility features for visually impaired users

4. Business Operations

- Product catalog automation from images

- Quality control with descriptive reporting

- Inventory management through visual recognition

Visual Question Answering

Visual question answering (VQA) is a prime example of visual language technology. VLMs excel at answering questions about images, from simple object identification to complex reasoning about spatial relationships and abstract concepts.

Large Language Model Image Processing

The image capabilities of large language models extend beyond basic recognition. Modern VLMs can:

A. Spatial Reasoning: Understand object positions and relationships within images

B. Context Awareness: Interpret images within broader conversational contexts

C. Abstract Concept Recognition: Identify emotions, themes, and complex ideas from visual content

D. Multi-step Reasoning: Draw logical conclusions combining visual and textual information

Technical Implementation: Training Vision Language Models

Pretraining Vision and Large Language Models

To pretrain vision and large language models (LLMs), developers work with massive datasets and specialized frameworks. Training VLM models from scratch requires significant computational resources, so many implementations use pre-trained components.

Key Training Datasets:

- LAION-5B: Over 5 billion image-text pairs for large-scale pretraining

- COCO: Over 330,000 images, more than 200,000 of them labeled, for captioning and object detection

- ImageNet: Millions of annotated images for visual recognition tasks

- VQA Dataset: Over 200,000 images with multiple questions for fine-tuning

Development Considerations

VLM software development involves several technical challenges:

Computational Requirements: Vision and language models are complex individually; combining them increases resource needs significantly.

Data Quality: High-quality image-text pairs are essential for effective training and model performance.

Architecture Design: Balancing vision and language components requires careful engineering to achieve optimal performance.

Evaluation and Benchmarking

VLM Assessment Methods

Vision models require specialized evaluation approaches different from traditional AI benchmarks:

VQA (Visual Question Answering): One of the earliest benchmarks with open-ended questions about images

MMMU (Massive Multi-discipline Multimodal Understanding): College-level multimodal questions spanning 30 subjects across six disciplines

MathVista: Specialized benchmark for visual mathematical reasoning capabilities

OCRBench: Focuses on optical character recognition abilities within VLMs
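For the VQA benchmark above, a predicted answer is scored against ten human annotations, commonly with the rule accuracy = min(#matching annotators / 3, 1), averaged over every subset of nine annotators. A minimal sketch of that scoring follows; it applies only lowercase-and-strip normalization, whereas the official evaluation script also normalizes punctuation, articles, and number words. Function names are hypothetical.

```python
from itertools import combinations
from typing import List


def _normalize(answer: str) -> str:
    # Simplified normalization only; the official script does much more.
    return answer.strip().lower()


def vqa_accuracy_simple(prediction: str, human_answers: List[str]) -> float:
    """min(number of annotators who gave the predicted answer / 3, 1)."""
    pred = _normalize(prediction)
    matches = sum(1 for a in human_answers if _normalize(a) == pred)
    return min(matches / 3.0, 1.0)


def vqa_accuracy(prediction: str, human_answers: List[str]) -> float:
    """Average the simple score over every leave-one-out subset of annotators
    (10 choose 9 = 10 subsets for the usual ten answers)."""
    if len(human_answers) < 2:
        raise ValueError("need at least two human answers")
    subsets = list(combinations(human_answers, len(human_answers) - 1))
    return sum(vqa_accuracy_simple(prediction, list(s)) for s in subsets) / len(subsets)


if __name__ == "__main__":
    answers = ["yes", "yes"] + ["no"] * 8
    print(round(vqa_accuracy("yes", answers), 2))  # 0.6
```

Averaging over subsets means an answer given by two of ten annotators earns 0.6 rather than the 0.67 the simple rule would give, so partial credit reflects how many humans agreed.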

Evaluation Tools

VLMEvalKit: Open-source toolkit enabling one-command evaluation of vision language models

LMMs-Eval: Command-line evaluation suite for comprehensive model assessment

OpenVLM Leaderboard: Independent ranking system for open-source VLM AI models

Challenges and Limitations

Current VLM Limitations

Model Complexity: Combining vision and language increases computational requirements and deployment challenges.

Dataset Bias: VLMs can inherit biases from training data, requiring careful dataset curation and ongoing monitoring.

Generalization Issues: Models may struggle with novel concepts or atypical image-text combinations not seen during training.

Hallucination Problems: Vision language models can generate confident but incorrect descriptions, requiring validation mechanisms.
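One simple validation mechanism for object hallucination compares the objects a model mentions with the objects known to be in the image, in the spirit of CHAIR-style captioning metrics. The sketch assumes an upstream step (hypothetical here) has already extracted object names from the generated text and supplied a ground-truth or detector-verified object set; it counts unique object names rather than individual mentions. `hallucination_rate` is a hypothetical helper.

```python
from typing import Iterable


def hallucination_rate(mentioned_objects: Iterable[str],
                       grounded_objects: Iterable[str]) -> float:
    """Fraction of unique objects mentioned in a generated description that
    are absent from the image's ground-truth or detector-verified objects."""
    mentioned = {o.lower() for o in mentioned_objects}
    if not mentioned:
        return 0.0
    grounded = {o.lower() for o in grounded_objects}
    return len(mentioned - grounded) / len(mentioned)


if __name__ == "__main__":
    rate = hallucination_rate(["dog", "frisbee", "car"], {"dog", "frisbee", "grass"})
    print(f"{rate:.2f}")  # 0.33  ("car" is not in the image)
```

A deployment could flag any output whose rate exceeds a threshold for human review; the threshold itself is an application-specific choice.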

Technical Challenges

Compositional Understanding: VLMs sometimes fail to understand novel combinations of familiar concepts.

Scale Requirements: Effective VLM machine learning requires massive datasets and computational resources.

Evaluation Complexity: Assessing multimodal performance requires sophisticated metrics beyond traditional accuracy measures.

Industry Applications and Use Cases

1. Healthcare and Medical Imaging

VLM AI systems assist medical professionals by analyzing diagnostic images and generating natural language reports. Applications include radiology assistance, pathology analysis, and automated medical documentation.

2. Autonomous Vehicles and Robotics

Vision models enable vehicles and robots to understand their environment while communicating about it. This includes navigation assistance, obstacle identification, and interaction with human operators.

3. Content Moderation and Safety

VLMs help platforms automatically identify and describe problematic content, enabling more nuanced content moderation policies that consider both visual and contextual information.

4. Accessibility Technology

Visual LLM systems create descriptions for visually impaired users, enabling better access to visual content across digital platforms and real-world environments.

Future Directions and Trends

Emerging Research Areas

Improved Reasoning: Research focuses on better logical inference from visual information combined with language understanding.

Efficiency Optimization: Development of more efficient VLM software architectures for broader deployment.

Specialized Applications: Domain-specific VLMs for particular industries like healthcare, manufacturing, and education.

Technical Advancements

Better Integration: Improved methods for combining visual and linguistic representations.

Real-time Processing: Faster inference capabilities for practical applications.

Enhanced Multimodality: Integration with additional data types like audio and sensor information.

Getting Started with VLM Implementation

Choosing the Right VLM Model

When selecting VLM models for implementation:

Define Requirements: Identify specific vision-language tasks needed for your application

Assess Resources: Consider computational requirements and available infrastructure

Evaluate Performance: Test models on relevant benchmarks and use cases

Consider Integration: Assess how easily models integrate with existing systems

Implementation Best Practices

Data Preparation: Ensure high-quality image-text pairs for training or fine-tuning

Performance Monitoring: Implement comprehensive evaluation on relevant metrics

Bias Detection: Monitor for and address potential biases in model outputs

Continuous Improvement: Plan for ongoing model updates and performance optimization
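For the Bias Detection practice above, one starting point is to slice evaluation results by a group attribute and compare accuracy across slices. The group labels and any alert threshold are assumptions you would define for your own application; this sketch only reports per-group accuracy and the largest gap between groups. Function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def accuracy_by_group(records: List[Tuple[str, bool]]) -> Dict[str, float]:
    """records: (group_label, prediction_was_correct) pairs from an eval set."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += int(ok)
    return {g: correct[g] / totals[g] for g in totals}


def max_accuracy_gap(records: List[Tuple[str, bool]]) -> float:
    """Difference between the best- and worst-performing groups."""
    acc = accuracy_by_group(records)
    return max(acc.values()) - min(acc.values())


if __name__ == "__main__":
    recs = [("group_a", True), ("group_a", True), ("group_b", True), ("group_b", False)]
    print(accuracy_by_group(recs), max_accuracy_gap(recs))
```

Tracking this gap over time, alongside overall accuracy, supports the continuous-improvement loop: a model update that raises average accuracy while widening the gap deserves a closer look.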

Conclusion

Vision language models represent an important advancement in artificial intelligence, combining visual understanding with natural language processing in ways that create practical value across industries. From VLM AI applications in healthcare to autonomous systems, these models are solving real-world problems by bridging the gap between seeing and understanding.

At Dextra Labs, we continue to advance VLM software development, helping organizations implement these technologies effectively. As vision models become more capable and accessible, they will change how we interact with visual information in our digital world.

Whether you’re exploring what a VLM is for research purposes or implementing VLM model solutions for business applications, understanding these systems provides the foundation for using their capabilities. The integration of large language model image processing with natural language understanding creates new possibilities for human-computer interaction and automated reasoning about our visual world.

The field continues to advance rapidly, with improvements in efficiency, accuracy, and applicability. As methods for pretraining vision and large language models become more refined, we can expect even more sophisticated examples of visual language applications across diverse domains.