Enterprise AI is going through a major shift. A few years ago, most organisations experimented with a single large language model or isolated automation tools. Today, that approach no longer works. Companies are running dozens, sometimes hundreds, of AI agents, models, and workflows across departments. These systems must talk to each other, share context, connect to data sources, and operate under tight security constraints.

This is why the enterprise AI stack is being redesigned in 2025. The key drivers behind this change include:

- Move from standalone LLMs to interconnected AI ecosystems

Enterprises now expect AI to work across finance, operations, HR, customer service, supply chain, audits, compliance, and analytics without fragmentation.

- Growing focus on interoperability and governance

AI systems are expected to follow enterprise-grade access controls, traceability, and repeatable workflows.

- Market momentum around open standards like MCP and A2A

These standards are being adopted across cloud platforms, automation tools, and model providers, shaping how enterprises structure their AI stack for the long term.

- Demand for hybrid systems combining open standards and domain-specific models

Enterprises want flexibility without losing accuracy or control.

Amid this shift, Dextralabs is helping organisations build AI stacks that are secure, scalable, and ready for cross-agent collaboration. Our focus is on practical architectures, standard-compliant integrations, and domain-specific model strategies, all built for real business environments, not just lab demos.

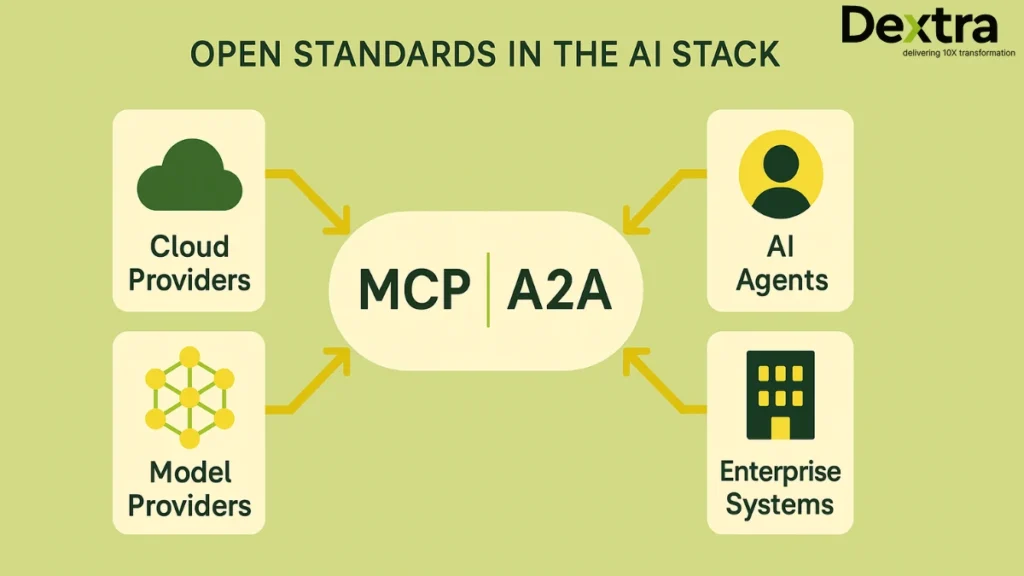

Understanding the New Open Standards: MCP and A2A

Open standards are becoming the central foundation of enterprise AI. They bring consistency, reduce integration overhead, and help organisations avoid vendor lock-in. Two major standards are shaping the market: MCP and A2A.

What Is MCP?

MCP (Model Context Protocol) is a standardised way for AI agents to access tools, APIs, data sources, and enterprise systems. Before MCP, every agent needed custom code to connect with each system, creating repetitive and expensive engineering work.

Key characteristics of MCP:

- Universal gateway for agents

Instead of building custom connectors for ERP, CRM, HRMS, databases, or internal APIs, MCP provides a common interface.

- Origin and purpose

Introduced by Anthropic in late 2024 to reduce the complexity of connecting numerous agents and systems, especially in large enterprise environments.

- Primary use cases

  - Integrating enterprise tools

  - Secure connection to internal systems

  - Orchestrating RAG pipelines

  - Workflow automation

- Why MCP matters for enterprises

It reduces integration cost, improves maintainability, and creates consistency across all AI agents.

MCP acts as a stable bridge between AI and enterprise systems, something companies have needed for years.
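To make the "common interface" idea concrete: MCP messages are JSON-RPC 2.0, and an agent invokes a tool with a `tools/call` request that carries the tool name and its arguments. The sketch below only builds and prints such a request. The tool name `crm_lookup_customer` and its arguments are hypothetical, and a real deployment would send the message through an MCP client library and transport rather than assembling it by hand.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP-style `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool exposed by a hypothetical CRM-facing MCP server.
request = build_tool_call("crm_lookup_customer", {"customer_id": "C-1042"})
print(json.dumps(request, indent=2))
```

The point of the shape is that every agent uses the same envelope for every system, so only the tool name and arguments change from one integration to the next.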

What Is A2A?

A2A (the Agent2Agent protocol) is a standard introduced by Google and supported by dozens of partners. Its purpose is to allow agents from different vendors and systems to discover each other and collaborate.

Key features:

- Discovery and communication between agents

Agents can exchange tasks, share capabilities, and coordinate work.

- Growing ecosystem

More than 50 partners support the standard, spreading it across automation tools, cloud providers, and AI platforms.

- Horizontal interoperability

A2A connects agents across departments and systems so they can work like a unified workforce.

- Why A2A matters

Enterprises can mix agents from different vendors and still maintain secure collaboration. This prevents dependence on a single AI provider.

A2A is becoming a central part of multi-agent architectures that need cross-department workflows.
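In A2A, agents advertise themselves through an agent card, a JSON document describing the agent and its skills. As a rough illustration of discovery, the sketch below uses a heavily simplified card and an in-memory registry. The `AgentCard` fields, the `AgentRegistry` class, and the example URLs are assumptions made for illustration, not the full A2A schema or an official client.

```python
from dataclasses import dataclass, field


@dataclass
class AgentCard:
    """Simplified stand-in for an A2A agent card (not the full schema)."""
    name: str
    url: str
    skills: set[str] = field(default_factory=set)


class AgentRegistry:
    """In-memory directory; a real system would fetch cards over HTTP."""

    def __init__(self) -> None:
        self._cards: list[AgentCard] = []

    def register(self, card: AgentCard) -> None:
        self._cards.append(card)

    def find_by_skill(self, skill: str) -> list[AgentCard]:
        # Return every registered agent that advertises the requested skill.
        return [card for card in self._cards if skill in card.skills]


registry = AgentRegistry()
registry.register(AgentCard("invoice-agent", "https://agents.example.com/invoice", {"invoice_extraction"}))
registry.register(AgentCard("risk-agent", "https://agents.example.com/risk", {"risk_scoring", "fraud_check"}))

matches = registry.find_by_skill("risk_scoring")
print([card.name for card in matches])  # ['risk-agent']
```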

A2A vs MCP: Complementary Roles, Not Competing Standards

A2A and MCP serve different layers of the AI stack:

- MCP = Agent ↔ System (vertical integration)

This ensures safe, stable access to enterprise systems.

- A2A = Agent ↔ Agent (horizontal collaboration)

This connects agents so they can coordinate work.

Together, they form the backbone of any modern enterprise AI stack.

Dextralabs helps enterprises determine the right way to combine both standards, depending on:

- the number of systems involved

- regulatory requirements

- volume of automated tasks

- cross-department collaboration requirements

These standards are not an optional add-on; they are becoming the foundational layer of the enterprise AI ecosystem.

Why Open Standards Alone Are Not Enough

Open standards provide structure, but they cannot solve every problem on their own. AI models, the core of any intelligent system, still require careful selection and tuning.

Limits of General-Purpose AI Models

Large general-purpose models are impressive, but they have weaknesses in enterprise settings:

- Broad capability, limited precision

They perform well across many tasks but may lose accuracy in industry-specific scenarios.

- Higher error risk in regulated sectors

Examples include:

  - credit risk decisions

  - healthcare notes

  - supply chain forecasting

  - industrial control tasks

- Latency concerns

Running large models for high-volume inference (e.g., millions of daily transactions) creates slowdowns.

- Cost inefficiency

General-purpose models usually require more compute and lead to higher overall operational cost.

Enterprises increasingly realise that not every workflow needs—or benefits from—a frontier model.

The Case for Domain-Specific Models

Domain-specific models address most limitations of general-purpose ones.

Advantages include:

- Higher accuracy for specialised tasks

Models trained on sector-specific data can outperform general-purpose models in areas like fraud detection, clinical summarisation, or logistics optimisation.

- Faster response times

Smaller, focused models reduce inference time and run efficiently on enterprise infrastructure.

- Lower operating costs

Because they are smaller, these models use less compute, which is especially important for high-frequency workloads.

- Better regulatory alignment

Industries such as banking or healthcare often need strict control over data handling and model behaviour. Domain models make this easier.

- Competitive advantage

Enterprises can use private datasets, custom reward models, and feedback loops to create models that outperform public alternatives.

Dextralabs helps organisations decide whether a task needs a small model, a medium-sized model, or a foundation model based on performance, cost, and regulatory requirements.
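One way to picture that decision is as a small set of routing rules over a task profile. The sketch below is purely illustrative: the `TaskProfile` fields, the volume threshold, and the rules themselves are assumptions, not Dextralabs' actual selection method, and a real assessment would also weigh measured accuracy and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskProfile:
    needs_broad_reasoning: bool  # open-ended, cross-domain reasoning
    daily_requests: int          # expected inference volume
    regulated: bool              # subject to sector regulation


def choose_model_tier(task: TaskProfile, high_volume_threshold: int = 100_000) -> str:
    """Return 'small', 'medium', or 'foundation' using illustrative rules."""
    if task.needs_broad_reasoning and not task.regulated:
        return "foundation"  # general reasoning with no regulatory constraint
    if task.daily_requests >= high_volume_threshold:
        return "small"       # high-volume inference favours a compact model
    return "medium"          # everything else, including regulated reasoning tasks


fraud_scoring = TaskProfile(needs_broad_reasoning=False, daily_requests=2_000_000, regulated=True)
strategy_memo = TaskProfile(needs_broad_reasoning=True, daily_requests=200, regulated=False)
clinical_summary = TaskProfile(needs_broad_reasoning=True, daily_requests=5_000, regulated=True)

for task in (fraud_scoring, strategy_memo, clinical_summary):
    print(choose_model_tier(task))
```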

The 2025 Enterprise AI Stack: A Hybrid and Modular Architecture

The modern AI stack combines open standards with different types of models and agents. It must support integration, observability, security, and cross-system automation.

Below is a breakdown of the key layers.

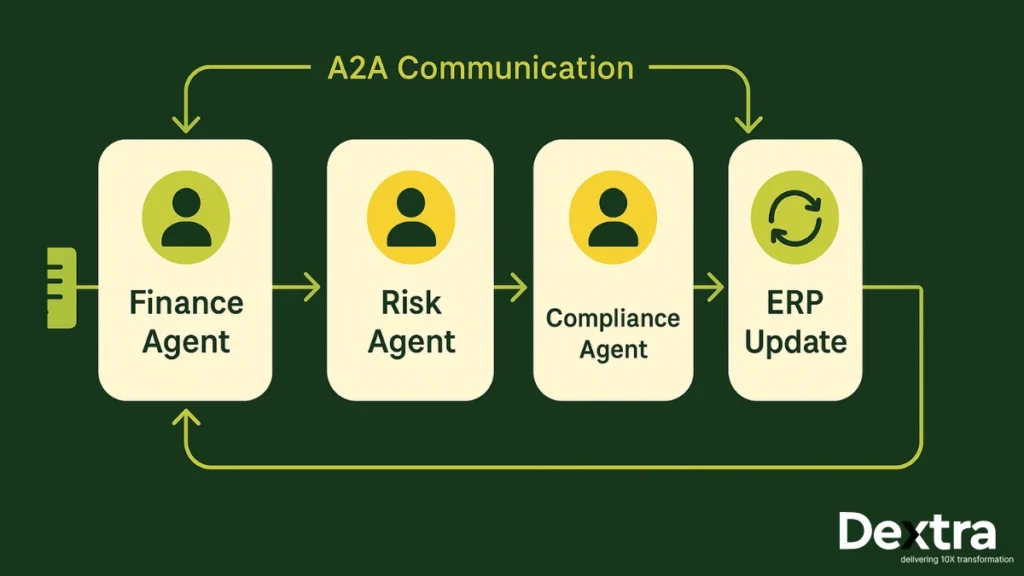

Orchestration Layer: A2A-Driven Multi-Agent Collaboration

The orchestration layer uses A2A to create communication between agents across departments.

Examples of cross-agent collaboration:

- Finance agents passing invoice details to risk agents

- HR agents coordinating onboarding tasks with IT agents

- Operations agents syncing with supply chain agents

- Procurement agents connecting with vendor management agents

Capabilities include:

- automated agent discovery

- secure task delegation

- routing tasks based on agent skills

- multi-step workflow execution

This is the layer where most multi-agent behaviour becomes visible.
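A multi-step workflow of this kind can be sketched as a task passed from agent to agent, with a trail of who handled it. In the example below each agent is just a local Python function; the agent names, the invoice fields, and the review rule are hypothetical. In an A2A deployment each step would be a remote task sent to another agent rather than a function call.

```python
from typing import Callable

# Each "agent" is modelled as a plain function from a task payload to a result.
Agent = Callable[[dict], dict]


def finance_agent(task: dict) -> dict:
    # Hypothetical step: attach the invoice details needed downstream.
    return {**task, "amount": 18_500, "vendor": "Acme Ltd"}


def risk_agent(task: dict) -> dict:
    # Hypothetical rule: flag invoices above a fixed amount for review.
    return {**task, "needs_review": task["amount"] > 10_000}


def run_workflow(task: dict, steps: list[tuple[str, Agent]]) -> tuple[dict, list[str]]:
    """Pass the task through each agent in order, recording who handled it."""
    trail = []
    for name, agent in steps:
        task = agent(task)
        trail.append(name)
    return task, trail


result, trail = run_workflow(
    {"invoice_id": "INV-001"},
    [("finance", finance_agent), ("risk", risk_agent)],
)
print(result["needs_review"], trail)  # True ['finance', 'risk']
```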

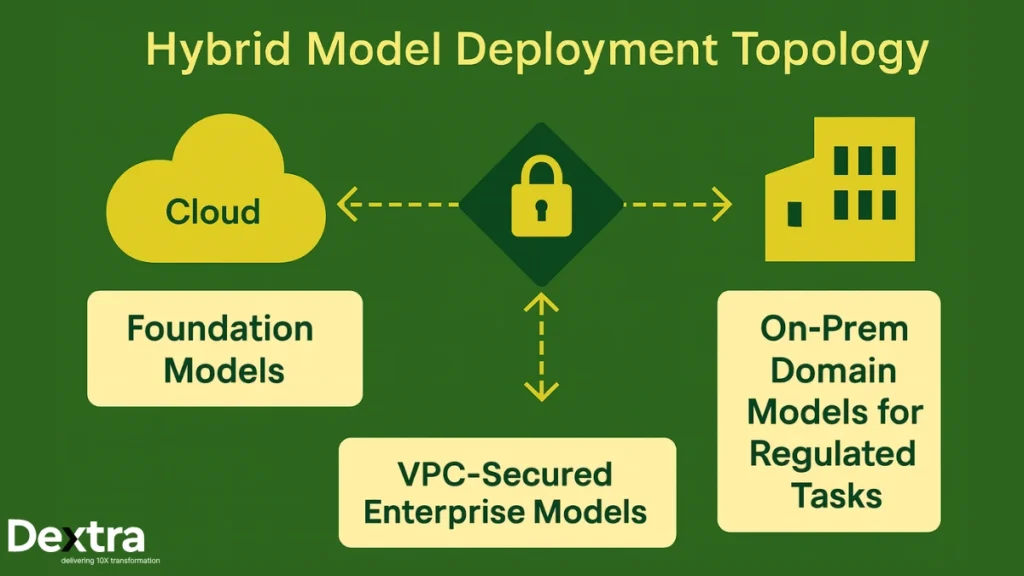

Model and Agent Layer: Blending Foundation & Vertical Models

At this layer, enterprises mix:

- Foundation models for broad reasoning

- Vertical models for specialised tasks

- Task-specific agents designed around business functions

Deployment patterns include:

- API-based access

- VPC-secured models

- On-prem deployment for sensitive workflows

Dextralabs uses proven architecture patterns to help organisations build a model ecosystem that balances performance, accuracy, and compliance.

Integration Layer: MCP Servers as the Enterprise “AI Bus”

The integration layer connects AI agents to systems. MCP servers act as a central adaptor for:

- APIs

- Databases

- ERP systems

- CRM systems

- Manufacturing execution systems

- Core banking systems

- E-commerce engines

This approach avoids custom integrations and makes enterprise IT far easier to maintain.

Common MCP+AI patterns include:

- MCP + RAG for controlled retrieval

- MCP + business apps for actions like ticket creation or transaction review

- MCP + monitoring for agent-level observability

MCP ensures every system connection is secure, logged, and consistent.
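The "logged and consistent" property comes from routing every tool call through one entry point. The sketch below is a conceptual stand-in for that idea, not the MCP SDK: the `ToolGateway` class, the `create_ticket` tool, and the agent id are all hypothetical.

```python
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("tool_gateway")


class ToolGateway:
    """Single entry point for tool calls, so each call is recorded the same way."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}
        self.call_log: list[dict] = []

    def register(self, tool: str, handler: Callable[..., Any]) -> None:
        self._tools[tool] = handler

    def call(self, agent_id: str, tool: str, arguments: dict[str, Any]) -> Any:
        if tool not in self._tools:
            raise KeyError(f"unknown tool: {tool}")
        # Record the call before executing it, so failed calls are logged too.
        log.info("agent=%s tool=%s args=%s", agent_id, tool, arguments)
        self.call_log.append({"agent": agent_id, "tool": tool, "arguments": arguments})
        return self._tools[tool](**arguments)


gateway = ToolGateway()
# Hypothetical ticketing tool; a real handler would call the ticketing system's API.
gateway.register("create_ticket", lambda title: {"ticket_id": "T-1", "title": title})

ticket = gateway.call("support-agent", "create_ticket", {"title": "Reset VPN access"})
print(ticket)
```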

Data & Infrastructure Layer

AI systems rely heavily on reliable data pipelines. This layer includes:

- Real-time data pipelines for transaction streams

- Feature stores for predictive features

- Event-driven architectures for operational triggers

- Vector databases for semantic search and retrieval

- Observability and lineage systems to track data usage

Dextralabs builds data foundations that support both agents and humans with consistent, trustworthy information.
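To illustrate what a vector database does for semantic retrieval, the sketch below ranks documents by cosine similarity to a query vector. The three-dimensional "embeddings" and document ids are toy values chosen for illustration; real embeddings come from an embedding model, and a production vector database would use an approximate index rather than a full scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        index,
        key=lambda doc_id: cosine_similarity(query, index[doc_id]),
        reverse=True,
    )
    return ranked[:k]


# Toy vectors standing in for document embeddings.
index = {
    "refund_policy": [0.9, 0.1, 0.0],
    "onboarding_guide": [0.0, 0.8, 0.6],
    "returns_faq": [0.8, 0.3, 0.1],
}
print(top_k([1.0, 0.2, 0.0], index))  # ['refund_policy', 'returns_faq']
```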

Governance & Security Layer

Enterprises cannot adopt AI without strict governance.

Key components include:

- role-based access control

- audit logs

- encryption in transit and at rest

- model safety filters

- secure agent sandboxes

- incident response workflows

Because both MCP and A2A include secure interfaces, they help enterprises maintain compliance across all AI interactions.
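Two of the components above, role-based access control and audit logs, fit naturally together: every permission check is also an audit event. The sketch below shows that pairing under simple assumptions. The roles, permission strings, and in-memory log are hypothetical; in practice the policy would live in an IAM system and the log in tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "billing_agent": {"invoice:read", "invoice:create"},
    "scheduling_agent": {"calendar:read", "calendar:write"},
}

audit_log: list[dict] = []


def authorize(role: str, permission: str) -> bool:
    """Check a permission and record the decision, whether allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


print(authorize("billing_agent", "invoice:read"))     # True
print(authorize("scheduling_agent", "invoice:read"))  # False
```

Logging denials as well as approvals is a deliberate choice here: denied attempts are often the entries an auditor or incident responder most wants to see.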

Dextralabs uses established governance blueprints for industries like BFSI, healthcare, and manufacturing.

Architectural Blueprint: How Enterprises Should Build Their 2025 AI Stack

A mature 2025 AI stack works like a well-designed system with clear boundaries and predictable behaviour.

A strong blueprint includes:

- Open standards at the integration and orchestration layers (MCP + A2A)

- Domain-specific models for industry tasks

- Foundation models for general reasoning and creativity

- Observability systems for tracking agent behaviour

- Agent sandboxes to contain unintended actions

- Debugging and monitoring layers for continuous improvement

Using open standards reduces dependency on proprietary ecosystems. Using domain models ensures accuracy and cost-efficiency. Using proper governance ensures safety and trust.

Dextralabs integrates all these components into a single modular architecture that enterprises can evolve over time.

Build vs. Buy vs. Partner: Strategic Guidance for CTOs

CTOs face several decisions when setting up an enterprise AI stack.

When to adopt open standards immediately

- If the organisation already has multiple AI systems

- If cross-department automation is a priority

- If long-term flexibility is important

When to prioritise domain models over frontier models

- When accuracy matters more than general reasoning

- When workloads involve high-volume inference

- When the task is regulated or sensitive

How to evaluate MCP and A2A vendors

CTOs should assess:

- ecosystem maturity

- security guarantees

- compatibility with existing infrastructure

- observability features
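The four criteria above can be turned into a simple weighted scorecard for comparing vendors. The weights and the 1 to 5 scores in this sketch are illustrative placeholders, not recommendations; each organisation would set its own.

```python
# Criteria from the list above; weights are illustrative and sum to 1.0.
WEIGHTS = {
    "ecosystem_maturity": 0.3,
    "security_guarantees": 0.3,
    "infrastructure_compatibility": 0.2,
    "observability": 0.2,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-criterion scores (e.g. 1 to 5) into one weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[criterion] * scores[criterion] for criterion in WEIGHTS)


# Hypothetical vendor with made-up scores.
vendor_a = {
    "ecosystem_maturity": 4,
    "security_guarantees": 5,
    "infrastructure_compatibility": 3,
    "observability": 2,
}
print(round(weighted_score(vendor_a), 2))  # 3.7
```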

Role of specialist AI consulting partners

Most enterprises don’t have in-house talent to build an entire AI stack from scratch. Dextralabs supports organisations with:

- architecture planning

- MCP and A2A implementation

- model selection and fine-tuning

- security and governance

- ongoing optimisation and maintenance

This reduces risk and accelerates time to value.

Case Scenarios (Industry-Specific)

BFSI

Banks and financial institutions need safety, auditability, and accuracy.

Key applications include:

- multi-agent risk analysis

- automated credit checks

- transaction investigation

- MCP-based access to core banking systems

- domain-specific models for fraud scoring

A2A enables agents to coordinate reviews and escalate cases.

Healthcare

Healthcare systems prioritise privacy and precision.

Examples include:

- VPC-deployed clinical models

- MCP-controlled EMR access

- multi-agent collaboration for patient intake, diagnostics, and reporting

A2A ensures clinical agents, billing agents, and scheduling agents work together without data leakage.

Manufacturing & Industrial

Factories rely heavily on real-time data and machine coordination.

Use cases:

- sensor-to-agent automation

- anomaly detection

- maintenance prediction

- production scheduling

- quality review systems

Domain-specific models can outperform general ones on tasks involving images, signals, and numeric data.

Retail & E-commerce

Retail requires fast decisions and personalised experiences.

Applications include:

- recommendation engines

- multi-agent dynamic pricing

- inventory planning

- customer service automation

- returns processing

Hybrid model setups are ideal for handling large-scale consumer traffic.

Dextralabs POV: Designing Future-Ready Enterprise AI Stacks

Dextralabs believes the strongest AI stacks combine flexibility, accuracy, and accountability.

Our approach focuses on:

- guiding enterprises toward open standards that avoid future lock-in

- designing hybrid ecosystems of models

- securing agent environments with strict audits and controls

- building end-to-end systems that are reliable, maintainable, and grounded in real business needs

We support clients through the full lifecycle, from early planning to operational scaling.

Conclusion: The Future Belongs to Interoperable, Domain-Aware AI Systems

Enterprises that build the right AI stack in 2025 will gain a long-term advantage.

The winning approach combines:

- Open standards for interoperability

- Domain-specific models for accuracy and cost control

- Strong governance for trust and reliability

MCP and A2A are becoming essential components of enterprise AI architecture. Domain models ensure that the system performs well in specialised tasks. A hybrid, modular setup enables continuous improvement.

Dextralabs is committed to helping organisations build AI systems that are practical, secure, and ready for real-world scale.

If you’d like support evaluating your current architecture or planning a 2025-ready AI stack, we can help through:

- an AI readiness assessment

- an architecture workshop

- a pilot deployment

Your enterprise AI stack is the foundation of your next decade of innovation.

Building it well is not optional; it’s strategic.