A complete guide to whether AI agents can really take over your development tasks.

“Wait, so this AI can actually write my entire feature while I’m in a meeting? And it shows me exactly what it did? Is this real or just more AI hype?”

Sound familiar? You’re not alone.

We’ve all been burned by AI coding tools that promise the moon but deliver glorified autocomplete. Every week there’s a new “revolutionary” coding assistant that’s supposedly going to “transform software development forever.” Most of them are just fancy suggestion boxes.

But here’s where OpenAI’s Codex is different: it doesn’t just suggest code—it actually writes it, tests it, debugs it, and creates pull requests. Independently. While you focus on the stuff that actually matters.

At Dextralabs, we wrote this guide to cut through the marketing buzz and show you what Codex really does, where it excels, where it falls short, and, most importantly, whether it’s worth implementing in your development workflow.

Codex in Numbers: What the Data Says

Before diving into features, it’s worth looking at how Codex performs in the real world:

- 37% of prompts are solved on the first attempt. Codex returns a correct result on the first try in over a third of cases.

- Up to 70.2% success with retry attempts. When Codex is allowed many tries, such as 100 samples, its success rate rises substantially.

- 75% accuracy in internal software engineering exams. Codex outperformed its base models in engineering-like test scenarios.

- 85% pass rate after multiple attempts on SWE-Bench tasks. On real-world bug-fixing scenarios, Codex proved highly reliable.

- Up to 80% accuracy on university-level CS questions with retries. Studies from the University of Auckland showed significant improvements with repeated attempts. (Source: University of Auckland)
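The gap between the first-attempt and retry figures above is what a pass@k style metric captures: the chance that at least one of k sampled solutions passes the tests. The figures above do not come with a stated metric, so treat the following as a rough sketch that assumes they were measured this way. The function name and the sample counts are illustrative, not measured data.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples is correct.

    n: total samples generated for a problem
    c: number of those samples that passed the tests
    k: number of attempts allowed
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # 1 - C(n - c, k) / C(n, k): one minus the chance that all k picks fail.
    # math.comb returns 0 when k > n - c, so the result is 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Illustrative numbers only: 100 samples, 5 of which pass.
single_try = pass_at_k(n=100, c=5, k=1)   # about 0.05
ten_tries = pass_at_k(n=100, c=5, k=10)   # noticeably higher than single_try
```

Even when each individual sample is rarely correct, allowing more attempts raises the odds that one of them works, which is why retry numbers always look better than first-attempt numbers.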

Why It Matters: Efficiency and Real Adoption

- 76% of developers are using or plan to use AI code assistants. (Source: Stack Overflow 2024 Survey)

- 55% faster task completion and ~50% quicker time-to-merge have been reported by teams using Codex-style assistants. (Source: Faros.AI)

Beyond Autocomplete: What Makes Codex Actually Different?

While most AI coding tools act like sophisticated autocomplete, Codex operates as a legitimate development partner. Here’s what sets it apart:

Traditional AI Coding Tools:

- Help you write code faster

- Suggest completions in real-time

- Require constant guidance and review

OpenAI Codex:

- Writes complete features independently

- Works on multiple tasks simultaneously

- Provides transparent documentation of its process

- Operates in isolated cloud environments with your full codebase

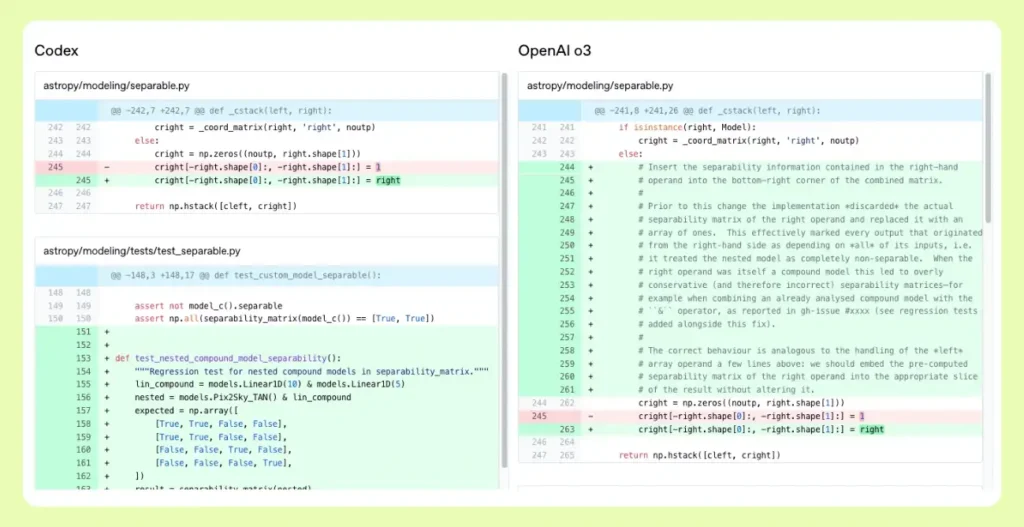

The key difference? Codex is powered by codex-1, a version of OpenAI’s o3 model optimized for software engineering and trained with reinforcement learning on real-world coding scenarios.

The Core Capabilities: What Codex Actually Does

1. Independent Task Execution

What it does: You assign tasks through ChatGPT by typing a prompt and clicking “Code.” Each task runs in its own cloud environment preloaded with your repository.

Real example: “Refactor the authentication module to use JWT tokens and add comprehensive error handling.”

Timeline: Tasks typically complete in 1-30 minutes depending on complexity.

2. Parallel Processing Power

What it does: While you work on Feature A, Codex can simultaneously:

- Fix bugs in Feature B

- Write tests for Feature C

- Refactor legacy code in Feature D

Why this matters: No more context switching between different types of development work.
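To make the idea of parallel delegation concrete, here is a minimal sketch of handing off several tasks at once and collecting the results. It does not call any real Codex API: `delegate_task` is a hypothetical stand-in that simulates remote work with a short sleep, and `delegate_all` is likewise an illustrative name.

```python
import asyncio


async def delegate_task(description: str) -> str:
    """Hypothetical stand-in for handing one task to a coding agent.

    No real Codex API is called here; the sleep simulates remote work.
    """
    await asyncio.sleep(0.01)
    return f"done: {description}"


async def delegate_all(descriptions: list[str]) -> list[str]:
    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(*(delegate_task(d) for d in descriptions))


tasks = [
    "Fix bugs in Feature B",
    "Write tests for Feature C",
    "Refactor legacy code in Feature D",
]
results = asyncio.run(delegate_all(tasks))
```

The point of the pattern is that the three tasks overlap in time, so total waiting time is close to the slowest task rather than the sum of all three.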

3. Transparent Process Documentation

What it does: Unlike black-box AI tools, Codex provides:

- Terminal logs of every command executed

- Test outputs and results

- Step-by-step citations of actions taken

- Real-time progress monitoring

Why this matters: You can trace exactly what it did and why, making code review straightforward.
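If you want a feel for what a command-level audit trail looks like, the sketch below records each command, its exit code, and its output. This is an assumption about the general shape of such a log, not Codex's actual log format; `LogEntry` and `run_and_log` are hypothetical names.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class LogEntry:
    command: list[str]
    exit_code: int
    output: str


def run_and_log(command: list[str], log: list[LogEntry]) -> LogEntry:
    """Run a command, capture its output, and append a record to the log."""
    proc = subprocess.run(command, capture_output=True, text=True)
    entry = LogEntry(command, proc.returncode, proc.stdout + proc.stderr)
    log.append(entry)
    return entry


audit_log: list[LogEntry] = []
# Two toy commands: one succeeds and prints a message, one exits with code 3.
run_and_log([sys.executable, "-c", "print('tests passed')"], audit_log)
run_and_log([sys.executable, "-c", "import sys; sys.exit(3)"], audit_log)
```

A reviewer reading `audit_log` can see which step failed and what it printed without rerunning anything.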

4. Full Development Lifecycle Support

What it does:

- Reads and edits files

- Runs test harnesses, linters, and type checkers

- Iteratively tests until achieving passing results

- Creates pull requests for review

- Handles debugging and refactoring
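The "test until passing" behavior in the list above boils down to a bounded loop: run the tests, and if they fail, attempt a fix and try again. Here is a minimal sketch of that loop. How Codex implements it internally is not described here, so `iterate_until_green`, `run_tests`, and `attempt_fix` are hypothetical names, and the toy "bug counter" stands in for a real test suite.

```python
from typing import Callable


def iterate_until_green(
    run_tests: Callable[[], bool],
    attempt_fix: Callable[[int], None],
    max_runs: int = 5,
) -> int:
    """Return the test run on which the suite passed, or -1 if none did."""
    for run in range(1, max_runs + 1):
        if run_tests():
            return run
        if run < max_runs:
            attempt_fix(run)  # hypothetical: the agent edits code here
    return -1


# Toy stand-ins: the "code base" has two bugs, and each fix removes one.
state = {"bugs": 2}


def fake_run_tests() -> bool:
    return state["bugs"] == 0


def fake_attempt_fix(run: int) -> None:
    state["bugs"] -= 1


passed_on = iterate_until_green(fake_run_tests, fake_attempt_fix)  # 3
```

The cap on runs matters: without it, a task that can never pass would loop forever instead of coming back to you for review.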

Real-World Implementation: Companies Actually Using This

Enterprise Applications

Cisco: Exploring how Codex helps engineering teams “bring ambitious ideas to life faster”

- Use case: Accelerating prototype development

- Impact: Reduced time from concept to working demo

Temporal: Using Codex across the entire development pipeline

- Use case: Feature development, debugging, testing, and large codebase refactoring

- Impact: Significant reduction in routine development overhead

Kodiak: Using Codex to support its autonomous driving technology

- Use case: Writing debugging tools and improving test coverage

- Impact: Enhanced code quality and reliability in safety-critical systems

Startup and Scale-up Applications

Superhuman: Enabling non-technical team members to contribute

- Use case: Product managers making lightweight code changes

- Impact: Reduced engineering bottlenecks for simple modifications

OpenAI Internal Teams: Using Codex daily to stay focused

- Use case: Repetitive, well-scoped tasks like renaming and refactoring

- Impact: Developers maintain focus on complex architectural decisions

The Technical Reality: Performance and Limitations

Where Codex Excels

Well-Scoped, Repetitive Tasks

- Refactoring existing code

- Writing comprehensive test suites

- Bug fixes with clear requirements

- Documentation generation

- Code style standardization

Implementation After Design

- Converting requirements into working code

- Following established patterns and conventions

- Maintaining consistency across codebases

- Handling edge cases and error conditions

Code Quality and Standards

- Generates code that mirrors human style

- Adheres to pull request preferences

- Follows team conventions automatically

- Maintains readability and maintainability

Where Codex Struggles

High-Level Architecture Decisions

- Choosing between microservices vs monolith

- Database schema design decisions

- Technology stack selection

- System integration strategies

Complex Business Logic Understanding

- Domain-specific rules and requirements

- Cross-system dependencies

- Legacy system constraints

- Organizational process requirements

Creative Problem Solving

- Novel algorithmic approaches

- Innovative UI/UX implementations

- Unique performance optimizations

- Breakthrough technical solutions

Security and Safety: Enterprise-Grade Protection

Isolation and Access Control

- Secure containers: All tasks run in isolated cloud environments

- No internet access: Tasks run without internet access during execution, preventing external data leakage

- Repository-only access: Can only interact with explicitly provided code

- Pre-installed dependencies: Controlled environment with known components

Malicious Code Prevention

- Training safeguards: Specifically trained to identify and refuse malicious requests

- Policy frameworks: Enhanced safety evaluations and guidelines

- Audit trails: Complete logging of all actions and decisions

- Review processes: All outputs designed for human review before deployment

Implementation Framework: Getting Started Right

Phase 1: Assessment and Planning

- Identify suitable tasks: Start with repetitive, well-scoped work

- Evaluate security requirements: Ensure compliance with organizational policies

- Set up evaluation criteria: Define success metrics and quality standards

- Plan integration points: Determine how Codex fits into existing workflows

Phase 2: Pilot Implementation

- Start small: Begin with non-critical refactoring or test writing

- Monitor closely: Track output quality and process effectiveness

- Gather feedback: Collect input from development team members

- Iterate approach: Refine task delegation and review processes

Phase 3: Scale and Optimize

- Expand use cases: Apply Codex to a broader range of development tasks

- Optimize workflows: Streamline integration with existing tools

- Train team members: Develop expertise in effective AI delegation

- Measure impact: Quantify productivity gains and quality improvements
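For the "measure impact" step, one simple approach is to compare a metric such as time-to-merge before and during the pilot. The sketch below uses the median so that one unusually slow pull request does not skew the result. The numbers are made up for illustration, and `percent_reduction` is a hypothetical helper, not part of any tool.

```python
from statistics import median


def percent_reduction(before: list[float], after: list[float]) -> float:
    """Percent drop in the median value from `before` to `after`."""
    base = median(before)
    if base == 0:
        raise ValueError("baseline median must be non-zero")
    return (base - median(after)) / base * 100.0


# Made-up hours from PR open to merge, for illustration only.
baseline_hours = [30.0, 42.0, 20.0, 55.0, 38.0]
pilot_hours = [18.0, 25.0, 12.0, 40.0, 19.0]

reduction = percent_reduction(baseline_hours, pilot_hours)  # 50.0
```

Whatever metric you pick, collect the baseline before the pilot starts so the comparison is fair.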

The Skills Evolution: What Changes for Developers

Skills Becoming More Important

- Task Decomposition: Breaking complex problems into AI-manageable pieces

- System Architecture: Understanding how components interact and integrate

- Code Review: Evaluating AI-generated code for logic and maintainability

- AI Communication: Writing clear, specific instructions for AI agents

- Process Design: Creating workflows that use AI capabilities effectively

Skills Becoming Less Critical

- Syntax Memorization: Language-specific details handled automatically

- Boilerplate Generation: Template code becomes automated

- Repetitive Refactoring: Pattern-based changes happen independently

- Documentation Writing: Code documentation generated automatically

- Test Case Creation: Standard test patterns handled by AI

New Skill Categories Emerging

- AI Agent Management: Effectively delegating and monitoring AI tasks

- Hybrid Workflow Design: Balancing human and AI contributions

- Quality Assurance: Ensuring AI output meets standards and requirements

- Process Optimization: Continuously improving human-AI collaboration

Cost-Benefit Analysis: Is It Worth It?

For Individual Developers

Benefits:

- Reduced context switching

- Focus on high-value problem solving

- Faster iteration cycles

- Improved code consistency

Considerations:

- Learning curve for effective delegation

- Subscription costs vs. time savings

- Quality review requirements
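The "subscription costs vs. time savings" question comes down to simple arithmetic: how many hours does the tool need to save each month to pay for itself? Here is a rough sketch using the Plus ($20/month) and Pro ($200/month) prices from the FAQ in this guide. The hourly cost of $80 is an assumed figure; plug in your own.

```python
def break_even_hours(monthly_subscription: float, hourly_cost: float) -> float:
    """Hours of developer time that must be saved per month to cover the fee."""
    if hourly_cost <= 0:
        raise ValueError("hourly_cost must be positive")
    return monthly_subscription / hourly_cost


ASSUMED_HOURLY_COST = 80.0  # hypothetical loaded cost of one developer hour

plus_hours = break_even_hours(20.0, ASSUMED_HOURLY_COST)   # 0.25
pro_hours = break_even_hours(200.0, ASSUMED_HOURLY_COST)   # 2.5
```

This ignores the time you spend reviewing output and learning to delegate well, so treat the result as a floor rather than a full ROI estimate.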

For Development Teams

Benefits:

- Parallel task execution

- Reduced bottlenecks on routine work

- Consistent code quality across the team

- Faster feature delivery

Considerations:

- Team training requirements

- Integration with existing tools

- Code review process modifications

For Organizations

Benefits:

- Accelerated development cycles

- Reduced engineering overhead

- Improved developer satisfaction

- Competitive advantage in delivery speed

Considerations:

- Security and compliance requirements

- Change management processes

- ROI measurement and tracking

Where This Technology Is Going

Near-Term Developments (6-12 months)

- Unified workflows: Integration of real-time pairing and task delegation

- IDE integration: Native support within popular development environments

- Enhanced collaboration: Improved multi-developer AI agent coordination

- Expanded language support: Broader programming language capabilities

Medium-Term Evolution (1-2 years)

- Architectural assistance: AI support for system design decisions

- Domain specialization: Models trained for specific industries or use cases

- Continuous learning: Agents that adapt to team preferences and patterns

- Cross-system integration: AI agents working across multiple development tools

Long-Term Vision (2-5 years)

- Autonomous development: AI agents handling entire feature development cycles

- Intelligent project management: AI coordination of development resources

- Predictive maintenance: Proactive code optimization and issue prevention

- Seamless human-AI collaboration: Natural language interfaces for all development tasks

Making the Decision: Is Codex Right for Your Team?

Strong Fit If You Have:

- High volume of repetitive coding tasks

- Well-defined development processes and standards

- Team members comfortable with AI tool adoption

- Clear code review and quality assurance processes

- Flexibility to adapt workflows and processes

Moderate Fit If You Have:

- Mixed development work (some routine, some creative)

- Existing AI tool experience within the team

- Willingness to invest in training and process changes

- Clear security and compliance requirements

Poor Fit If You Have:

- Primarily greenfield or highly creative development work

- Strict security restrictions on cloud-based tools

- Resistance to AI adoption within the development team

- Inadequate processes for code review and quality control

- Limited bandwidth for implementing new tools and workflows

Final Thoughts: The Future of Development Is Here

The software development landscape is shifting from “AI helps you code faster” to “AI codes while you solve bigger problems.” Codex represents a genuine evolution in how we approach development work—not just making existing processes faster, but enabling entirely new workflows.

The companies already implementing AI coding agents aren’t just shipping features faster. They’re freeing their developers to focus on architecture, user experience, and solving complex business challenges. They’re turning routine coding work into a background process while human creativity drives innovation.

The reality is simple: AI coding agents like Codex aren’t going to replace developers. They’re going to replace the tedious, repetitive parts of development that keep developers from doing their best work.

The question isn’t whether this technology will reshape software development—it already is. The question is whether your team will be ready to leverage it effectively.

The future of development is collaborative, intelligent, and focused on human creativity augmented by AI capability. It’s not about coding faster—it’s about coding smarter.

Ready to explore what AI-augmented development could mean for your team? The technology is here, the tools are ready, and the competitive advantage is waiting for those bold enough to embrace it.

FAQs: OpenAI Codex and AI Coding Agents

Q. How much does OpenAI Codex cost?

Codex requires a ChatGPT Plus ($20/month) or Pro ($200/month) subscription. The Pro version includes additional compute resources for complex reasoning tasks.

Q. How does OpenAI Codex differ from GitHub Copilot?

While Copilot provides real-time autocomplete suggestions, Codex works independently on complete tasks. Copilot assists as you code; Codex codes while you focus on other work.

Q. Can Codex work with any programming language?

Yes, Codex supports all major programming languages and frameworks. It was trained on diverse codebases and can adapt to different language conventions and styles.

Q. Is Codex secure for enterprise use?

Yes, Codex runs in isolated containers with no internet access during execution. It only interacts with code you explicitly provide and includes comprehensive audit trails.

Q. Can Codex replace developers?

No, Codex excels at implementation but struggles with architectural decisions, creative problem-solving, and understanding complex business requirements. It’s a powerful tool for augmenting developer capabilities.

Q. What happens if Codex generates buggy code?

All Codex output is designed for human review. The system provides complete transparency about its actions, making it easy to identify and fix any issues during the review process.

Q. How long does it take to see ROI from Codex implementation?

Benefits such as reduced context switching and faster completion of routine tasks tend to show up quickly. Full ROI typically becomes apparent within 2-3 months of consistent use.

Q. Can small teams benefit from Codex?

Absolutely. Small teams often see the most dramatic impact because they have limited resources for routine development tasks. Codex can significantly multiply their development capacity.