Artificial intelligence (AI) is entering a new era of autonomy, flexibility, and proactive intelligence. That paradigm is Agentic AI: AI systems that can think, plan, and act independently to achieve desired outcomes without constant human intervention.

Where legacy AI models sit idle waiting for instructions, Agentic AI systems are active problem solvers. They can set goals, make decisions, and carry out multi-step tasks across complex workflows. These capabilities have unlocked new levels of efficiency, speed, and innovation in organizations of all types, including those in finance, health care, retail, and manufacturing.

But these benefits come with serious risks. Unpredictable decisions, data breaches, propagated bias, and security gaps can all undermine a company's integrity if they are not properly addressed.

In light of these challenges, Dextralabs, a team of leading AI consultants and innovators in intelligent automation, has developed a practical playbook for safe and secure agentic AI deployment: a guide that helps technology leaders deploy AI responsibly while staying safe, transparent, and trustworthy throughout the deployment process.

Understanding Agentic AI: From Assistants to Autonomous Agents

Traditional AI operates very differently from Agentic AI. Traditional AI is reactive: a user provides an input and the AI produces an output, or performs a single task in response to a prompt. Agentic AI is goal-oriented and self-directed: it can think, plan, and execute multi-step actions to accomplish a desired outcome.

Key Features of Agentic AI

- Goal setting: Understanding goals and dividing them into executable tasks.

- Planning: Creating multi-step plans to reach complex goals.

- Independent execution: Carrying out actions across systems and data feeds without a human handling each step.
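The three features above can be pictured as a small loop. The following is only a minimal sketch under stated assumptions: the `Agent` class, the `fixed_planner` function, and the tool names are hypothetical and stand in for whatever planner and integrations a real deployment would use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Agent:
    """Toy agent: a goal, a planner, and a registry of tools (all hypothetical)."""

    goal: str
    planner: Callable[[str], List[str]]
    tools: Dict[str, Callable[[], str]]
    history: List[Tuple[str, str]] = field(default_factory=list)

    def run(self) -> List[Tuple[str, str]]:
        # Planning: divide the goal into an ordered list of task names.
        tasks = self.planner(self.goal)
        # Independent execution: run each task without per-step human input.
        for task in tasks:
            tool = self.tools.get(task)
            result = tool() if tool is not None else "skipped: no tool registered"
            self.history.append((task, result))
        return self.history


def fixed_planner(goal: str) -> List[str]:
    # Stand-in for a model-backed planner; returns a fixed plan for illustration.
    return ["fetch_data", "analyse", "report"]


agent = Agent(
    goal="summarise weekly campaign performance",
    planner=fixed_planner,
    tools={
        "fetch_data": lambda: "42 rows loaded",
        "analyse": lambda: "click-through rate up",
    },
)
results = agent.run()
```

Note that the sketch skips any task without a registered tool rather than improvising one, which is one simple way to keep execution inside known limits.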

Real-World Examples

- Marketing automation: An Agentic AI system can create, send, and optimise ad campaigns based on actual performance data rather than manual effort, with the aim of maximising return on investment (ROI).

- Financial control: A financial AI agent can track transactions automatically, identify and flag suspicious behaviour, and block fraud in real time.

These examples show how Agentic AI enables intelligent, goal-directed action, and why safety, security, trust, and explainability are so critical. Without them, even an AI system that appears safe and responsible could take dangerous action.

Dextralabs’ Approach

As one of the top AI consulting companies, Dextralabs supports autonomy without compromising control. The secure orchestration pipelines we design, built with tools such as LangChain and the OpenAI APIs, focus on validation, monitoring, and ethical guardrails. Our AI agents are validated for accuracy, explainability, and regulatory compliance before deployment, ensuring alignment with the enterprise's ethical standards and legal obligations.

The New Risk Landscape for Technology Leaders

With autonomous AI on the horizon, technology leaders face a two-sided tension: innovation and autonomy versus security and governance.

Implications of Agentic AI

- Data Leaks: Sensitive data can be exposed through AI agents with poor access controls.

- Model Hallucinations: Erroneous or fabricated outputs can lead to misguided decisions.

- Adversarial Attacks: Attackers can manipulate models into generating unsafe or biased outputs.

- Missing Explainability: Black-box decision-making by agents creates accountability and compliance issues.

CIOs, CTOs, and AI governance officers must control and oversee these risks, relying heavily on monitored processes.
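As one illustration of tightening access controls against data leaks, an agent's requests can be checked against an explicit allowlist before any data is read. This is a sketch under stated assumptions, not a description of any particular product: the role names, scope names, `AGENT_SCOPES`, and `authorize` are all hypothetical.

```python
from typing import Dict, FrozenSet

# Hypothetical mapping of agent roles to the data scopes they may read.
AGENT_SCOPES: Dict[str, FrozenSet[str]] = {
    "marketing_agent": frozenset({"campaign_metrics"}),
    "finance_agent": frozenset({"transactions", "fraud_flags"}),
}


class AccessDenied(Exception):
    """Raised when an agent requests a scope outside its allowlist."""


def authorize(agent_role: str, scope: str) -> None:
    # Unknown roles get an empty allowlist, so access is denied by default.
    allowed = AGENT_SCOPES.get(agent_role, frozenset())
    if scope not in allowed:
        raise AccessDenied(f"{agent_role!r} may not access {scope!r}")


# Usage: the finance agent may read transactions; the marketing agent may not.
authorize("finance_agent", "transactions")
try:
    authorize("marketing_agent", "transactions")
    marketing_blocked = False
except AccessDenied:
    marketing_blocked = True
```

Denying by default means a newly added agent has no data access until someone grants it deliberately.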

Dextralabs takes a security-first approach to engineering its solutions. They integrate security design principles, multi-layer governance architectures, and ongoing validation frameworks.

All stages of the AI lifecycle, ranging from data ingestion to model execution, are linked to safety controls, which define the boundaries of ethical and operational behavior for all agents.

Dextralabs’ Playbook for Safe and Secure Agentic AI

Dextralabs’ playbook offers business tech leaders a five-step journey toward responsibly and sustainably adopting Agentic AI.

Step 1: Design Governance

Governance isn’t an afterthought — it must be designed into all AI initiatives.

Key Efforts

- Create an accountability structure that extends from business executives to data scientists.

- Build ethical data pipelines that consistently uphold fairness, privacy, and consent.

- Align with international standards and regulations such as ISO/IEC 42001 (AI management systems) and the EU AI Act.

Outcome

Well-specified, understandable, human-centred AI agents that run within their technical limits and carry transparent, clearly defined, and measurable ethical responsibilities. Governance keeps AI results explainable and compliant as the AI works more autonomously.

Step 2: Secure Infrastructure and Architecture

Security is the basis for responsible AI. Any single vulnerability can destabilize the whole system.

Key Practices:

- Use sandboxed environments to contain agentic workflows during testing.

- Employ end-to-end encryption for all data in transit.

- Scan for and patch vulnerabilities regularly to close off exploits.

Objective:

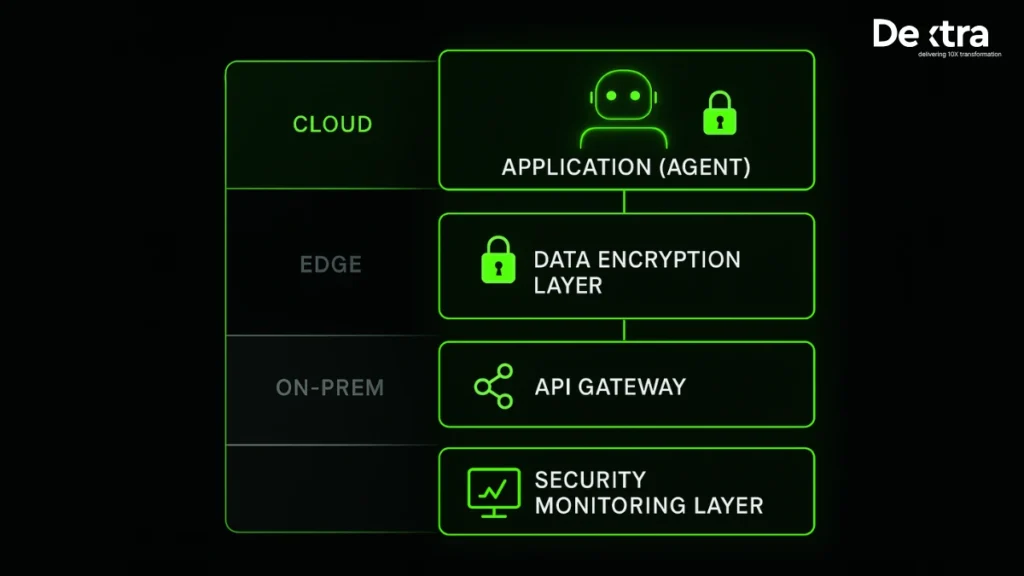

A multi-layered security architecture allowing AI agents to operate safely across cloud, edge, and on-premise environments—engineered and audited by Dextralabs AI consultants.

Step 3: Human-in-the-Loop Oversight

No matter how capable it becomes, AI should never operate entirely free of human judgment.

Best Practices:

- Maintain human validation for all high-criticality or high-risk output.

- Set decision boundaries that define where automation stops and human judgment takes over.

- Establish feedback mechanisms through which AI systems can learn safely under human supervision.
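A decision boundary of this kind can be as simple as a risk threshold that routes each proposed action either to automatic execution or to a human reviewer. The sketch below is an assumption-laden illustration: `ProposedAction`, `RISK_THRESHOLD`, `route_action`, and `record_review` are hypothetical names, and how a risk score is produced is left open.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    risk_score: float  # 0.0 (routine) to 1.0 (critical); the scoring method is assumed


# Hypothetical decision boundary: at or above this score, a human must decide.
RISK_THRESHOLD = 0.5

# Feedback log of (action name, approved?) pairs captured from human reviewers.
review_log: List[Tuple[str, bool]] = []


def route_action(action: ProposedAction, threshold: float = RISK_THRESHOLD) -> Route:
    """Return where the action should go based on its risk score."""
    if action.risk_score >= threshold:
        return Route.HUMAN_REVIEW
    return Route.AUTO_EXECUTE


def record_review(action: ProposedAction, approved: bool) -> None:
    """Store the reviewer's decision so it can inform later tuning."""
    review_log.append((action.name, approved))


low = ProposedAction("send_weekly_report", risk_score=0.1)
high = ProposedAction("block_customer_account", risk_score=0.9)
```

Here `route_action(low)` would run automatically while `route_action(high)` would wait for a person, and each reviewer decision passed to `record_review` becomes feedback that can be studied before any threshold is changed.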

Results:

Balanced automation, in which Agentic AI executes high-volume, routine tasks while humans oversee decisions that touch on ethics, creativity, or empathy. This preserves efficiency without sacrificing responsibility.

Step 4: Transparency and Explainability

Agentic AI should never be a “black box.” Transparency builds trust and enables compliance.

Important Practices:

- Provide Explainable AI (XAI) dashboards that visualize the decisions behind agent actions.

- Store decision traceability logs with inputs, reasoning traces, and outputs.
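One plausible shape for a decision traceability log is a stream of JSON lines, each holding the inputs, reasoning trace, and output of a single decision. The following is a minimal sketch: the field names, `build_trace_record`, `append_trace`, and the example agent ID are assumptions made for illustration.

```python
import io
import json
from datetime import datetime, timezone
from typing import Any, Dict, List, TextIO


def build_trace_record(agent_id: str, inputs: Dict[str, Any],
                       reasoning: List[str], output: Any) -> Dict[str, Any]:
    """Bundle one decision's inputs, reasoning trace, and output."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "inputs": inputs,
        "reasoning": reasoning,
        "output": output,
    }


def append_trace(stream: TextIO, record: Dict[str, Any]) -> None:
    """Write the record as one JSON line so the log can be appended to and searched."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


# Usage: an in-memory stream stands in for a log file or logging service.
log = io.StringIO()
record = build_trace_record(
    agent_id="fraud-agent-01",
    inputs={"transaction_id": "tx-123", "amount": 950.0},
    reasoning=["amount exceeds customer's usual range", "new merchant"],
    output={"decision": "flag_for_review"},
)
append_trace(log, record)
```

Keeping one self-contained record per line means an auditor can replay a single decision without reconstructing state from other entries.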

Advantages:

These practices improve debuggability, auditability, and executive trust, and help meet regulatory requirements for AI accountability. Transparency is the cornerstone on which client, partner, and regulator trust is built.

Step 5: Continuous Monitoring and Risk Mitigation

AI systems are dynamic: they change as users and circumstances change. Continuous monitoring provides stability and reliability over the long term.

Best Practices:

- Use live monitoring tools to track performance and model drift.

- Implement anomaly detection systems to alert on deviations or misuse.

- Set up automated rollback procedures that return models to a known safe state when anomalies are detected.
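To make the link between anomaly detection and rollback concrete, here is a minimal sketch that flags a metric as anomalous with a simple z-score test and reverts to the last version marked safe. The z-score rule is a stand-in chosen for brevity, and `ModelRegistry`, `monitor_step`, the threshold of 3.0, and the version labels are all hypothetical.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `z_threshold` deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


class ModelRegistry:
    """Tracks the active model version and the last version marked safe."""

    def __init__(self, safe_version: str) -> None:
        self.safe_version = safe_version
        self.active_version = safe_version

    def deploy(self, version: str) -> None:
        self.active_version = version

    def rollback(self) -> str:
        self.active_version = self.safe_version
        return self.active_version


def monitor_step(registry: ModelRegistry, history: List[float], latest: float) -> str:
    """Roll back on an anomaly; otherwise record the metric and keep the active version."""
    if is_anomalous(history, latest):
        return registry.rollback()
    history.append(latest)
    return registry.active_version


# Usage: accuracy readings for a newly deployed version "v2".
accuracy_history = [0.90, 0.91, 0.89, 0.90, 0.92]
registry = ModelRegistry(safe_version="v1")
registry.deploy("v2")
after_normal = monitor_step(registry, accuracy_history, 0.91)
after_drop = monitor_step(registry, accuracy_history, 0.60)
```

In this run the reading of 0.91 is accepted and "v2" stays active, while the drop to 0.60 falls far outside the historical spread and triggers a return to "v1". A production system would likely use richer drift metrics and require human sign-off before promoting a new safe version.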

Result

A constant safety net that ensures AI agents never run unchecked. Continuous oversight by Dextralabs helps keep AI agents secure, reliable, and aligned with human intent as they learn and develop.

Safe Agentic AI in the Real World

Dextralabs’ playbook has been applied successfully across numerous domains to show that safety and innovation aren’t mutually exclusive.

1. Financial Risk Intelligence

Agentic AI platforms screen transactions in real time, detect anomalies, and identify suspicious activity, helping to prevent fraud before it happens. Dextralabs’ security-first strategy protects user data and supports compliance with regulations and standards such as GDPR and ISO 27001.

2. Enterprise Automation

AI agents automate form handling, process approvals, and the delivery of results, freeing teams to focus on innovation and strategy. Because governance is built in, enterprises retain full end-to-end visibility and control.

3. Healthcare Operations

From patient scheduling to diagnostic reporting, Agentic AI improves the speed and quality of healthcare operations. Under a human-in-the-loop model, experts keep close oversight of the AI, which supports informed clinical decision-making and helps maintain both medical and ethical standards.

4. E-commerce Enhancement

Agentic AI can deliver more relevant recommendations, assist with customer queries, and provide real-time predictive analysis of customer demand patterns. Such applications can raise conversions while respecting customer privacy and consent.

The philosophy is the same across sectors: innovation never comes at the expense of safety. The Dextralabs playbook is designed so that each deployment enhances both performance and trust.

The Leadership Challenge: Building Trust in Autonomous Systems

For tech leaders, deploying Agentic AI responsibly is not only a technical challenge; it is a leadership challenge.

Managing the Balance of Speed and Safety

Moving fast without security can lead to trouble later, from cyber attacks to reputational damage. Conversely, being so risk-averse that innovation stalls is not acceptable either. The key is to balance speed of innovation with depth of governance.

Trust-Building Strategies

- Start by putting governance and security first.

- Embed ethics and fairness into all stages of AI development.

- Collaborate with expert AI consultants like Dextralabs, who understand the intersection of autonomy, regulation, and business strategy.

The Future Perspective

As Agentic AI goes mainstream, the firms that operate responsibly, transparently, and humanely will set the tone for the next generation of digital evolution. Those that build trust will lead their markets, not just technologically but ethically.

Conclusion: The Future of Safe, Agentic AI

Agentic AI marks an era-defining change: from tools to co-creators. These systems can help businesses anticipate challenges and improve decision-making to drive growth. But without accountability, the same capabilities can cause unforeseen harm.

Dextralabs’ playbook for safe and secure agentic AI sets out how to use these powerful systems responsibly, keeping each agent human-centred, ethical, and transparent.

As autonomous AI advances in businesses around the world, trust and safety should be the starting point for every deployment. With governance, frameworks, and vision in place, technology leaders can create a future where intelligent automation complements human potential securely, ethically, and at scale.

FAQs on Agentic AI deployment:

Q. Why is safety important in deploying Agentic AI?

Without safety controls, autonomous systems can make unpredictable or harmful decisions that risk data integrity and compliance.

Q. How does Dextralabs ensure secure Agentic AI deployment?

Dextralabs integrates security-first design, multi-layer governance, and human-in-the-loop oversight to keep systems auditable and trustworthy.

Q. What governance frameworks apply to safe AI deployment?

Frameworks like ISO/IEC 42001 and the EU AI Act guide ethical, transparent, and compliant AI governance practices.

Q. Can Agentic AI operate without human oversight?

Not fully. Dextralabs promotes human-in-the-loop controls to maintain ethical, contextual, and safe AI decision-making.

Q. What are the top risks in Agentic AI deployment?

Key risks include data leaks, adversarial attacks, hallucinations, and lack of explainability—all requiring governance and monitoring.

Q. How can businesses balance innovation with AI safety?

Leaders must embed security and ethics into the design and deployment process to achieve both speed and trust.