As per Statista, 72% of companies have integrated AI into at least one business function in 2024. Most of them are struggling to unlock its full potential. The difference? Prompt engineering!

The prompt engineering market is exploding from $280 million in 2024 to an anticipated $2.5 billion by 2032, yet 74% of companies still struggle to achieve and scale AI value. The gap isn’t in the technology—it’s in how we communicate with it.

Prompt engineering converts generic AI outputs into precise business solutions. Whether you’re building applications, streamlining operations, or creating content, mastering this skill is your competitive advantage in the AI-driven economy.

At Dextralabs, we help bridge that gap.

This guide covers prompt engineering from the fundamentals to advanced techniques, giving you the playbook to:

- Turn LLMs into real business tools

- Improve reliability and output quality

- Scale AI workflows without fine-tuning the model itself

Whether you’re building apps, deploying AI agents, or generating enterprise-grade content—mastering prompt engineering is no longer optional. It’s your competitive edge.

What is Prompt Engineering?

Let’s cut through the jargon. What is prompt engineering? Simply put, it’s the art and science of crafting effective instructions for large language models (LLMs) to produce desired outputs. Think of it as learning the specific language that helps AI systems understand exactly what you want them to do. Rather than hoping for the best with vague requests, prompt engineering gives you systematic methods to communicate clearly with AI models like GPT-4, Claude, or Llama.

At its core, prompt engineering bridges the gap between human intent and machine understanding. When you ask an AI to write code, analyze data, or create content, the quality of your prompt directly determines the quality of the response you receive. Closing that gap is essentially what you’re doing with AI prompt engineering.

Why Prompt Engineering Matters for LLMs?

Large language models are incredibly capable, but they’re only as good as the instructions they receive. A well-crafted prompt can mean the difference between getting a generic response and receiving exactly the specialized output you need for your business or project.

Consider these two approaches:

- Basic prompt: “Write about marketing”

- Engineered prompt: “Write a 500-word blog post about email marketing automation for B2B SaaS companies, focusing on lead nurturing sequences. Include three specific examples and end with actionable next steps.”

The second approach provides context, specifies format, defines the audience, and sets clear expectations—all fundamental principles of effective prompt engineering.

Core Prompt Engineering Techniques

Mastering these five foundational prompt engineering techniques can improve your AI interactions by 60-80%. They form the backbone of professional prompt engineering and are essential for anyone serious about maximizing AI performance.

1. Zero-Shot Prompting

This technique involves giving the model a task without any examples. It relies on the model’s pre-trained knowledge to understand and complete the request.

Example: “Translate the following English text to French: ‘The meeting is scheduled for tomorrow at 3 PM.'”

2. Few-Shot Prompting

Provide the model with a few examples of the desired input-output pattern before presenting the actual task.

Example:

Classify the sentiment of these reviews:

Review: “This product exceeded my expectations!”

Sentiment: Positive

Review: “Terrible quality, would not recommend.”

Sentiment: Negative

Review: “The delivery was okay, nothing special.”

Sentiment: [Let the model complete this]
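In code, a few-shot prompt like this is usually assembled from a list of labeled examples. Here is a minimal Python sketch of that idea. The helper name `build_few_shot_prompt` and its parameters are illustrative assumptions, not part of any library.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot classification prompt.

    examples: list of (review, sentiment) pairs shown to the model.
    query: the new review the model should label.
    """
    lines = [instruction, ""]
    for review, sentiment in examples:
        lines.append(f'Review: "{review}"')
        lines.append(f"Sentiment: {sentiment}")
        lines.append("")
    # Leave the final label blank so the model completes it.
    lines.append(f'Review: "{query}"')
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of these reviews:",
    [
        ("This product exceeded my expectations!", "Positive"),
        ("Terrible quality, would not recommend.", "Negative"),
    ],
    "The delivery was okay, nothing special.",
)
print(prompt)
```

Keeping the examples in a list makes it easy to swap them out or add more when the model's labels are inconsistent.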

3. Chain-of-Thought Prompting

Encourage the model to show its reasoning process step-by-step, particularly useful for complex problem-solving tasks.

Example: “Solve this math problem step by step: If a store sells 150 items per day and operates 6 days per week, how many items does it sell in a 4-week month?”
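To check the model's final number, it helps to know what the step-by-step reasoning should look like. The short Python sketch below simply works through the arithmetic from the example. The variable names are chosen here for illustration.

```python
items_per_day = 150
days_per_week = 6
weeks_per_month = 4  # the prompt treats a month as 4 weeks

# Step 1: items sold in one week
items_per_week = items_per_day * days_per_week      # 150 * 6 = 900

# Step 2: items sold over the 4 weeks
items_per_month = items_per_week * weeks_per_month  # 900 * 4 = 3600

print(items_per_month)  # 3600
```

A good chain-of-thought response should show the same two intermediate steps before stating the answer.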

4. Role-Based Prompting

Assign a specific role or persona to the AI to guide its responses and expertise level.

Example: “You are a senior data scientist with 10 years of experience. Explain the concept of overfitting in machine learning to a junior developer who just started learning about AI.”

5. Template-Based Prompting

Create reusable prompt structures that can be adapted for similar tasks.

Template:

Task: [Specific action needed]

Context: [Background information]

Requirements: [Specific constraints or criteria]

Format: [How the output should be structured]

Audience: [Who will use this output]
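One way to make a template like this reusable in code is Python's built-in `string.Template`. The sketch below is an assumption about how you might wire it up. The helper name `render_prompt` and the sample field values are invented for illustration.

```python
from string import Template

# Field names mirror the template above; the sample values are made up.
PROMPT_TEMPLATE = Template(
    "Task: $task\n"
    "Context: $context\n"
    "Requirements: $requirements\n"
    "Format: $format\n"
    "Audience: $audience"
)


def render_prompt(**fields):
    # substitute() raises KeyError if a field is missing, which catches
    # incomplete prompts before they reach the model.
    return PROMPT_TEMPLATE.substitute(**fields)


prompt = render_prompt(
    task="Summarize the attached release notes",
    context="Internal changelog for a B2B SaaS product",
    requirements="No more than 5 bullet points",
    format="Markdown bullet list",
    audience="Customer success managers",
)
print(prompt)
```

Failing loudly on a missing field is a deliberate choice here: a half-filled template tends to produce vague output that is harder to debug later.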

10 Prompt Engineering Hacks to Maximize Your LLM’s Potential

Here are the prompt engineering best practices that consistently produce better results. These techniques form the foundation of effective LLM prompt engineering across all platforms and use cases:

1. Be Specific About Format and Structure

Instead of asking for “a report,” specify exactly what you want: “Create a 3-section report with executive summary, key findings, and recommendations. Use bullet points for findings and number the recommendations.”

2. Use Constraint-Based Instructions

Add boundaries to focus the AI’s response: “Explain quantum computing in exactly 100 words using only terms a high school student would understand.”
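Models do not always hit an exact word count, so it can be worth verifying a length constraint in code before using the output. The following is a rough Python sketch. The helper names and the sample response are assumptions made for this example.

```python
def word_count(text):
    # Split on whitespace; good enough for a rough length check.
    return len(text.split())


def meets_word_limit(text, target, tolerance=0):
    """Return True if the text is within `tolerance` words of `target`."""
    return abs(word_count(text) - target) <= tolerance


# Sample response, invented for illustration.
response = "Quantum computers use qubits, which can represent 0 and 1 at once."
print(word_count(response))                                # 12
print(meets_word_limit(response, target=100))              # False
print(meets_word_limit(response, target=12, tolerance=2))  # True
```

If the check fails, you can re-prompt with the actual count, for example by telling the model its answer was 12 words and asking it to expand to 100.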

3. Implement Iterative Refinement

Start with a basic prompt, then refine based on the output: “Now make it more technical” or “Simplify this for a general audience.”

4. Use System Messages

Use system-level instructions to set the overall behavior: “You are a helpful assistant that always provides sources for factual claims and admits when uncertain.”
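Many chat-style LLM APIs accept a list of messages tagged with roles such as `system` and `user`, although the exact request shape varies by provider. The sketch below builds that structure as plain Python data without calling any API. The function name `build_messages` and the sample question are illustrative assumptions.

```python
def build_messages(system_instruction, user_prompt):
    """Return a chat-style message list with the system message first."""
    return [
        {"role": "system", "content": system_instruction},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "You are a helpful assistant that always provides sources for "
    "factual claims and admits when uncertain.",
    "When was the first transatlantic telegraph cable completed?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the system instruction separate from the user prompt lets you reuse the same behavior rules across every request.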

5. Apply the “Think Step by Step” Technique

For complex tasks, explicitly ask the model to break down its approach: “Before writing the code, first outline your approach step by step.”

6. Use Negative Instructions

Tell the AI what NOT to do: “Explain machine learning without using technical jargon or mathematical formulas.”

7. Provide Context Hierarchically

Structure information from general to specific: “You’re helping a startup (general) in the fintech space (more specific) build their first mobile payment app (most specific).”

8. Employ Multi-Turn Conversations

Build on previous responses strategically: “Based on the strategy you just outlined, now create a detailed implementation timeline.”
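A multi-turn conversation only works if each new request carries the earlier turns with it. Here is a minimal Python sketch of that bookkeeping. The `ask` helper and the `fake_llm` stand-in are assumptions so the example runs without a real model.

```python
def ask(history, user_prompt, llm):
    """Append a user turn, call the model with the full history, store the reply."""
    history.append({"role": "user", "content": user_prompt})
    reply = llm(history)
    history.append({"role": "assistant", "content": reply})
    return reply


def fake_llm(history):
    # Stand-in for a real model call: reports which user turn it is answering.
    user_turns = [m for m in history if m["role"] == "user"]
    return f"(reply to user turn {len(user_turns)})"


history = []
ask(history, "Outline a go-to-market strategy for a fintech app.", fake_llm)
ask(history, "Based on the strategy you just outlined, "
             "now create a detailed implementation timeline.", fake_llm)
print(len(history))  # 4
```

Because the second call receives the first exchange in `history`, a phrase like "the strategy you just outlined" has something to refer to.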

9. Use Prompt Chaining

Break complex tasks into smaller, connected prompts: the first prompt creates an outline, the second expands each section, and the third adds examples.
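As a rough Python sketch, a chain like this can be written as a sequence of calls where each prompt includes the previous output. The `llm` parameter is assumed to be any function that takes a prompt string and returns text, and `fake_llm` is a stand-in so the example runs offline.

```python
def run_chain(topic, llm):
    """Run a three-step prompt chain; `llm` is any callable str -> str."""
    outline = llm(f"Create a short outline for an article about {topic}.")
    draft = llm(f"Expand each section of this outline:\n{outline}")
    final = llm(f"Add one concrete example to each section:\n{draft}")
    return {"outline": outline, "draft": draft, "final": final}


def fake_llm(prompt):
    # Stand-in for a real model call so the sketch runs offline.
    first_line = prompt.splitlines()[0]
    return f"[model output for: {first_line}]"


result = run_chain("email marketing automation", fake_llm)
for step, text in result.items():
    print(step, "=>", text)
```

Returning every intermediate result, not just the final one, makes it easier to see which step in the chain went wrong.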

10. Implement Quality Control Prompts

Ask the AI to review its own work: “Review the above response for accuracy and suggest improvements.”

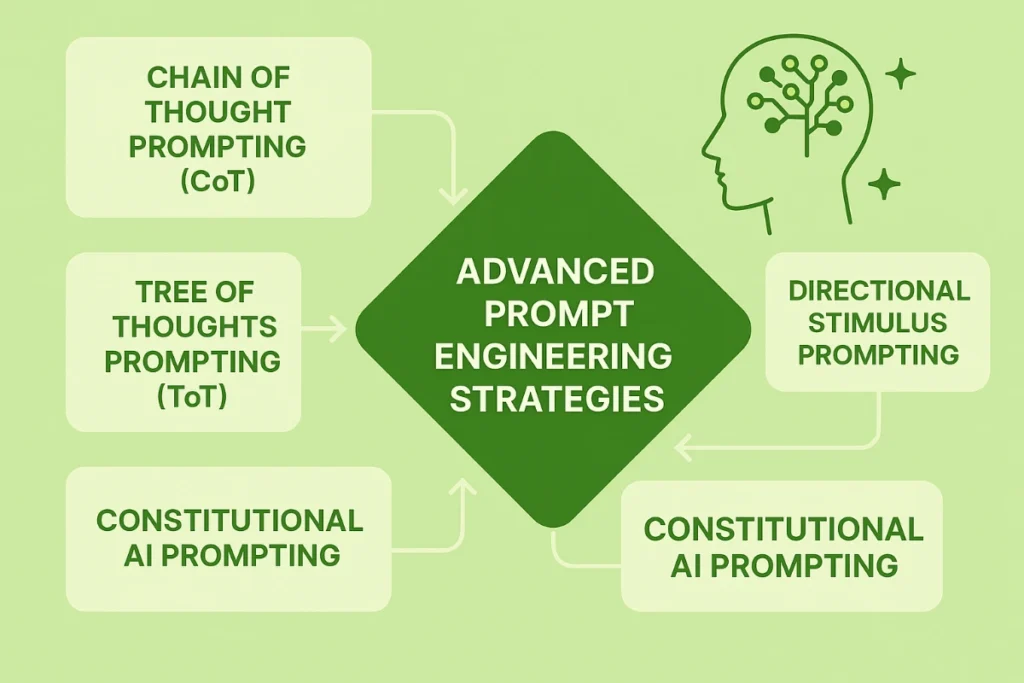

Advanced Prompt Engineering Strategies

Once you’ve mastered the basics, these cutting-edge prompt engineering techniques will set you apart from 90% of AI users. These methods are used by top-tier prompt engineers at leading tech companies to achieve breakthrough results.

Chain of Thought Prompting (CoT)

CoT prompting asks the model to work through a problem in explicit intermediate steps before giving its final answer. Spelling out the reasoning helps the model keep track of context across steps and respond more accurately to longer, more complex requests.

Example: “Let’s think through this step by step. First, identify the main components of the problem. Then, analyze each component individually. Finally, synthesize your findings into a comprehensive solution.”

Tree of Thoughts Prompting (ToT)

ToT prompting has the model explore several candidate lines of reasoning, evaluate them, and continue along the most promising ones. A simple way to apply the idea is to structure your input hierarchically, like a branching thought process: the trunk is the main idea or inquiry, the branches are specific aspects or sub-topics, and the leaves are the most detailed and specific prompts.

Example:

Main Topic: Marketing Strategy
├── Branch 1: Target Audience Analysis
│   ├── Demographics
│   └── Behavioral patterns
├── Branch 2: Channel Selection
│   ├── Digital platforms
│   └── Traditional media
└── Branch 3: Budget Allocation
    ├── Per-channel investment
    └── ROI projections

Directional Stimulus Prompting

This method guides AI models with specific hints to elicit targeted responses. For example, to ensure the model produces output on Revolutionary War figure John Paul Jones and not the Led Zeppelin bassist of the same name, you might prompt it with “Provide a brief biography of John Paul Jones. Hint: Revolutionary War.”
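In code, adding a directional hint can be as simple as appending it to the base prompt. The tiny Python sketch below shows one way to do that. The helper name `add_hints` is an assumption for illustration.

```python
def add_hints(prompt, hints):
    """Append one or more short hints to steer the model's answer."""
    return f"{prompt} Hint: {', '.join(hints)}."


print(add_hints("Provide a brief biography of John Paul Jones.",
                ["Revolutionary War"]))
# Provide a brief biography of John Paul Jones. Hint: Revolutionary War.
```

Passing hints as a list keeps the base prompt unchanged, so the same question can be reused with different hints.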

Constitutional AI Prompting

This approach involves creating prompts that help the AI adhere to specific principles or guidelines while completing tasks. You establish a set of rules, or “constitution,” that guides the AI’s behavior.

Meta-Prompting

Write prompts that help create better prompts. Ask the AI to analyze and improve your prompting strategy.

Example: “Analyze this prompt and suggest three ways to make it more effective: [insert your original prompt]”

Prompt Engineering Best Practices

Following these proven practices can reduce your prompt iteration time by 70% while dramatically improving output quality. These guidelines are based on analysis of thousands of successful prompts across various industries.

Clarity and Precision

Write prompts that leave little room for misinterpretation. Use specific language and avoid ambiguous terms. Effective prompt engineering requires balancing precision and creativity, as the quality of your prompt directly determines the output quality.

Context Management

Provide sufficient background information without overwhelming the model. Include relevant details that help the AI understand the scope and purpose of the task.

Iterative Refinement Process

Prompt engineers make adjustments based on model responses, a process that requires continuous testing, evaluation, and refinement. Treat prompt engineering as an experimental process where you test different approaches, measure results, and refine your prompt engineering techniques based on performance.

Documentation and Versioning

Keep track of successful prompts and document what works for different types of tasks. This creates a valuable resource for future projects and helps maintain consistency across your AI applications.
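A lightweight way to start is a small registry that keeps every version of each prompt along with a note on what changed. The Python sketch below is one possible shape for it, held in memory only. The class name `PromptRegistry` and its methods are assumptions, not an existing tool.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """In-memory store that keeps every version of each named prompt."""
    versions: dict = field(default_factory=dict)

    def save(self, name, text, note=""):
        history = self.versions.setdefault(name, [])
        # A short content hash makes it easy to tell if two versions differ.
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]
        history.append({"version": len(history) + 1, "hash": digest,
                        "text": text, "note": note})
        return history[-1]["version"]

    def latest(self, name):
        return self.versions[name][-1]


registry = PromptRegistry()
registry.save("summary", "Summarize this article.", note="baseline")
registry.save("summary",
              "Summarize this article in 3 bullet points for a busy executive.",
              note="added format and audience")
print(registry.latest("summary")["version"])  # 2
```

In practice many teams get the same effect by storing prompts as files in their existing version control system.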

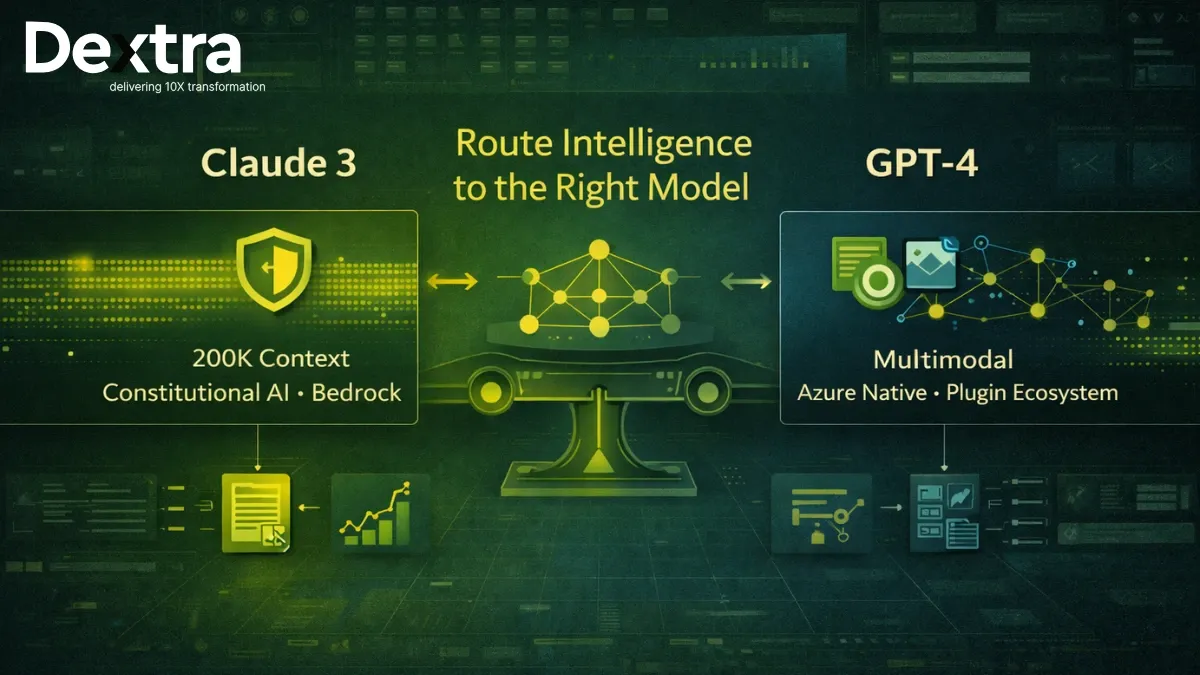

Understanding Model Limitations

Recognize that different models have varying strengths and weaknesses. Prompt engineering involves a deep understanding of the model’s mechanics and the nuances of language interpretation. Adapt your prompting strategy accordingly.

Common Prompt Engineering Mistakes

Avoiding these four critical mistakes will save you hours of frustration and dramatically improve your success rate. Learn from the most common pitfalls that trip up both beginners and experienced users.

Being Too Vague

Requests like “help me with my project” provide insufficient information for useful responses.

Overloading with Information

Including too much irrelevant context can confuse the model and dilute the focus of your request.

Ignoring Output Format

Failing to specify how you want the information presented often results in responses that require significant reformatting.

Not Considering the Audience

Prompts should account for who will ultimately use the AI’s output, ensuring appropriate language and complexity levels.

Prompt Engineering Tools

The right tools can multiply your prompt engineering efficiency by 10x. From testing environments to analytics platforms, these resources help professionals create, test, and optimize prompts at scale.

Prompt Libraries and Repositories

Platforms like PromptBase, Awesome Prompts, and LangChain Hub provide collections of tested prompts for various use cases.

Prompt Testing Environments

Prompt engineering tools like Weights & Biases Prompts, PromptLayer, and OpenAI Playground allow you to experiment with and compare different prompt strategies.

Analytics and Monitoring

Services that track prompt performance help identify which approaches work best for specific tasks and models.

Template Generators

Automated systems that help create structured prompts based on task requirements and desired outcomes.

For organizations looking to implement prompt engineering at scale, working with specialized providers like Dextralabs can provide access to enterprise-grade tools and methodologies that aren’t available to individual practitioners. We offer comprehensive AI consulting services that include enterprise LLM deployment, custom model implementation, and specialized prompt optimization strategies.

Prompt Engineering Career Opportunities

The prompt engineering job market is exploding with 300% growth in job postings since 2023. Companies are offering $70K-$150K+ salaries for skilled prompt engineers, making it one of the hottest careers in tech.

Job Market Overview

The demand for prompt engineering skills continues to grow across industries. Companies need specialists who can optimize AI interactions for specific business use cases.

Salary Expectations

Prompt engineering salaries range from $70,000 to $150,000+. These roles typically offer competitive compensation depending on experience, location, and company size.

Required Skills for Prompt Engineering Success

Based on industry analysis and expert recommendations, effective prompt engineers need both technical and creative capabilities:

Technical Skills

- Deep understanding of NLP: Familiarity with natural language processing is crucial for effective prompt engineering, including knowledge of syntax, semantics, and language structures

- LLM architecture knowledge: A solid grasp of LLM architectures, such as GPT and other transformer models, is essential for understanding how these models process and generate language

- Strong data analysis capabilities: Data-driven decision-making is fundamental, including analyzing model outputs, training data, and performance metrics to support prompt refinement

Communication and Creative Skills

- Effective communication: The ability to translate complex concepts into simple and clear prompts is vital for ensuring the model interprets prompts accurately

- Proficiency in nuanced input creation: Crafting prompts that account for nuanced language and diverse contexts enhances the AI’s ability to generate meaningful and contextually relevant responses

- Critical thinking: Anticipating how the AI might interpret different prompts requires critical thinking to identify potential biases and challenges in prompt engineering

Career Paths

- Prompt Engineer

- AI Product Manager

- Conversational AI Designer

- LLM Prompt Engineering Specialist

- AI Training Specialist

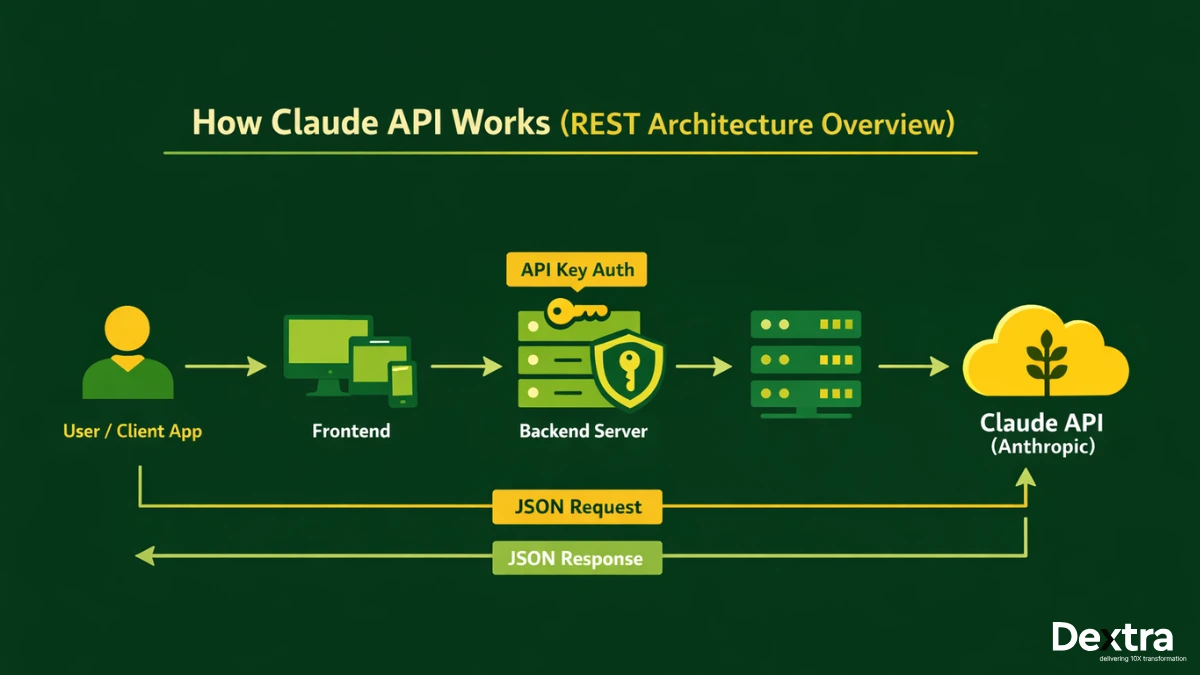

Platform Specific Prompt Engineering Tips

Different AI platforms have their own quirks and optimal approaches.

- ChatGPT prompt engineering works best with conversational, iterative prompting.

- Claude excels at longer, more detailed instructions.

LLM prompt engineering principles apply across platforms, but you’ll want to adapt your approach based on each model’s strengths.

Integrating Prompt Engineering with Development Workflows

Smart organizations are embedding AI prompt engineering into their CI/CD pipelines, reducing deployment issues by 50% and accelerating feature delivery. Here’s how to systematically integrate prompt engineering into your development processes.

CI/CD Integration for LLM Applications

Integrating prompt engineering into the continuous integration/continuous delivery (CI/CD) process is pivotal for advancing the development and maintenance of LLM applications. This systematic approach can significantly improve the efficiency, adaptability, and robustness of AI systems.

Key components of CI/CD integration include:

Regular Updates: Prompts are continually refined and updated, ensuring they remain relevant and effective as models and requirements evolve.

Automated Testing: Automated tests evaluate the effectiveness of various prompts, ensuring only high-quality prompts are deployed to production systems.

Version Control: Changes in prompts are tracked systematically, supporting easy reverting and increasing understanding of their impact on model performance.

Rapid Deployment: Updated prompts can be quickly deployed, allowing for immediate improvements in LLM performance without lengthy deployment cycles.

Feedback Integration: User feedback is continuously monitored and incorporated into the development cycle, facilitating real-time refinement of prompts based on actual usage patterns.
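To give a feel for the automated testing piece, here is a minimal Python sketch of a prompt regression check that could run in a CI job. The `check_prompt` helper, the `fake_llm` stand-in, and the refund-policy text are all assumptions made up for this example. A real pipeline would call your actual model.

```python
def check_prompt(prompt, llm, must_include, max_words):
    """Run one prompt through `llm` and return a list of failed checks."""
    output = llm(prompt)
    failures = []
    for phrase in must_include:
        if phrase.lower() not in output.lower():
            failures.append(f"missing phrase: {phrase!r}")
    if len(output.split()) > max_words:
        failures.append(f"output longer than {max_words} words")
    return failures


def fake_llm(prompt):
    # Stand-in for a real model call so the check runs without network access.
    return "Refunds are processed within 5 business days."


failures = check_prompt(
    "Explain our refund policy in one sentence.",
    fake_llm,
    must_include=["refund", "business days"],
    max_words=20,
)
print(failures)  # []
```

An empty list means the prompt passed. A CI step can fail the build whenever the list is non-empty, which keeps a weaker prompt version from reaching production.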

Building Scalable Prompt Engineering Systems

Embedding prompt engineering into your development pipeline enables systematic iteration and improvement of model prompts, shortening the time required to respond to performance issues and implement new features in AI applications.

Many companies find success working with AI consulting partners who specialize in integrating these practices into existing workflows. Dextralabs, for instance, helps organizations implement comprehensive AI integration services that ensure prompt engineering becomes a sustainable organizational capability rather than an ad-hoc skill.

Learning Path

- Understand LLM Basics: Learn how large language models work and their capabilities

- Practice Core Techniques: Start with simple prompting methods and gradually advance

- Study Examples: Analyze successful prompts in your area of interest

- Experiment Regularly: Test different approaches and document your findings

- Join Communities: Participate in prompt engineering forums and discussions

Certification Options

Several organizations offer prompt engineering certifications that can validate your skills and improve job prospects. These programs typically cover theoretical foundations, practical techniques, and hands-on experience with various AI models.

Building a Portfolio

Create a collection of successful prompts and their results. Document your problem-solving process and demonstrate the business value of your prompt engineering work.

The Future of Prompt Engineering

As AI models become more sophisticated, prompt engineering continues to evolve. The prompt engineering tools landscape is also maturing quickly, with better analytics, testing environments, and integration options appearing regularly. However, the fundamental skills of clear communication, logical thinking, and creative problem-solving remain essential.

For organizations ready to scale their AI initiatives, consider partnering with experienced providers like Dextralabs. We offer end-to-end AI consulting services, from business productivity consulting to custom model implementation. This approach helps align your prompt engineering efforts with broader business objectives and technical requirements.

The AI revolution is just getting started, and prompt engineering skills will only become more valuable as AI becomes more integrated into every aspect of business and daily life.

FAQs on prompt engineering for LLMs:

Q. Which of the following is NOT a strategy used in prompt engineering?

While prompt engineering includes strategies like role assignment, instruction clarity, few-shot prompting, and output formatting, techniques like fine-tuning the model weights fall outside the scope of prompt engineering. That’s actually model tuning, not prompt engineering.

At Dextralabs, we help teams distinguish between what can be solved through prompt strategy vs when a custom model solution is necessary—so you don’t over-engineer when it’s not required.

Q. What is an example of iteration in prompt engineering?

Iteration in prompt engineering means refining your prompt through multiple rounds of testing. For example, starting with:

“Summarize this article.”

And refining it to:

“Summarize this article in 3 bullet points for a busy executive who needs only the key takeaways.”

At Dextralabs, we iterate using real user feedback and behavior to fine-tune prompts at scale—especially when building AI agents that must reliably perform across variable inputs.

Q. Which statement is true about prompt engineering for an ambiguous situation?

In ambiguous situations, prompt specificity becomes your best tool. The more unclear the user input, the more important it is to guide the model with context, constraints, and examples.

Our LLM consultants at Dextralabs often deploy dynamic prompt templates that adapt based on context, reducing ambiguity at runtime for chatbots, agent flows, and multi-turn interactions.

Q. In prompt engineering, what are format, length, and audience examples of?

These are part of the response constraints or output specifications. They ensure that the model’s response is usable for its target audience—be it a technical report, marketing copy, or a JSON payload.

Dextralabs specializes in prompt frameworks that dynamically define output format, tone, and structure—tailored to the needs of developers, analysts, or end-users.

Q. What is an example of using roles in prompt engineering?

Using roles means assigning the LLM a specific persona or viewpoint. For example:

“You are a cybersecurity expert. Explain the importance of multi-factor authentication to a non-technical CEO.”

This helps align the model’s response style, vocabulary, and detail level to the intended user. At Dextralabs, we use role-based prompting extensively for agentic AI use cases like LLM-powered customer support, technical troubleshooting, and domain-specific advisors.

Q. What is the best way to think of prompt engineering?

Prompt engineering is not just “asking nicely.” It’s designing instructions the way a developer designs an API call. You’re engineering the input to get a precise, consistent, or creatively useful output from a non-deterministic model.

Dextralabs treats prompt engineering as a core layer of system design—combining linguistics, context control, and output structuring to align generative AI with real-world business goals.

Q. In prompt engineering, why is it important to specify the desired format or structure of the response?

Specifying format helps avoid ambiguous outputs and makes the response machine-readable. For example, asking:

“Return the result as a JSON object with keys: name, role, and contact_info.”

…ensures your backend system or agent can parse and use the output directly.
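On the receiving side, it is still worth validating the output before trusting it, since a model can return malformed JSON or drop a key. Below is a minimal Python sketch using the standard `json` module. The `parse_contact` helper and the sample output are assumptions for illustration.

```python
import json

REQUIRED_KEYS = {"name", "role", "contact_info"}


def parse_contact(raw_output):
    """Parse model output as JSON and verify the expected keys are present."""
    data = json.loads(raw_output)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Example model output (invented for illustration).
raw = '{"name": "Ada Park", "role": "Engineer", "contact_info": "ada@example.com"}'
contact = parse_contact(raw)
print(contact["name"])  # Ada Park
```

When validation fails, a common follow-up is to send the error message back to the model and ask it to correct its output.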

At Dextralabs, we build structured prompting protocols into our LLM-integrated systems to support automation, API chaining, and dynamic document generation.

Q. What is the purpose of prompt engineering in generative AI systems?

The core purpose is to control, shape, and optimize the model’s output to suit a task or audience without changing the model weights. It enables adaptability without retraining.

Whether it’s crafting domain-specific assistants or scaling content operations, Dextralabs uses prompt engineering to make LLMs work like purpose-built applications—cost-efficient, fast, and reliable.

Q. What is prompt engineering in LLM?

Prompt engineering is the art of designing effective inputs (prompts) to guide a Large Language Model (LLM) to produce accurate, context-specific, or structured outputs—without modifying the model itself.

Q. What is a prompt engineer?

A prompt engineer crafts, tests, and optimizes prompts to get desired results from LLMs. They combine domain knowledge, NLP techniques, and experimentation to build smarter, more reliable AI interactions.

Q. How to become a prompt engineer?

Start by:

– Learning how LLMs work (e.g., GPT, Claude, Mistral)

– Practicing prompt design (zero-shot, few-shot, role-based)

– Understanding use-case logic (chatbots, agents, APIs)

– Using tools like LangChain, OpenAI Playground, and Dextralabs’ LLM frameworks

Q. How to do prompt engineering?

– Define your goal and audience

– Be explicit in instructions (format, tone, constraints)

– Use examples (few-shot) for better output consistency

– Test, refine, and iterate based on real responses

Q. What are the latest trends in prompt engineering?

– Auto-prompting tools

– Prompt compression for efficiency

– Multi-agent prompt chaining

– Retrieval-Augmented Generation (RAG)

– Prompt versioning & observability at scale

Dextralabs helps teams stay ahead with these trends via prompt optimization and LLM deployment solutions.